Adam Kepecs

Digital Modeling of Spatial Pathway Activity from Histology Reveals Tumor Microenvironment Heterogeneity

Dec 18, 2025

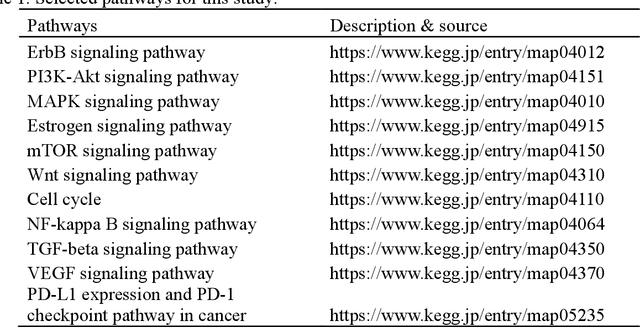

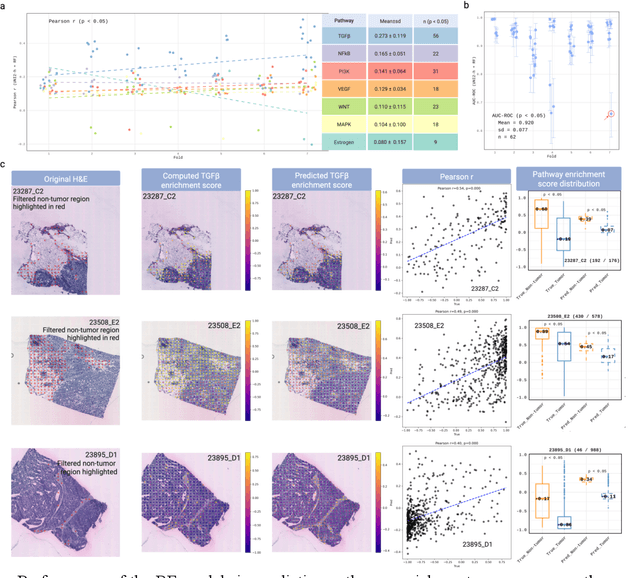

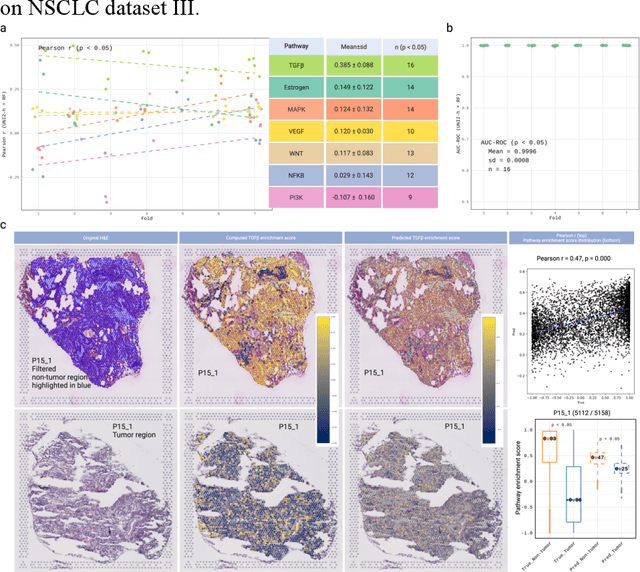

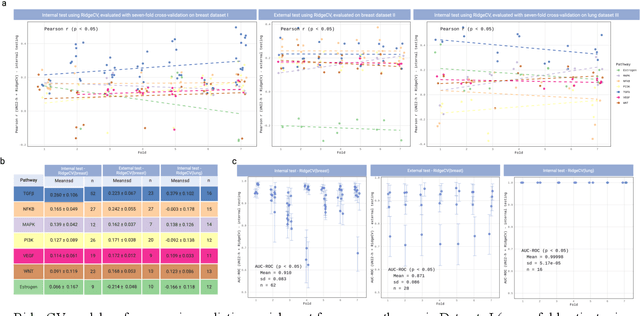

Abstract: Spatial transcriptomics (ST) enables simultaneous mapping of tissue morphology and spatially resolved gene expression, offering unique opportunities to study tumor microenvironment heterogeneity. Here, we introduce a computational framework that predicts spatial pathway activity directly from hematoxylin-and-eosin-stained histology images at microscale resolutions of 55 and 100 µm. Using image features derived from a computational pathology foundation model, we found that TGFb signaling was the most accurately predicted pathway across three independent breast and lung cancer ST datasets. In 87-88% of reliably predicted cases, the resulting spatial TGFb activity maps reflected the expected contrast between tumor and adjacent non-tumor regions, consistent with the known role of TGFb in regulating interactions within the tumor microenvironment. Notably, linear and nonlinear predictive models performed similarly, suggesting that image features may relate to pathway activity in a predominantly linear fashion or that nonlinear structure is small relative to measurement noise. These findings demonstrate that features extracted from routine histopathology may recover spatially coherent and biologically interpretable pathway patterns, offering a scalable strategy for integrating image-based inference with ST information in tumor microenvironment studies.
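To make the linear case concrete, here is a minimal sketch of regressing a per-spot pathway activity score on image features and scoring the fit on held-out spots. It is not the authors' pipeline: the data are synthetic, and the names `fit_ridge`, `pearson_r`, `features`, and `activity`, the ridge penalty, and the 400/100 split are all assumptions made for illustration.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; returns (weights, intercept)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc for the weights.
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w

def pearson_r(a, b):
    """Pearson correlation between two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])

# Synthetic stand-ins (assumption): one feature vector per ST spot and a
# pathway activity score that depends linearly on the features, plus noise.
rng = np.random.default_rng(0)
n_spots, n_features = 500, 32
features = rng.normal(size=(n_spots, n_features))
true_w = rng.normal(size=n_features)
activity = features @ true_w + rng.normal(scale=2.0, size=n_spots)

# Fit on the first 400 spots, evaluate on the remaining 100.
train, test = slice(0, 400), slice(400, None)
w, b = fit_ridge(features[train], activity[train], alpha=10.0)
predicted = features[test] @ w + b
r = pearson_r(predicted, activity[test])
print(f"held-out Pearson r = {r:.2f}")
```

Fitting a nonlinear model on the same split and comparing held-out correlations would be one way to probe whether nonlinear structure adds anything beyond the linear fit.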

Prospective Learning: Back to the Future

Jan 19, 2022

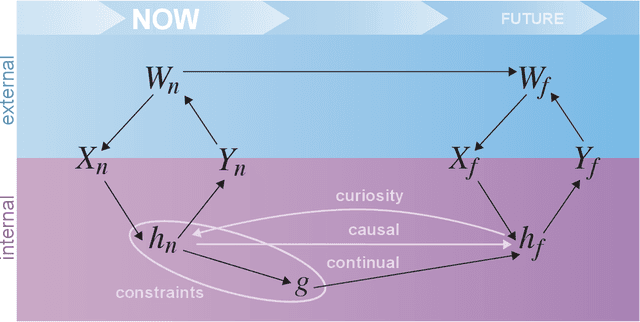

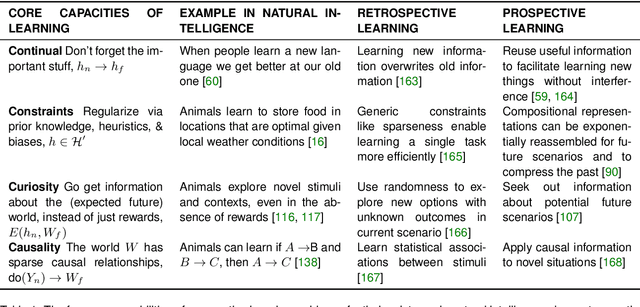

Abstract: Research on both natural intelligence (NI) and artificial intelligence (AI) generally assumes that the future resembles the past: intelligent agents or systems (what we call 'intelligence') observe and act on the world, then use this experience to act on future experiences of the same kind. We call this 'retrospective learning'. For example, an intelligence may see a set of pictures of objects, along with their names, and learn to name them. A retrospective learning intelligence would merely be able to name more pictures of the same objects. We argue that this is not what true intelligence is about. In many real-world problems, both NIs and AIs will have to learn for an uncertain future. Both must update their internal models to be useful for future tasks, such as naming fundamentally new objects and using these objects effectively in a new context or to achieve previously unencountered goals. This ability to learn for the future we call 'prospective learning'. We articulate four relevant factors that jointly define prospective learning. Continual learning enables an intelligence to remember those aspects of the past that it believes will be most useful in the future. Prospective constraints (including biases and priors) help the intelligence find general solutions that will be applicable to future problems. Curiosity motivates taking actions that inform future decision making, including in previously unmet situations. Causal estimation enables learning the structure of relations that guide choosing actions for specific outcomes, even when the specific action-outcome contingencies have never been observed before. We argue that a paradigm shift from retrospective to prospective learning will enable the communities that study intelligence to unite and overcome existing bottlenecks to more effectively explain, augment, and engineer intelligences.

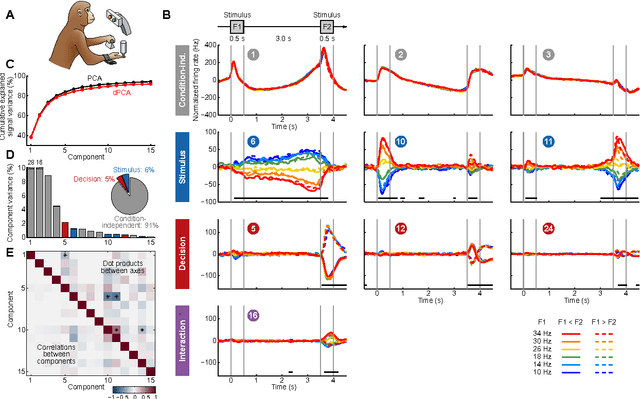

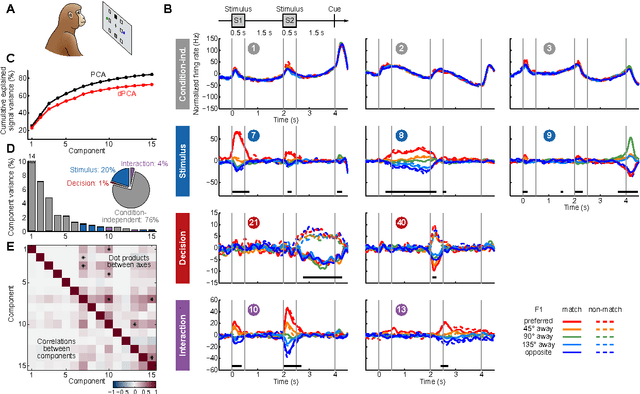

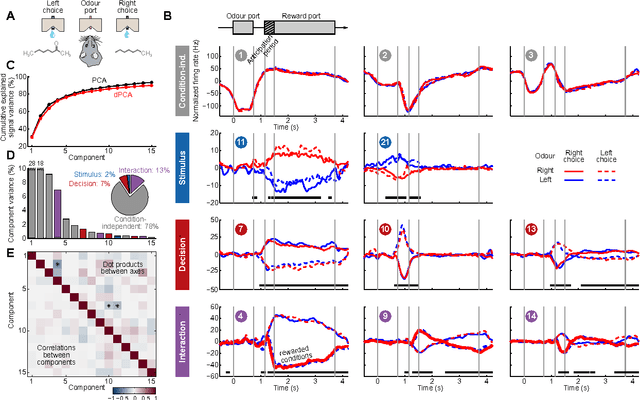

Demixed principal component analysis of population activity in higher cortical areas reveals independent representation of task parameters

Oct 22, 2014

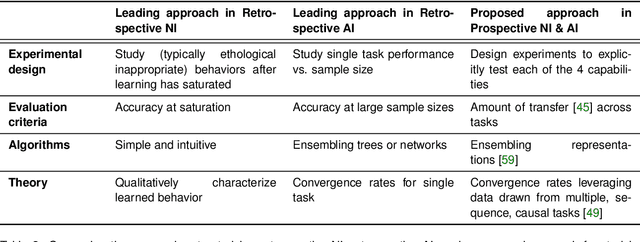

Abstract: Neurons in higher cortical areas, such as the prefrontal cortex, are known to be tuned to a variety of sensory and motor variables. The resulting diversity of neural tuning often obscures the represented information. Here we introduce a novel dimensionality reduction technique, demixed principal component analysis (dPCA), which automatically discovers and highlights the essential features in complex population activities. We reanalyze population data from the prefrontal areas of rats and monkeys performing a variety of working memory and decision-making tasks. In each case, dPCA summarizes the relevant features of the population response in a single figure. The population activity is decomposed into a few demixed components that capture most of the variance in the data and that highlight dynamic tuning of the population to various task parameters, such as stimuli, decisions, rewards, etc. Moreover, dPCA reveals strong, condition-independent components of the population activity that remain unnoticed with conventional approaches.
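As a rough illustration of what "demixing" by task parameter involves, the sketch below splits trial-averaged activity into additive parts that depend only on time (condition-independent), only on stimulus, or on their interaction. This covers just an averaging-based marginalization step on synthetic data, under the assumption of a single task parameter (stimulus) plus time; it is not the full dPCA method, and the names `marginalize`, `X`, and `fractions` are hypothetical.

```python
import numpy as np

def marginalize(X):
    """Split X[neuron, stimulus, time] into additive marginalizations."""
    Xc = X - X.mean(axis=(1, 2), keepdims=True)  # remove each neuron's mean rate
    X_time = Xc.mean(axis=1, keepdims=True)      # condition-independent (time only)
    X_stim = Xc.mean(axis=2, keepdims=True)      # stimulus only
    X_inter = Xc - X_time - X_stim               # stimulus-time interaction
    return Xc, X_time, X_stim, X_inter

# Synthetic trial-averaged activity (assumption): 20 neurons, 4 stimuli, 50 time bins.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4, 50))
Xc, X_time, X_stim, X_inter = marginalize(X)

# Fraction of total variance carried by each marginalization.
total = np.sum(Xc ** 2)
fractions = {
    name: float(np.sum(np.broadcast_to(part, Xc.shape) ** 2) / total)
    for name, part in [("time", X_time), ("stimulus", X_stim), ("interaction", X_inter)]
}
print(fractions)
```

Because the three parts are mutually orthogonal, their variance fractions sum to one, which is what allows the variance of a population response to be attributed to individual task parameters.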
