Adam Dai

Multi-Robot Collaborative Localization and Planning with Inter-Ranging

Jun 24, 2024

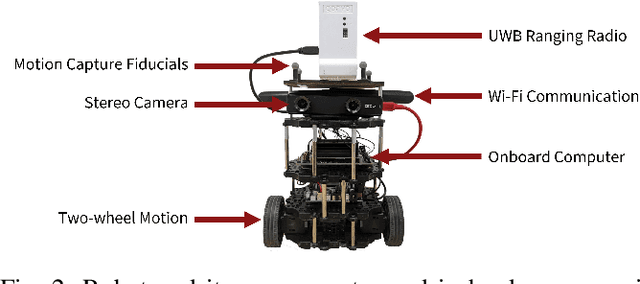

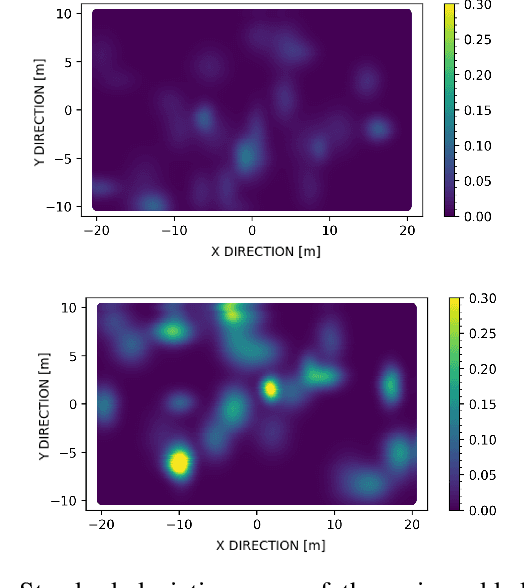

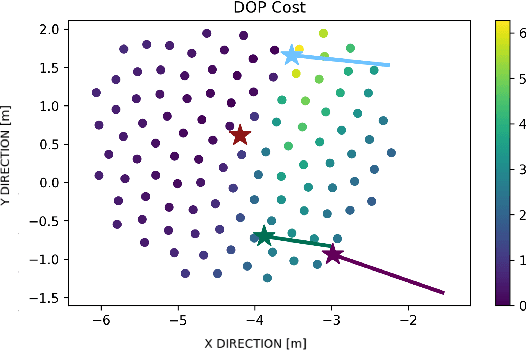

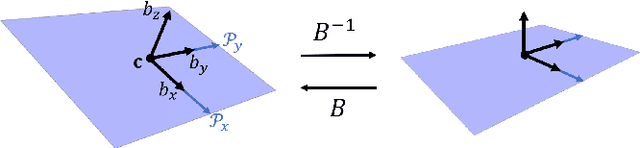

Abstract:Robots often use feature-based image tracking to identify their position in their surrounding environment; however, feature-based image tracking is prone to errors in low-textured and poorly lit environments. Specifically, we investigate a scenario where robots are tasked with exploring the surface of the Moon and are required to have an accurate estimate of their position to be able to correctly geotag scientific measurements. To reduce localization error, we complement traditional feature-based image tracking with ultra-wideband (UWB) distance measurements between the robots. The robots use an advanced mesh-ranging protocol that allows them to continuously share distance measurements amongst each other rather than relying on the common "anchor" and "tag" UWB architecture. We develop a decentralized multi-robot coordination algorithm that actively plans paths based on measurement line-of-sight vectors amongst all robots to minimize collective localization error. We then demonstrate the emergent behavior of the proposed multi-robot coordination algorithm both in simulation and hardware to lower a geometry-based uncertainty metric and reduce localization error.

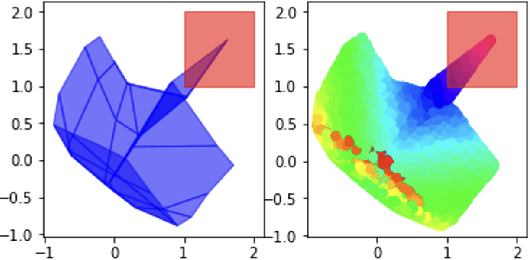

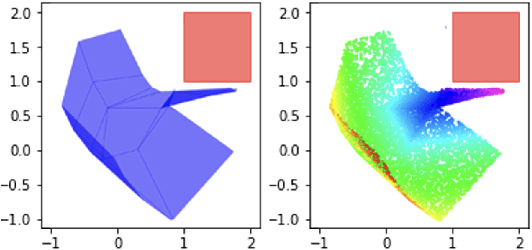

Neural Elevation Models for Terrain Mapping and Path Planning

May 24, 2024Abstract:This work introduces Neural Elevations Models (NEMos), which adapt Neural Radiance Fields to a 2.5D continuous and differentiable terrain model. In contrast to traditional terrain representations such as digital elevation models, NEMos can be readily generated from imagery, a low-cost data source, and provide a lightweight representation of terrain through an implicit continuous and differentiable height field. We propose a novel method for jointly training a height field and radiance field within a NeRF framework, leveraging quantile regression. Additionally, we introduce a path planning algorithm that performs gradient-based optimization of a continuous cost function for minimizing distance, slope changes, and control effort, enabled by differentiability of the height field. We perform experiments on simulated and real-world terrain imagery, demonstrating NEMos ability to generate high-quality reconstructions and produce smoother paths compared to discrete path planning methods. Future work will explore the incorporation of features and semantics into the height field, creating a generalized terrain model.

Spoofing-Resilient LiDAR-GPS Factor Graph Localization with Chimera Authentication

Jul 10, 2023

Abstract:Many vehicle platforms typically use sensors such as LiDAR or camera for locally-referenced navigation with GPS for globally-referenced navigation. However, due to the unencrypted nature of GPS signals, all civilian users are vulner-able to spoofing attacks, where a malicious spoofer broadcasts fabricated signals and causes the user to track a false position fix. To protect against such GPS spoofing attacks, Chips-Message Robust Authentication (Chimera) has been developed and will be tested on the Navigation Technology Satellite 3 (NTS-3) satellite being launched later this year. However, Chimera authentication is not continuously available and may not provide sufficient protection for vehicles which rely on more frequent GPS measurements. In this paper, we propose a factor graph-based state estimation framework which integrates LiDAR and GPS while simultaneously detecting and mitigating spoofing attacks experienced between consecutive Chimera authentications. Our proposed framework combines GPS pseudorange measurements with LiDAR odometry to provide a robust navigation solution. A chi-squared detector, based on pseudorange residuals, is used to detect and mitigate any potential GPS spoofing attacks. We evaluate our method using real-world LiDAR data from the KITTI dataset and simulated GPS measurements, both nominal and with spoofing. Across multiple trajectories and Monte Carlo runs, our method consistently achieves position errors under 5 m during nominal conditions, and successfully bounds positioning error to within odometry drift levels during spoofed conditions.

PlaneSLAM: Plane-based LiDAR SLAM for Motion Planning in Structured 3D Environments

Sep 29, 2022

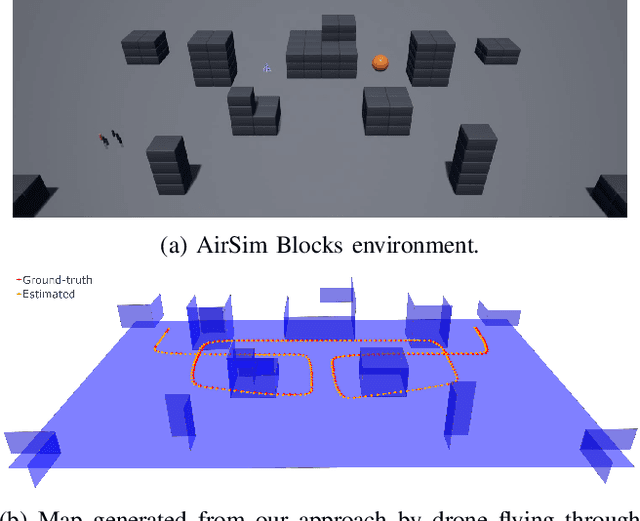

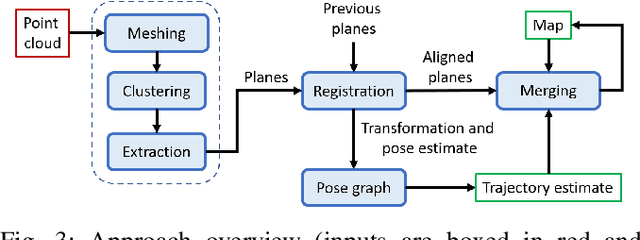

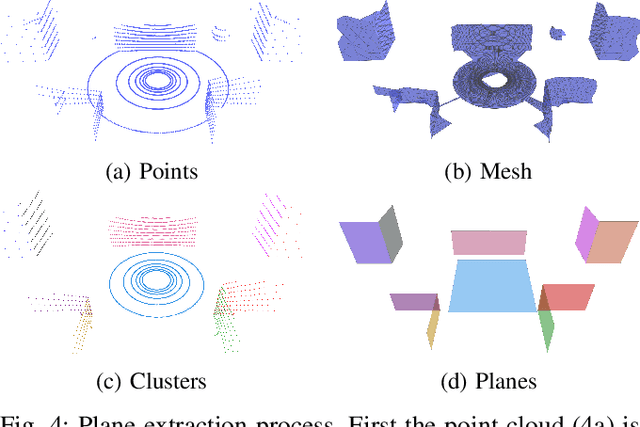

Abstract:LiDAR sensors are a powerful tool for robot simultaneous localization and mapping (SLAM) in unknown environments, but the raw point clouds they produce are dense, computationally expensive to store, and unsuited for direct use by downstream autonomy tasks, such as motion planning. For integration with motion planning, it is desirable for SLAM pipelines to generate lightweight geometric map representations. Such representations are also particularly well-suited for man-made environments, which can often be viewed as a so-called "Manhattan world" built on a Cartesian grid. In this work we present a 3D LiDAR SLAM algorithm for Manhattan world environments which extracts planar features from point clouds to achieve lightweight, real-time localization and mapping. Our approach generates plane-based maps which occupy significantly less memory than their point cloud equivalents, and are suited towards fast collision checking for motion planning. By leveraging the Manhattan world assumption, we target extraction of orthogonal planes to generate maps which are more structured and organized than those of existing plane-based LiDAR SLAM approaches. We demonstrate our approach in the high-fidelity AirSim simulator and in real-world experiments with a ground rover equipped with a Velodyne LiDAR. For both cases, we are able to generate high quality maps and trajectory estimates at a rate matching the sensor rate of 10 Hz.

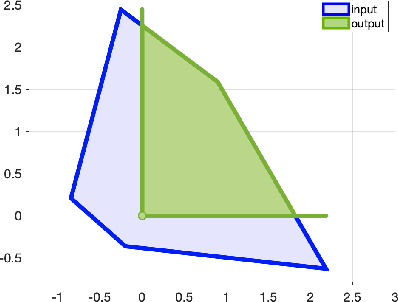

Constrained Feedforward Neural Network Training via Reachability Analysis

Jul 16, 2021

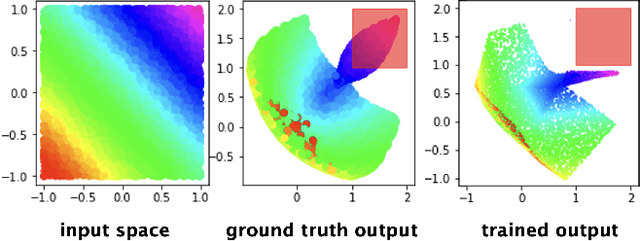

Abstract:Neural networks have recently become popular for a wide variety of uses, but have seen limited application in safety-critical domains such as robotics near and around humans. This is because it remains an open challenge to train a neural network to obey safety constraints. Most existing safety-related methods only seek to verify that already-trained networks obey constraints, requiring alternating training and verification. Instead, this work proposes a constrained method to simultaneously train and verify a feedforward neural network with rectified linear unit (ReLU) nonlinearities. Constraints are enforced by computing the network's output-space reachable set and ensuring that it does not intersect with unsafe sets; training is achieved by formulating a novel collision-check loss function between the reachable set and unsafe portions of the output space. The reachable and unsafe sets are represented by constrained zonotopes, a convex polytope representation that enables differentiable collision checking. The proposed method is demonstrated successfully on a network with one nonlinearity layer and approximately 50 parameters.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge