Long Kiu Chung

A Closed-Form Geometric Retargeting Solver for Upper Body Humanoid Robot Teleoperation

Feb 02, 2026

Abstract:Retargeting human motion to robot poses is a practical approach for teleoperating bimanual humanoid robot arms, but existing methods can be suboptimal and slow, often causing undesirable motion or latency. This is due to optimizing to match robot end-effector to human hand position and orientation, which can also limit the robot's workspace to that of the human. Instead, this paper reframes retargeting as an orientation alignment problem, enabling a closed-form, geometric solution algorithm with an optimality guarantee. The key idea is to align a robot arm to a human's upper and lower arm orientations, as identified from shoulder, elbow, and wrist (SEW) keypoints; hence, the method is called SEW-Mimic. The method has fast inference (3 kHz) on standard commercial CPUs, leaving computational overhead for downstream applications; an example in this paper is a safety filter to avoid bimanual self-collision. The method suits most 7-degree-of-freedom robot arms and humanoids, and is agnostic to input keypoint source. Experiments show that SEW-Mimic outperforms other retargeting methods in computation time and accuracy. A pilot user study suggests that the method improves teleoperation task success. Preliminary analysis indicates that data collected with SEW-Mimic improves policy learning due to being smoother. SEW-Mimic is also shown to be a drop-in way to accelerate full-body humanoid retargeting. Finally, hardware demonstrations illustrate SEW-Mimic's practicality. The results emphasize the utility of SEW-Mimic as a fundamental building block for bimanual robot manipulation and humanoid robot teleoperation.

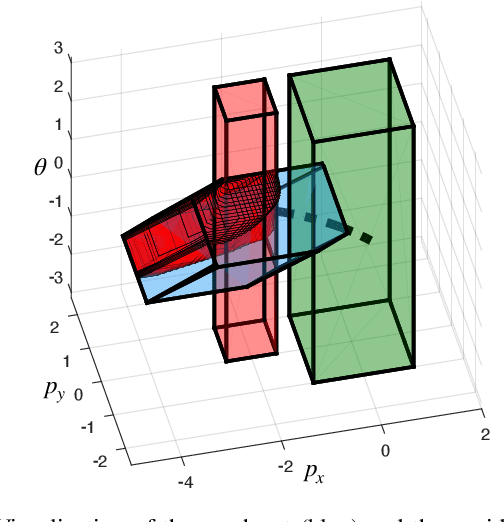

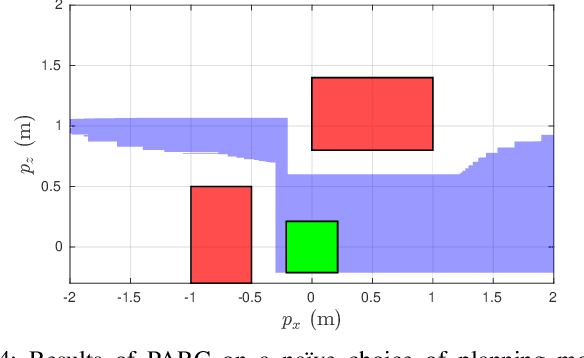

Goal-Reaching Trajectory Design Near Danger with Piecewise Affine Reach-avoid Computation

Feb 23, 2024

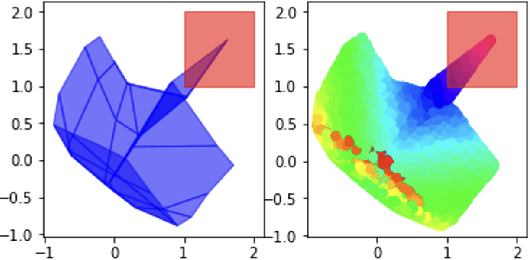

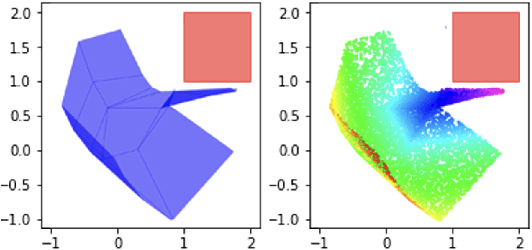

Abstract:Autonomous mobile robots must maintain safety, but should not sacrifice performance, leading to the classical reach-avoid problem. This paper seeks to compute trajectory plans for which a robot is guaranteed to reach a goal and avoid obstacles in the specific near-danger case that the obstacles and goal are near each other. The proposed method builds off of a common approach of using a simplified planning model to generate plans, which are then tracked using a high-fidelity tracking model and controller. Existing safe planning approaches use reachability analysis to overapproximate the error between these models, but this introduces additional numerical approximation error and thereby conservativeness that prevents goal-reaching. The present work instead proposes a Piecewise Affine Reach-avoid Computation (PARC) method to tightly approximate the reachable set of the planning model. With PARC, the main source of conservativeness is the model mismatch, which can be mitigated by careful controller and planning model design. The utility of this method is demonstrated through extensive numerical experiments in which PARC outperforms state-of-the-art reach-avoid methods in near-danger goal-reaching. Furthermore, in a simulated demonstration, PARC enables the generation of provably-safe extreme vehicle dynamics drift parking maneuvers.

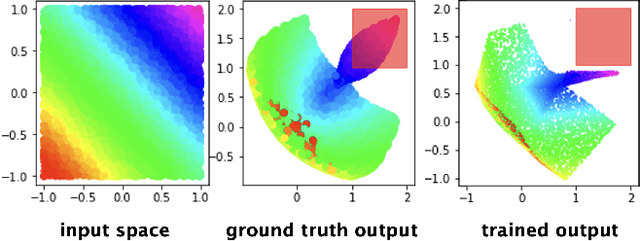

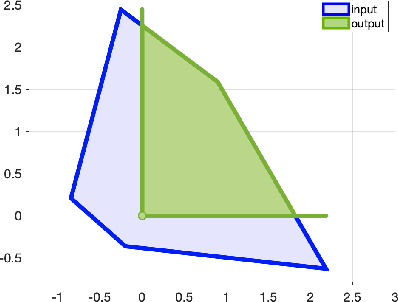

Constrained Feedforward Neural Network Training via Reachability Analysis

Jul 16, 2021

Abstract:Neural networks have recently become popular for a wide variety of uses, but have seen limited application in safety-critical domains such as robotics near and around humans. This is because it remains an open challenge to train a neural network to obey safety constraints. Most existing safety-related methods only seek to verify that already-trained networks obey constraints, requiring alternating training and verification. Instead, this work proposes a constrained method to simultaneously train and verify a feedforward neural network with rectified linear unit (ReLU) nonlinearities. Constraints are enforced by computing the network's output-space reachable set and ensuring that it does not intersect with unsafe sets; training is achieved by formulating a novel collision-check loss function between the reachable set and unsafe portions of the output space. The reachable and unsafe sets are represented by constrained zonotopes, a convex polytope representation that enables differentiable collision checking. The proposed method is demonstrated successfully on a network with one nonlinearity layer and approximately 50 parameters.
