Multi-Robot Collaborative Localization and Planning with Inter-Ranging

Paper and Code

Jun 24, 2024

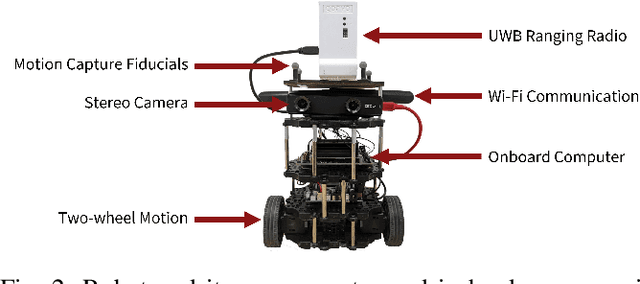

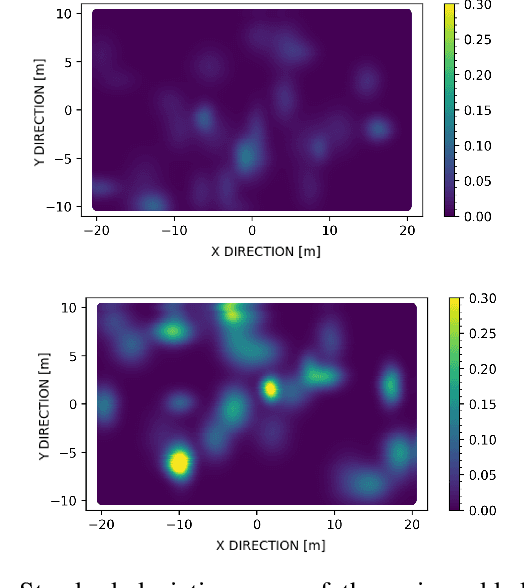

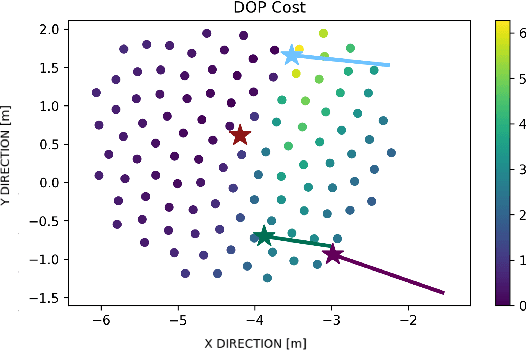

Robots often use feature-based image tracking to estimate their position within their surroundings; however, this approach is prone to errors in low-texture and poorly lit environments. We investigate a scenario in which robots explore the surface of the Moon and need accurate position estimates to correctly geotag scientific measurements. To reduce localization error, we complement traditional feature-based image tracking with ultra-wideband (UWB) distance measurements between the robots. The robots use an advanced mesh-ranging protocol that lets them continuously share distance measurements with one another rather than relying on the common "anchor" and "tag" UWB architecture. We develop a decentralized multi-robot coordination algorithm that actively plans paths based on the measurement line-of-sight vectors among all robots to minimize collective localization error. We then demonstrate, both in simulation and on hardware, that the emergent behavior of the proposed coordination algorithm lowers a geometry-based uncertainty metric and reduces localization error.
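The abstract does not name the geometry-based uncertainty metric, so the sketch below assumes a common choice for range-based localization: a dilution-of-precision (DOP) value computed from the unit line-of-sight vectors between one robot and its ranging neighbors. The function names `unit_los` and `range_dop` and the 2D setting are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import math

def unit_los(robot, neighbor):
    """Unit line-of-sight vector from robot to neighbor (2D positions)."""
    dx, dy = neighbor[0] - robot[0], neighbor[1] - robot[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        raise ValueError("robot and neighbor positions coincide")
    return dx / dist, dy / dist

def range_dop(robot, neighbors):
    """Assumed metric: sqrt(trace((H^T H)^-1)), where each row of H is a
    unit line-of-sight vector. Lower values mean better ranging geometry."""
    a = b = c = 0.0  # entries of the symmetric 2x2 matrix H^T H = [[a, b], [b, c]]
    for n in neighbors:
        ux, uy = unit_los(robot, n)
        a += ux * ux
        b += ux * uy
        c += uy * uy
    det = a * c - b * b
    if det < 1e-12:
        # All neighbors lie along one line through the robot: position
        # is unobservable perpendicular to that line.
        return math.inf
    # The trace of the inverse of a 2x2 matrix is (a + c) / det.
    return math.sqrt((a + c) / det)

# Neighbors at right angles give good geometry; collinear neighbors do not.
good = range_dop((0.0, 0.0), [(1.0, 0.0), (0.0, 1.0)])
bad = range_dop((0.0, 0.0), [(1.0, 0.0), (2.0, 0.0)])
print(good, bad)
```

Under this assumption, a planner that moves robots so their line-of-sight vectors are well spread in direction lowers the metric, while configurations in which the robots line up drive it toward infinity.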
