Aboul Ella Hassanien

Scientific Research Group in Egypt

Fashion Industry in the Age of Generative Artificial Intelligence and Metaverse: A systematic Review

May 22, 2025

Abstract: The fashion industry is an extremely profitable market that generates trillions of dollars in revenue by producing and distributing apparel, footwear, and accessories. This systematic literature review (SLR) analyzes the research landscape on Generative Artificial Intelligence (GAI) and the metaverse in the fashion industry, and investigates the impact of integrating both technologies to enhance the industry. The review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology, with three essential phases: identification, evaluation, and reporting. In the identification phase, the target search problems are determined by selecting appropriate keywords and alternative synonyms, after which 578 documents published from 2014 to the end of 2023 are retrieved. The evaluation phase applies three screening steps to assess the papers and selects 118 eligible papers for full-text reading. Finally, the reporting phase thoroughly examines and synthesizes the 118 eligible papers to identify key themes associated with GAI and the metaverse in the fashion industry. Based on Strengths, Weaknesses, Opportunities, and Threats (SWOT) analyses performed for both GAI and the metaverse in the fashion industry, the review concludes that integrating GAI and the metaverse has the capacity to profoundly transform the fashion sector, presenting opportunities for improved manufacturing, design, sales, and client experiences. Accordingly, the research proposes a new framework that integrates GAI and the metaverse to enhance the fashion industry and presents different use cases for this integration. Future research directions for achieving a successful integration are also outlined.

Harmony-Search and Otsu based System for Coronavirus Disease Detection using Lung CT Scan Images

Apr 06, 2020

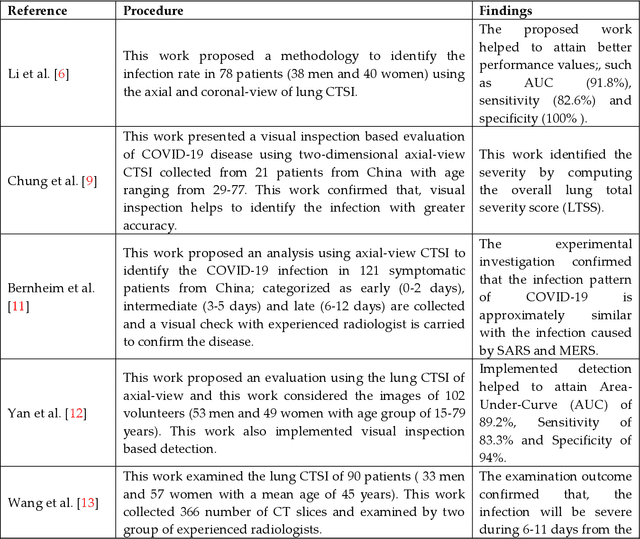

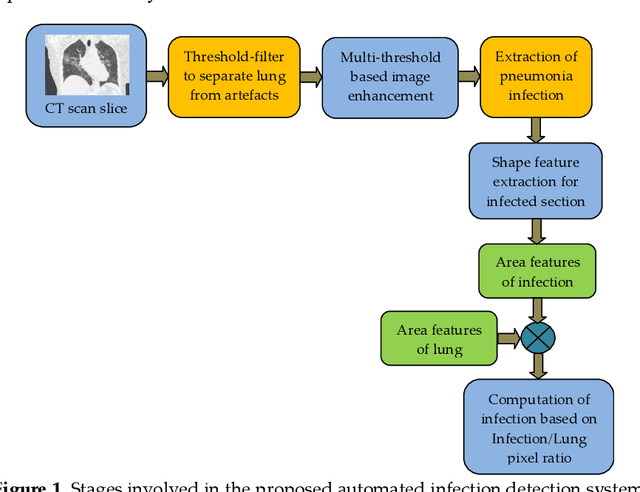

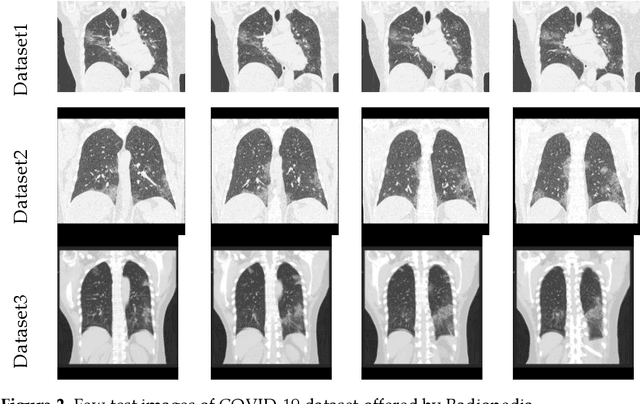

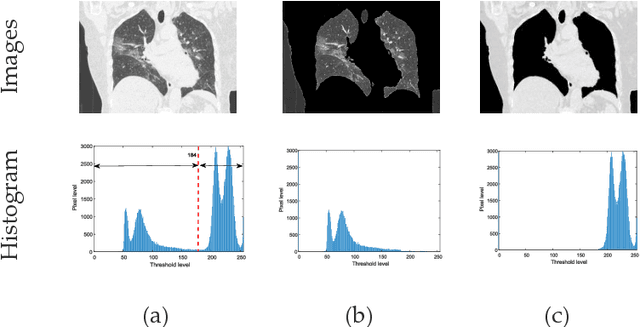

Abstract: Pneumonia is one of the foremost lung diseases, and untreated pneumonia poses a serious threat to all age groups. The proposed work aims to extract and evaluate Coronavirus disease (COVID-19) pneumonia infection in the lung using CT scans. We propose an image-assisted system to extract COVID-19 infected sections from lung CT scans (coronal view). It includes the following steps: (i) a threshold filter to extract the lung region by eliminating possible artifacts; (ii) image enhancement using Harmony-Search-Optimization and Otsu thresholding; (iii) image segmentation to extract the infected region(s); and (iv) region-of-interest (ROI) feature extraction from the binary image to compute the level of severity. The features extracted from the ROI are then used to compute the pixel ratio between the lung and infection sections, which indicates the severity of the infection. The primary objective of the tool is to assist the pulmonologist not only in detecting the infection but also in planning the treatment process. Consequently, in mass screening, it can help reduce the diagnostic burden.
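
As a rough illustration of the thresholding and severity steps described in this abstract, the sketch below implements plain two-class Otsu thresholding and a lung-to-infection pixel ratio. It is a minimal sketch under assumptions, not the authors' pipeline: the Harmony Search optimizer is replaced by an exhaustive scan over gray levels, images are flat lists of integer gray values, and the names `otsu_threshold` and `infection_ratio` are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form class 0; the rest form class 1.
    Exhaustive search stands in for the Harmony Search optimizer here.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in class 0
    sum0 = 0  # sum of gray values in class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # class 0 still empty
        if w1 == 0:
            break     # class 1 empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def infection_ratio(lung_mask, infection_mask):
    """Fraction of lung pixels that are also marked as infected (0.0 to 1.0)."""
    lung = sum(1 for l in lung_mask if l)
    infected = sum(1 for l, i in zip(lung_mask, infection_mask) if l and i)
    return infected / lung if lung else 0.0
```

A possible use is to threshold an enhanced slice with `otsu_threshold`, build binary masks from the result, and pass them to `infection_ratio`; how the ratio maps to a clinical severity level is not specified in the abstract and is left open here.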

COVID-19 forecasting based on an improved interior search algorithm and multi-layer feed forward neural network

Apr 06, 2020

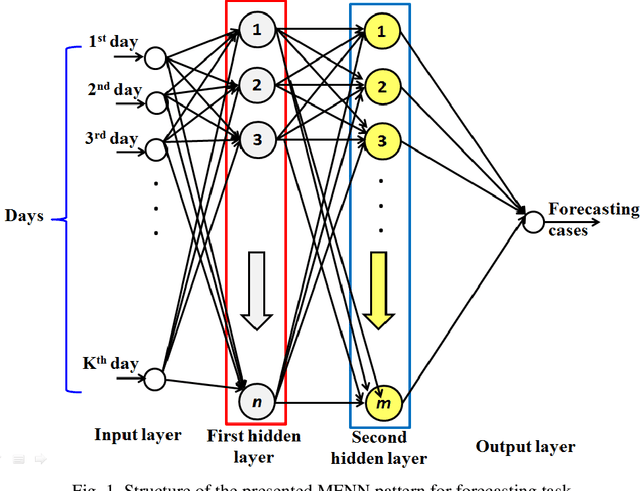

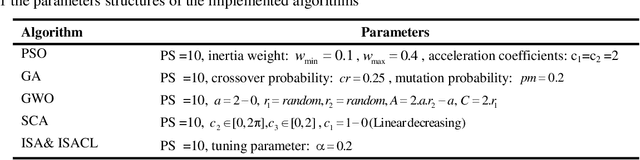

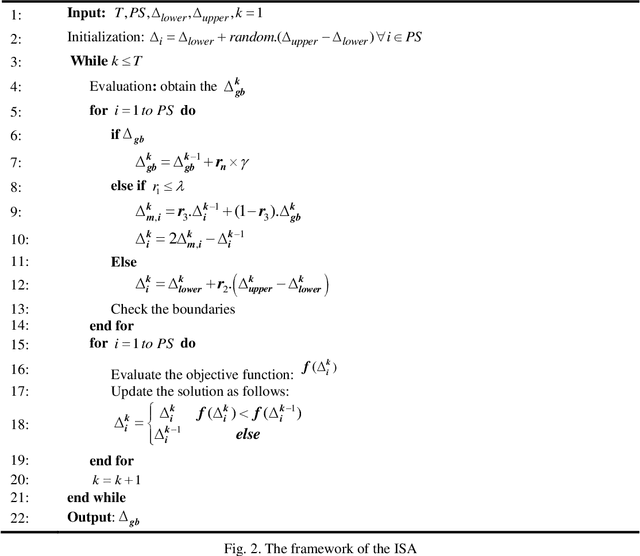

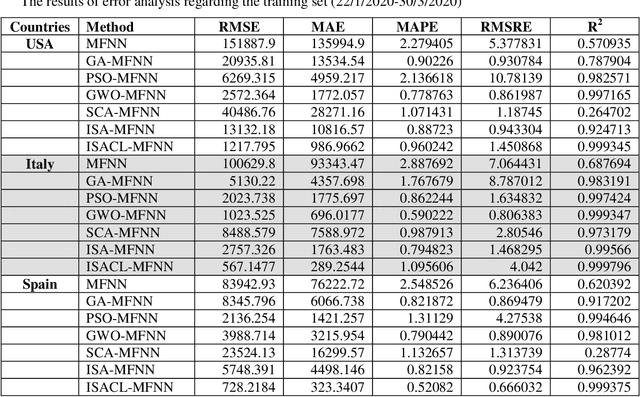

Abstract: COVID-19 is a novel coronavirus that emerged in December 2019 in Wuhan, China. Given its seriously escalating outbreak in all parts of the globe, forecast maps and analysis of confirmed cases (CS) have become a vital and challenging task. In this study, a new forecasting model is presented to analyze and forecast the CS of COVID-19 for the coming days based on the data reported since 22 Jan 2020. The proposed forecasting model, named ISACL-MFNN, integrates an improved interior search algorithm (ISA) based on a chaotic learning (CL) strategy into a multi-layer feed-forward neural network (MFNN). The ISACL incorporates the CL strategy to enhance the performance of ISA and avoid becoming trapped in local optima. This methodology is intended to train the neural network by tuning its parameters to optimal values, thus achieving a high level of accuracy in the forecasted results. The ISACL-MFNN model is investigated on the official COVID-19 data reported by the World Health Organization (WHO) to analyze the confirmed cases for the upcoming days. The performance of the proposed forecasting model is validated and assessed using several indices, including the mean absolute error (MAE), root mean square error (RMSE), and mean absolute percentage error (MAPE), and comparisons with other optimization algorithms are presented. The proposed model is investigated in the most affected countries (i.e., USA, Italy, and Spain). The experimental simulations illustrate that the proposed ISACL-MFNN provides promising performance compared with the other algorithms when forecasting for the candidate countries.
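
The three error indices named in this abstract have standard definitions, which can be sketched as follows. The function names and the sample values used with them are illustrative assumptions, not data or code from the paper.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumes no actual value is zero (true for cumulative confirmed cases
    once the first case has been reported).
    """
    relative = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * relative / len(actual)
```

For example, with made-up case counts `[100, 200, 400]` and forecasts `[110, 190, 400]`, `mape` gives about 5%, while `mae` and `rmse` report the error in numbers of cases; RMSE penalizes large individual misses more heavily than MAE.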

Detection of Coronavirus (COVID-19) Associated Pneumonia based on Generative Adversarial Networks and a Fine-Tuned Deep Transfer Learning Model using Chest X-ray Dataset

Apr 02, 2020

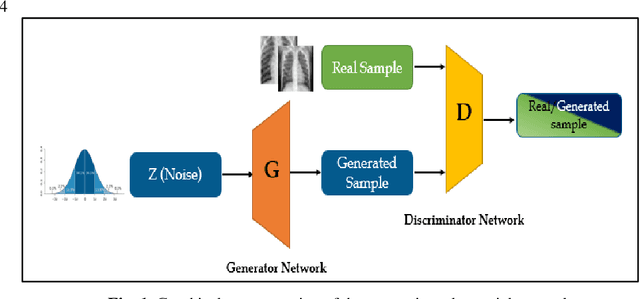

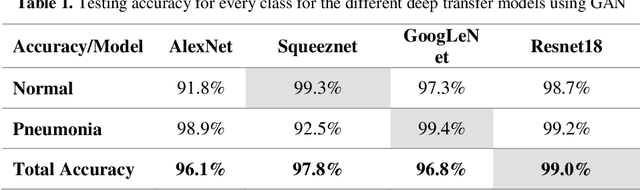

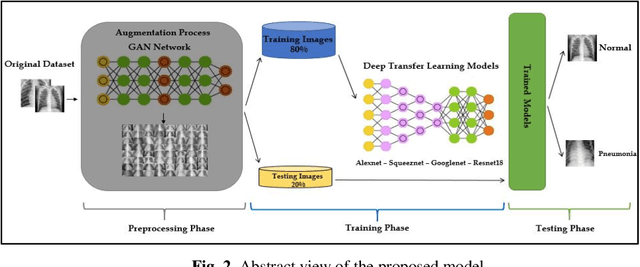

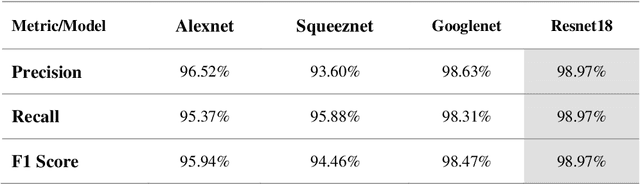

Abstract: The COVID-19 coronavirus is a devastating virus, according to the World Health Organization. This novel virus leads to pneumonia, an infection that inflames the air sacs of the lungs. One method of detecting this inflammation is a chest X-ray. This paper presents a pneumonia chest X-ray detection approach based on generative adversarial networks (GAN) with fine-tuned deep transfer learning for a limited dataset. The use of GAN improves the robustness of the proposed model, makes it immune to overfitting, and helps generate more images from the dataset. The dataset used in this research consists of 5863 X-ray images in two categories: Normal and Pneumonia. This research uses only 10% of the dataset as training data and generates 90% of the images using GAN to prove the efficiency of the proposed model. AlexNet, GoogLeNet, SqueezeNet, and ResNet18 are selected as the deep transfer learning models to detect pneumonia from chest X-rays. These models are selected because their architectures have a small number of layers, which reduces model complexity and the memory and time consumed. The combination of GAN and deep transfer models proved its efficiency according to the testing accuracy measurement. The research concludes that ResNet18 is the most appropriate deep transfer model according to testing accuracy, achieving 99% along with the other performance metrics (precision, recall, and F1 score) while using GAN as an image augmenter. Finally, the results are compared with related work that used the same dataset, except that this research used only 10% of the original dataset. The presented work achieved higher testing accuracy than the related work.

A Proposed Artificial intelligence Model for Real-Time Human Action Localization and Tracking

Nov 09, 2019

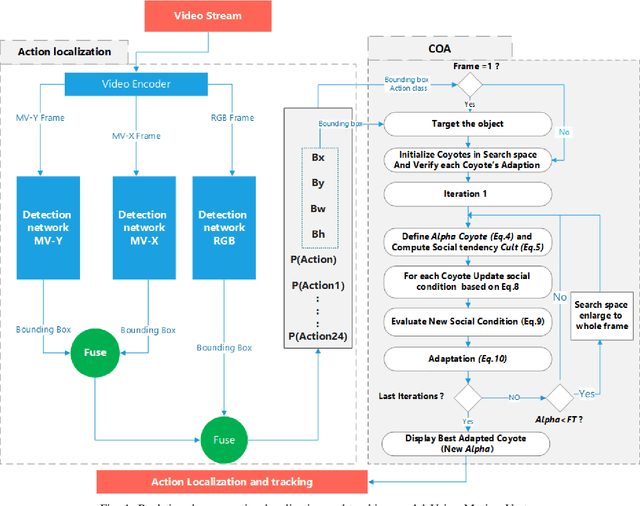

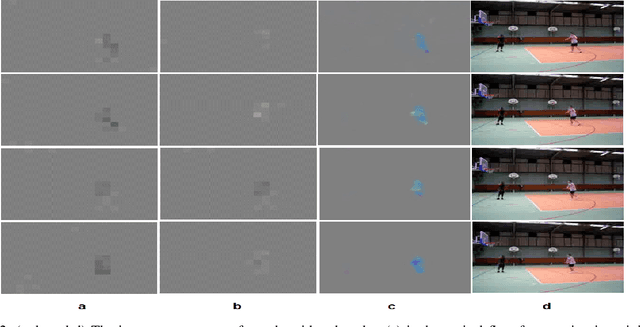

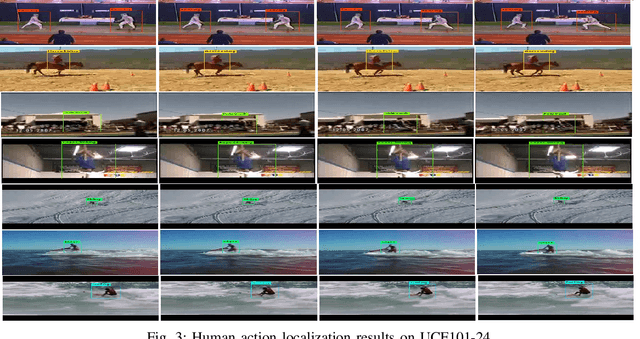

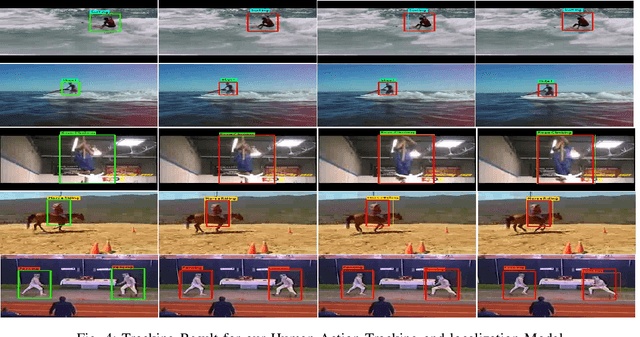

Abstract:In recent years, artificial intelligence (AI) based on deep learning (DL) has sparked tremendous global interest. DL is widely used today and has expanded into various interesting areas. It is becoming more popular in cross-subject research, such as studies of smart city systems, which combine computer science with engineering applications. Human action detection is one of these areas. Human action detection is an interesting challenge due to its stringent requirements in terms of computing speed and accuracy. High-accuracy real-time object tracking is also considered a significant challenge. This paper integrates the YOLO detection network, which is considered a state-of-the-art tool for real-time object detection, with motion vectors and the Coyote Optimization Algorithm (COA) to construct a real-time human action localization and tracking system. The proposed system starts with the extraction of motion information from a compressed video stream and the extraction of appearance information from RGB frames using an object detector. Then, a fusion step between the two streams is performed, and the results are fed into the proposed action tracking model. The COA is used in object tracking due to its accuracy and fast convergence. The basic foundation of the proposed model is the utilization of motion vectors, which already exist in a compressed video bit stream and provide sufficient information to improve the localization of the target action without requiring high consumption of computational resources compared with other popular methods of extracting motion information, such as optical flows. This advantage allows the proposed approach to be implemented in challenging environments where the computational resources are limited, such as Internet of Things (IoT) systems.

Monkey Optimization System with Active Membranes: A New Meta-heuristic Optimization System

Sep 30, 2019

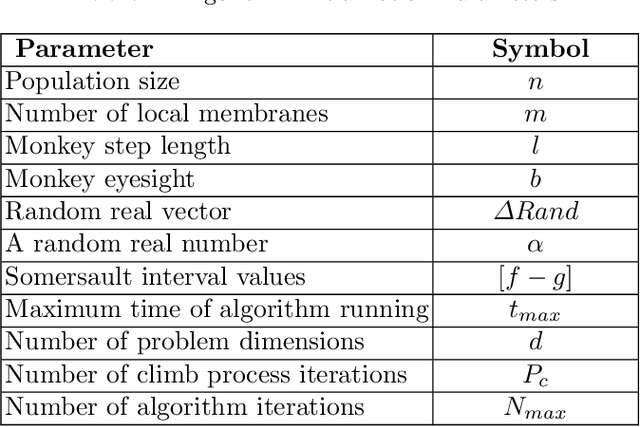

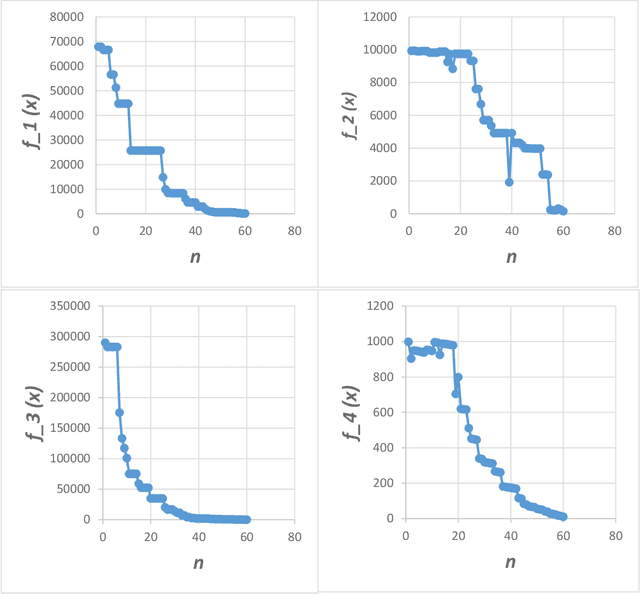

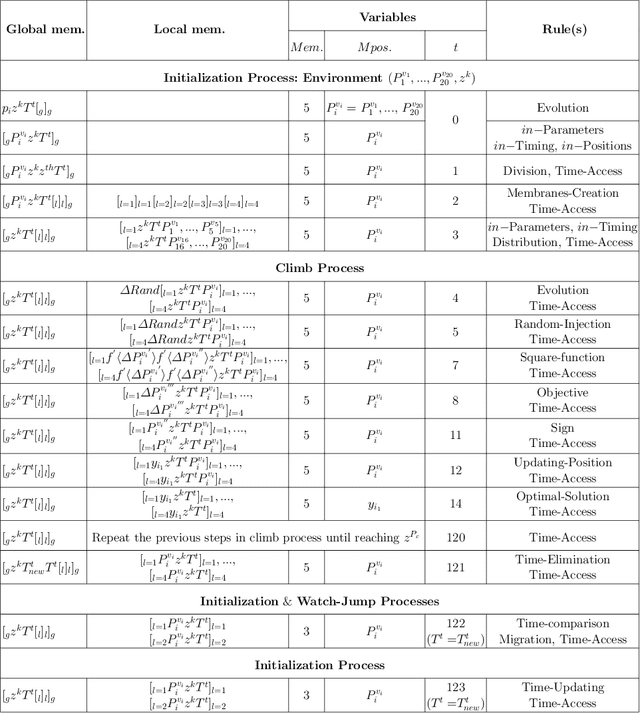

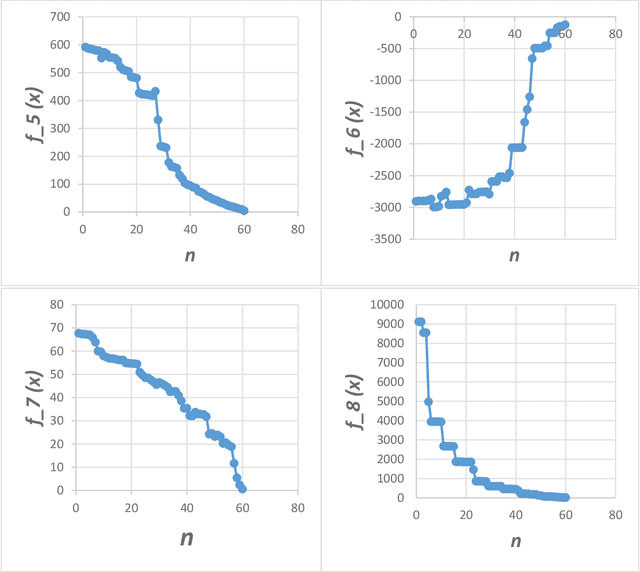

Abstract: Optimization techniques, which are used to find the optimal solution in a search space, have not solved the problem of time consumption. The objective of this study is to tackle the sequential processing problem in the Monkey Algorithm and to simulate the natural parallel behavior of monkeys. To this end, a P system with active membranes is constructed by providing a codification of the Monkey Algorithm within the context of a cell-like P system, defining accordingly the elements of the model: membrane structure, objects, rules, and their behavior. Unlike the original algorithm, the proposed algorithm models the natural behavior of the climb process using separate membranes. Moreover, it introduces a membrane migration process to select the best solution, and a time stamp is added as an additional stopping criterion to control the timing of the algorithm. The results indicate a substantial solution to the time consumption problem, a significant representation of the natural behavior of monkeys, and a considerable chance of reaching the best solution in the context of meta-heuristics. In addition, experiments use commonly used benchmark functions to test the performance of the algorithm, as well as the expected time of the proposed P Monkey optimization algorithm and the traditional Monkey Algorithm running on a given population size. The unit times are calculated based on the complexity of the algorithms: P Monkey takes one time unit to fire rule(s) over a population of size n, whereas the Monkey Algorithm takes one time unit to run a step of every mathematical equation over the population.

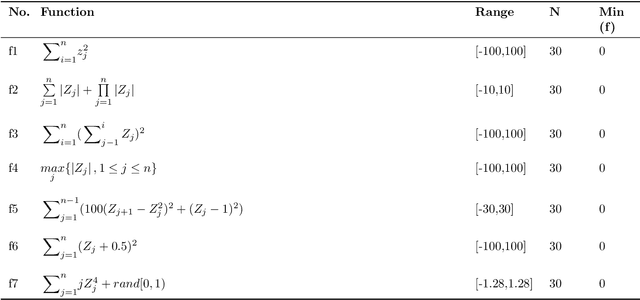

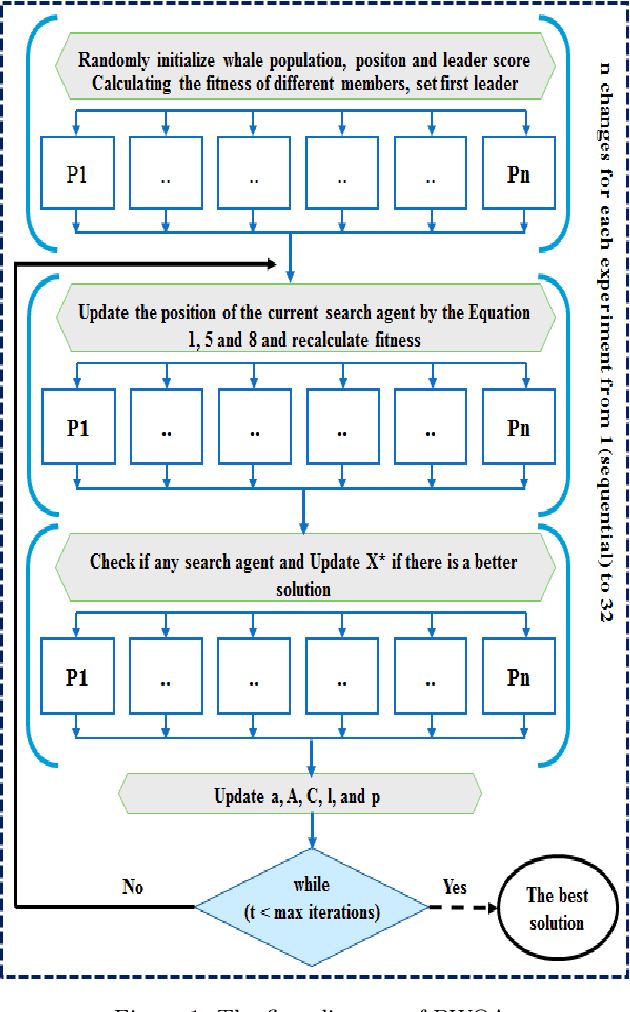

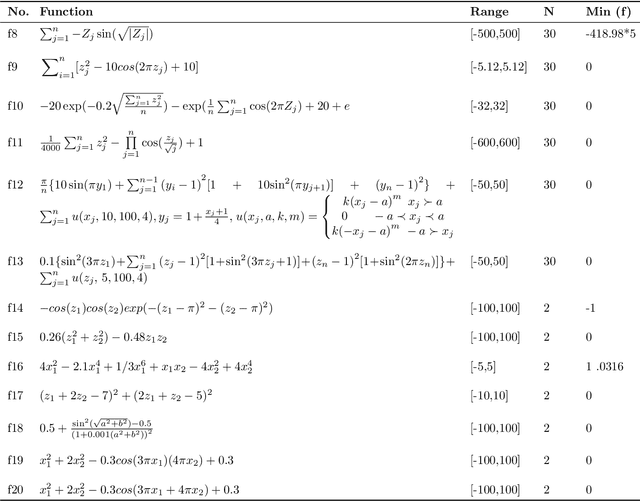

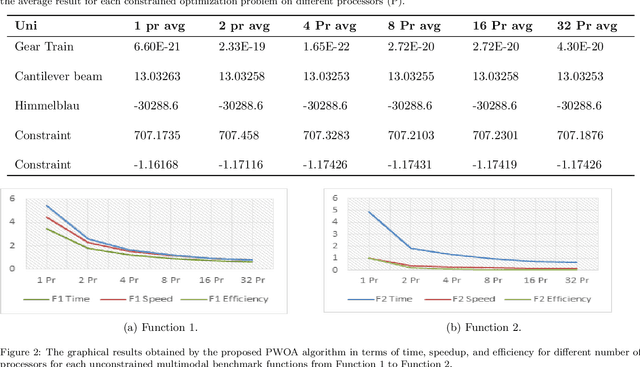

Parallel Whale Optimization Algorithm for Solving Constrained and Unconstrained Optimization Problems

Jun 21, 2018

Abstract: Engineering optimization problems have recently come to require large computational resources and long solution times, even on high-end multi-processor computational devices. This paper presents an OpenMP-inspired parallel version of the whale optimization algorithm (PWOA) that achieves enhanced computational throughput and global search capability. It automatically detects the number of available processors and divides the workload among them to make effective use of the available resources. PWOA is applied to twenty unconstrained optimization functions in multiple dimensions and five constrained engineering optimization functions. The performance of the proposed PWOA is evaluated using parallel metrics such as speedup and efficiency. The comparison shows that PWOA obtains the same results as the sequential version while exceeding it in performance. Furthermore, in terms of computational time and the speedup metric, PWOA achieves better results than the sequential standard WOA.
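
The processor detection, workload division, and parallel metrics mentioned in this abstract can be sketched as below. This is an assumption-laden illustration in Python rather than the OpenMP-style implementation the paper describes: `detect_workers`, `split_workload`, `speedup`, and `efficiency` are hypothetical names, and the even contiguous split is only one plausible way to divide a population among processors.

```python
import os


def detect_workers():
    """Number of available processors, falling back to 1 if unknown."""
    return os.cpu_count() or 1


def split_workload(n_items, n_workers):
    """Split n_items into n_workers contiguous (start, stop) ranges,
    as evenly as possible; the first ranges absorb any remainder."""
    base, extra = divmod(n_items, n_workers)
    ranges, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def speedup(t_sequential, t_parallel):
    """Speedup S = T_sequential / T_parallel."""
    return t_sequential / t_parallel


def efficiency(t_sequential, t_parallel, n_workers):
    """Efficiency E = S / p, where p is the number of processors."""
    return speedup(t_sequential, t_parallel) / n_workers
```

With illustrative timings of 12 s sequential and 4 s on 4 processors, the speedup is 3 and the efficiency is 0.75; an efficiency of 1 would mean perfectly linear scaling.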

Combining Support Vector Machine and Elephant Herding Optimization for Cardiac Arrhythmias

Jun 20, 2018

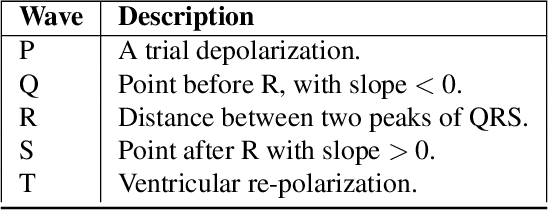

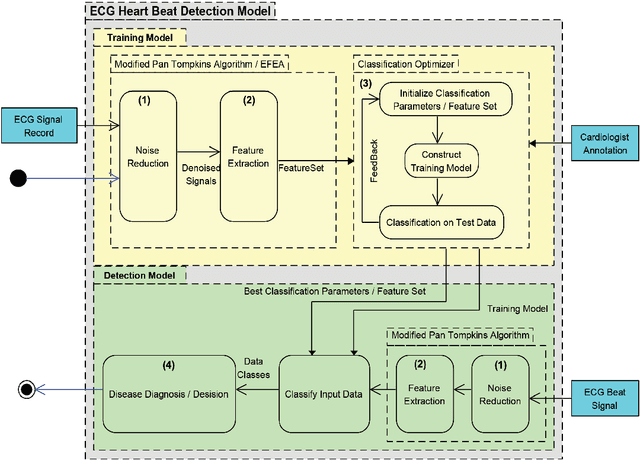

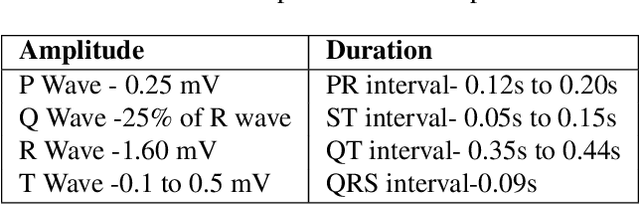

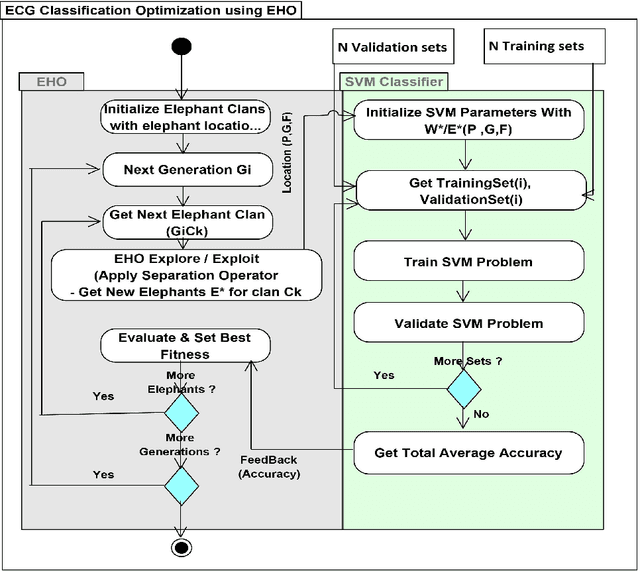

Abstract: Many people are currently suffering from heart diseases that can lead to untimely death. The most common heart abnormality is arrhythmia, which is simply irregular beating of the heart. A prediction system for the early intervention and prevention of heart diseases, including cardiovascular diseases (CVDs) and arrhythmia, is important. This paper introduces the classification of electrocardiogram (ECG) heartbeats into normal or abnormal. The approach is based on the combination of swarm optimization algorithms with a modified Pan-Tompkins algorithm (MPTA) and support vector machines (SVMs). The MPTA was implemented to remove ECG noise, followed by the application of the extended features extraction algorithm (EFEA) for ECG feature extraction. Then, elephant herding optimization (EHO) was used to find a subset of ECG features from a larger feature pool that provided better classification performance than that achieved using the whole set. Finally, SVMs were used for classification. The results show that the EHO-SVM approach achieved good classification results in terms of five statistical indices: accuracy, 93.31%; sensitivity, 45.49%; precision, 46.45%; F-measure, 45.48%; and specificity, 45.48%. Furthermore, the results demonstrate a clear improvement in accuracy compared to that of other methods when applied to the MIT-BIH arrhythmia database.
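
The five statistical indices listed in this abstract are conventionally derived from a binary confusion matrix. The sketch below uses the standard definitions; the function name `classification_indices` and the counts in the usage note are made up for illustration and are not taken from the paper.

```python
def classification_indices(tp, fp, tn, fn):
    """Compute standard binary classification indices from confusion-matrix
    counts (tp/fp/tn/fn = true/false positives/negatives).

    Assumes every denominator is non-zero.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)   # also called recall
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "precision": precision,
        "f_measure": f_measure,
        "specificity": specificity,
    }
```

For instance, `classification_indices(tp=40, fp=10, tn=45, fn=5)` yields an accuracy of 0.85 and a precision of 0.8. Accuracy can stay high on imbalanced data even when sensitivity is low, which is why the indices are usually reported together.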

Orbital Petri Nets: A Novel Petri Net Approach

Jun 08, 2018

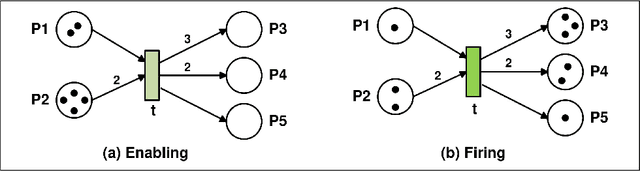

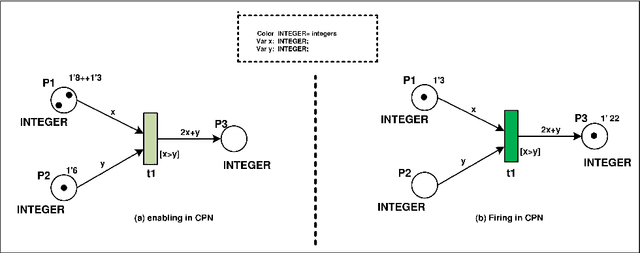

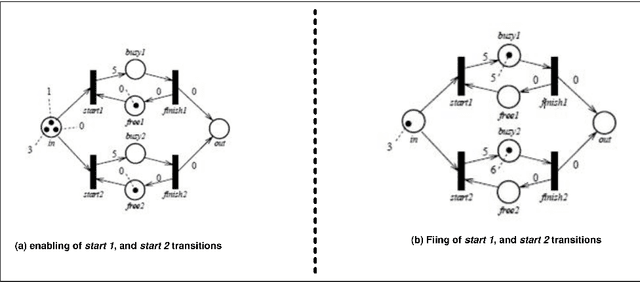

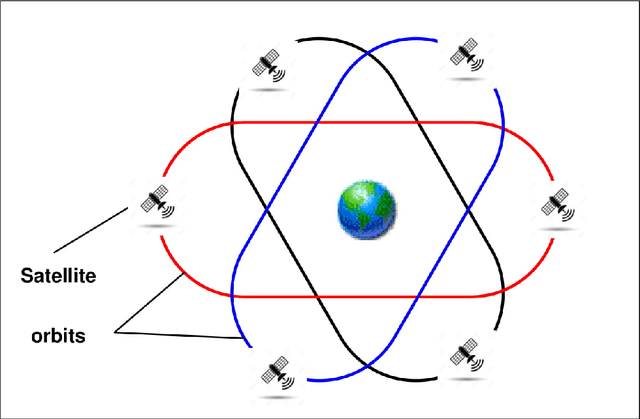

Abstract: Petri Nets are a very interesting tool for studying and simulating different behaviors of information systems. They can be used in different applications depending on the appropriate class of Petri Nets, whether classical, colored, or timed. In this paper, we introduce a new class of Petri Nets, called orbital Petri Nets (OPN), for studying orbital rotating systems within a specific domain. The study investigates and analyzes OPN, using the space debris collision problem as a case study. The mathematical investigation of two OPN models proved that space debris collisions can be prevented using the new firing sequence method in OPN. As future work, new smart algorithms for mitigating the space debris collision problem can be implemented and simulated with orbital Petri Nets.

Arabian Horse Identification Benchmark Dataset

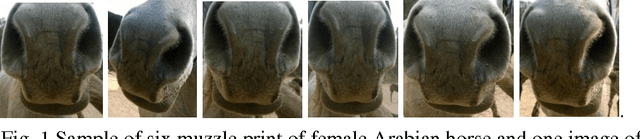

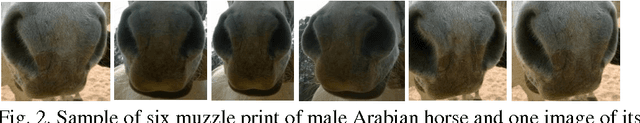

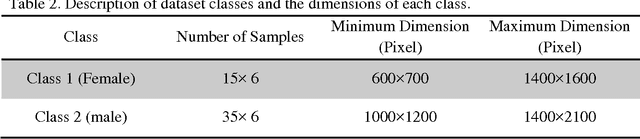

Jun 15, 2017

Abstract: The lack of a standard muzzle print database is a challenge for conducting research on Arabian horse identification systems. Therefore, collecting a database of muzzle print images is crucial. The dataset presented in this paper is an option for studies that need a dataset for testing and comparing algorithms under development for Arabian horse identification. The dataset consists of 300 color images collected from 50 Arabian horses, with 6 muzzle print images each. Special care has been given to the quality of the collected images. The collected images cover different quality levels and degradation factors, such as image rotation and image partiality, to simulate real-time identification operations. This dataset can be used to test Arabian horse identification systems, including the extracted features and the selected classifier.
