Eman Ahmed

Fashion Industry in the Age of Generative Artificial Intelligence and Metaverse: A systematic Review

May 22, 2025

Abstract: The fashion industry is an extremely profitable market that generates trillions of dollars in revenue by producing and distributing apparel, footwear, and accessories. This systematic literature review (SLR) analyzes the research landscape on Generative Artificial Intelligence (GAI) and the metaverse in the fashion industry, and investigates the impact of integrating both technologies to enhance the industry. The review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology, which comprises three essential phases: identification, evaluation, and reporting. In the identification phase, the target search problems are determined by selecting appropriate keywords and alternative synonyms, after which 578 documents from 2014 to the end of 2023 are retrieved. The evaluation phase applies three screening steps to assess the papers and select 118 eligible papers for full-text reading. Finally, the reporting phase thoroughly examines and synthesizes the 118 eligible papers to identify key themes associated with GAI and the metaverse in the fashion industry. Based on Strengths, Weaknesses, Opportunities, and Threats (SWOT) analyses performed for both GAI and the metaverse in the fashion industry, the review concludes that integrating GAI and the metaverse has the capacity to profoundly change the fashion sector, presenting opportunities for improved manufacturing, design, sales, and client experiences. Accordingly, the research proposes a new framework that integrates GAI and the metaverse to enhance the fashion industry. The framework presents different use cases that promote the fashion industry through this integration. Future research directions for achieving a successful integration are also outlined.

Deep Learning Advances on Different 3D Data Representations: A Survey

Aug 04, 2018

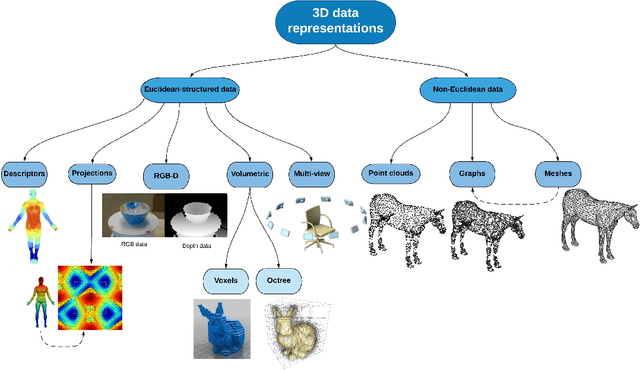

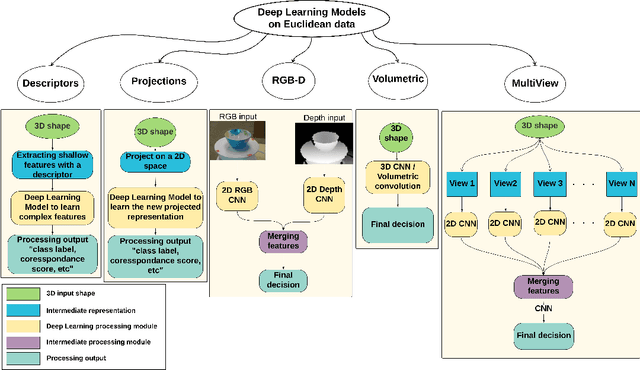

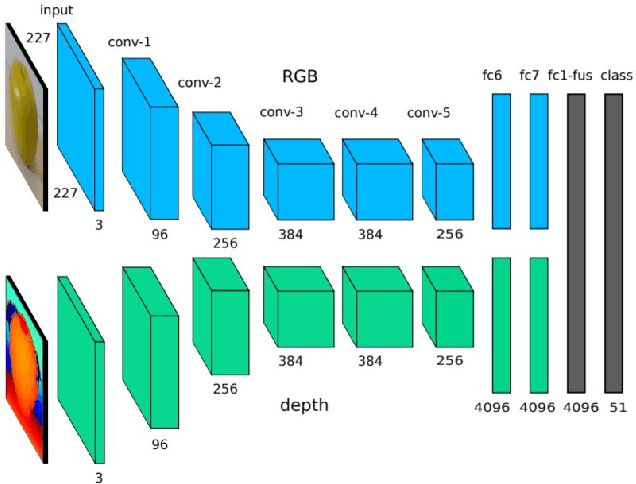

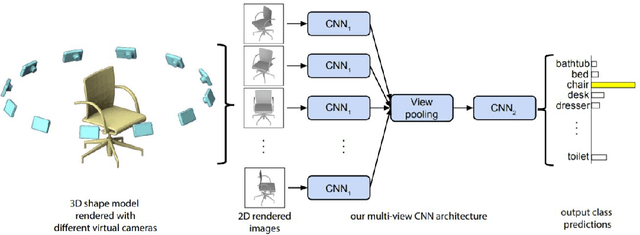

Abstract: 3D data is a valuable asset in the field of computer vision as it provides rich information about the full geometry of sensed objects and scenes. With the recent availability of large 3D datasets and the increase in computational power, it is now possible to consider applying deep learning to learn specific tasks on 3D data such as segmentation, recognition, and correspondence. Depending on the 3D data representation considered, different challenges may be foreseen in using existing deep learning architectures. In this paper, we provide a comprehensive overview of various 3D data representations, highlighting the difference between Euclidean and non-Euclidean ones. We also discuss how deep learning methods are applied to each representation, analyzing the challenges to overcome.
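To make the contrast between irregular and grid-structured 3D data concrete, the following is a minimal sketch that converts a point cloud (an unordered set of points) into a binary voxel occupancy grid (a regular grid). It is an illustration only and is not taken from the survey: the function name `voxelize`, the `grid_size` parameter, and the min-max normalization scheme are all assumptions made for this example.

```python
import numpy as np

def voxelize(points, grid_size=4):
    """Map an (N, 3) point cloud to a boolean occupancy grid of shape (G, G, G).

    Hypothetical helper for illustration; not a method from the survey.
    """
    points = np.asarray(points, dtype=float)
    mins = points.min(axis=0)
    spans = points.max(axis=0) - mins
    spans[spans == 0] = 1.0  # avoid division by zero when an axis is flat
    # Normalize each axis to [0, 1], then scale to voxel indices in [0, G-1].
    idx = np.round((points - mins) / spans * (grid_size - 1)).astype(int)
    grid = np.zeros((grid_size, grid_size, grid_size), dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.uniform(-1.0, 1.0, size=(100, 3))  # synthetic points, not real data
    occupancy = voxelize(cloud, grid_size=8)
    print(occupancy.shape, int(occupancy.sum()))
```

A grid like this can be fed to standard 3D convolutions, at the cost of memory that grows cubically with `grid_size` and of losing detail finer than one voxel.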

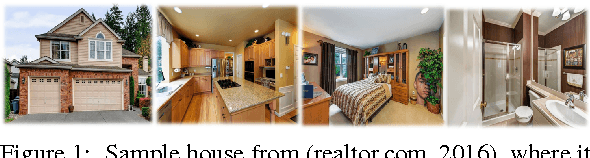

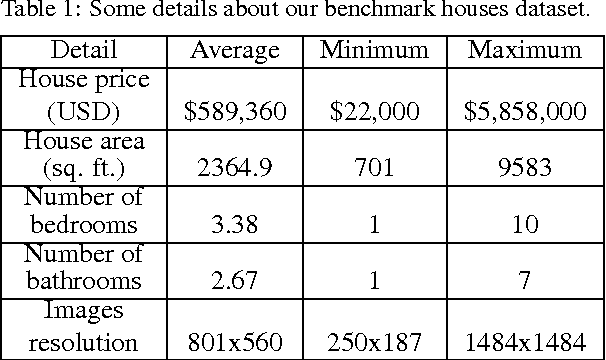

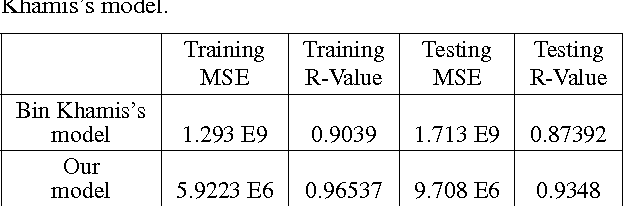

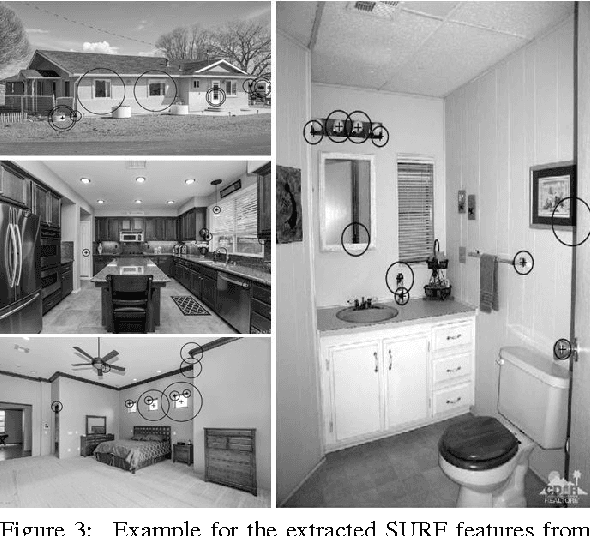

House price estimation from visual and textual features

Sep 27, 2016

Abstract: Most existing automatic house price estimation systems rely only on textual data, such as the house's neighborhood area and its number of rooms. The final price is estimated by a human agent who visits the house and assesses it visually. In this paper, we propose extracting visual features from house photographs and combining them with the house's textual information. The combined features are fed to a fully connected multilayer Neural Network (NN) that estimates the house price as its single output. To train and evaluate our network, we have collected the first houses dataset (to our knowledge) that combines both images and textual attributes. The dataset is composed of 535 sample houses from the state of California, USA. Our experiments showed that adding the visual features increased the R-value by a factor of 3 and decreased the Mean Square Error (MSE) by one order of magnitude compared with textual-only features. Additionally, when trained on the benchmark textual-only housing dataset, our proposed NN still outperformed the published results of the existing model.
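The pipeline described in the abstract (concatenate visual and textual features, pass them through a fully connected network with a single output, and score with MSE) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the single hidden layer, the ReLU activation, the feature dimensions, and the random weights are all hypothetical, and the inputs are synthetic rather than drawn from the paper's dataset.

```python
import numpy as np

def fuse_features(visual, textual):
    """Concatenate per-house visual and textual feature vectors along the feature axis."""
    return np.concatenate([visual, textual], axis=1)

def mlp_forward(x, w1, b1, w2, b2):
    """One hidden layer with ReLU, then a single linear output per house (assumed architecture)."""
    hidden = np.maximum(0.0, x @ w1 + b1)
    return (hidden @ w2 + b2).ravel()

def mse(y_true, y_pred):
    """Mean Square Error between true and predicted prices."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    visual = rng.normal(size=(5, 8))    # synthetic stand-in for image features
    textual = rng.normal(size=(5, 4))   # synthetic stand-in for attributes such as room count
    x = fuse_features(visual, textual)  # shape (5, 12)
    w1, b1 = rng.normal(size=(12, 16)), np.zeros(16)
    w2, b2 = rng.normal(size=(16, 1)), np.zeros(1)
    prices = rng.normal(size=5)         # synthetic targets
    print(mse(prices, mlp_forward(x, w1, b1, w2, b2)))
```

The weights here are untrained, so the printed error is meaningless as a result; the sketch only shows how the two feature sources enter one network and how the reported metric is computed.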
