Abhishek Chakraborty

Randomized Feasibility Methods for Constrained Optimization with Adaptive Step Sizes

Jan 27, 2026

Abstract: We consider minimizing an objective function subject to constraints defined by the intersection of lower-level sets of convex functions. We study two cases: (i) a strongly convex and Lipschitz-smooth objective function and (ii) a convex but possibly nonsmooth objective function. To handle constraints that are not easy to project onto, we use a randomized feasibility algorithm with Polyak steps and a random number of sampled constraints per iteration, while taking (sub)gradient steps to minimize the objective function. For case (i), we prove linear convergence in expectation of the objective function values to any prescribed tolerance using an adaptive stepsize. For case (ii), we develop a fully problem-parameter-free and adaptive stepsize scheme that yields an $O(1/\sqrt{T})$ worst-case rate in expectation. The infeasibility of the iterates decreases geometrically with the number of feasibility updates almost surely, while for the averaged iterates, we establish an expected lower bound on the function values relative to the optimal value that depends on the distribution of the random number of sampled constraints. For certain choices of sample-size growth, optimal rates are achieved. Finally, simulations on a Quadratically Constrained Quadratic Programming (QCQP) problem and on Support Vector Machines (SVM) demonstrate the computational efficiency of our algorithm compared to other state-of-the-art methods.
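
The alternating structure described above can be sketched as follows, under stated assumptions. Each iteration takes a (sub)gradient step on the objective and then applies a random number $N_k$ of Polyak feasibility steps, each on a sampled constraint $g_j(x) \le 0$: $x \leftarrow x - \frac{\max\{g_j(x), 0\}}{\|d_j\|^2} d_j$ with $d_j \in \partial g_j(x)$. The diminishing schedule `step_size(k)`, the uniform draws of $N_k$ and of the constraint index, and all function names are hypothetical stand-ins; the paper's adaptive stepsize rule and its sample-size distribution are not reproduced here.

```python
import math
import random


def polyak_feasibility_step(x, g, grad_g):
    """Polyak step toward the lower-level set {x : g(x) <= 0} of a convex g."""
    violation = g(x)
    if violation <= 0:
        return x  # constraint already satisfied: no move
    d = grad_g(x)  # a (sub)gradient of g at x
    norm_sq = sum(di * di for di in d)
    if norm_sq == 0:
        return x  # degenerate subgradient: skip this constraint
    step = violation / norm_sq
    return [xi - step * di for xi, di in zip(x, d)]


def randomized_feasibility_method(x0, grad_f, constraints, step_size,
                                  iters, max_samples, seed=0):
    """Alternate objective (sub)gradient steps with randomized Polyak feasibility steps.

    constraints: list of (g, grad_g) pairs, one per convex constraint g(x) <= 0.
    step_size:   callable k -> stepsize for the objective step (hypothetical schedule).
    max_samples: N_k is drawn uniformly from {1, ..., max_samples} (assumed distribution).
    """
    rng = random.Random(seed)
    x = list(x0)
    for k in range(iters):
        # (Sub)gradient step on the objective f.
        alpha = step_size(k)
        x = [xi - alpha * gi for xi, gi in zip(x, grad_f(x))]
        # Random number of feasibility updates, each on a uniformly sampled constraint.
        n_k = rng.randint(1, max_samples)
        for _ in range(n_k):
            g, grad_g = rng.choice(constraints)
            x = polyak_feasibility_step(x, g, grad_g)
    return x


# Toy problem: minimize ||x - (2, 2)||^2 subject to x1^2 + x2^2 <= 1.
# The constrained minimizer is (1/sqrt(2), 1/sqrt(2)).
ball = (lambda x: x[0] ** 2 + x[1] ** 2 - 1.0, lambda x: [2 * x[0], 2 * x[1]])
x_final = randomized_feasibility_method(
    x0=[0.0, 0.0],
    grad_f=lambda x: [2 * (x[0] - 2.0), 2 * (x[1] - 2.0)],
    constraints=[ball],
    step_size=lambda k: 0.5 / (k + 1),
    iters=200,
    max_samples=3,
)
print(x_final, math.hypot(*x_final))
```

In this toy problem the objective step pushes the iterate outward along the diagonal and the Polyak steps pull it back toward the unit circle, so `x_final` ends up close to $(1/\sqrt{2}, 1/\sqrt{2})$ with a small residual constraint violation.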

Demonstrating Superresolution in Radar Range Estimation Using a Denoising Autoencoder

Jun 17, 2025

Abstract: We apply machine learning methods to demonstrate range superresolution in remote sensing radar detection. Specifically, we implement a denoising autoencoder to estimate the distance between two equal-intensity scatterers in the subwavelength regime. The machine learning models are trained on waveforms subject to a bandlimit constraint, so that precision is optimized for ranges much smaller than the inverse bandlimit. The autoencoder achieves effective dimensionality reduction, with the bottleneck layer exhibiting a strong and consistent correlation with the true scatterer separation. We confirm reproducibility across different training sessions and network initializations by analyzing the scaled encoder outputs and their robustness to noise. We investigate the behavior of the bottleneck layer for three types of pulses: a traditional sinc pulse, a bandlimited triangle-type pulse, and a theoretically near-optimal pulse constructed from a spherical Bessel function basis. The Bessel signal performs best, followed by the triangle wave, with the sinc signal performing worst, highlighting the crucial role of signal design in the success of machine-learning-based range resolution.
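
As a minimal sketch of how bandlimited training data for such a model might be generated, the code below builds noise-free two-scatterer returns from a sinc pulse and pairs each with a noisy copy, the input/target form a denoising autoencoder consumes. It assumes normalized units, a real baseband sinc pulse with bandwidth `bandwidth` (so the inverse bandlimit is `1 / bandwidth`), scatterers placed symmetrically about $t = 0$, and additive white Gaussian noise; the triangle-type and spherical-Bessel pulses are not reproduced, and all function names are hypothetical.

```python
import math
import random


def sinc_pulse(t, bandwidth):
    """Normalized sinc pulse whose spectrum is confined to |f| <= bandwidth / 2."""
    x = math.pi * bandwidth * t
    return 1.0 if x == 0 else math.sin(x) / x


def two_scatterer_echo(times, separation, bandwidth):
    """Noise-free return from two equal-intensity point scatterers centred on t = 0."""
    half = separation / 2
    return [sinc_pulse(t - half, bandwidth) + sinc_pulse(t + half, bandwidth)
            for t in times]


def make_training_pair(times, separation, bandwidth, noise_std, rng):
    """(noisy input, clean target) pair for a denoising autoencoder."""
    clean = two_scatterer_echo(times, separation, bandwidth)
    noisy = [s + rng.gauss(0.0, noise_std) for s in clean]
    return noisy, clean


def midpoint_is_peak(separation, bandwidth, dt=1e-3):
    """True if the two echoes merge into a single peak at t = 0 (no visible dip)."""
    s_minus, s_0, s_plus = two_scatterer_echo([-dt, 0.0, dt], separation, bandwidth)
    return s_0 > s_minus and s_0 > s_plus


# Usage: one training pair on a normalized time grid, subwavelength separation.
rng = random.Random(0)
times = [-5.0 + 0.05 * i for i in range(201)]
noisy, clean = make_training_pair(times, separation=0.2, bandwidth=1.0,
                                  noise_std=0.05, rng=rng)
```

The helper `midpoint_is_peak` illustrates why this regime is hard: for separations well below `1 / bandwidth` the two echoes merge into a single peak, so simple peak picking cannot recover the separation, whereas a well-separated pair shows a dip between the scatterers.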

DynaMarks: Defending Against Deep Learning Model Extraction Using Dynamic Watermarking

Jul 27, 2022

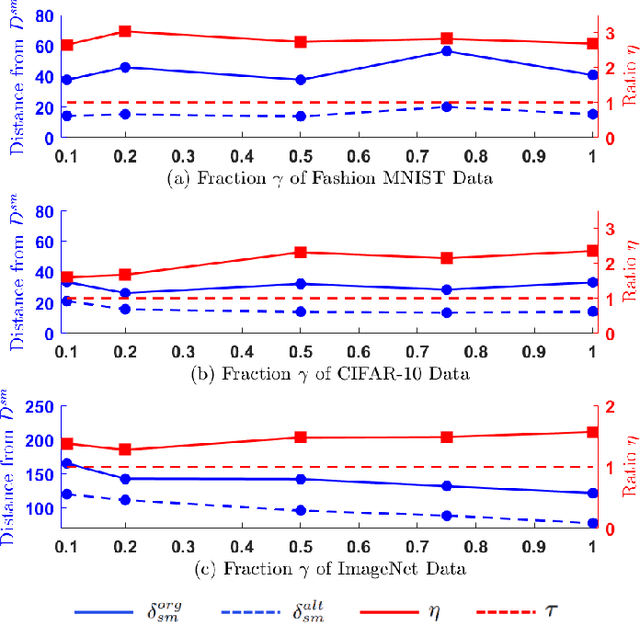

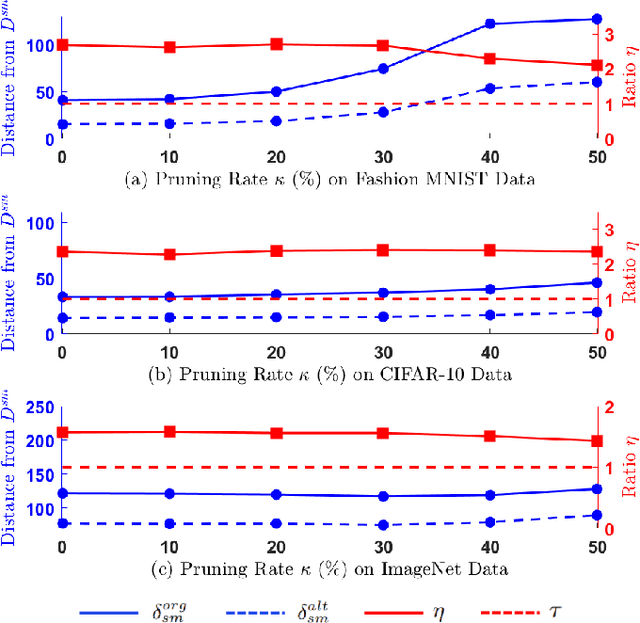

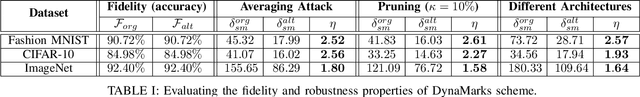

Abstract: The functionality of a deep learning (DL) model can be stolen via model extraction, in which an attacker obtains a surrogate model by utilizing the responses from a prediction API of the original model. In this work, we propose a novel watermarking technique called DynaMarks to protect the intellectual property (IP) of DL models against such model extraction attacks in a black-box setting. Unlike existing approaches, DynaMarks does not alter the training process of the original model; instead, it embeds a watermark into a surrogate model by dynamically changing the output responses from the original model's prediction API based on certain secret parameters at inference runtime. Experimental results on the Fashion MNIST, CIFAR-10, and ImageNet datasets demonstrate the efficacy of the DynaMarks scheme in watermarking surrogate models while preserving the accuracy of the original models deployed on edge devices. We also evaluate the robustness of DynaMarks against various watermark removal strategies, thus allowing a DL model owner to reliably prove model ownership.
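
The abstract does not specify how DynaMarks alters the API responses, so the following is only a hypothetical illustration of the general idea of a keyed, label-preserving change to a prediction API's output; it is not the DynaMarks scheme. The HMAC-derived weights, the `strength` parameter, the fallback rule, and the function name are all assumptions.

```python
import hashlib
import hmac


def keyed_output_perturbation(probs, query, secret_key, strength=0.1):
    """Hypothetical keyed perturbation of a prediction API's probability vector.

    Moves a fraction `strength` of the non-top-class mass and redistributes it
    among the non-top classes using weights derived from an HMAC of the query.
    The top-1 class and its probability are left unchanged.
    """
    if len(probs) < 2:
        return list(probs)
    top = max(range(len(probs)), key=probs.__getitem__)
    digest = hmac.new(secret_key, query, hashlib.sha256).digest()
    others = [j for j in range(len(probs)) if j != top]
    # One pseudo-random, strictly positive weight per non-top class.
    weights = {j: digest[j % len(digest)] + 1 for j in others}
    total_weight = sum(weights.values())
    other_mass = sum(probs[j] for j in others)
    out = list(probs)
    for j in others:
        out[j] = ((1 - strength) * probs[j]
                  + strength * other_mass * weights[j] / total_weight)
    # Fall back to the unmodified response if the perturbation could change the label.
    if any(out[j] >= probs[top] for j in others):
        return list(probs)
    return out


# Usage: the same query and key always yield the same perturbed response.
response = keyed_output_perturbation([0.1, 0.7, 0.2], b"query-1", b"owner-secret")
```

Because the non-top mass is only redistributed, the output still sums to one and the predicted label is unchanged, which mirrors the abstract's goal of preserving the original model's accuracy; how DynaMarks actually derives its perturbation and verifies ownership is not described in the abstract.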

Sparse Representations of Positive Functions via Projected Pseudo-Mirror Descent

Nov 13, 2020

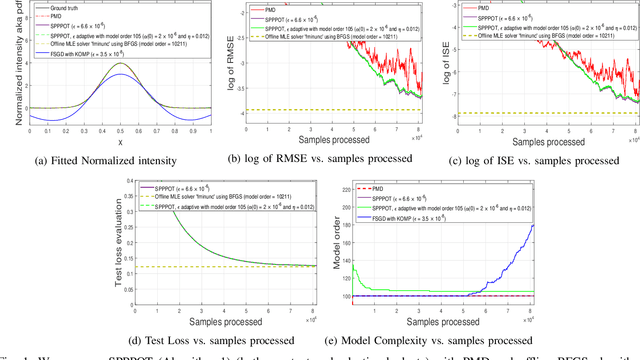

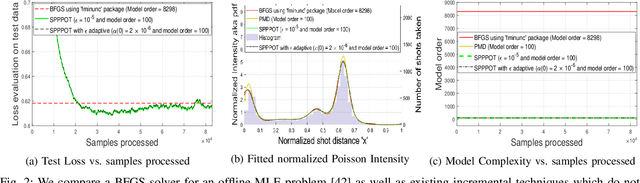

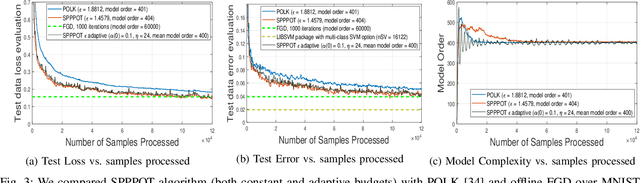

Abstract: We consider the problem of expected risk minimization when the population loss is strongly convex and the target domain of the decision variable is required to be nonnegative, motivated by the settings of maximum likelihood estimation (MLE) and trajectory optimization. We restrict focus to the case where the decision variable belongs to a nonparametric Reproducing Kernel Hilbert Space (RKHS). To solve this problem, we consider stochastic mirror descent that employs (i) pseudo-gradients and (ii) projections. Compressive projections are executed via kernel orthogonal matching pursuit (KOMP), which overcomes the fact that the vanilla RKHS parameterization grows unbounded with time. Moreover, pseudo-gradients are needed, e.g., when stochastic gradients themselves define integrals over unknown quantities that must be evaluated numerically, as in estimating the intensity parameter of an inhomogeneous Poisson process and in multi-class kernel logistic regression with latent multi-kernels. We establish tradeoffs between the accuracy of convergence in mean and the projection budget parameter under constant step-size and compression budget, as well as non-asymptotic bounds on the model complexity. Experiments demonstrate that we achieve state-of-the-art accuracy and complexity tradeoffs for inhomogeneous Poisson process intensity estimation and multi-class kernel logistic regression.
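
The method above operates in an RKHS with pseudo-gradients and KOMP compression, which is beyond a short example. As a finite-dimensional stand-in, the sketch below shows only how a mirror-descent step can keep a decision variable nonnegative without an explicit projection, using one standard mirror map, the (unnormalized) negative entropy, whose update is multiplicative. Whether the paper uses this particular mirror map is not stated in the abstract; the sketch uses exact gradients, no pseudo-gradients, and no KOMP step, and the function name is hypothetical.

```python
import math


def entropic_mirror_descent(grad, x0, step, iters):
    """Mirror descent with the negative-entropy mirror map on the positive orthant.

    The update x <- x * exp(-step * grad(x)) is multiplicative, so every iterate
    stays strictly positive without an explicit projection onto x >= 0.
    """
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi * math.exp(-step * gi) for xi, gi in zip(x, g)]
    return x


# Toy problem: minimize 0.5 * ||x - c||^2 over x > 0 with c = (2, -1);
# the minimizer over the nonnegative orthant is (2, 0).
c = [2.0, -1.0]
x_final = entropic_mirror_descent(
    grad=lambda x: [xi - ci for xi, ci in zip(x, c)],
    x0=[1.0, 1.0],
    step=0.1,
    iters=500,
)
print(x_final)
```

The first coordinate settles at its unconstrained optimum, while the second decays toward the boundary value 0 but never crosses it, which is the positivity property that motivates mirror descent in this setting.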
