Abdullahi Mohammad

Enhancing Physical Layer Security with Deep SIMO Auto-Encoder and RF Impairments Modeling

Apr 30, 2024

Abstract: This paper presents a novel approach to achieving secure wireless communication by leveraging the inherent characteristics of wireless channels through end-to-end learning using a single-input-multiple-output (SIMO) autoencoder (AE). To ensure a more realistic signal transmission, we derive a signal model that captures all radio frequency (RF) hardware impairments to provide reliable and secure communication. Performance evaluations against traditional linear decoders, such as zero-forcing (ZF) and linear minimum mean square error (LMMSE), and the optimal nonlinear decoder, maximum likelihood (ML), demonstrate that the AE-based SIMO model exhibits superior bit error rate (BER) performance, with a substantial gap, even in the presence of RF hardware impairments. Additionally, the proposed model offers enhanced security features: it prevents potential eavesdroppers from intercepting transmitted information and leverages RF impairments for augmented physical layer security and device identification. These findings underscore the efficacy of the proposed end-to-end learning approach in achieving secure and robust wireless communication.
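For readers unfamiliar with the baseline decoders named in the abstract, the following is a minimal sketch of ZF, LMMSE, and ML detection for an idealised SIMO link `y = h * s + n`. It assumes a known channel vector `h`, unit-power symbols, and no RF impairments; the function names and the model are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def zf_decode(y, h):
    """Zero-forcing estimate: apply the pseudo-inverse h^H / (h^H h)."""
    # np.vdot conjugates its first argument, so this is h^H y.
    return np.vdot(h, y) / np.vdot(h, h).real

def lmmse_decode(y, h, noise_var):
    """LMMSE estimate, assuming unit-power symbols and white noise
    with per-antenna variance noise_var."""
    return np.vdot(h, y) / (np.vdot(h, h).real + noise_var)

def ml_decode(y, h, constellation):
    """Maximum-likelihood decision under Gaussian noise:
    pick the constellation point closest to y after the channel."""
    dists = [np.linalg.norm(y - h * s) ** 2 for s in constellation]
    return constellation[int(np.argmin(dists))]
```

With a single transmitted stream, both linear estimates are scalings of the matched-filter output `h^H y`; the LMMSE version shrinks the estimate towards zero as the noise variance grows.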

An Unsupervised Deep Unfolding Framework for robust Symbol Level Precoding

Nov 15, 2021

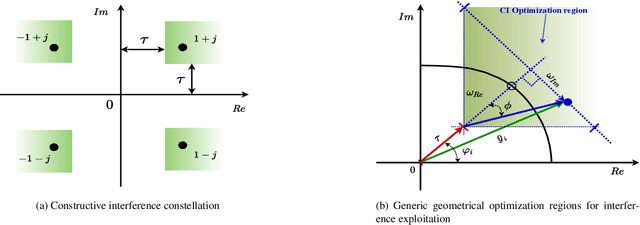

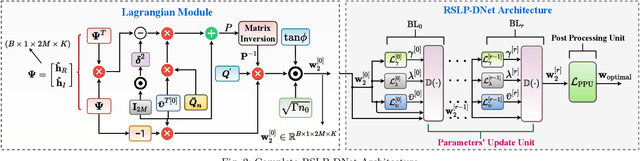

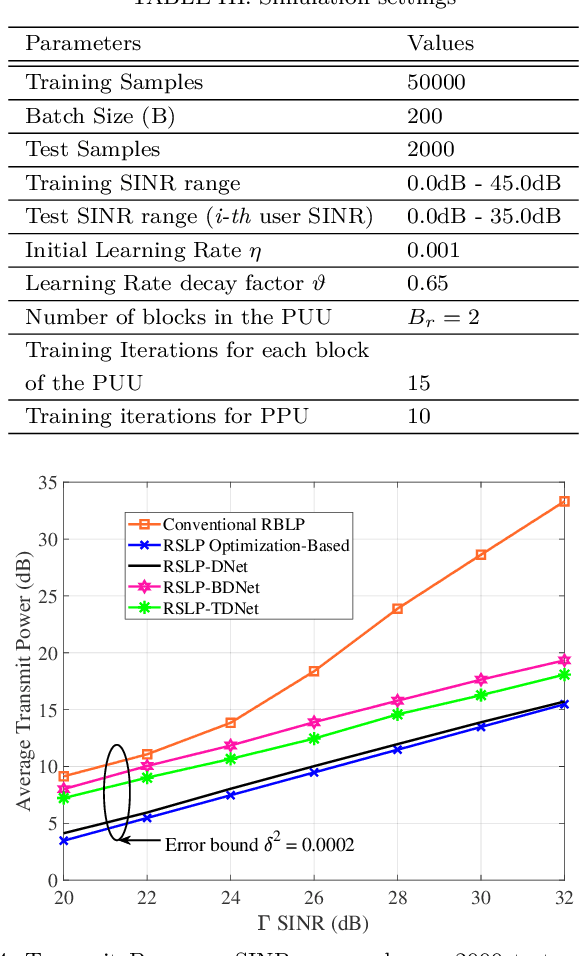

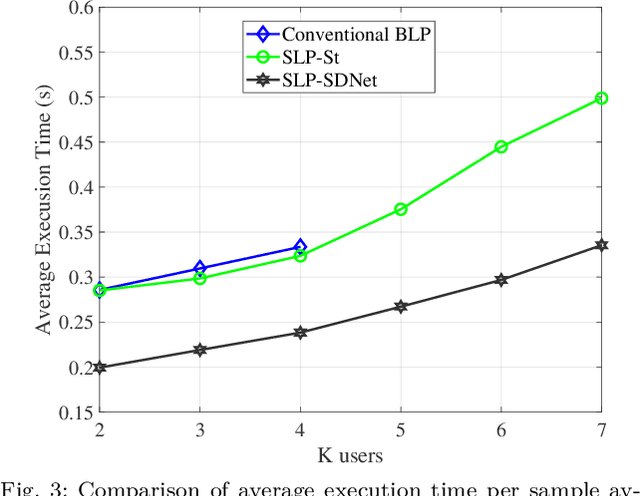

Abstract: Symbol Level Precoding (SLP) has attracted significant research interest due to its ability to exploit interference for energy-efficient transmission. This paper proposes an unsupervised deep neural network (DNN) based SLP framework. Instead of naively training a DNN architecture for SLP without considering the specifics of the SLP optimization objective, our proposal unfolds a power minimization SLP formulation based on the interior point method (IPM) proximal 'log' barrier function. Furthermore, we extend our proposal to a robust precoding design under channel state information (CSI) uncertainty. The results show that our proposed learning framework provides near-optimal performance while reducing the computational cost from O(n^7.5) to O(n^3) for the symmetric system case, where n denotes both the number of transmit antennas and the number of users. This significant complexity reduction is also reflected in a proportional decrease in the proposed approach's execution time compared to the SLP optimization-based solution.

Learning-Based Symbol Level Precoding: A Memory-Efficient Unsupervised Learning Approach

Nov 15, 2021

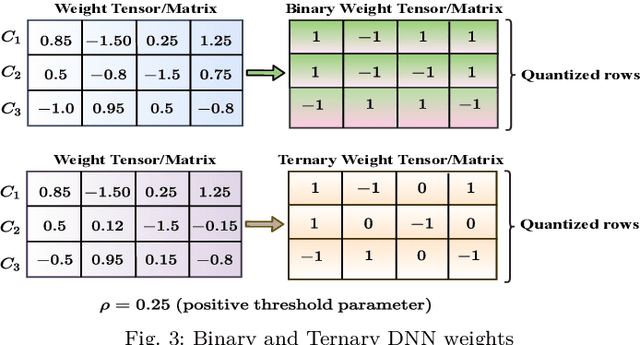

Abstract: Symbol level precoding (SLP) has been proven to be an effective means of managing the interference in a multiuser downlink transmission and also enhancing the received signal power. This paper proposes an unsupervised learning based SLP that applies to quantized deep neural networks (DNNs). Rather than simply training a DNN in a supervised mode, our proposal unfolds a power minimization SLP formulation in an imperfect channel scenario using the interior point method (IPM) proximal 'log' barrier function. We use binary and ternary quantizations to compress the DNN's weight values. The results show significant memory savings for our proposals compared to the existing full-precision SLP-DNet, with model compression of ~21x and ~13x for binary DNN-based SLP (RSLP-BDNet) and ternary DNN-based SLP (RSLP-TDNets), respectively.

A Memory-Efficient Learning Framework for Symbol Level Precoding with Quantized NN Weights

Oct 13, 2021

Abstract: This paper proposes a memory-efficient deep neural network (DNN) based framework for symbol level precoding (SLP). We focus on a DNN with realistic finite precision weights and adopt an unsupervised deep learning (DL) based SLP model (SLP-DNet). We apply a stochastic quantization (SQ) technique to obtain its corresponding quantized version called SLP-SQDNet. The proposed scheme offers a scalable performance vs memory tradeoff by quantizing a scalable percentage of the DNN weights, and we explore binary and ternary quantizations. Our results show that while SLP-DNet provides near-optimal performance, its quantized versions through SQ yield 3.46x and 2.64x model compression for binary-based and ternary-based SLP-SQDNets, respectively. We also find that our proposals offer 20x and 10x computational complexity reductions compared to the SLP optimization-based approach and SLP-DNet, respectively.
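The idea of quantizing only a chosen percentage of the weights can be illustrated with a small sketch. Everything below is an assumption made for illustration: the scale-and-sign binarizer, the ternary threshold of 0.7 times the mean magnitude, the uniform random row selection, and all function names are common choices from the quantization literature, not the SLP-SQDNet procedure itself.

```python
import numpy as np

def binarize(w):
    """Map weights to {-a, +a}, with a the mean absolute value."""
    scale = np.mean(np.abs(w))
    return scale * np.where(w >= 0, 1.0, -1.0)

def ternarize(w, thresh_ratio=0.7):
    """Map weights to {-a, 0, +a}; small-magnitude weights become 0.
    thresh_ratio is a hypothetical tuning parameter."""
    delta = thresh_ratio * np.mean(np.abs(w))
    mask = np.abs(w) > delta
    scale = np.mean(np.abs(w[mask])) if mask.any() else 0.0
    return scale * np.sign(w) * mask

def partially_quantize(w, fraction, quantizer, rng):
    """Quantize a randomly chosen fraction of the rows of w and
    leave the remaining rows at full precision."""
    w = np.asarray(w, dtype=float)
    n_rows = w.shape[0]
    n_q = int(round(fraction * n_rows))
    rows = rng.choice(n_rows, size=n_q, replace=False)
    out = w.copy()
    for r in rows:
        out[r] = quantizer(w[r])
    return out, rows
```

Raising `fraction` towards 1 trades accuracy for memory, since a binarized row needs only one bit per weight plus a single scale factor.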

An Unsupervised Learning-Based Approach for Symbol-Level-Precoding

Apr 19, 2021

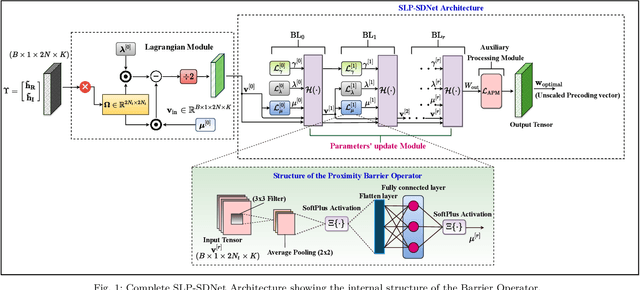

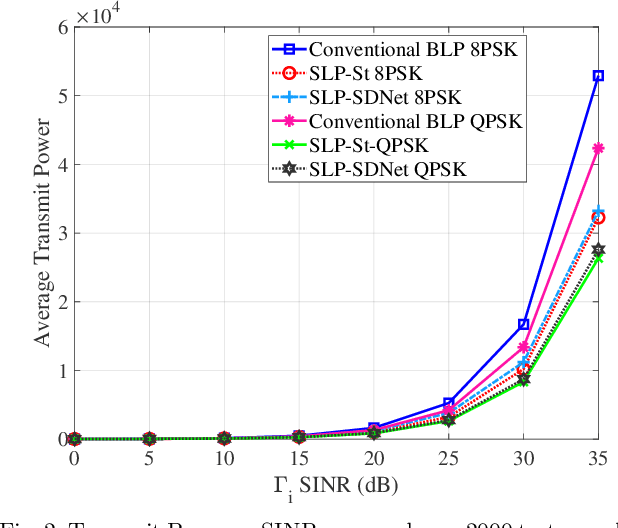

Abstract: This paper proposes an unsupervised learning-based precoding framework that trains deep neural networks (DNNs) with no target labels by unfolding an interior point method (IPM) proximal 'log' barrier function. The proximal 'log' barrier function is derived from the strict power minimization formulation subject to a signal-to-interference-plus-noise ratio (SINR) constraint. The proposed scheme exploits the known interference via symbol-level precoding (SLP) to minimize the transmit power and is named strict Symbol-Level-Precoding deep network (SLP-SDNet). The results show that SLP-SDNet outperforms the conventional block-level-precoding (Conventional BLP) scheme while achieving near-optimal performance faster than the SLP optimization-based approach.
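As background, the textbook logarithmic barrier construction behind interior point methods can be written as follows. This is the generic form only; the symbols f, g_k, and mu are placeholders introduced here, and the paper's specific SINR constraints and proximal variant are not reproduced.

```latex
\begin{align}
  &\min_{\mathbf{x}} \; f(\mathbf{x})
    \quad \text{s.t.} \quad g_k(\mathbf{x}) \le 0, \quad k = 1, \dots, K, \\
  &B(\mathbf{x}) = -\sum_{k=1}^{K} \ln\bigl(-g_k(\mathbf{x})\bigr),
    \quad \text{defined for } g_k(\mathbf{x}) < 0, \\
  &\min_{\mathbf{x}} \; f(\mathbf{x}) + \mu \, B(\mathbf{x}),
    \quad \mu > 0, \; \mu \to 0.
\end{align}
```

In a power minimization setting, f would play the role of the transmit power and each g_k would encode one user's SINR requirement. Because the barrier is differentiable inside the feasible region, the objective itself can serve as a training loss, so no target labels are needed.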

Complexity-Scalable Neural Network Based MIMO Detection With Learnable Weight Scaling

Sep 12, 2019

Abstract: This paper introduces a framework for systematic complexity scaling of deep neural network (DNN) based MIMO detectors. The model uses a fraction of the DNN inputs by scaling their values through weights that follow monotonically non-increasing functions. This allows for weight scaling across and within the different DNN layers in order to achieve scalable complexity-accuracy results. To reduce complexity further, we introduce a regularization constraint on the layer weights such that, at inference, parts (or the entirety) of network layers can be removed with minimal impact on the detection accuracy. We also introduce trainable weight-scaling functions for increased robustness to changes in the activation patterns and a further improvement in the detection accuracy at the same inference complexity. Numerical results show that our approach is 10-fold and 100-fold less complex than classical approaches based on semi-definite relaxation and ML detection, respectively.
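The mechanism of using only a fraction of a layer's inputs, weighted by a monotonically non-increasing profile, can be sketched as below. The linear profile, the truncation rule, and the function names are illustrative assumptions; the paper's trainable scaling functions and regularization are not reproduced.

```python
import numpy as np

def linear_profile(n):
    """Scaling coefficients decreasing linearly from 1 down to 1/n."""
    return 1.0 - np.arange(n) / n

def scaled_partial_layer(x, W, b, profile, fraction):
    """Affine layer that uses only the first fraction of the inputs,
    each scaled by its profile coefficient before the product."""
    n_in = x.shape[0]
    k = max(1, int(np.ceil(fraction * n_in)))
    scaled = profile[:k] * x[:k]
    # Only k columns of W are touched, so cost scales with fraction.
    return W[:, :k] @ scaled + b
```

Because the profile assigns the largest coefficients to the earliest inputs, training with it pushes the most important contributions to the front, so that dropping the trailing inputs at inference costs comparatively little accuracy.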
