An Unsupervised Learning-Based Approach for Symbol-Level-Precoding


Apr 19, 2021

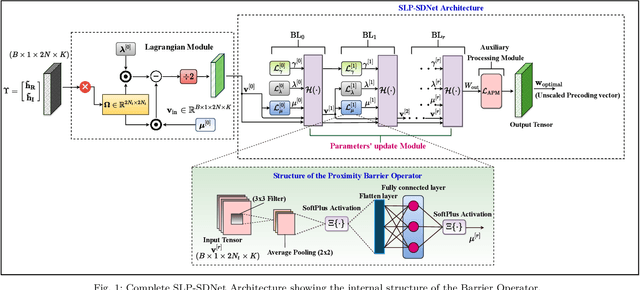

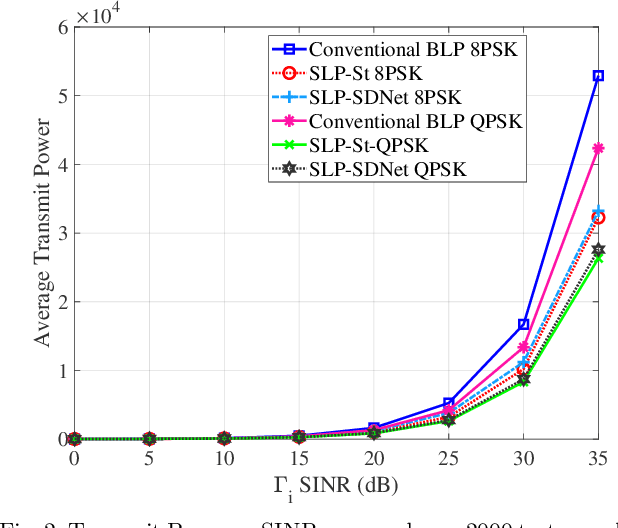

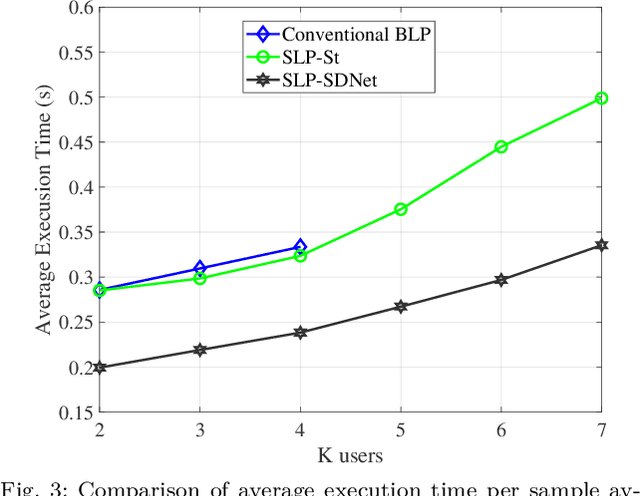

This paper proposes an unsupervised learning-based precoding framework that trains deep neural networks (DNNs) without target labels by unfolding the proximal "log" barrier function of an interior point method (IPM). The barrier function is derived from the strict power minimization formulation subject to signal-to-interference-plus-noise ratio (SINR) constraints. The proposed scheme, named strict Symbol-Level-Precoding deep network (SLP-SDNet), exploits the known interference via symbol-level precoding (SLP) to minimize the transmit power. The results show that SLP-SDNet outperforms the conventional block-level-precoding (BLP) scheme while achieving near-optimal performance faster than the optimization-based SLP approach.
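To illustrate how a log barrier can act as a label-free training objective, here is a minimal sketch. It is not the paper's proximal barrier or its SLP constraint set: it assumes a real-valued toy problem with hypothetical names (`w`, `H`, `gamma`, `mu`) in which the linear margin `H @ w - gamma` stands in for the SINR constraints, and it uses the standard (non-proximal) log barrier.

```python
import numpy as np

def log_barrier_loss(w, H, gamma, mu=0.1):
    """Toy unsupervised loss: transmit power plus a standard log-barrier term.

    All names are illustrative assumptions, not taken from the paper.
    w:     (n,) real-valued precoding vector
    H:     (k, n) real-valued channel matrix, one row per user
    gamma: (k,) required received-signal margin per user (stand-in for an SINR target)
    mu:    barrier weight; smaller values approximate the hard constraints more closely
    """
    slack = H @ w - gamma            # constraint i holds strictly when slack[i] > 0
    if np.any(slack <= 0):
        return np.inf                # the log barrier is undefined outside the feasible region
    power = np.dot(w, w)             # objective: transmit power ||w||^2
    return power - mu * np.sum(np.log(slack))

# Example: two users with an identity channel and unit margins.
H = np.eye(2)
gamma = np.ones(2)
feasible_loss = log_barrier_loss(np.array([3.0, 3.0]), H, gamma)
infeasible_loss = log_barrier_loss(np.array([0.5, 2.0]), H, gamma)
```

The loss depends only on the candidate precoder and the problem data, so no precomputed optimal precoder is needed as a label. To train a network with such a loss, the same expression would have to be written in an automatic-differentiation framework; this NumPy version only evaluates it.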
