Abdenour Hadid

Center for Machine Vision Research, University of Oulu, Finland

VLM-PAR: A Vision Language Model for Pedestrian Attribute Recognition

Dec 22, 2025

Abstract:Pedestrian Attribute Recognition (PAR) involves predicting fine-grained attributes such as clothing color, gender, and accessories from pedestrian imagery, yet is hindered by severe class imbalance, intricate attribute co-dependencies, and domain shifts. We introduce VLM-PAR, a modular vision-language framework built on frozen SigLIP 2 multilingual encoders. By aligning image and prompt embeddings through a compact cross-attention fusion that refines the visual features, VLM-PAR achieves a significant accuracy improvement on the highly imbalanced PA100K benchmark, setting a new state of the art, while also delivering significant gains in mean accuracy on the PETA and Market-1501 benchmarks. These results underscore the efficacy of integrating large-scale vision-language pretraining with targeted cross-modal refinement to overcome imbalance and generalization challenges in PAR.
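The cross-attention fusion mentioned in this abstract can be illustrated with plain scaled dot-product attention. The sketch below is not the VLM-PAR module: it assumes, purely for illustration, that prompt embeddings act as queries over visual tokens, and the function names and toy vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values
    according to its similarity to the corresponding keys."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Assumption: one prompt embedding (query) attends over two visual tokens.
prompt_embeddings = [[1.0, 0.0]]
visual_keys = [[1.0, 0.0], [1.0, 0.0]]      # identical keys -> equal weights
visual_values = [[2.0, 0.0], [4.0, 0.0]]
fused = cross_attention(prompt_embeddings, visual_keys, visual_values)
```

Because both keys are identical here, the query weights the two visual tokens equally and the fused vector is their average.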

RAVID: Retrieval-Augmented Visual Detection: A Knowledge-Driven Approach for AI-Generated Image Identification

Aug 05, 2025

Abstract:In this paper, we introduce RAVID, the first framework for AI-generated image detection that leverages visual retrieval-augmented generation (RAG). While RAG methods have shown promise in mitigating factual inaccuracies in foundation models, they have primarily focused on text, leaving visual knowledge underexplored. Meanwhile, existing detection methods, which struggle with generalization and robustness, often rely on low-level artifacts and model-specific features, limiting their adaptability. To address this, RAVID dynamically retrieves relevant images to enhance detection. Our approach utilizes a fine-tuned CLIP image encoder, RAVID CLIP, enhanced with category-related prompts to improve representation learning. We further integrate a vision-language model (VLM) to fuse retrieved images with the query, enriching the input and improving accuracy. Given a query image, RAVID generates an embedding using RAVID CLIP, retrieves the most relevant images from a database, and combines these with the query image to form an enriched input for a VLM (e.g., Qwen-VL or OpenFlamingo). Experiments on the UniversalFakeDetect benchmark, which covers 19 generative models, show that RAVID achieves state-of-the-art performance with an average accuracy of 93.85%. RAVID also outperforms traditional methods in terms of robustness, maintaining high accuracy even under image degradations such as Gaussian blur and JPEG compression. Specifically, RAVID achieves an average accuracy of 80.27% under degradation conditions, compared to 63.44% for the state-of-the-art model C2P-CLIP, demonstrating consistent improvements in both Gaussian blur and JPEG compression scenarios. The code will be publicly available upon acceptance.
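The retrieval step described in this abstract (embed the query, then fetch the most relevant images from a database) can be sketched as a nearest-neighbour search. This is a minimal illustration, not the RAVID code: it assumes cosine similarity as the relevance measure, and the database layout, function names, and toy embeddings are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_embedding, database, k=2):
    """Return the k (score, image_id) pairs most similar to the query.

    `database` is a list of (image_id, embedding) tuples; this layout
    is an assumption made for the example.
    """
    scored = [(cosine_similarity(query_embedding, emb), image_id)
              for image_id, emb in database]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]

# Toy 3-dimensional vectors standing in for image-encoder embeddings.
database = [
    ("img_a", [1.0, 0.0, 0.0]),
    ("img_b", [0.9, 0.1, 0.0]),
    ("img_c", [0.0, 1.0, 0.0]),
]
query = [1.0, 0.05, 0.0]
neighbours = retrieve_top_k(query, database, k=2)
retrieved_ids = [image_id for _, image_id in neighbours]
```

In a pipeline like the one the abstract outlines, the retrieved images would then be passed to the VLM together with the query image; that stage is not shown here.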

SAViL-Det: Semantic-Aware Vision-Language Model for Multi-Script Text Detection

Jul 27, 2025

Abstract:Detecting text in natural scenes remains challenging, particularly for diverse scripts and arbitrarily shaped instances where visual cues alone are often insufficient. Existing methods do not fully leverage semantic context. This paper introduces SAViL-Det, a novel semantic-aware vision-language model that enhances multi-script text detection by effectively integrating textual prompts with visual features. SAViL-Det utilizes a pre-trained CLIP model combined with an Asymptotic Feature Pyramid Network (AFPN) for multi-scale visual feature fusion. The core of the proposed framework is a novel language-vision decoder that adaptively propagates fine-grained semantic information from text prompts to visual features via cross-modal attention. Furthermore, a text-to-pixel contrastive learning mechanism explicitly aligns textual and corresponding visual pixel features. Extensive experiments on challenging benchmarks demonstrate the effectiveness of the proposed approach, achieving state-of-the-art performance with F-scores of 84.8% on the benchmark multi-lingual MLT-2019 dataset and 90.2% on the curved-text CTW1500 dataset.

Recent Advances in Medical Imaging Segmentation: A Survey

May 14, 2025

Abstract:Medical imaging is a cornerstone of modern healthcare, driving advancements in diagnosis, treatment planning, and patient care. Among its various tasks, segmentation remains one of the most challenging problems due to factors such as data accessibility, annotation complexity, structural variability, variation in medical imaging modalities, and privacy constraints. Despite recent progress, achieving robust generalization and domain adaptation remains a significant hurdle, particularly given the resource-intensive nature of some proposed models and their reliance on domain expertise. This survey explores cutting-edge advancements in medical image segmentation, focusing on methodologies such as Generative AI, Few-Shot Learning, Foundation Models, and Universal Models. These approaches offer promising solutions to longstanding challenges. We provide a comprehensive overview of the theoretical foundations, state-of-the-art techniques, and recent applications of these methods. Finally, we discuss inherent limitations, unresolved issues, and future research directions aimed at enhancing the practicality and accessibility of segmentation models in medical imaging. We are maintaining a GitHub repository at https://github.com/faresbougourzi/Awesome-DL-for-Medical-Imaging-Segmentation to continue tracking and updating innovations in this field.

DeeCLIP: A Robust and Generalizable Transformer-Based Framework for Detecting AI-Generated Images

Apr 28, 2025

Abstract:This paper introduces DeeCLIP, a novel framework for detecting AI-generated images using CLIP-ViT and fusion learning. Despite significant advancements in generative models capable of creating highly photorealistic images, existing detection methods often struggle to generalize across different models and are highly sensitive to minor perturbations. To address these challenges, DeeCLIP incorporates DeeFuser, a fusion module that combines high-level and low-level features, improving robustness against degradations such as compression and blurring. Additionally, we apply triplet loss to refine the embedding space, enhancing the model's ability to distinguish between real and synthetic content. To further enable lightweight adaptation while preserving pre-trained knowledge, we adopt parameter-efficient fine-tuning using low-rank adaptation (LoRA) within the CLIP-ViT backbone. This approach supports effective zero-shot learning without sacrificing generalization. Trained exclusively on 4-class ProGAN data, DeeCLIP achieves an average accuracy of 89.00% on 19 test subsets composed of generative adversarial network (GAN) and diffusion models. Despite having fewer trainable parameters, DeeCLIP outperforms existing methods, demonstrating superior robustness against various generative models and real-world distortions. The code is publicly available at https://github.com/Mamadou-Keita/DeeCLIP for research purposes.
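The triplet loss mentioned in this abstract can be written in a few lines. The sketch below shows the standard formulation, max(0, d(anchor, positive) - d(anchor, negative) + margin); the abstract does not state the distance or margin DeeCLIP uses, so Euclidean distance, the margin of 1.0, and the toy embeddings are assumptions.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: pull the positive closer to the anchor
    than the negative by at least `margin` (assumed value)."""
    return max(0.0,
               euclidean(anchor, positive)
               - euclidean(anchor, negative)
               + margin)

# Toy 2-D embeddings: anchor and positive share a class (e.g. both real),
# the negative belongs to the other class (e.g. synthetic).
anchor = [0.0, 0.0]
positive = [0.0, 1.0]

easy_negative = [3.0, 4.0]   # far from the anchor: no penalty
hard_negative = [1.0, 0.0]   # as close as the positive: penalised

easy_loss = triplet_loss(anchor, positive, easy_negative)
hard_loss = triplet_loss(anchor, positive, hard_negative)
```

The easy negative already sits more than one margin farther away than the positive, so it contributes zero loss; the hard negative is as close as the positive and is penalised by the full margin.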

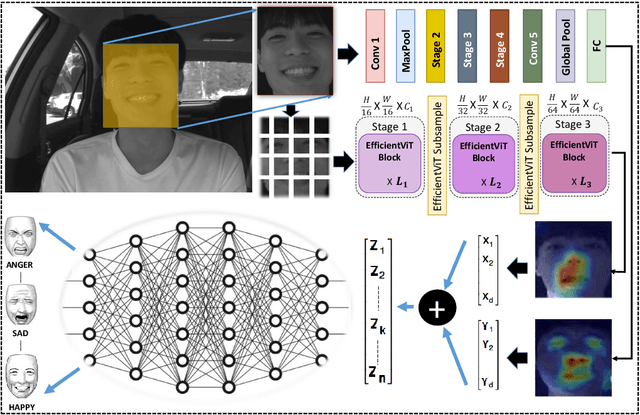

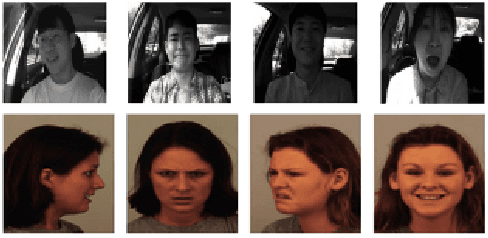

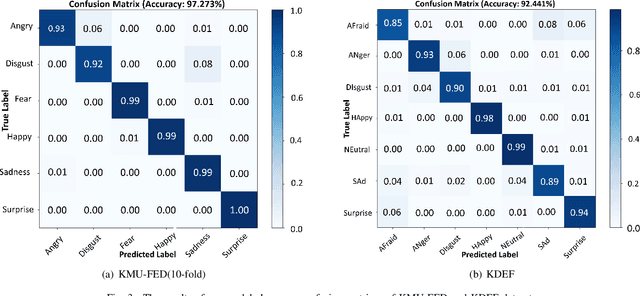

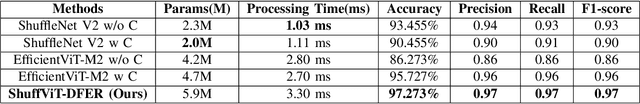

Shuffle Vision Transformer: Lightweight, Fast and Efficient Recognition of Driver Facial Expression

Sep 05, 2024

Abstract:Existing methods for driver facial expression recognition (DFER) are often computationally intensive, rendering them unsuitable for real-time applications. In this work, we introduce a novel transfer learning-based dual architecture, named ShuffViT-DFER, which combines computational efficiency and accuracy. This is achieved by harnessing the strengths of two lightweight and efficient models: a convolutional neural network (CNN) and a vision transformer (ViT). We efficiently fuse the extracted features to improve the model's accuracy in recognizing the driver's facial expressions. Our experimental results on two public benchmark datasets, KMU-FED and KDEF, highlight the validity of our proposed method for real-time applications, with superior performance compared to state-of-the-art methods.

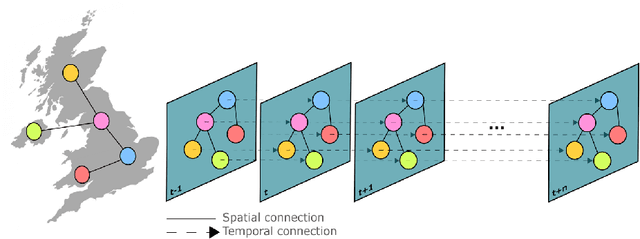

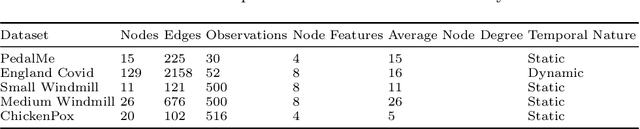

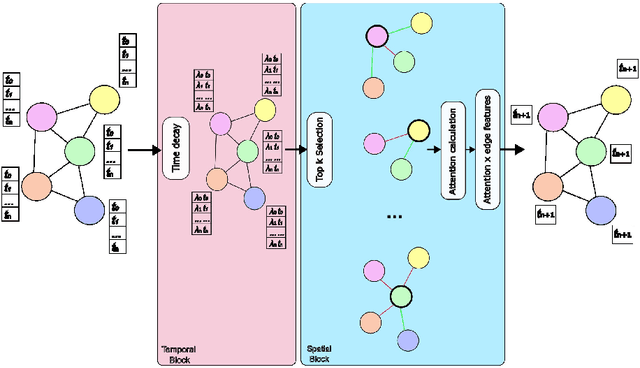

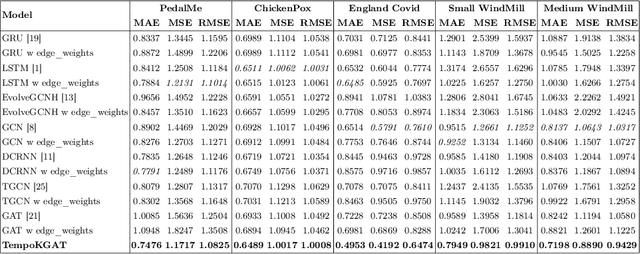

TempoKGAT: A Novel Graph Attention Network Approach for Temporal Graph Analysis

Aug 29, 2024

Abstract:Graph neural networks (GNNs) have shown significant capabilities in handling structured data, yet their application to dynamic, temporal data remains limited. This paper presents a new type of graph attention network, called TempoKGAT, which combines time-decaying weights with a selective neighbor aggregation mechanism in the spatial domain to help uncover latent patterns in graph data. In this approach, a top-k neighbor selection based on the edge weights is introduced to represent the evolving features of the graph data. We evaluated TempoKGAT on multiple open-source spatio-temporal datasets from the traffic, energy, and health sectors and compared it with several state-of-the-art methods from the literature. Our method shows superior accuracy on all datasets. These results indicate that TempoKGAT builds on existing methodologies to optimize prediction accuracy and provide new insights into model interpretation in temporal contexts.
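The two ingredients named in this abstract, time-decaying weights and top-k neighbor selection by edge weight, can be sketched as follows. This is not the TempoKGAT layer: the exponential decay, the edge-weight-normalised averaging (in place of learned attention), and all names and values are assumptions made for illustration.

```python
import math

def time_decayed_feature(history, decay_rate=0.5):
    """Weighted average of a node's past values (newest last), with
    exponentially smaller weights for older time steps (assumed form)."""
    n = len(history)
    weights = [math.exp(-decay_rate * (n - 1 - t)) for t in range(n)]
    total = sum(weights)
    return sum(w * x for w, x in zip(weights, history)) / total

def top_k_neighbours(edge_weights, k):
    """Keep the k neighbours with the largest edge weights."""
    return sorted(edge_weights, key=edge_weights.get, reverse=True)[:k]

def aggregate(node, histories, adjacency, k=2, decay_rate=0.5):
    """Edge-weighted average of the time-decayed features of the
    node's top-k neighbours."""
    selected = top_k_neighbours(adjacency[node], k)
    weights = [adjacency[node][nb] for nb in selected]
    total = sum(weights)
    return sum(w * time_decayed_feature(histories[nb], decay_rate)
               for w, nb in zip(weights, selected)) / total

# Hypothetical graph: node "a" has three neighbours with edge weights.
adjacency = {"a": {"b": 0.9, "c": 0.1, "d": 0.5}}
histories = {"b": [1.0, 1.0, 1.0], "c": [9.0, 9.0, 9.0], "d": [3.0, 3.0, 3.0]}

selected = top_k_neighbours(adjacency["a"], k=2)
message = aggregate("a", histories, adjacency, k=2)
```

With k=2 the weakly connected neighbour "c" is dropped, so its values do not influence the aggregated message for node "a".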

TG-PhyNN: An Enhanced Physically-Aware Graph Neural Network framework for forecasting Spatio-Temporal Data

Aug 29, 2024

Abstract:Accurately forecasting dynamic processes on graphs, such as traffic flow or disease spread, remains a challenge. While Graph Neural Networks (GNNs) excel at modeling and forecasting spatio-temporal data, they often lack the ability to directly incorporate underlying physical laws. This work presents TG-PhyNN, a novel Temporal Graph Physics-Informed Neural Network framework. TG-PhyNN leverages the power of GNNs for graph-based modeling while simultaneously incorporating physical constraints as a guiding principle during training. This is achieved through a two-step prediction strategy that enables the calculation of physical equation derivatives within the GNN architecture. Our findings demonstrate that TG-PhyNN significantly outperforms traditional forecasting models (e.g., GRU, LSTM, GAT) on real-world spatio-temporal datasets like PedalMe (traffic flow), COVID-19 spread, and Chickenpox outbreaks. These datasets are all governed by well-defined physical principles, which TG-PhyNN effectively exploits to offer more reliable and accurate forecasts in various domains where physical processes govern the dynamics of data. This paves the way for improved forecasting in areas like traffic flow prediction, disease outbreak prediction, and potentially other fields where physics plays a crucial role.

Bi-LORA: A Vision-Language Approach for Synthetic Image Detection

Apr 07, 2024

Abstract:Advancements in deep image synthesis techniques, such as generative adversarial networks (GANs) and diffusion models (DMs), have ushered in an era of generating highly realistic images. While this technological progress has captured significant interest, it has also raised concerns about the potential difficulty in distinguishing real images from their synthetic counterparts. This paper takes inspiration from the potent convergence capabilities between vision and language, coupled with the zero-shot nature of vision-language models (VLMs). We introduce an innovative method called Bi-LORA that leverages VLMs, combined with low-rank adaptation (LORA) tuning techniques, to enhance the precision of synthetic image detection for unseen model-generated images. The pivotal conceptual shift in our methodology is to reframe binary classification as an image captioning task, leveraging the distinctive capabilities of a cutting-edge VLM, namely bootstrapping language-image pre-training (BLIP2). Rigorous and comprehensive experiments are conducted to validate the effectiveness of our proposed approach, particularly in detecting images from diffusion-based generative models unseen during training, showcasing robustness to noise and demonstrating generalization to GANs. The results show an average accuracy of 93.41% in synthetic image detection on unseen generation models. The code and models associated with this research can be publicly accessed at https://github.com/Mamadou-Keita/VLM-DETECT.
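Low-rank adaptation, which this abstract relies on, keeps a pretrained weight matrix W frozen and learns a low-rank update, so the effective weight is W + (alpha / r) · B · A. The sketch below shows that arithmetic in plain Python; it is not the Bi-LORA code, and the matrix sizes, rank, and alpha are illustrative assumptions.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_effective_weight(w, a, b, alpha, rank):
    """Frozen weight plus the scaled low-rank update (alpha / rank) * B @ A."""
    delta = matmul(b, a)            # (d_out x r) @ (r x d_in)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]

def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in the two low-rank factors."""
    return rank * (d_in + d_out)

# Toy example: 2x2 frozen weight with a rank-1 update.
frozen_w = [[1.0, 0.0],
            [0.0, 1.0]]
factor_b = [[1.0],
            [0.0]]                  # d_out x r
factor_a = [[0.0, 2.0]]             # r x d_in
adapted_w = lora_effective_weight(frozen_w, factor_a, factor_b,
                                  alpha=1.0, rank=1)

# Illustrative sizes only: a 768x768 layer adapted with rank 8.
full_params = 768 * 768
lora_params = lora_param_count(768, 768, rank=8)
```

For the illustrative 768x768 layer, the rank-8 factors hold 12,288 trainable values against 589,824 in the full matrix, which is why this style of tuning is described as parameter-efficient.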

Knowledge-Based Convolutional Neural Network for the Simulation and Prediction of Two-Phase Darcy Flows

Apr 04, 2024

Abstract:Physics-informed neural networks (PINNs) have gained significant prominence as a powerful tool in the field of scientific computing and simulations. Their ability to seamlessly integrate physical principles into deep learning architectures has revolutionized the approaches to solving complex problems in physics and engineering. However, a persistent challenge faced by mainstream PINNs lies in their handling of discontinuous input data, leading to inaccuracies in predictions. This study addresses these challenges by incorporating the discretized forms of the governing equations into the PINN framework. We propose to combine the power of neural networks with the dynamics imposed by the discretized differential equations. By discretizing the governing equations, the PINN learns to account for the discontinuities and accurately capture the underlying relationships between inputs and outputs, improving the accuracy compared to traditional interpolation techniques. Moreover, by leveraging the power of neural networks, the computational cost associated with numerical simulations is substantially reduced. We evaluate our model on a large-scale dataset for the prediction of pressure and saturation fields, demonstrating high accuracy compared to non-physically aware models.
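The idea of penalising the residual of a discretized governing equation can be illustrated on a much simpler problem than the paper's. The sketch below uses steady single-phase 1-D Darcy flow, d/dx(k dp/dx) = 0, on a unit-spaced grid with harmonic-mean transmissibilities, which handle a discontinuous permeability field. The simplified equation, the grid, and all names are assumptions and do not reproduce the two-phase model in the paper.

```python
def transmissibility(k_left, k_right):
    """Harmonic mean of neighbouring cell permeabilities (unit spacing)."""
    return 2.0 * k_left * k_right / (k_left + k_right)

def darcy_residuals(pressure, permeability):
    """Flux imbalance at each interior cell of a 1-D grid."""
    residuals = []
    for i in range(1, len(pressure) - 1):
        t_left = transmissibility(permeability[i - 1], permeability[i])
        t_right = transmissibility(permeability[i], permeability[i + 1])
        residuals.append(t_right * (pressure[i + 1] - pressure[i])
                         - t_left * (pressure[i] - pressure[i - 1]))
    return residuals

def physics_loss(pressure, permeability):
    """Mean squared residual, usable as a penalty on predicted pressures."""
    res = darcy_residuals(pressure, permeability)
    return sum(r * r for r in res) / len(res)

# Permeability jumps from 1 to 4 in the middle of the grid.
permeability = [1.0, 1.0, 4.0, 4.0]

consistent_pressure = [15.0, 7.0, 2.0, 0.0]   # equal flux across every face
naive_pressure = [3.0, 2.0, 1.0, 0.0]         # linear profile ignores the jump

loss_consistent = physics_loss(consistent_pressure, permeability)
loss_naive = physics_loss(naive_pressure, permeability)
```

The piecewise-linear profile that conserves flux across the permeability jump has an essentially zero residual, while the straight-line profile is penalised; a network trained with such a term is pushed toward the former.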
