Towards Trainable Saliency Maps in Medical Imaging

Nov 15, 2020

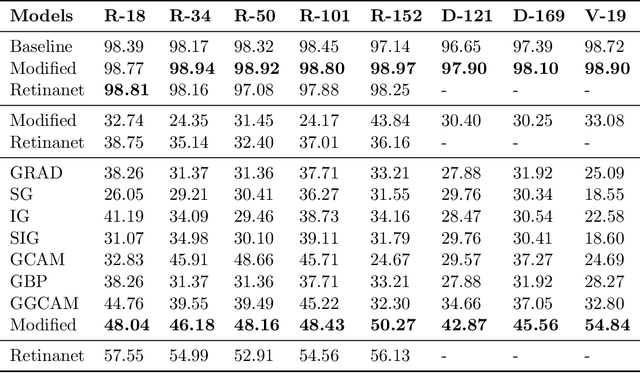

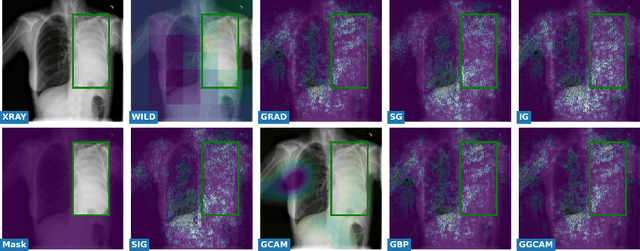

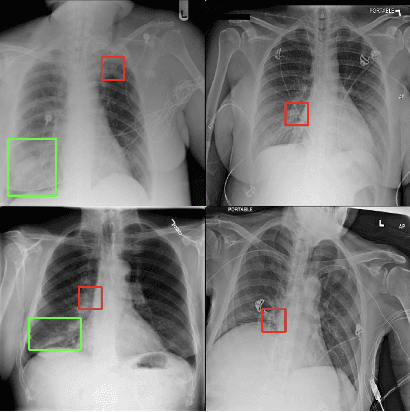

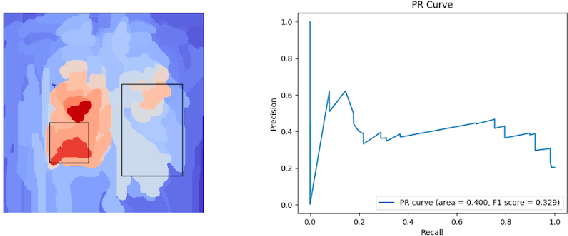

While the success of deep learning (DL) in automated diagnosis could transform medical practice, especially for people with little or no access to doctors, its widespread acceptance is severely limited by inherently black-box decision making and unsafe failure modes. Saliency methods attempt to tackle this problem in non-medical contexts, but their a priori explanations do not transfer well to medical use cases. In this study we validate a model design element that is agnostic to both architecture complexity and model task, and show how introducing this element yields an inherently self-explanatory model. We compare our results with state-of-the-art non-trainable saliency maps on the RSNA Pneumonia Dataset and demonstrate much higher localization efficacy using our adopted technique. We also compare with a fully supervised baseline and provide a reasonable alternative to its high data labelling overhead. We further investigate the validity of our claims through qualitative evaluation by an expert reader.
