Themes Inferred Audio-visual Correspondence Learning


Sep 14, 2020

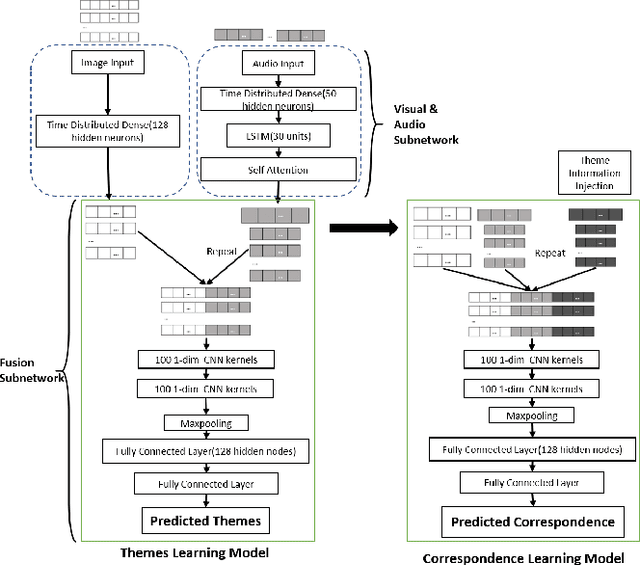

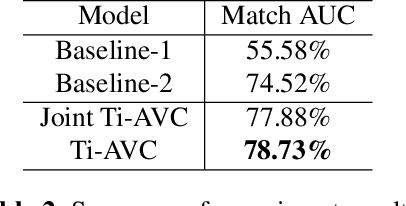

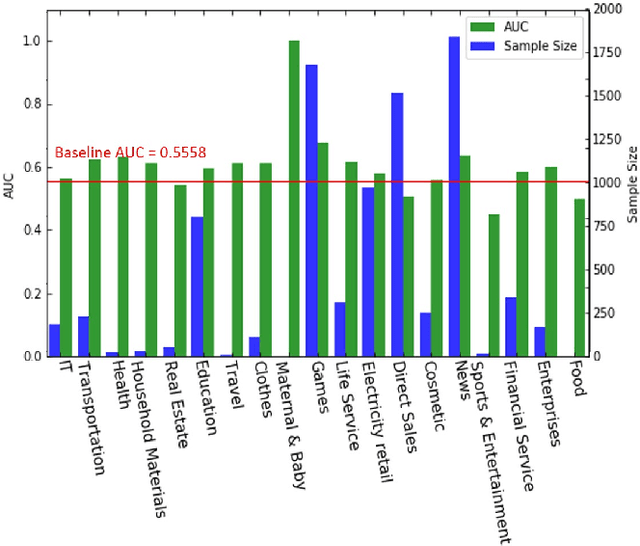

Applications of short-term user-generated video (UGV), such as Snapchat and YouTube short-term videos, have boomed recently, raising many multimodal machine learning tasks. Among them, learning the correspondence between audio and visual information from videos is a challenging one. Most previous work on audio-visual correspondence (AVC) learning investigated only constrained videos or simple settings, which may not fit the application of UGV. In this paper, we propose new principles for AVC and introduce a new framework that focuses on the themes of videos to facilitate AVC learning. We also release the KWAI-AD-AudVis corpus, which contains 85,432 short advertisement videos (around 913 hours) made by users. We evaluated our proposed approach on this corpus, and it outperformed the baseline by 23.15% absolute difference.
