PanoFlow: Learning Optical Flow for Panoramic Images


Feb 27, 2022

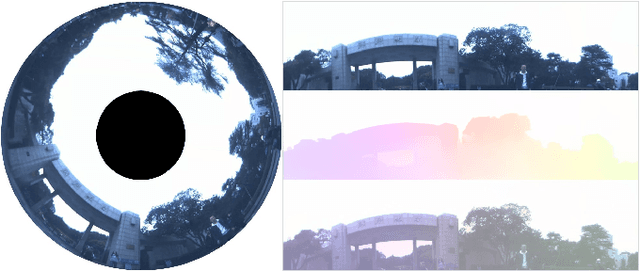

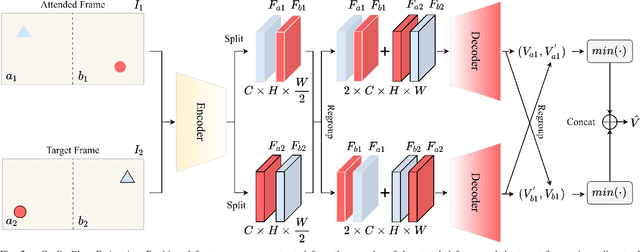

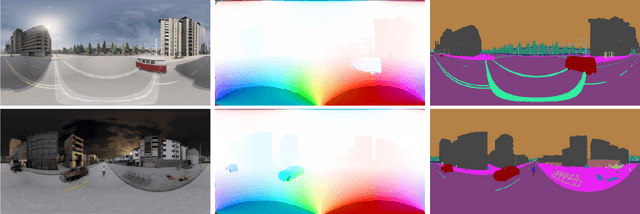

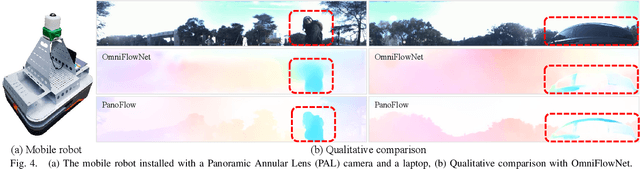

Optical flow estimation is a basic task in self-driving and robotics systems, enabling temporal interpretation of traffic scenes. Autonomous vehicles clearly benefit from the ultra-wide Field of View (FoV) offered by 360-degree panoramic sensors. However, because of the unique imaging process of panoramic images, models designed for pinhole images do not generalize satisfactorily to 360-degree panoramic images. In this paper, we put forward a novel network framework, PanoFlow, to learn optical flow for panoramic images. To overcome the distortions introduced by equirectangular projection in the panoramic transformation, we design a Flow Distortion Augmentation (FDA) method. We further propose a Cyclic Flow Estimation (CFE) method that leverages the cyclicity of spherical images to infer 360-degree optical flow and to convert large displacements into relatively small ones. PanoFlow is applicable to any existing flow estimation method and benefits from progress in narrow-FoV flow estimation. In addition, we create and release Flow360, a synthetic panoramic dataset based on CARLA, to facilitate training and quantitative analysis. PanoFlow achieves state-of-the-art performance: it reduces the End-Point-Error (EPE) on the established Flow360 dataset by 26%, and on the public OmniFlowNet dataset it achieves an EPE of 3.34 pixels, a 53.1% error reduction from the best published result (7.12 pixels). We also validate our method with an outdoor collection vehicle, indicating strong potential and robustness for real-world navigation applications. Code and dataset are publicly available at https://github.com/MasterHow/PanoFlow.
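The idea of converting large displacements into small ones through cyclicity can be illustrated with a short sketch. The abstract does not spell out how CFE is implemented, so the code below is not the authors' method. It only shows the underlying geometric fact: on an equirectangular image the left and right borders are adjacent, so a horizontal displacement of `u` pixels is equivalent to `u` plus or minus the image width. The function name `wrap_horizontal_flow` and the choice of the interval `[-width/2, width/2)` are assumptions made for illustration.

```python
def wrap_horizontal_flow(u, width):
    """Map a horizontal displacement to its shortest cyclic equivalent.

    Hypothetical helper, not taken from the PanoFlow code base.
    `u` is a horizontal flow value in pixels and `width` is the width
    of the equirectangular image in pixels. Because the panorama wraps
    around, u and u +/- width point at the same target column, so we
    return the representative that lies in [-width / 2, width / 2).
    """
    half = width / 2.0
    # Python's % returns a non-negative result for a positive modulus,
    # so the shifted value always lands in [0, width).
    return (u + half) % width - half


if __name__ == "__main__":
    # Illustrative values only: a 360-pixel-wide panorama (1 px per degree).
    for u in (350.0, -350.0, 10.0):
        print(u, "->", wrap_horizontal_flow(u, 360))
```

Under these assumptions, a motion that appears as 350 pixels to the right on a 360-pixel-wide panorama is the same as a motion of 10 pixels to the left across the image seam, which is the kind of reduction from large to small displacement that the abstract describes.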
