Meta-Learning for Neural Relation Classification with Distant Supervision

Paper and Code

Oct 26, 2020

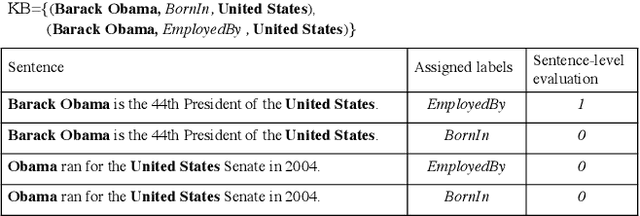

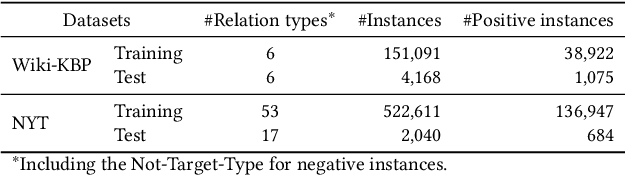

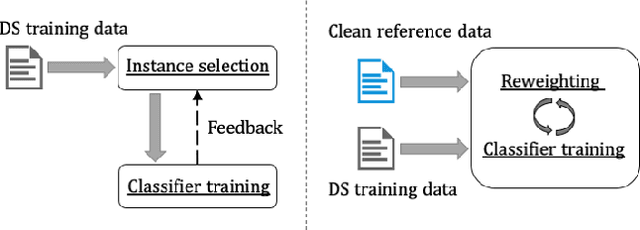

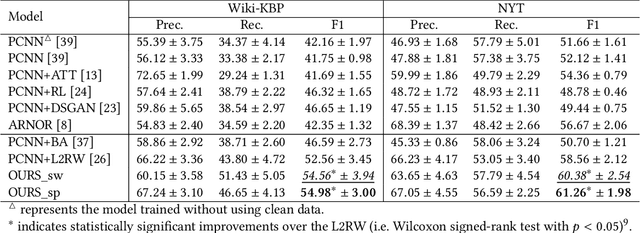

Distant supervision provides a means to create a large amount of weakly labeled data at low cost for relation classification. However, the resulting labeled instances are very noisy and contain data with wrong labels. Many approaches have been proposed to select a subset of reliable instances for neural model training, but they still suffer from the noisy labeling problem or underutilize the weakly labeled data. To better select reliable training instances, we introduce a small amount of manually labeled data as a reference to guide the selection process. In this paper, we propose a meta-learning based approach that learns to reweight noisy training data under the guidance of reference data. As the clean reference data is usually very small, we propose to augment it by dynamically distilling the most reliable elite instances from the noisy data. Experiments on several datasets demonstrate that the reference data can effectively guide the selection of training data, and that our augmented approach consistently improves the performance of relation classification compared to existing state-of-the-art methods.
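To make the reweighting idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes a binary logistic-regression classifier in place of a neural relation classifier and swaps in a common one-step gradient-alignment rule: a noisy instance receives a positive weight only when its loss gradient points in the same direction as the mean gradient on the clean reference set. All names (`reweight`, `train_step`, `select_elite`) and the top-k rule for picking elite instances are hypothetical, chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def per_example_grads(theta, X, y):
    # Gradient of the logistic loss for each example: (p - y) * x.
    return (sigmoid(X @ theta) - y)[:, None] * X


def reweight(theta, X_noisy, y_noisy, X_ref, y_ref):
    # Assumption: weight = clipped dot product between each noisy-example
    # gradient and the mean reference gradient, normalised to sum to 1.
    g_noisy = per_example_grads(theta, X_noisy, y_noisy)
    g_ref = per_example_grads(theta, X_ref, y_ref).mean(axis=0)
    w = np.maximum(0.0, g_noisy @ g_ref)
    total = w.sum()
    return w / total if total > 0 else w


def train_step(theta, X_noisy, y_noisy, X_ref, y_ref, lr=0.5):
    # One weighted gradient step on the noisy data.
    w = reweight(theta, X_noisy, y_noisy, X_ref, y_ref)
    grads = per_example_grads(theta, X_noisy, y_noisy)
    return theta - lr * (w[:, None] * grads).sum(axis=0), w


def select_elite(w, k):
    # Hypothetical elite selection: indices of the k highest-weighted
    # noisy instances, candidates for augmenting the reference set.
    return np.argsort(-w)[:k]
```

In this sketch, a noisy instance whose label contradicts the reference data gets weight zero and does not affect the update, while instances that agree with the reference data drive training and become candidates for the elite set.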
