Less is More: Pre-training a Strong Siamese Encoder Using a Weak Decoder

Feb 18, 2021

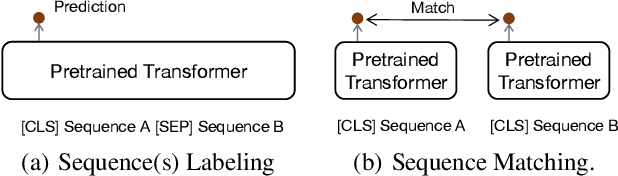

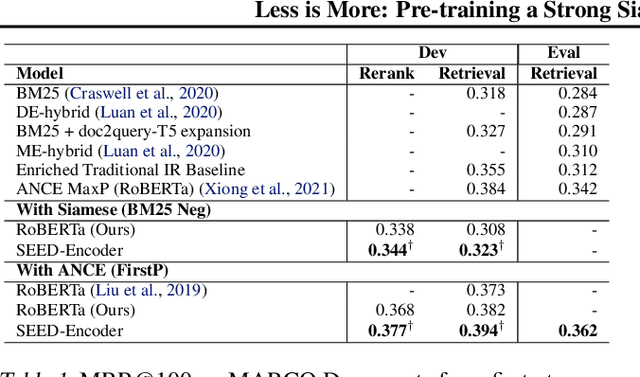

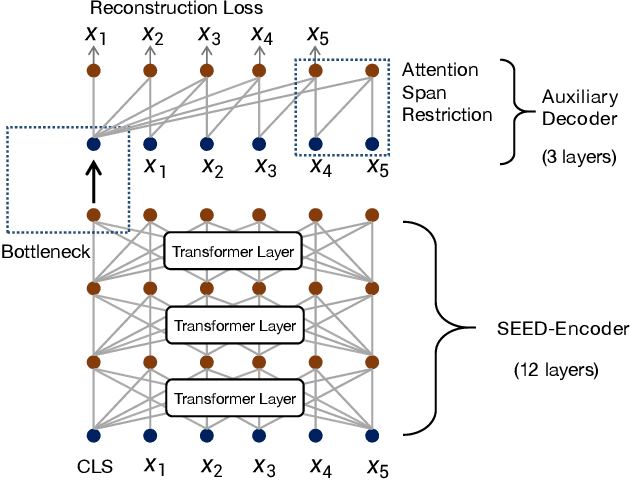

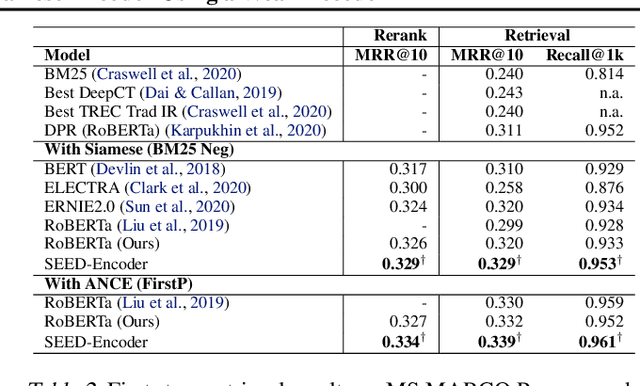

Many real-world applications use Siamese networks to efficiently match text sequences at scale, which requires high-quality sequence encodings. This paper pre-trains language models dedicated to sequence matching in Siamese architectures. We first hypothesize that a representation is better for sequence matching if the entire sequence can be reconstructed from it. This, however, is unlikely to be achieved in standard autoencoders: a strong decoder can rely on its own capacity and on natural language patterns to reconstruct the sequence, bypassing the need for better sequence encodings. Therefore, we propose a new self-learning method that pre-trains the encoder with a weak decoder, which reconstructs the original sequence from the encoder's [CLS] representations but is restricted in both capacity and attention span. In our experiments on web search and recommendation, the pre-trained SEED-Encoder, "SiamEsE oriented encoder by reconstructing from weak decoder", shows significantly better generalization ability when fine-tuned in Siamese networks, improving overall accuracy and few-shot performance. Our code and models will be released.
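
To make the "restricted attention span" idea concrete, the following is a minimal sketch of one way such a restriction could be expressed as a decoder attention mask. It is an illustration under stated assumptions, not the paper's implementation: the function name `restricted_span_mask`, the choice to place the [CLS] representation at position 0, and the exact window rule are all hypothetical.

```python
from typing import List


def restricted_span_mask(seq_len: int, span: int) -> List[List[bool]]:
    """Return mask[i][j] == True when decoder position i may attend to j.

    Assumptions (hypothetical, not taken from the paper):
      * position 0 holds the encoder's [CLS] representation and is
        visible from every decoder position;
      * every other position is visible only causally (j <= i) and only
        within a window of `span` positions ending at i.
    """
    if span < 1:
        raise ValueError("span must be at least 1")
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            causal = j <= i          # never look at future tokens
            in_window = i - j < span  # only the most recent `span` tokens
            row.append(j == 0 or (causal and in_window))
        mask.append(row)
    return mask


# Example: 5 positions, each seeing [CLS] plus a window of 2 tokens.
example = restricted_span_mask(5, 2)
```

With a small `span`, a decoder at position `i` cannot recover distant tokens from its own context, so under these assumptions the only route to that information is the [CLS] slot, which is the pressure on the encoder that the abstract describes.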
