Exploring Contextual Relationships for Cervical Abnormal Cell Detection


Jul 18, 2022

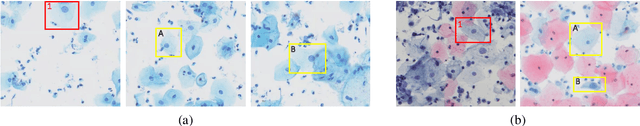

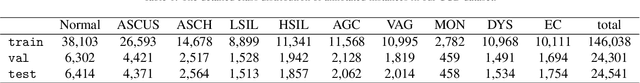

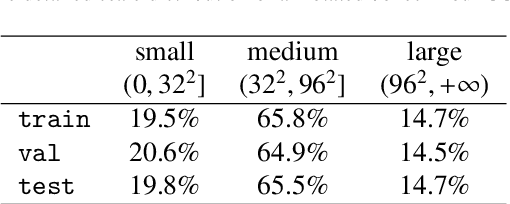

Cervical abnormal cell detection is challenging because the morphological differences between abnormal and normal cells are usually subtle. To determine whether a cervical cell is normal or abnormal, cytopathologists typically use surrounding cells as references. To mimic this behavior, we propose to explore contextual relationships to boost the performance of cervical abnormal cell detection. Specifically, both cell-to-cell and cell-to-global-image contextual relationships are exploited to enhance the features of each region of interest (RoI) proposal. Accordingly, two modules, the RoI-relationship attention module (RRAM) and the global RoI attention module (GRAM), are developed, and their combination strategies are also investigated. We establish a strong baseline using Double-Head Faster R-CNN with a feature pyramid network (FPN) and integrate RRAM and GRAM into it to validate the effectiveness of the proposed modules. Experiments on a large cervical cell detection dataset show that introducing either RRAM or GRAM achieves better average precision (AP) than the baseline methods. Moreover, when RRAM and GRAM are cascaded, our method outperforms the state-of-the-art (SOTA) methods. Furthermore, we show that the proposed feature-enhancing scheme can facilitate both image-level and smear-level classification. The code and trained models are publicly available at https://github.com/CVIU-CSU/CR4CACD.
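To make the feature-enhancing idea concrete, here is a minimal sketch of attention over RoI features in plain Python. It is not the authors' implementation: the function names (`attend`, `rram_like`, `gram_like`, `cascade`) are hypothetical, and the choices made here are all assumptions for illustration (no learned projections, a residual sum, and RRAM applied before GRAM in the cascade). The repository linked above contains the actual modules.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(queries, contexts):
    """Scaled dot-product attention with a residual connection.

    Each query vector is enhanced by adding a softmax-weighted
    average of the context vectors (assumption: no learned weights).
    """
    dim = len(queries[0])
    enhanced = []
    for q in queries:
        weights = softmax([dot(q, c) / math.sqrt(dim) for c in contexts])
        mixed = [sum(w * c[i] for w, c in zip(weights, contexts))
                 for i in range(dim)]
        enhanced.append([qi + mi for qi, mi in zip(q, mixed)])
    return enhanced


def rram_like(roi_feats):
    """Cell-to-cell context: every RoI attends to all RoIs."""
    return attend(roi_feats, roi_feats)


def gram_like(roi_feats, global_feats):
    """Cell-to-global context: every RoI attends to global image features."""
    return attend(roi_feats, global_feats)


def cascade(roi_feats, global_feats):
    """Assumed cascade order: RoI-to-RoI attention, then RoI-to-global."""
    return gram_like(rram_like(roi_feats), global_feats)


# Toy inputs: three RoI feature vectors and two global feature vectors.
rois = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
global_tokens = [[0.5, 0.5], [0.2, 0.8]]
enhanced_rois = cascade(rois, global_tokens)
```

In this sketch each enhanced RoI keeps its original feature and adds context from its neighbors and from the whole image, which mirrors how a cytopathologist compares a cell against its surroundings.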
