Decoupling Feature Representations of Ego and Other Modalities for Incomplete Multi-modal Brain Tumor Segmentation


Aug 16, 2024

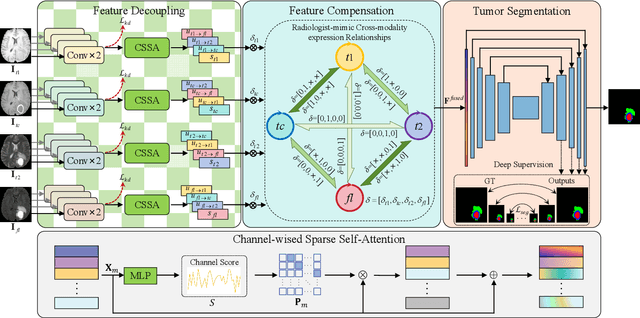

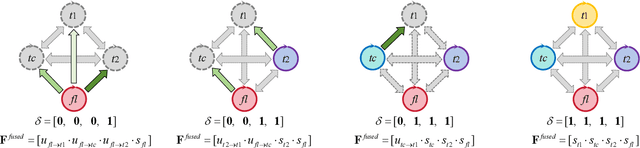

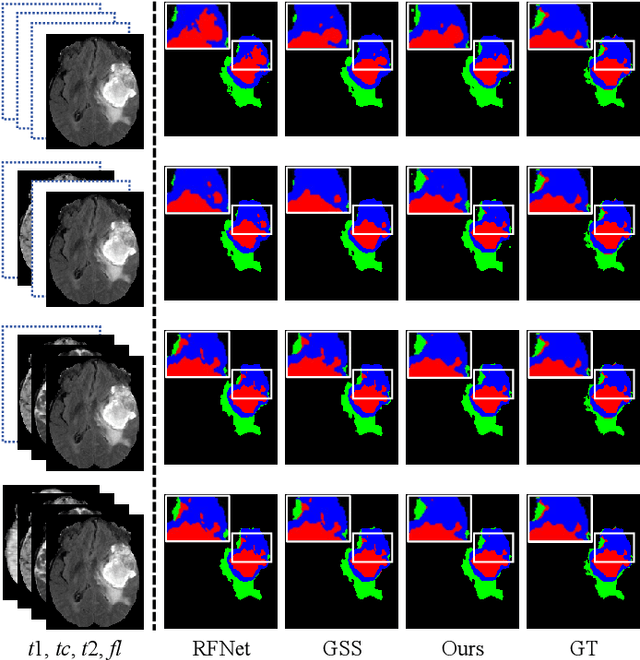

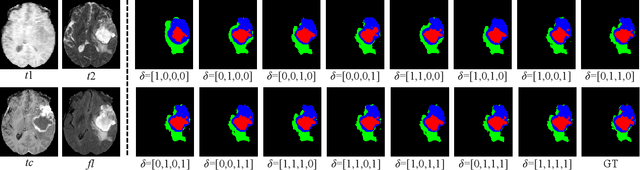

Multi-modal brain tumor segmentation typically relies on four magnetic resonance imaging (MRI) modalities, and performance degrades significantly when some of them are missing. Existing solutions employ explicit or implicit modality adaptation, either aligning features across modalities or learning a fused feature that is robust to modality incompleteness. They share a common goal: encouraging each modality to express both itself and the others. However, these two expression abilities are entangled in a single feature space, which imposes a prohibitive learning burden. In this paper, we propose DeMoSeg, which enhances modality adaptation by Decoupling the task of representing the ego and other Modalities for robust incomplete multi-modal Segmentation. The decoupling is lightweight: two convolutions map each modality onto four feature sub-spaces. The first sub-space expresses the modality itself (Self-feature), while the remaining three sub-spaces substitute for the other modalities (Mutual-features). The Self- and Mutual-features interactively guide each other through a carefully designed Channel-wised Sparse Self-Attention (CSSA). After that, a Radiologist-mimic Cross-modality expression Relationships (RCR) module exploits clinical prior knowledge so that available modalities provide their Self-features and also "lend" their Mutual-features to compensate for the absent ones. Benchmark results on BraTS2020, BraTS2018 and BraTS2015 verify DeMoSeg's superiority, which we attribute to the reduced difficulty of modality adaptation. Concretely, on BraTS2020, DeMoSeg increases Dice by at least 0.92%, 2.95% and 4.95% on the whole tumor, tumor core and enhanced tumor regions, respectively, compared with other state-of-the-art methods. Code is available at https://github.com/kk42yy/DeMoSeg
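The Self-/Mutual-feature idea can be illustrated with a toy sketch. This is not the DeMoSeg implementation: the function names `decouple` and `assemble` are hypothetical, plain channel slicing stands in for the two learned convolutions, CSSA is omitted, and a simple average over all available lenders stands in for the RCR rules, whose clinical priors are not specified in the abstract. The modality names are the four standard BraTS sequences.

```python
from statistics import fmean

# The four BraTS MRI modalities.
MODALITIES = ("FLAIR", "T1", "T1ce", "T2")


def decouple(name, channels):
    """Split one modality's feature into four sub-spaces.

    Assumption: slicing a flat channel list replaces the learned
    convolutions. The first chunk is the Self-feature (keyed by the
    modality's own name); the other three are Mutual-features, one per
    remaining modality.
    """
    n = len(channels) // 4
    chunks = [channels[i * n:(i + 1) * n] for i in range(4)]
    order = [name] + [m for m in MODALITIES if m != name]
    return dict(zip(order, chunks))


def assemble(available):
    """Build a feature for every modality from the available ones.

    Assumption: a missing modality is filled by averaging the matching
    Mutual-features of all available modalities. The paper's RCR uses
    clinical prior knowledge to decide who lends to whom instead.
    """
    if not available:
        raise ValueError("at least one modality must be available")
    decoupled = {m: decouple(m, f) for m, f in available.items()}
    fused = {}
    for target in MODALITIES:
        if target in decoupled:
            # Available modality: use its own Self-feature.
            fused[target] = decoupled[target][target]
        else:
            # Missing modality: borrow Mutual-features from the others.
            lenders = [d[target] for d in decoupled.values()]
            fused[target] = [fmean(vals) for vals in zip(*lenders)]
    return fused


if __name__ == "__main__":
    # Only FLAIR and T2 are present; T1 and T1ce are filled by lending.
    scans = {
        "FLAIR": [1, 2, 3, 4, 5, 6, 7, 8],
        "T2": [10, 20, 30, 40, 50, 60, 70, 80],
    }
    for modality, feature in assemble(scans).items():
        print(modality, feature)
```

Whatever subset of modalities is present, the downstream network always receives four feature slots, which is the property the decoupling is meant to provide.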
