Characterizing Attacks on Deep Reinforcement Learning


Jul 24, 2019

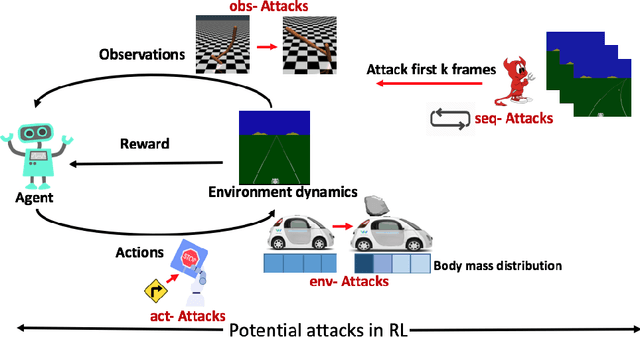

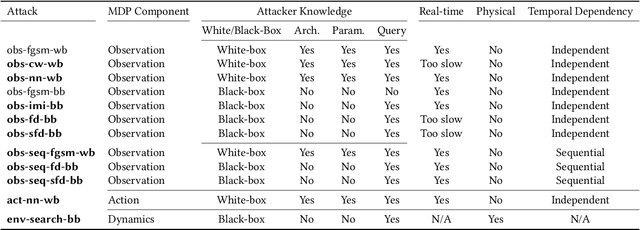

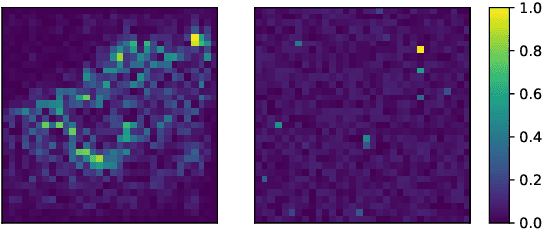

Deep reinforcement learning (DRL) has achieved great success in various applications. However, recent studies show that machine learning models are vulnerable to adversarial attacks, and DRL models have been attacked by adding perturbations to their observations. Such observation-based attacks are only one aspect of the potential attacks on DRL; other, more practical forms of attack, such as manipulating environment dynamics, require further analysis. We therefore propose to understand the vulnerabilities of DRL from various perspectives and provide a thorough taxonomy of potential attacks. We conduct the first set of experiments on the unexplored parts of the taxonomy. In addition to existing observation-based attacks against DRL, we propose the first targeted attacks based on the action space and environment dynamics. We also introduce online sequential attacks based on temporal consistency information among frames. To better estimate the gradient in the black-box setting, we propose a sampling strategy and theoretically prove its efficiency and estimation error bound. We conduct extensive experiments to compare the effectiveness of different attacks with several baselines in various environments, including game playing, robotics control, and autonomous driving.
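To make the black-box setting concrete, the following is a minimal sketch of one generic way to estimate a gradient from loss queries alone and use it to perturb an observation. It is not the sampling strategy proposed in the paper: it uses plain central finite differences on a uniformly sampled subset of coordinates, followed by a sign step. The names `estimate_gradient`, `perturb_observation`, `toy_loss`, and `WEIGHTS` are hypothetical, and the linear toy loss stands in for whatever attack objective would be queried on a real DRL policy.

```python
import random

def estimate_gradient(loss_fn, obs, num_samples, delta=1e-3, seed=0):
    """Estimate d(loss)/d(obs) using only loss queries (black-box).

    Assumption: coordinates are sampled uniformly at random; unsampled
    coordinates keep a gradient estimate of 0.0.
    """
    rng = random.Random(seed)
    n = len(obs)
    grad = [0.0] * n
    for i in rng.sample(range(n), min(num_samples, n)):
        plus = list(obs)
        minus = list(obs)
        plus[i] += delta
        minus[i] -= delta
        # Central finite difference along coordinate i (two queries).
        grad[i] = (loss_fn(plus) - loss_fn(minus)) / (2.0 * delta)
    return grad

def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)

def perturb_observation(loss_fn, obs, epsilon, num_samples, seed=0):
    """Take one sign step of size epsilon along the estimated gradient."""
    grad = estimate_gradient(loss_fn, obs, num_samples, seed=seed)
    return [o + epsilon * sign(g) for o, g in zip(obs, grad)]

# Hypothetical stand-in for an attack objective: a linear loss whose true
# gradient is WEIGHTS, so the estimate can be checked by eye.
WEIGHTS = [0.5, -2.0, 1.5, 0.0]

def toy_loss(obs):
    return sum(w * o for w, o in zip(WEIGHTS, obs))

if __name__ == "__main__":
    clean = [0.0, 0.0, 0.0, 0.0]
    print(estimate_gradient(toy_loss, clean, num_samples=4))
    print(perturb_observation(toy_loss, clean, epsilon=0.1, num_samples=4))
```

Each sampled coordinate costs two loss queries, so `num_samples` trades query budget against how much of the gradient is estimated; the coordinates that are never sampled are left unperturbed.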
