AutoFIS: Automatic Feature Interaction Selection in Factorization Models for Click-Through Rate Prediction

Paper and Code

Mar 26, 2020

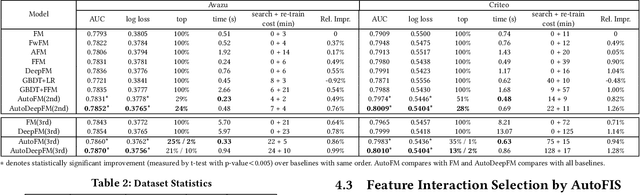

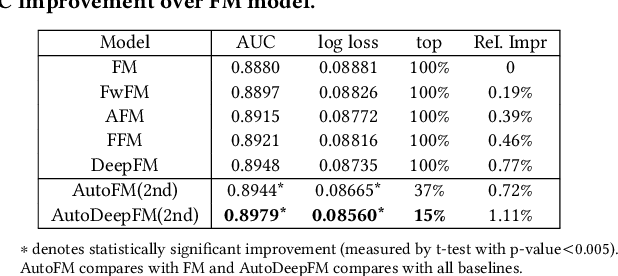

Learning effective feature interactions is crucial for click-through rate (CTR) prediction tasks in recommender systems. In most of the existing deep learning models, feature interactions are either manually designed or simply enumerated. However, enumerating all feature interactions brings large memory and computation cost. Even worse, useless interactions may introduce unnecessary noise and complicate the training process. In this work, we propose a two-stage algorithm called Automatic Feature Interaction Selection (AutoFIS). AutoFIS can automatically identify all the important feature interactions for factorization models with just the computational cost equivalent to training the target model to convergence. In the \emph{search stage}, instead of searching over a discrete set of candidate feature interactions, we relax the choices to be continuous by introducing the architecture parameters. By implementing a regularized optimizer over the architecture parameters, the model can automatically identify and remove the redundant feature interactions during the training process of the model. In the \emph{re-train stage}, we keep the architecture parameters serving as an attention unit to further boost the performance. Offline experiments on three large-scale datasets (two public benchmarks, one private) demonstrate that the proposed AutoFIS can significantly improve various FM based models. AutoFIS has been deployed onto the training platform of Huawei App Store recommendation service, where a 10-day online A/B test demonstrated that AutoFIS improved the DeepFM model by 20.3\% and 20.1\% in terms of CTR and CVR respectively.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge