Zhuomin Chai

PDNNet: PDN-Aware GNN-CNN Heterogeneous Network for Dynamic IR Drop Prediction

Mar 27, 2024

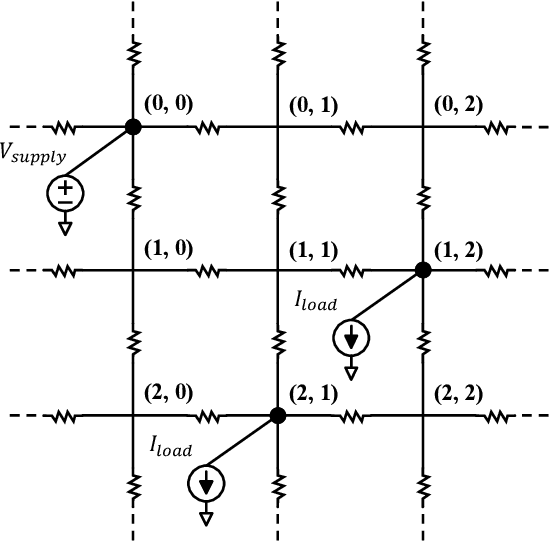

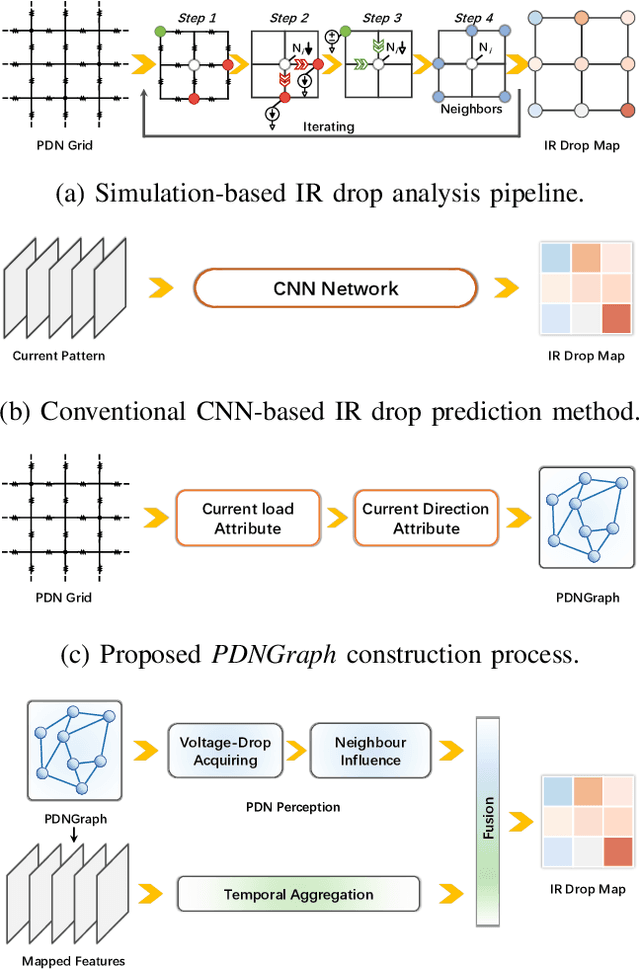

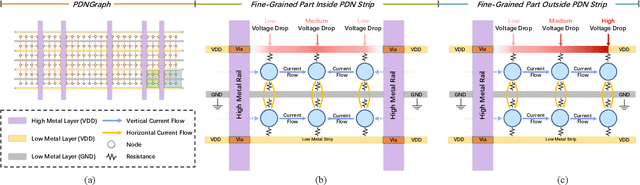

Abstract: IR drop on the power delivery network (PDN) is closely related to the PDN's configuration and cell current consumption. As integrated circuit (IC) designs grow larger, dynamic IR drop simulation becomes computationally unaffordable, and machine-learning-based IR drop prediction has been explored as a promising solution. Although CNN-based methods have been adapted to the IR drop prediction task in several works, the shortcoming of overlooking the PDN configuration is non-negligible. In this paper, we consider not only how to properly represent the cell-PDN relation, but also how to model IR drop following its physical nature in the feature aggregation procedure. Thus, we propose a novel graph structure, PDNGraph, to unify the representations of the PDN structure and the fine-grained cell-PDN relation. We further propose a dual-branch heterogeneous network, PDNNet, incorporating two parallel GNN-CNN branches to favorably capture the above features during the learning process. Several key designs are presented to make the dynamic IR drop prediction highly effective and interpretable. This is the first work to apply a graph structure to deep-learning-based dynamic IR drop prediction. Experiments show that PDNNet outperforms state-of-the-art CNN-based methods by up to a 39.3% reduction in prediction error and achieves a 545x speedup compared to the commercial tool, demonstrating the superiority of our method.

HybridNet: Dual-Branch Fusion of Geometrical and Topological Views for VLSI Congestion Prediction

May 07, 2023

Abstract: Accurate early congestion prediction can prevent unpleasant surprises at the routing stage, playing a crucial role in helping designers iterate faster in VLSI design cycles. In this paper, we introduce a novel strategy to fully incorporate the topological and geometrical features of circuits by making several key designs in our network architecture. To be more specific, we construct two individual graphs (geometry-graph, topology-graph) with distinct edge construction schemes according to their unique properties. We then propose a dual-branch network with different encoder layers in each pathway and aggregate representations with a sophisticated fusion strategy. Our network, named HybridNet, not only provides a simple yet effective way to capture the geometric interactions of cells, but also preserves the original topological relationships in the netlist. Experimental results on the ISPD2015 benchmarks show that we achieve an improvement of 10.9% compared to previous methods.

CircuitNet: An Open-Source Dataset for Machine Learning Applications in Electronic Design Automation

Aug 04, 2022

Abstract: The electronic design automation (EDA) community has been actively exploring machine learning for very-large-scale-integrated computer-aided design (VLSI CAD). Many studies have explored learning-based techniques for cross-stage prediction tasks in the design flow to achieve faster design convergence. Although building machine learning (ML) models usually requires a large amount of data, most studies can only generate small internal datasets for validation due to the lack of large public datasets. In this paper, we present the first open-source dataset for machine learning tasks in VLSI CAD, called CircuitNet. The dataset consists of more than 10K samples extracted from versatile runs of commercial design tools based on 6 open-source RISC-V designs.
