Zhuang Miao

Paying more attention to local contrast: improving infrared small target detection performance via prior knowledge

Nov 20, 2024

Abstract:Data-driven methods for infrared small target detection (IRSTD) have achieved promising results. However, because infrared small target datasets are small and the targets themselves occupy only a few pixels, it is challenging for deep learning methods to learn directly from these samples. Using human expert knowledge to help deep learning methods learn better is worth exploring. To effectively guide the model to focus on targets' spatial features, this paper proposes the Local Contrast Attention Enhanced infrared small target detection Network (LCAE-Net), which combines prior knowledge with data-driven deep learning methods. LCAE-Net is a U-shaped neural network model which consists of two proposed modules: a Local Contrast Enhancement (LCE) module and a Channel Attention Enhancement (CAE) module. The LCE module takes advantage of prior knowledge, leveraging a handcrafted convolution operator to acquire Local Contrast Attention (LCA), which suppresses the background while enhancing potential target regions, thus guiding the neural network to pay more attention to the location information of potential infrared small targets. To effectively utilize the response information throughout the downsampling process, the CAE module is proposed to fuse information across the different channels of the feature maps. Experimental results indicate that LCAE-Net outperforms existing state-of-the-art methods on the three public datasets NUDT-SIRST, NUAA-SIRST, and IRSTD-1K, and its detection speed can reach up to 70 fps. Meanwhile, the model has 1.945M parameters and requires 4.862G floating-point operations (FLOPs), making it suitable for deployment on edge devices.
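The abstract does not give the exact handcrafted operator used by the LCE module, so the following is only a minimal sketch of the general idea of local contrast: a pixel is compared with the mean of its immediate neighborhood, and positive differences are kept as an attention map. The function names, the square neighborhood, and the `radius` parameter are assumptions made for illustration, not the paper's definition.

```python
def local_contrast(image, radius=1):
    """Center pixel minus the mean of its neighbors, clipped at zero.

    `image` is a 2D list of floats. The square neighborhood of size
    (2 * radius + 1) is an illustrative assumption, not the paper's operator.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            neighbors = [
                image[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
                if (j, i) != (y, x)
            ]
            # A 1x1 image has no neighbors; treat the pixel as its own background.
            background = sum(neighbors) / len(neighbors) if neighbors else image[y][x]
            out[y][x] = max(0.0, image[y][x] - background)
    return out


def attention_from_contrast(contrast):
    """Scale a contrast map to [0, 1] so it could weight feature maps."""
    peak = max(max(row) for row in contrast)
    if peak == 0:
        return [[0.0 for _ in row] for row in contrast]
    return [[v / peak for v in row] for row in contrast]


if __name__ == "__main__":
    # Flat background with one bright pixel standing in for a small target.
    img = [[10.0] * 5 for _ in range(5)]
    img[2][2] = 100.0
    attention = attention_from_contrast(local_contrast(img))
    for row in attention:
        print(row)
```

On a flat background with one bright pixel, only that pixel receives a non-zero weight, which illustrates background suppression combined with target enhancement in its simplest form.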

Feature Chirality in Deep Learning Models

May 06, 2023

Abstract:As deep learning applications multiply rapidly, their interpretability has become an increasingly prominent concern. Chirality is a universal property that exists widely in nature, and applying it to research on explaining deep learning may be helpful. Inspired by a recent study that used a convolutional neural network (CNN) to exploit visual chirality and distinguish whether an image has been flipped, we study feature chirality, which shows how training changes the statistics of deep learning models' feature data. We rethink the chirality property at the feature level, propose the concept of feature chirality, and give a measure for it. Our analysis of feature chirality on AlexNet, VGG, and ResNet reveals similar but surprising results, including that feature chirality is prevalent in these models and that the models' initialization methods do not affect it. Our work suggests that feature chirality has implications for model evaluation, model interpretability, and model parameter optimization.

Bridge the Gap between Supervised and Unsupervised Learning for Fine-Grained Classification

Mar 01, 2022

Abstract:Unsupervised learning technology has caught up with or even surpassed supervised learning technology in general object classification (GOC) and person re-identification (re-ID). However, unsupervised learning for fine-grained visual classification (FGVC) turns out to be more challenging than for GOC and person re-ID. To bridge the gap between unsupervised and supervised learning for FGVC, we investigate the essential factors behind the performance gap between supervised and unsupervised FGVC, including feature extraction, clustering, and contrastive learning. Furthermore, we propose a simple, effective, and practical method, termed UFCL, to reduce the gap. We address three key issues. First, we introduce a robust and powerful backbone, ResNet50-IBN, which is capable of domain adaptation when ImageNet pre-trained models are transferred to FGVC tasks. Next, we propose using HDBSCAN instead of DBSCAN for clustering, which generates better clusters for adjacent categories with fewer hyper-parameters. Finally, we propose a weighted feature agent and its updating mechanism for contrastive learning with pseudo labels that inevitably contain noise, which improves the optimization of the network parameters. The effectiveness of UFCL is verified on the CUB-200-2011, Oxford-Flowers, Oxford-Pets, Stanford-Dogs, Stanford-Cars, and FGVC-Aircraft datasets. Under the unsupervised FGVC setting, we achieve state-of-the-art results, and we analyze the key factors and important parameters to provide practical guidance.

Grafted network for person re-identification

Jun 06, 2020

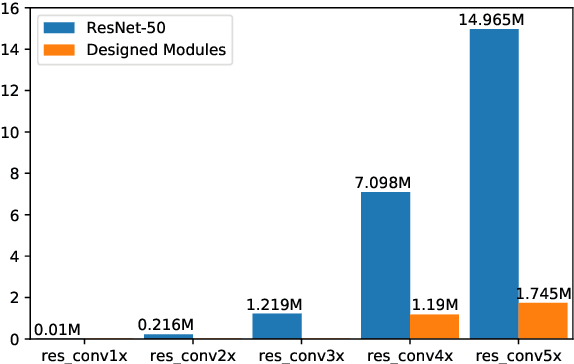

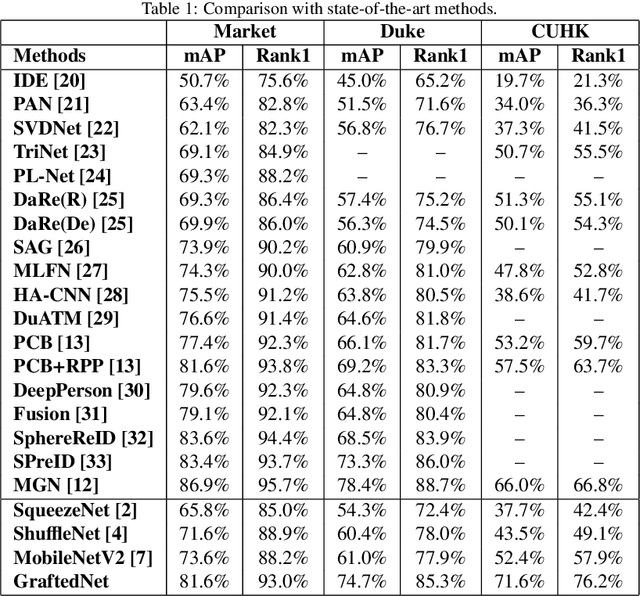

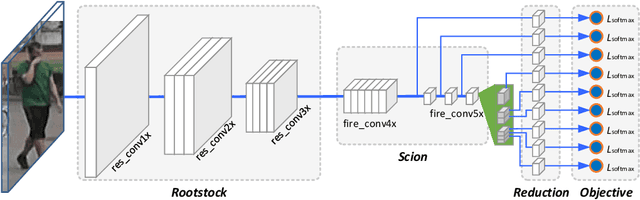

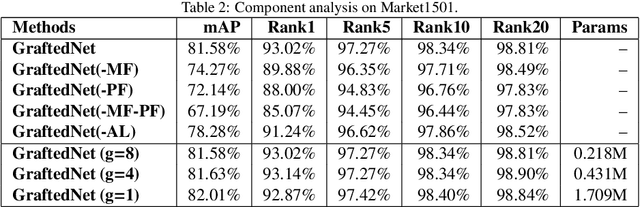

Abstract:Convolutional neural networks have shown outstanding effectiveness in person re-identification (re-ID). However, the models often have too many parameters and require too much computation for mobile applications. To relieve this problem, we propose a novel grafted network (GraftedNet), which is designed by grafting a high-accuracy rootstock and a lightweight scion. The rootstock is based on the former parts of ResNet-50 to provide a strong baseline, while the scion is a newly designed module, composed of the latter parts of SqueezeNet, to compress the parameters. To extract a more discriminative feature representation, a joint multi-level and part-based feature is proposed. In addition, to train GraftedNet efficiently, we propose an accompanying learning method, which adds an accompanying branch during training and removes it during testing to save parameters and computation. The effectiveness of GraftedNet is evaluated, and its components are analyzed, on three public person re-ID benchmarks (Market1501, DukeMTMC-reID and CUHK03). Experimental results show that the proposed GraftedNet achieves 93.02%, 85.3% and 76.2% in Rank-1 and 81.6%, 74.7% and 71.6% in mAP, with only 4.6M parameters.

A heterogeneous branch and multi-level classification network for person re-identification

Jun 02, 2020

Abstract:Convolutional neural networks with multiple branches have recently proved highly effective in person re-identification (re-ID). Researchers design multi-branch networks using part models, yet they always attribute the effectiveness to multiple parts. In addition, existing multi-branch networks always have isomorphic branches, which lack structural diversity. To address this problem, we propose a novel Heterogeneous Branch and Multi-level Classification Network (HBMCN), which is designed based on the pre-trained ResNet-50 model. A new heterogeneous branch, SE-Res-Branch, is proposed based on the SE-Res module, which consists of the Squeeze-and-Excitation block and the residual block. Furthermore, a new multi-level classification joint objective function is proposed for the supervised learning of HBMCN, whereby multi-level features are extracted from multiple high-level layers and concatenated to represent a person. On three public person re-ID benchmarks (Market1501, DukeMTMC-reID and CUHK03), experimental results show that the proposed HBMCN reaches 94.4%, 85.7% and 73.8% in Rank-1, and 85.7%, 74.6% and 69.0% in mAP, achieving state-of-the-art performance. Further analysis demonstrates that the specially designed heterogeneous branch performs better than an isomorphic branch, and that multi-level classification provides more discriminative features than single-level classification. As a result, HBMCN provides substantial further improvements in person re-ID tasks.
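For readers unfamiliar with the Squeeze-and-Excitation block named above, the sketch below shows its general mechanism in plain Python: average each channel to a scalar (squeeze), pass the resulting vector through two small fully connected layers ending in a sigmoid (excitation), and rescale each channel by its gate. The weights, shapes, and function names are hypothetical; this is not the SE-Res module as configured in HBMCN, whose details the abstract does not give.

```python
import math


def squeeze(feature_maps):
    """Global average pooling: one scalar per channel."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_maps
    ]


def dense(vector, weights, biases):
    """Fully connected layer: weights has one row per output unit."""
    return [
        sum(w * v for w, v in zip(row, vector)) + b
        for row, b in zip(weights, biases)
    ]


def excite(descriptor, w1, b1, w2, b2):
    """Bottleneck MLP: ReLU hidden layer, then a sigmoid gate per channel."""
    hidden = [max(0.0, h) for h in dense(descriptor, w1, b1)]
    return [1.0 / (1.0 + math.exp(-z)) for z in dense(hidden, w2, b2)]


def se_block(feature_maps, w1, b1, w2, b2):
    """Rescale every channel by its learned gate in (0, 1)."""
    gates = excite(squeeze(feature_maps), w1, b1, w2, b2)
    return [
        [[gate * value for value in row] for row in channel]
        for gate, channel in zip(gates, feature_maps)
    ]


if __name__ == "__main__":
    # Two 2x2 channels; all-zero weights (hypothetical) give a gate of 0.5.
    maps = [[[1.0, 3.0], [5.0, 7.0]], [[2.0, 2.0], [2.0, 2.0]]]
    w1, b1 = [[0.0, 0.0]], [0.0]          # 2 channels -> 1 hidden unit
    w2, b2 = [[0.0], [0.0]], [0.0, 0.0]   # 1 hidden unit -> 2 gates
    print(se_block(maps, w1, b1, w2, b2))
```

In a residual arrangement, the gated output would typically be added back to the block's input; that step is omitted here to keep the sketch short.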

Ensemble Feature for Person Re-Identification

Jan 17, 2019

Abstract:In person re-identification (re-ID), the key task is feature representation, which is used to compute distance or similarity at prediction time. Person re-ID has improved greatly since deep learning methods were introduced to tackle this problem. The features extracted by convolutional neural networks (CNNs) are more effective and discriminative than hand-crafted features. However, the deep features extracted by a single CNN are not robust enough at the testing stage. To improve the ability of feature representation, we propose a new ensemble network (EnsembleNet) by dividing a single network into multiple end-to-end branches. The ensemble feature is obtained by concatenating the branch features to represent a person. EnsembleNet is designed based on ResNet-50, and its backbone shares most of the parameters to save computation and memory cost. Experimental results show that EnsembleNet achieves state-of-the-art performance on the public Market1501, DukeMTMC-reID and CUHK03 person re-ID benchmarks.
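The idea of concatenating branch features and then ranking gallery images by distance can be sketched as follows. The L2 normalization of each branch, the choice of Euclidean distance, and all function names are assumptions for illustration; the abstract states only that branch features are concatenated and used to compute distance or similarity.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length; leave an all-zero vector unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def ensemble_feature(branch_features):
    """Concatenate the (normalized) feature vectors of all branches."""
    combined = []
    for feature in branch_features:
        combined.extend(l2_normalize(feature))
    return combined


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query, gallery):
    """Return gallery indices sorted from nearest to farthest from the query."""
    return sorted(
        range(len(gallery)),
        key=lambda i: euclidean_distance(query, gallery[i]),
    )


if __name__ == "__main__":
    # Two hypothetical branches per image, each producing a 2-D feature.
    query = ensemble_feature([[3.0, 4.0], [1.0, 0.0]])
    gallery = [
        ensemble_feature([[0.0, 1.0], [0.0, 1.0]]),  # different direction
        ensemble_feature([[6.0, 8.0], [2.0, 0.0]]),  # same direction as query
    ]
    print(rank_gallery(query, gallery))
```

Normalizing each branch before concatenation keeps any single branch from dominating the distance purely because of its scale, which is one common reason to do it; whether EnsembleNet does so is not stated in the abstract.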
