Zhongliang Li

FEMTO-ST, UTBM

A Lifetime Extended Energy Management Strategy for Fuel Cell Hybrid Electric Vehicles via Self-Learning Fuzzy Reinforcement Learning

Feb 13, 2023

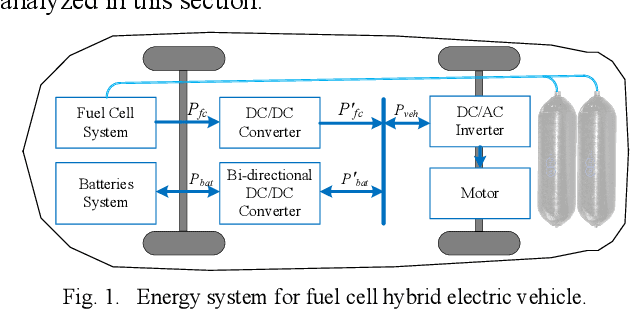

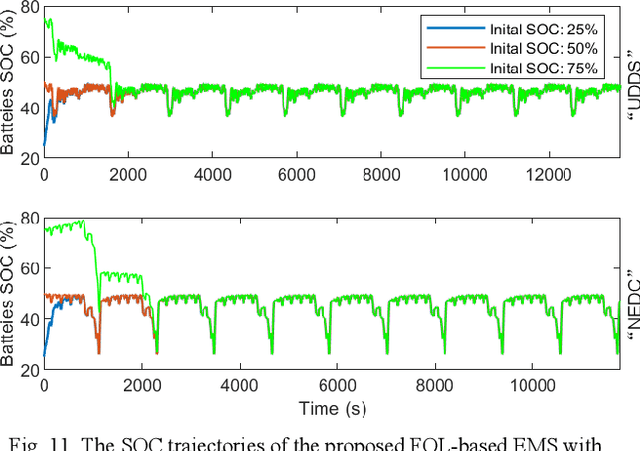

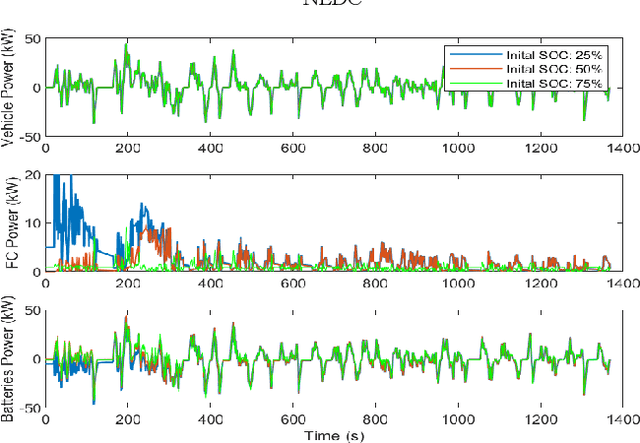

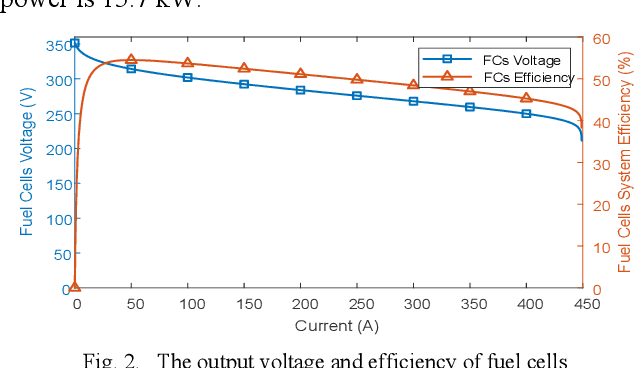

Abstract:Modeling difficulty, time-varying model, and uncertain external inputs are the main challenges for energy management of fuel cell hybrid electric vehicles. In the paper, a fuzzy reinforcement learning-based energy management strategy for fuel cell hybrid electric vehicles is proposed to reduce fuel consumption, maintain the batteries' long-term operation, and extend the lifetime of the fuel cells system. Fuzzy Q-learning is a model-free reinforcement learning that can learn itself by interacting with the environment, so there is no need for modeling the fuel cells system. In addition, frequent startup of the fuel cells will reduce the remaining useful life of the fuel cells system. The proposed method suppresses frequent fuel cells startup by considering the penalty for the times of fuel cell startups in the reward of reinforcement learning. Moreover, applying fuzzy logic to approximate the value function in Q-Learning can solve continuous state and action space problems. Finally, a python-based training and testing platform verify the effectiveness and self-learning improvement of the proposed method under conditions of initial state change, model change and driving condition change.

Learning from Pseudo Lesion: A Self-supervised Framework for COVID-19 Diagnosis

Jun 23, 2021

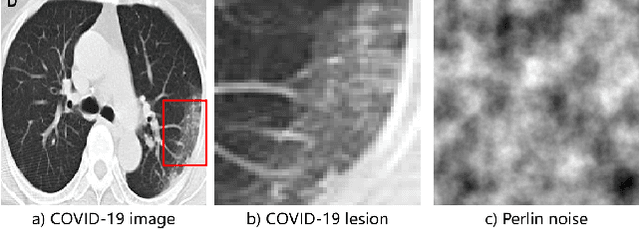

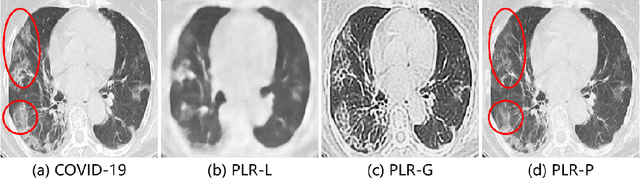

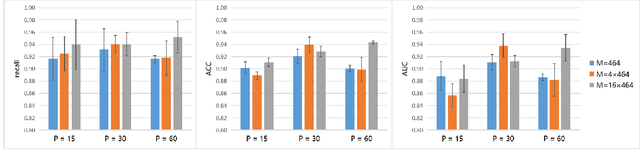

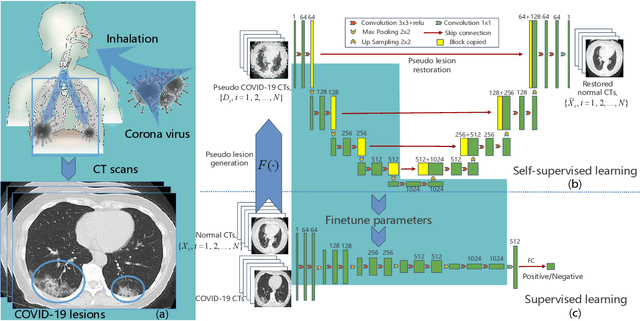

Abstract:The Coronavirus disease 2019 (COVID-19) has rapidly spread all over the world since its first report in December 2019 and thoracic computed tomography (CT) has become one of the main tools for its diagnosis. In recent years, deep learning-based approaches have shown impressive performance in myriad image recognition tasks. However, they usually require a large number of annotated data for training. Inspired by Ground Glass Opacity (GGO), a common finding in COIVD-19 patient's CT scans, we proposed in this paper a novel self-supervised pretraining method based on pseudo lesions generation and restoration for COVID-19 diagnosis. We used Perlin noise, a gradient noise based mathematical model, to generate lesion-like patterns, which were then randomly pasted to the lung regions of normal CT images to generate pseudo COVID-19 images. The pairs of normal and pseudo COVID-19 images were then used to train an encoder-decoder architecture based U-Net for image restoration, which does not require any labelled data. The pretrained encoder was then fine-tuned using labelled data for COVID-19 diagnosis task. Two public COVID-19 diagnosis datasets made up of CT images were employed for evaluation. Comprehensive experimental results demonstrated that the proposed self-supervised learning approach could extract better feature representation for COVID-19 diagnosis and the accuracy of the proposed method outperformed the supervised model pretrained on large scale images by 6.57% and 3.03% on SARS-CoV-2 dataset and Jinan COVID-19 dataset, respectively.

Slim Embedding Layers for Recurrent Neural Language Models

Dec 22, 2017

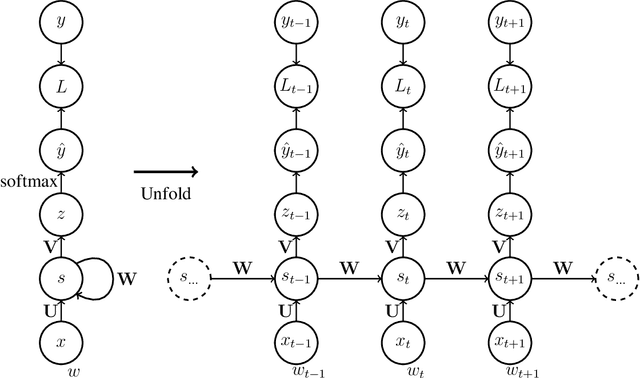

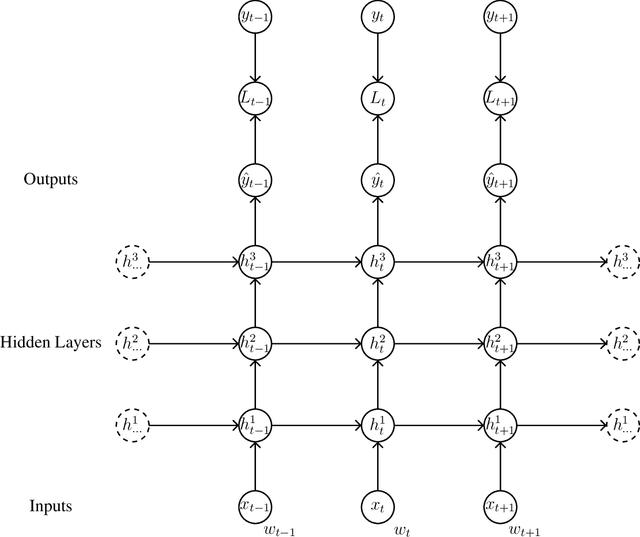

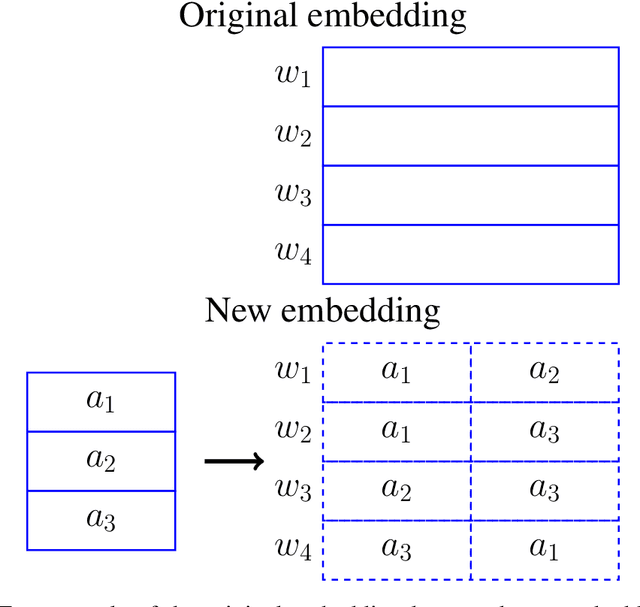

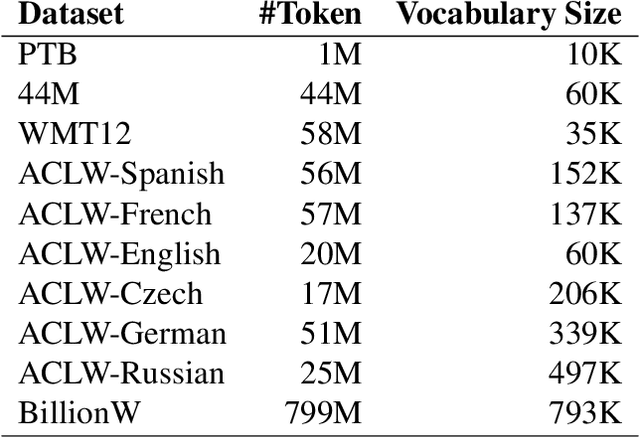

Abstract:Recurrent neural language models are the state-of-the-art models for language modeling. When the vocabulary size is large, the space taken to store the model parameters becomes the bottleneck for the use of recurrent neural language models. In this paper, we introduce a simple space compression method that randomly shares the structured parameters at both the input and output embedding layers of the recurrent neural language models to significantly reduce the size of model parameters, but still compactly represent the original input and output embedding layers. The method is easy to implement and tune. Experiments on several data sets show that the new method can get similar perplexity and BLEU score results while only using a very tiny fraction of parameters.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge