Zhiwu Li

A Cosine Network for Image Super-Resolution

Jan 23, 2026

Abstract:Deep convolutional neural networks can use hierarchical information to progressively extract structural information and recover high-quality images. However, preserving the effectiveness of the obtained structural information is important in image super-resolution. In this paper, we propose a cosine network for image super-resolution (CSRNet) by improving the network architecture and optimizing the training strategy. To extract complementary homologous structural information, odd and even heterogeneous blocks are designed to enlarge the architectural differences and improve super-resolution performance. Combining linear and non-linear structural information can overcome the drawback of homologous information and enhance the robustness of the obtained structural information. To reduce the risk of gradient descent settling in a local minimum, a cosine annealing mechanism is used to optimize the training procedure by performing warm restarts and adjusting the learning rate. Experimental results illustrate that the proposed CSRNet is competitive with state-of-the-art methods in image super-resolution.
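The cosine annealing with warm restarts mentioned in the abstract can be illustrated with a minimal sketch. This is the generic schedule, not the CSRNet training code: the function name and the `base_lr`, `min_lr`, `period`, and `period_mult` values are assumptions chosen for illustration, since the abstract gives no hyperparameters.

```python
import math


def cosine_warm_restart_lr(step, base_lr=1e-3, min_lr=1e-6, period=100, period_mult=2):
    """Learning rate at `step` under cosine annealing with warm restarts.

    All default values are illustrative assumptions, not taken from the paper.
    """
    t, length = step, period
    # Find the position inside the current cycle; each cycle is
    # `period_mult` times longer than the previous one.
    while t >= length:
        t -= length
        length *= period_mult
    # Within a cycle the rate decays from base_lr towards min_lr along a half cosine.
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t / length))


if __name__ == "__main__":
    for s in (0, 50, 99, 100, 299, 300):
        print(s, cosine_warm_restart_lr(s))
```

With these assumed defaults the rate returns to `base_lr` at steps 0, 100, and 300, which is the "warm restart" that gives the optimizer a chance to leave a poor local minimum.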

Heterogeneous window transformer for image denoising

Jul 08, 2024

Abstract:Deep networks usually improve denoising results by extracting more structural information. However, in pursuing better denoising performance they may ignore the correlation between the pixels of an image. A window transformer can use long- and short-distance modeling to let pixels interact and so address this problem. To trade off distance modeling against denoising time, we propose a heterogeneous window transformer (HWformer) for image denoising. HWformer first designs heterogeneous global windows to capture global context information and improve denoising. To bridge long- and short-distance modeling, the global windows are shifted horizontally and vertically to provide diversified information without increasing denoising time. To prevent the information loss caused by independent patches, a sparse idea guides a feed-forward network to extract local information from neighboring patches. The proposed HWformer takes only 30% of the denoising time of the popular Restormer.

A Novel Granular-Based Bi-Clustering Method of Deep Mining the Co-Expressed Genes

May 12, 2020

Abstract:Traditional clustering methods are limited when dealing with huge and heterogeneous groups of gene expression data, which motivates the development of bi-clustering methods. Bi-clustering methods are used to mine bi-clusters whose subsets of samples (genes) are co-regulated under their test conditions. Studies show that mining bi-clusters with consistent trends, and with trends that fluctuate to similar degrees, from gene expression data is essential in bioinformatics research. Unfortunately, traditional bi-clustering methods are not fully effective in discovering such bi-clusters. Therefore, we propose a novel bi-clustering method based on the theory of Granular Computing. In the proposed scheme, the gene data matrix, considered as a group of time series, is transformed into a series of ordered information granules. With the information granules we build a characteristic matrix of the gene data that captures the fluctuation trend of the expression value between consecutive conditions, and use it to mine the ideal bi-clusters. The experimental results are in agreement with the theoretical analysis and show the excellent performance of the proposed method.
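To make the idea of a trend-based characteristic matrix concrete, here is a deliberately simplified sketch. It encodes each change between consecutive conditions as +1, -1, or 0 by the sign of the difference. This is an assumption made for illustration: the paper builds its matrix from ordered information granules, which the abstract does not specify, and the `trend_matrix` function and its `tol` parameter are hypothetical.

```python
def trend_matrix(expr, tol=0.0):
    """Encode each gene's series as trends between consecutive conditions.

    `expr` is a list of rows, one per gene, one value per condition.
    Returns +1 (rise), -1 (fall), or 0 (change within `tol`) for each
    consecutive pair. A simplified stand-in, not the paper's granular method.
    """
    def sign(delta):
        if delta > tol:
            return 1
        if delta < -tol:
            return -1
        return 0

    return [[sign(b - a) for a, b in zip(row, row[1:])] for row in expr]


if __name__ == "__main__":
    genes = [
        [1.0, 2.0, 2.0, 0.5],     # rises, stays flat, falls
        [10.0, 30.0, 30.0, 5.0],  # same trend on a different scale
        [4.0, 3.0, 5.0, 6.0],     # different trend
    ]
    for row in trend_matrix(genes):
        print(row)
```

In this toy input the first two genes share the same trend row despite very different magnitudes, which is the kind of consistent-trend pattern a bi-clustering method would try to group.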

Augmentation of the Reconstruction Performance of Fuzzy C-Means with an Optimized Fuzzification Factor Vector

Apr 13, 2020

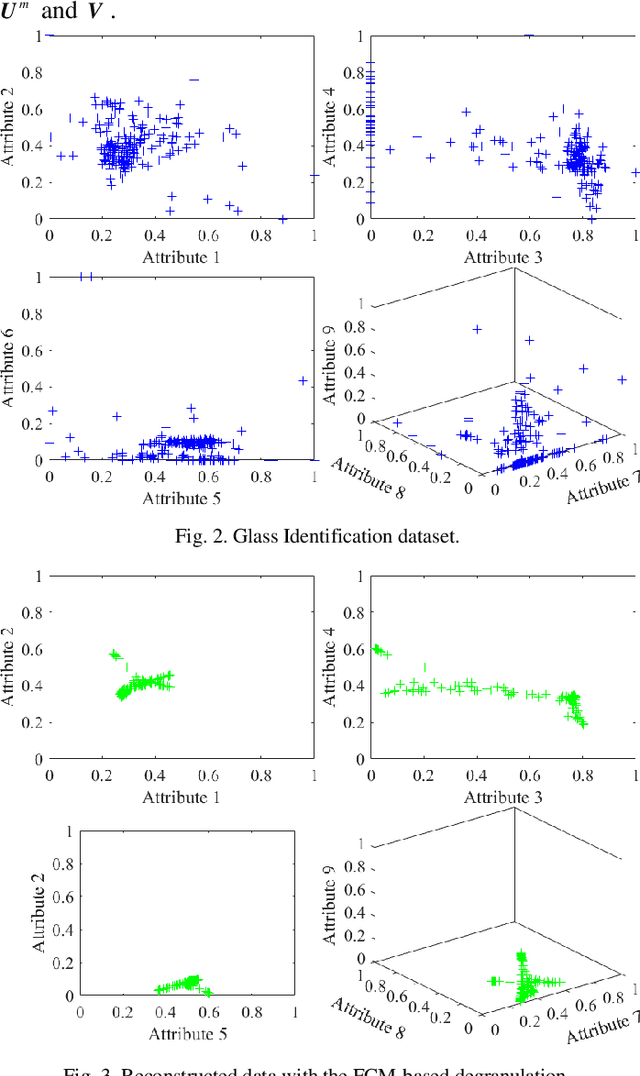

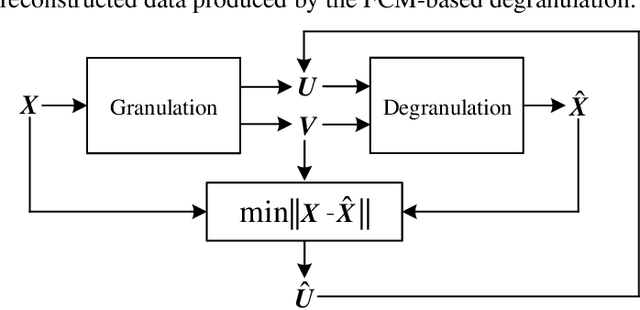

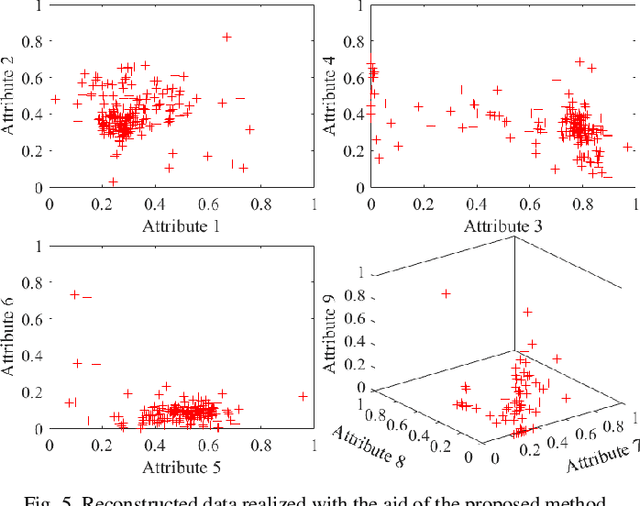

Abstract:Information granules have been considered to be the fundamental constructs of Granular Computing (GrC). As a useful unsupervised learning technique, Fuzzy C-Means (FCM) is one of the most frequently used methods to construct information granules. The FCM-based granulation-degranulation mechanism plays a pivotal role in GrC. In this paper, to enhance the quality of the degranulation (reconstruction) process, we augment the FCM-based degranulation mechanism by introducing a vector of fuzzification factors (fuzzification factor vector) and setting up an adjustment mechanism to modify the prototypes and the partition matrix. The design is regarded as an optimization problem, which is guided by a reconstruction criterion. In the proposed scheme, the initial partition matrix and prototypes are generated by the FCM. Then a fuzzification factor vector is introduced to form an appropriate fuzzification factor for each cluster to build up an adjustment scheme of modifying the prototypes and the partition matrix. With the supervised learning mode of the granulation-degranulation process, we construct a composite objective function of the fuzzification factor vector, the prototypes and the partition matrix. Subsequently, the particle swarm optimization (PSO) is employed to optimize the fuzzification factor vector to refine the prototypes and develop the optimal partition matrix. Finally, the reconstruction performance of the FCM algorithm is enhanced. We offer a thorough analysis of the developed scheme. In particular, we show that the classical FCM algorithm forms a special case of the proposed scheme. Experiments completed for both synthetic and publicly available datasets show that the proposed approach outperforms the generic data reconstruction approach.
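The classical FCM granulation-degranulation step that this work augments can be sketched as follows. This is the standard single-factor formulation, with one scalar fuzzification factor `m`; the per-cluster fuzzification factor vector, the prototype adjustment, and the PSO search described in the abstract are not reproduced here. The function names are assumptions for illustration.

```python
def memberships(x, prototypes, m=2.0):
    """Classical FCM membership of point x in each cluster (requires m > 1)."""
    d2 = [sum((a - b) ** 2 for a, b in zip(x, v)) for v in prototypes]
    # A point that coincides with a prototype belongs fully to that cluster.
    for i, d in enumerate(d2):
        if d == 0.0:
            return [1.0 if j == i else 0.0 for j in range(len(prototypes))]
    p = 1.0 / (m - 1.0)
    inv = [(1.0 / d) ** p for d in d2]
    total = sum(inv)
    return [w / total for w in inv]


def degranulate(u, prototypes, m=2.0):
    """Reconstruct a point as the u**m weighted average of the prototypes."""
    w = [ui ** m for ui in u]
    total = sum(w)
    dim = len(prototypes[0])
    return [sum(wi * v[k] for wi, v in zip(w, prototypes)) / total for k in range(dim)]


if __name__ == "__main__":
    prototypes = [[0.0, 0.0], [2.0, 0.0]]
    x = [0.5, 1.0]
    u = memberships(x, prototypes)
    x_hat = degranulate(u, prototypes)
    error = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    print(u, x_hat, error)
```

The squared distance between `x` and `x_hat` is the reconstruction error that a reconstruction criterion of this kind would aim to reduce. Note that the reconstruction always lies in the convex hull of the prototypes, which is one reason the classical scheme cannot restore every point exactly.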

Granular Computing: An Augmented Scheme of Degranulation Through a Modified Partition Matrix

Apr 03, 2020

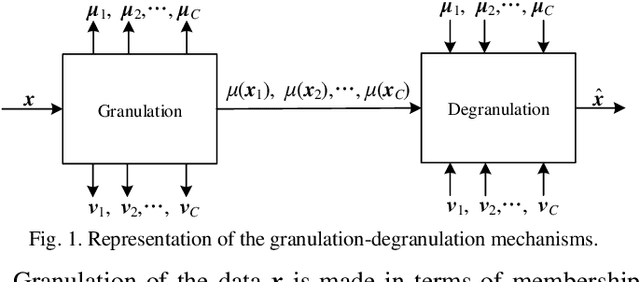

Abstract:As an important technology in artificial intelligence, Granular Computing (GrC) has emerged as a new multi-disciplinary paradigm and received much attention in recent years. Information granules, which form an abstract and efficient characterization of large volumes of numeric data, have been considered the fundamental constructs of GrC. By generating prototypes and a partition matrix, fuzzy clustering is a commonly encountered way of information granulation. Degranulation involves data reconstruction completed on the basis of the granular representatives. Previous studies have shown that there is a relationship between the reconstruction error and the performance of the granulation process. Typically, the lower the degranulation error, the better the performance of granulation. However, the existing methods of degranulation usually cannot restore the original numeric data, which is one of the important reasons behind the occurrence of the reconstruction error. To enhance the quality of degranulation, in this study we develop an augmented scheme through modifying the partition matrix. By proposing the augmented scheme, we dwell on a novel collection of granulation-degranulation mechanisms. In the constructed approach, the prototypes can be expressed as the product of the dataset matrix and the partition matrix. Then, in the degranulation process, the reconstructed numeric data can be decomposed into the product of the partition matrix and the matrix of prototypes. Both the granulation and degranulation are regarded as generalized rotation between the data subspace and the prototype subspace with the partition matrix and the fuzzification factor. By modifying the partition matrix, the new partition matrix is constructed through a series of matrix operations. We offer a thorough analysis of the developed scheme. The experimental results are in agreement with the underlying conceptual framework.
