Zhengzhong Liang

Explainable Verbal Reasoner Plus (EVR+): A Natural Language Reasoning Framework that Supports Diverse Compositional Reasoning

Apr 28, 2023

Abstract: Language models have been successfully applied to a variety of reasoning tasks in NLP, yet they still struggle with compositional generalization. In this paper we present Explainable Verbal Reasoner Plus (EVR+), a reasoning framework that enhances language models' compositional reasoning ability by (1) allowing the model to explicitly generate and execute symbolic operators, and (2) allowing the model to decompose a complex task into several simpler ones in a flexible manner. Compared with its predecessor, Explainable Verbal Reasoner (EVR), and other previous approaches adopting similar ideas, our framework supports more diverse types of reasoning, such as nested loops and different types of recursion. To evaluate our reasoning framework, we build a synthetic dataset with five tasks that require compositional reasoning. Results show that our reasoning framework can enhance a fine-tuned language model's compositional generalization performance on the five tasks. We also discuss the possibility and the challenges of combining our reasoning framework with a few-shot prompted language model.

Better Retrieval May Not Lead to Better Question Answering

May 07, 2022

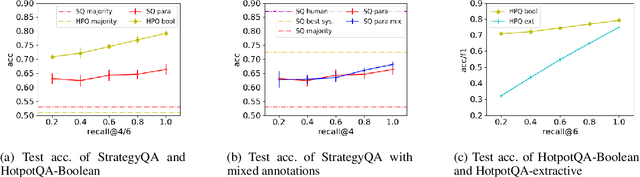

Abstract: Considerable progress has been made recently in open-domain question answering (QA) problems, which require Information Retrieval (IR) and Reading Comprehension (RC). A popular approach to improving a system's performance is to improve the quality of the context retrieved in the IR stage. In this work we show that for StrategyQA, a challenging open-domain QA dataset that requires multi-hop reasoning, this common approach is surprisingly ineffective: improving the quality of the retrieved context hardly improves the system's performance. We further analyze the system's behavior to identify potential reasons.
