Zhengyi Zhong

SacFL: Self-Adaptive Federated Continual Learning for Resource-Constrained End Devices

May 01, 2025

Abstract:The proliferation of end devices has led to a distributed computing paradigm, wherein on-device machine learning models continuously process diverse data generated by these devices. The dynamic nature of this data, characterized by continuous changes or data drift, poses significant challenges for on-device models. To address this issue, continual learning (CL) is proposed, enabling machine learning models to incrementally update their knowledge and mitigate catastrophic forgetting. However, the traditional centralized approach to CL is unsuitable for end devices due to privacy and data volume concerns. In this context, federated continual learning (FCL) emerges as a promising solution, preserving user data locally while enhancing models through collaborative updates. Aiming at the challenges of limited storage resources for CL, poor autonomy in task shift detection, and difficulty in coping with new adversarial tasks in FCL scenario, we propose a novel FCL framework named SacFL. SacFL employs an Encoder-Decoder architecture to separate task-robust and task-sensitive components, significantly reducing storage demands by retaining lightweight task-sensitive components for resource-constrained end devices. Moreover, $\rm{SacFL}$ leverages contrastive learning to introduce an autonomous data shift detection mechanism, enabling it to discern whether a new task has emerged and whether it is a benign task. This capability ultimately allows the device to autonomously trigger CL or attack defense strategy without additional information, which is more practical for end devices. Comprehensive experiments conducted on multiple text and image datasets, such as Cifar100 and THUCNews, have validated the effectiveness of $\rm{SacFL}$ in both class-incremental and domain-incremental scenarios. Furthermore, a demo system has been developed to verify its practicality.

CUT: Pruning Pre-Trained Multi-Task Models into Compact Models for Edge Devices

Apr 14, 2025Abstract:Multi-task learning has garnered widespread attention in the industry due to its efficient data utilization and strong generalization capabilities, making it particularly suitable for providing high-quality intelligent services to users. Edge devices, as the primary platforms directly serving users, play a crucial role in delivering multi-task services. However, current multi-task models are often large, and user task demands are increasingly diverse. Deploying such models directly on edge devices not only increases the burden on these devices but also leads to task redundancy. To address this issue, this paper innovatively proposes a pre-trained multi-task model pruning method specifically designed for edge computing. The goal is to utilize existing pre-trained multi-task models to construct a compact multi-task model that meets the needs of edge devices. The specific implementation steps are as follows: First, decompose the tasks within the pre-trained multi-task model and select tasks based on actual user needs. Next, while retaining the knowledge of the original pre-trained model, evaluate parameter importance and use a parameter fusion method to effectively integrate shared parameters among tasks. Finally, obtain a compact multi-task model suitable for edge devices. To validate the effectiveness of the proposed method, we conducted experiments on three public image datasets. The experimental results fully demonstrate the superiority and efficiency of this method, providing a new solution for multi-task learning on edge devices.

Efficient Multi-Task Modeling through Automated Fusion of Trained Models

Apr 14, 2025

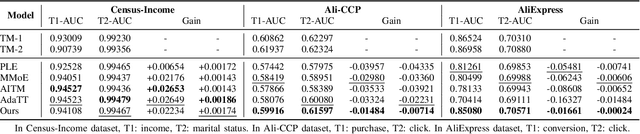

Abstract:Although multi-task learning is widely applied in intelligent services, traditional multi-task modeling methods often require customized designs based on specific task combinations, resulting in a cumbersome modeling process. Inspired by the rapid development and excellent performance of single-task models, this paper proposes an efficient multi-task modeling method that can automatically fuse trained single-task models with different structures and tasks to form a multi-task model. As a general framework, this method allows modelers to simply prepare trained models for the required tasks, simplifying the modeling process while fully utilizing the knowledge contained in the trained models. This eliminates the need for excessive focus on task relationships and model structure design. To achieve this goal, we consider the structural differences among various trained models and employ model decomposition techniques to hierarchically decompose them into multiple operable model components. Furthermore, we have designed an Adaptive Knowledge Fusion (AKF) module based on Transformer, which adaptively integrates intra-task and inter-task knowledge based on model components. Through the proposed method, we achieve efficient and automated construction of multi-task models, and its effectiveness is verified through extensive experiments on three datasets.

Unlearning through Knowledge Overwriting: Reversible Federated Unlearning via Selective Sparse Adapter

Feb 28, 2025

Abstract:Federated Learning is a promising paradigm for privacy-preserving collaborative model training. In practice, it is essential not only to continuously train the model to acquire new knowledge but also to guarantee old knowledge the right to be forgotten (i.e., federated unlearning), especially for privacy-sensitive information or harmful knowledge. However, current federated unlearning methods face several challenges, including indiscriminate unlearning of cross-client knowledge, irreversibility of unlearning, and significant unlearning costs. To this end, we propose a method named FUSED, which first identifies critical layers by analyzing each layer's sensitivity to knowledge and constructs sparse unlearning adapters for sensitive ones. Then, the adapters are trained without altering the original parameters, overwriting the unlearning knowledge with the remaining knowledge. This knowledge overwriting process enables FUSED to mitigate the effects of indiscriminate unlearning. Moreover, the introduction of independent adapters makes unlearning reversible and significantly reduces the unlearning costs. Finally, extensive experiments on three datasets across various unlearning scenarios demonstrate that FUSED's effectiveness is comparable to Retraining, surpassing all other baselines while greatly reducing unlearning costs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge