Zhengbo Wang

A Unified Study of LoRA Variants: Taxonomy, Review, Codebase, and Empirical Evaluation

Jan 30, 2026

Abstract:Low-Rank Adaptation (LoRA) is a fundamental parameter-efficient fine-tuning method that balances efficiency and performance in large-scale neural networks. However, the proliferation of LoRA variants has led to fragmentation in methodology, theory, code, and evaluation. To this end, this work presents the first unified study of LoRA variants, offering a systematic taxonomy, unified theoretical review, structured codebase, and standardized empirical assessment. First, we categorize LoRA variants along four principal axes: rank, optimization dynamics, initialization, and integration with Mixture-of-Experts. Then, we review their relationships and evolution within a common theoretical framework focused on low-rank update dynamics. Further, we introduce LoRAFactory, a modular codebase that implements variants through a unified interface, supporting plug-and-play experimentation and fine-grained analysis. Last, using this codebase, we conduct a large-scale evaluation across natural language generation, natural language understanding, and image classification tasks, systematically exploring key hyperparameters. Our results uncover several findings, notably: LoRA and its variants exhibit pronounced sensitivity to the choices of learning rate compared to other hyperparameters; moreover, with proper hyperparameter configurations, LoRA consistently matches or surpasses the performance of most of its variants.

Towards Compatible Fine-tuning for Vision-Language Model Updates

Dec 30, 2024

Abstract:So far, efficient fine-tuning has become a popular strategy for enhancing the capabilities of foundation models on downstream tasks by learning plug-and-play modules. However, existing methods overlook a crucial issue: if the underlying foundation model is updated, are these plug-and-play modules still effective? In this paper, we first conduct a detailed analysis of various fine-tuning methods on the CLIP in terms of their compatibility with model updates. The study reveals that many high-performing fine-tuning methods fail to be compatible with the upgraded models. To address this, we propose a novel approach, Class-conditioned Context Optimization (ContCoOp), which integrates learnable prompts with class embeddings using an attention layer before inputting them into the text encoder. Consequently, the prompts can dynamically adapt to the changes in embedding space (due to model updates), ensuring continued effectiveness. Extensive experiments over 15 datasets show that our ContCoOp achieves the highest compatibility over the baseline methods, and exhibits robust out-of-distribution generalization.

LoRA-Pro: Are Low-Rank Adapters Properly Optimized?

Jul 25, 2024

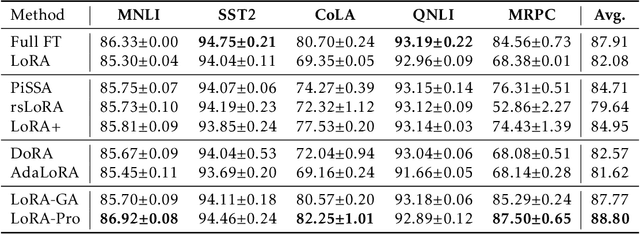

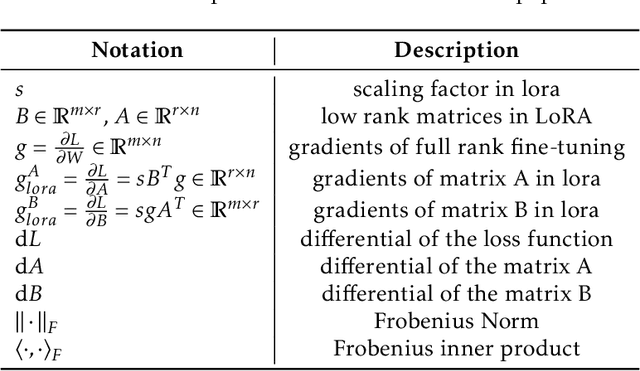

Abstract:Low-Rank Adaptation, also known as LoRA, has emerged as a prominent method for parameter-efficient fine-tuning foundation models by re-parameterizing the original matrix into the product of two low-rank matrices. Despite its efficiency, LoRA often yields inferior performance compared to full fine-tuning. In this paper, we propose LoRA-Pro to bridge this performance gap. Firstly, we delve into the optimization processes in LoRA and full fine-tuning. We reveal that while LoRA employs low-rank approximation, it neglects to approximate the optimization process of full fine-tuning. To address this, we introduce a novel concept called the "equivalent gradient." This virtual gradient makes the optimization process on the re-parameterized matrix equivalent to LoRA, which can be used to quantify the differences between LoRA and full fine-tuning. The equivalent gradient is derived from the gradients of matrices $A$ and $B$. To narrow the performance gap, our approach minimizes the differences between the equivalent gradient and the gradient obtained from full fine-tuning during the optimization process. By solving this objective, we derive optimal closed-form solutions for updating matrices $A$ and $B$. Our method constrains the optimization process, shrinking the performance gap between LoRA and full fine-tuning. Extensive experiments on natural language processing tasks validate the effectiveness of our method.
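The "equivalent gradient" idea in the LoRA-Pro abstract can be illustrated with a small numerical sketch. It assumes the common LoRA parameterization $W = W_0 + sBA$ and reads the equivalent gradient as the first-order change in $W$ induced by gradient steps on $A$ and $B$. The function name, matrix sizes, and scaling factor `s` are hypothetical, and the sketch shows only the gap that the abstract describes, not the paper's closed-form updates.

```python
import numpy as np

def equivalent_gradient(A, B, g_A, g_B, s):
    """First-order change in W = W0 + s * B @ A implied by steps along g_A and g_B."""
    return s * (B @ g_A + g_B @ A)

rng = np.random.default_rng(0)
m, n, r, s = 6, 5, 2, 2.0        # hypothetical sizes and LoRA scaling factor

B = rng.normal(size=(m, r))
A = rng.normal(size=(r, n))
g = rng.normal(size=(m, n))      # stand-in for the full fine-tuning gradient dL/dW

# Chain rule for W = W0 + s * B @ A.
g_B = s * g @ A.T                # dL/dB, shape (m, r)
g_A = s * B.T @ g                # dL/dA, shape (r, n)

g_equiv = equivalent_gradient(A, B, g_A, g_B, s)

# The equivalent gradient has rank at most 2r, so it generally differs from g.
rank = np.linalg.matrix_rank(g_equiv)
gap = np.linalg.norm(g_equiv - g)
print(f"rank of equivalent gradient: {rank}, distance to full gradient: {gap:.3f}")
```

Because the equivalent gradient is a sum of two matrices of rank at most `r`, it cannot match an arbitrary full-rank gradient. This is the difference that the abstract proposes to minimize when choosing the updates for $A$ and $B$.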

Connecting the Dots: Collaborative Fine-tuning for Black-Box Vision-Language Models

Feb 06, 2024

Abstract:With the emergence of pretrained vision-language models (VLMs), considerable efforts have been devoted to fine-tuning them for downstream tasks. Despite the progress made in designing efficient fine-tuning methods, such methods require access to the model's parameters, which can be challenging as model owners often opt to provide their models as a black box to safeguard model ownership. This paper proposes a Collaborative Fine-Tuning (CraFT) approach for fine-tuning black-box VLMs to downstream tasks, where one only has access to the input prompts and the output predictions of the model. CraFT comprises two modules, a prompt generation module for learning text prompts and a prediction refinement module for enhancing output predictions in residual style. Additionally, we introduce an auxiliary prediction-consistent loss to promote consistent optimization across these modules. These modules are optimized by a novel collaborative training algorithm. Extensive experiments on few-shot classification over 15 datasets demonstrate the superiority of CraFT. The results show that CraFT achieves a decent gain of about 12% with 16-shot datasets and only 8,000 queries. Moreover, CraFT trains faster and uses only about 1/80 of the memory footprint for deployment, while sacrificing only 1.62% compared to the white-box method.

A Hard-to-Beat Baseline for Training-free CLIP-based Adaptation

Feb 06, 2024

Abstract:Contrastive Language-Image Pretraining (CLIP) has gained popularity for its remarkable zero-shot capacity. Recent research has focused on developing efficient fine-tuning methods, such as prompt learning and adapter, to enhance CLIP's performance in downstream tasks. However, these methods still require additional training time and computational resources, which is undesirable for devices with limited resources. In this paper, we revisit a classical algorithm, Gaussian Discriminant Analysis (GDA), and apply it to the downstream classification of CLIP. Typically, GDA assumes that features of each class follow Gaussian distributions with identical covariance. By leveraging Bayes' formula, the classifier can be expressed in terms of the class means and covariance, which can be estimated from the data without the need for training. To integrate knowledge from both visual and textual modalities, we ensemble it with the original zero-shot classifier within CLIP. Extensive results on 17 datasets validate that our method surpasses or achieves comparable results with state-of-the-art methods on few-shot classification, imbalanced learning, and out-of-distribution generalization. In addition, we extend our method to base-to-new generalization and unsupervised learning, once again demonstrating its superiority over competing approaches. Our code is publicly available at https://github.com/mrflogs/ICLR24.
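The training-free classifier described in this abstract follows from textbook Gaussian Discriminant Analysis with a shared covariance, where Bayes' formula gives a linear classifier with weights $\Sigma^{-1}\mu_k$ and bias $\log \pi_k - \tfrac{1}{2}\mu_k^\top \Sigma^{-1} \mu_k$. The sketch below is a minimal illustration on generic feature vectors. The function names and the `ridge` regularizer are assumptions, and the ensembling with CLIP's zero-shot classifier mentioned in the abstract is not shown.

```python
import numpy as np

def fit_gda(features, labels, num_classes, ridge=1e-3):
    """Closed-form linear classifier from class means and one shared covariance.

    Assumes every class has at least one sample and labels are integers.
    `ridge` is a hypothetical regularizer that keeps the covariance invertible.
    """
    d = features.shape[1]
    means = np.stack([features[labels == k].mean(axis=0) for k in range(num_classes)])
    centered = features - means[labels]                  # remove each sample's class mean
    cov = centered.T @ centered / len(features) + ridge * np.eye(d)
    precision = np.linalg.inv(cov)
    priors = np.bincount(labels, minlength=num_classes) / len(labels)
    weights = means @ precision                          # row k is Sigma^{-1} mu_k
    bias = np.log(priors) - 0.5 * np.sum(weights * means, axis=1)
    return weights, bias

def predict(features, weights, bias):
    """Pick the class with the largest linear discriminant score."""
    return np.argmax(features @ weights.T + bias, axis=1)

# Toy usage with two synthetic Gaussian classes standing in for image features.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(loc=-3.0, size=(50, 4)), rng.normal(loc=3.0, size=(50, 4))])
y = np.array([0] * 50 + [1] * 50)
W, b = fit_gda(X, y, num_classes=2)
accuracy = (predict(X, W, b) == y).mean()
```

No gradient steps are involved: the means, covariance, and priors are estimated once from the labeled features, which is what makes the approach training-free.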

Self-training solutions for the ICCV 2023 GeoNet Challenge

Nov 28, 2023

Abstract:GeoNet is a recently proposed domain adaptation benchmark consisting of three challenges (i.e., GeoUniDA, GeoImNet, and GeoPlaces). Each challenge contains images collected from the USA and Asia where there are huge geographical gaps. Our solution adopts a two-stage source-free domain adaptation framework with a Swin Transformer backbone to achieve knowledge transfer from the USA (source) domain to Asia (target) domain. In the first stage, we train a source model using labeled source data with a re-sampling strategy and two types of cross-entropy loss. In the second stage, we generate pseudo labels for unlabeled target data to fine-tune the model. Our method achieves an H-score of 74.56% and ultimately ranks 1st in the GeoUniDA challenge. In GeoImNet and GeoPlaces challenges, our solution also reaches a top-3 accuracy of 64.46% and 51.23%, respectively.

Towards Realistic Unsupervised Fine-tuning with CLIP

Aug 24, 2023

Abstract:The emergence of vision-language models (VLMs), such as CLIP, has spurred a significant research effort towards their application for downstream supervised learning tasks. Although some previous studies have explored the unsupervised fine-tuning of CLIP, they often rely on prior knowledge in the form of class names associated with ground truth labels. In this paper, we delve into a realistic unsupervised fine-tuning scenario by assuming that the unlabeled data might contain out-of-distribution samples from unknown classes. Furthermore, we emphasize the importance of simultaneously enhancing out-of-distribution detection capabilities alongside the recognition of instances associated with predefined class labels. To tackle this problem, we present a simple, efficient, and effective fine-tuning approach called Universal Entropy Optimization (UEO). UEO leverages sample-level confidence to approximately minimize the conditional entropy of confident instances and maximize the marginal entropy of less confident instances. Apart from optimizing the textual prompts, UEO also incorporates optimization of channel-wise affine transformations within the visual branch of CLIP. Through extensive experiments conducted across 15 domains and 4 different types of prior knowledge, we demonstrate that UEO surpasses baseline methods in terms of both generalization and out-of-distribution detection.
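The entropy objective described in this abstract can be sketched as follows. The weighting scheme is an assumption made for illustration: confidence is taken as the maximum predicted probability, confident samples are weighted by it in the conditional entropy term, and less confident samples are weighted by its inverse in the marginal entropy term. The function names are hypothetical and this is not necessarily the exact UEO formulation.

```python
import numpy as np

def entropy(p, eps=1e-12):
    """Shannon entropy along the last axis."""
    return -np.sum(p * np.log(p + eps), axis=-1)

def ueo_style_loss(probs):
    """Confidence-weighted conditional entropy minus inverse-confidence-weighted marginal entropy."""
    conf = probs.max(axis=1)               # sample-level confidence (assumed definition)
    w_conf = conf / conf.sum()             # emphasizes confident samples
    inv = 1.0 / conf
    w_unconf = inv / inv.sum()             # emphasizes less confident samples
    conditional = np.sum(w_conf * entropy(probs))
    marginal = entropy(w_unconf @ probs)   # entropy of the weighted average prediction
    return conditional - marginal

# Rows are per-sample class probabilities, e.g. softmax outputs over class names.
probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]])
loss = ueo_style_loss(probs)
```

Minimizing this quantity sharpens predictions on confident samples while pushing the averaged prediction of uncertain samples toward uniform, which matches the abstract's stated goal of improving recognition and out-of-distribution detection together.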

Improving Zero-Shot Generalization for CLIP with Synthesized Prompts

Jul 14, 2023

Abstract:With the growing interest in pretrained vision-language models like CLIP, recent research has focused on adapting these models to downstream tasks. Despite achieving promising results, most existing methods require labeled data for all classes, which may not hold in real-world applications due to the long tail and Zipf's law. For example, some classes may lack labeled data entirely, such as emerging concepts. To address this problem, we propose a plug-and-play generative approach called SyntHesIzed Prompts (SHIP) to improve existing fine-tuning methods. Specifically, we follow variational autoencoders to introduce a generator that reconstructs the visual features by inputting the synthesized prompts and the corresponding class names to the textual encoder of CLIP. In this manner, we easily obtain the synthesized features for the remaining label-only classes. Thereafter, we fine-tune CLIP with off-the-shelf methods by combining labeled and synthesized features. Extensive experiments on base-to-new generalization, cross-dataset transfer learning, and generalized zero-shot learning demonstrate the superiority of our approach. The code is available at https://github.com/mrflogs/SHIP.

Exploiting Semantic Attributes for Transductive Zero-Shot Learning

Mar 17, 2023

Abstract:Zero-shot learning (ZSL) aims to recognize unseen classes by generalizing the relation between visual features and semantic attributes learned from the seen classes. A recent paradigm called transductive zero-shot learning further leverages unlabeled unseen data during training and has obtained impressive results. These methods always synthesize unseen features from attributes through a generative adversarial network to mitigate the bias towards seen classes. However, they neglect the semantic information in the unlabeled unseen data and thus fail to generate high-fidelity attribute-consistent unseen features. To address this issue, we present a novel transductive ZSL method that produces semantic attributes of the unseen data and imposes them on the generative process. In particular, we first train an attribute decoder that learns the mapping from visual features to semantic attributes. Then, from the attribute decoder, we obtain pseudo-attributes of unlabeled data and integrate them into the generative model, which helps capture the detailed differences within unseen classes so as to synthesize more discriminative features. Experiments on five standard benchmarks show that our method yields state-of-the-art results for zero-shot learning.
