Zhenda Shen

LLM Review: Enhancing Creative Writing via Blind Peer Review Feedback

Jan 12, 2026Abstract:Large Language Models (LLMs) often struggle with creative generation, and multi-agent frameworks that improve reasoning through interaction can paradoxically hinder creativity by inducing content homogenization. We introduce LLM Review, a peer-review-inspired framework implementing Blind Peer Review: agents exchange targeted feedback while revising independently, preserving divergent creative trajectories. To enable rigorous evaluation, we propose SciFi-100, a science fiction writing dataset with a unified framework combining LLM-as-a-judge scoring, human annotation, and rule-based novelty metrics. Experiments demonstrate that LLM Review consistently outperforms multi-agent baselines, and smaller models with our framework can surpass larger single-agent models, suggesting interaction structure may substitute for model scale.

Single-Shot Plug-and-Play Methods for Inverse Problems

Nov 22, 2023

Abstract:The utilisation of Plug-and-Play (PnP) priors in inverse problems has become increasingly prominent in recent years. This preference is based on the mathematical equivalence between the general proximal operator and the regularised denoiser, facilitating the adaptation of various off-the-shelf denoiser priors to a wide range of inverse problems. However, existing PnP models predominantly rely on pre-trained denoisers using large datasets. In this work, we introduce Single-Shot PnP methods (SS-PnP), shifting the focus to solving inverse problems with minimal data. First, we integrate Single-Shot proximal denoisers into iterative methods, enabling training with single instances. Second, we propose implicit neural priors based on a novel function that preserves relevant frequencies to capture fine details while avoiding the issue of vanishing gradients. We demonstrate, through extensive numerical and visual experiments, that our method leads to better approximations.

TRIDENT: The Nonlinear Trilogy for Implicit Neural Representations

Nov 21, 2023

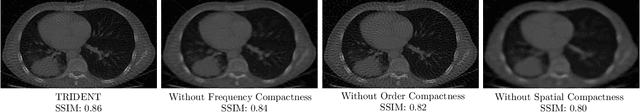

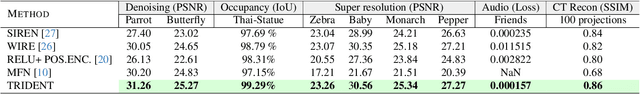

Abstract:Implicit neural representations (INRs) have garnered significant interest recently for their ability to model complex, high-dimensional data without explicit parameterisation. In this work, we introduce TRIDENT, a novel function for implicit neural representations characterised by a trilogy of nonlinearities. Firstly, it is designed to represent high-order features through order compactness. Secondly, TRIDENT efficiently captures frequency information, a feature called frequency compactness. Thirdly, it has the capability to represent signals or images such that most of its energy is concentrated in a limited spatial region, denoting spatial compactness. We demonstrated through extensive experiments on various inverse problems that our proposed function outperforms existing implicit neural representation functions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge