Zhe Jia

A Semantic Decoupling-Based Two-Stage Rainy-Day Attack for Revealing Weather Robustness Deficiencies in Vision-Language Models

Jan 19, 2026

Abstract: Vision-Language Models (VLMs) are trained on image-text pairs collected under canonical visual conditions and achieve strong performance on multimodal tasks. However, their robustness to real-world weather conditions, and the stability of cross-modal semantic alignment under such structured perturbations, remain insufficiently studied. In this paper, we focus on rainy scenarios and introduce the first adversarial framework that exploits realistic weather to attack VLMs, using a two-stage, parameterized perturbation model based on semantic decoupling to analyze rain-induced shifts in decision-making. In Stage 1, we model the global effects of rainfall by applying a low-dimensional global modulation to condition the embedding space and gradually weaken the original semantic decision boundaries. In Stage 2, we introduce structured rain variations by explicitly modeling multi-scale raindrop appearance and rainfall-induced illumination changes, and optimize the resulting non-differentiable weather space to induce stable semantic shifts. Operating in a non-pixel parameter space, our framework generates perturbations that are both physically grounded and interpretable. Experiments across multiple tasks show that even physically plausible, highly constrained weather perturbations can induce substantial semantic misalignment in mainstream VLMs, posing potential safety and reliability risks in real-world deployment. Ablations further confirm that illumination modeling and multi-scale raindrop structures are key drivers of these semantic shifts.

SourceNet: Interpretable Sim-to-Real Inference on Variable-Geometry Sensor Arrays for Earthquake Source Inversion

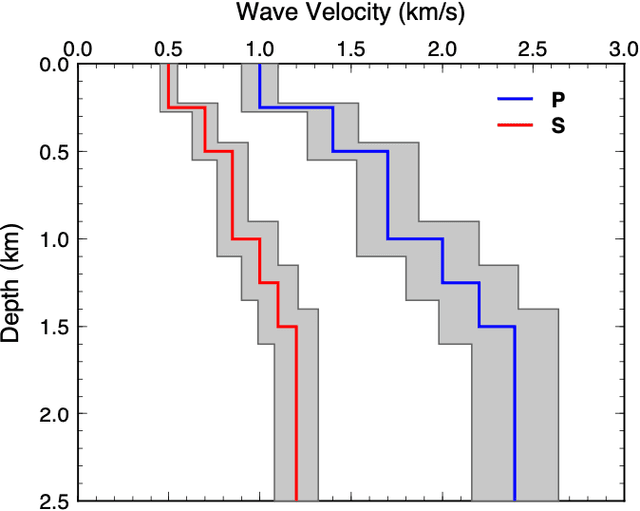

Jan 09, 2026

Abstract: Inferring high-dimensional physical states from sparse, ad-hoc sensor arrays is a fundamental challenge across AI for Science, because such inference is complicated by irregular array geometries and a profound Sim-to-Real gap in physical modeling. Taking earthquake source characterization as a representative challenge, we address limitations in conventional deep learning: CNNs demand fixed grids, while pooling-based architectures (e.g., DeepSets) struggle to capture the relational wave physics. Here, we propose SourceNet, a Transformer-based framework that treats the sensor array as a flexible set to model arbitrary geometries. To bridge the reality gap, we introduce Physics-Structured Domain Randomization (PSDR). Instead of forcing feature alignment, PSDR randomizes the governing physical dynamics by varying velocity structures, propagation effects, and sensor availability, forcing the model to learn robust representations that are invariant to unmodeled environmental heterogeneity. By pre-training on 100,000 synthetic events and fine-tuning on ~2,000 real-world events, SourceNet achieves state-of-the-art precision on held-out real data. This demonstrates exceptional data efficiency, and the model matches classical solvers while enabling real-time processing. Remarkably, interpretability analysis reveals that the model shows scientific-agent-like features: it autonomously discovers geometric information bottlenecks and learns an attention policy that prioritizes sparse sensor placements, effectively recovering principles of optimal experimental design from data alone.
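As a rough illustration of one ingredient named in the abstract, randomized sensor availability on a variable-size sensor set, the following minimal sketch drops a random subset of sensors and pads the result with a validity mask, which is a common way to feed variable-length sets to an attention model. This is not the authors' implementation: the function name `randomize_sensor_availability`, its parameters, and the padding scheme are all assumptions made for illustration, and the velocity-structure and propagation randomization described in the abstract are not covered.

```python
import numpy as np

def randomize_sensor_availability(features, rng, min_keep=3, max_sensors=None):
    """Hypothetical helper: keep a random subset of sensors and pad to a fixed size.

    features    : array of shape (n_sensors, n_features), one row per sensor
    rng         : numpy.random.Generator
    min_keep    : smallest number of sensors to retain (assumed value)
    max_sensors : padded set size; assumed to be >= n_sensors (defaults to n_sensors)

    Returns (padded, mask), where mask[i] is True for real sensors
    and False for padding rows.
    """
    n = features.shape[0]
    max_sensors = n if max_sensors is None else max_sensors

    # Draw how many sensors survive, then which ones (without replacement).
    n_keep = rng.integers(min(min_keep, n), n + 1)
    keep = rng.choice(n, size=n_keep, replace=False)

    # Pad with zeros so every example has the same shape; the mask tells
    # a downstream attention layer which rows to ignore.
    padded = np.zeros((max_sensors, features.shape[1]), dtype=features.dtype)
    padded[:n_keep] = features[keep]
    mask = np.zeros(max_sensors, dtype=bool)
    mask[:n_keep] = True
    return padded, mask
```

Because the kept rows are drawn in random order, the sketch also shuffles the sensors, which is harmless for a model that treats its input as an unordered set.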

Extracting dispersion curves from ambient noise correlations using deep learning

Feb 05, 2020

Abstract: We present a machine-learning approach to classifying the phases of surface wave dispersion curves. Standard FTAN analysis of surface waves observed on an array of receivers is converted to an image in which each pixel is classified as fundamental mode, first overtone, or noise. We use a convolutional neural network (U-net) architecture with a supervised learning objective and incorporate transfer learning. The training is initially performed with synthetic data to learn coarse structure, followed by fine-tuning of the network using approximately 10% of the real data based on human classification. The results show that the machine classification is nearly identical to the human-picked phases. Expanding the method to process multiple images at once did not improve the performance. The developed technique will facilitate automated processing of large dispersion curve datasets.
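The per-pixel classification described in the abstract can be sketched as a post-processing step on network outputs. The example below is a minimal sketch under stated assumptions, not the authors' code: it assumes the network emits one score map per class over a velocity-by-period FTAN image, and the class order in `CLASSES`, the function names, and the rule for picking a curve (strongest amplitude among pixels of a given class in each period column) are all hypothetical.

```python
import numpy as np

# Hypothetical label order; the abstract names the three classes but not their encoding.
CLASSES = ("noise", "fundamental", "first_overtone")

def classify_pixels(logits):
    """Turn per-class scores of shape (n_classes, n_velocity, n_period)
    into an integer label map of shape (n_velocity, n_period)."""
    return np.argmax(logits, axis=0)

def pick_curve(ftan_image, labels, class_id, velocities):
    """For each period column, return the velocity of the strongest pixel
    labelled class_id, or NaN where no pixel in that column has the label.

    ftan_image : (n_velocity, n_period) finite amplitudes
    labels     : (n_velocity, n_period) integer label map
    velocities : (n_velocity,) velocity value for each image row
    """
    in_class = labels == class_id
    # Pixels outside the class can never win the argmax.
    masked = np.where(in_class, ftan_image, -np.inf)
    best_row = np.argmax(masked, axis=0)
    has_pick = np.any(in_class, axis=0)
    return np.where(has_pick, velocities[best_row], np.nan)
```

Calling `pick_curve` once with the index of `"fundamental"` and once with the index of `"first_overtone"` would yield two candidate dispersion curves from a single labelled image.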
