Zeihui Xiong

Towards Communication-efficient Federated Learning via Sparse and Aligned Adaptive Optimization

May 28, 2024

Abstract: Adaptive moment estimation (Adam), as a Stochastic Gradient Descent (SGD) variant, has gained widespread popularity in federated learning (FL) due to its fast convergence. However, federated Adam (FedAdam) algorithms suffer from a threefold increase in uplink communication overhead compared to federated SGD (FedSGD) algorithms, which arises from the need to transmit local model updates together with first and second moment estimates from distributed devices to the centralized server for aggregation. Driven by this issue, we propose a novel sparse FedAdam algorithm called FedAdam-SSM, wherein distributed devices sparsify the updates of local model parameters and moment estimates and subsequently upload the sparse representations to the centralized server. To further reduce the communication overhead, the updates of local model parameters and moment estimates incorporate a shared sparse mask (SSM) into the sparsification process, eliminating the need for three separate sparse masks. Theoretically, we develop an upper bound on the divergence between the local model trained by FedAdam-SSM and the desired model trained by centralized Adam, which is related to sparsification error and imbalanced data distribution. By minimizing this divergence bound, we optimize the SSM to mitigate the learning performance degradation caused by sparsification error. Additionally, we provide convergence bounds for FedAdam-SSM in both convex and non-convex objective function settings, and investigate the impact of local epochs, learning rate and sparsification ratio on the convergence rate of FedAdam-SSM. Experimental results show that FedAdam-SSM outperforms baselines in terms of convergence rate (over 1.1$\times$ faster than the sparse FedAdam baselines) and test accuracy (over 14.5\% ahead of the quantized FedAdam baselines).
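The shared-mask idea in this abstract can be illustrated with a minimal sketch. The abstract does not say how the mask is selected beyond optimizing it against a divergence bound, so the rule used here (keep the `k` largest-magnitude entries of the model update) is an assumption for illustration, not the paper's method. The function names and the cost model in `uplink_cost` (one unit per transmitted value or index) are hypothetical as well.

```python
from typing import List, Tuple


def top_k_mask(values: List[float], k: int) -> List[int]:
    """Return the sorted indices of the k largest-magnitude entries."""
    order = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)
    return sorted(order[:k])


def sparsify_with_shared_mask(
    delta_w: List[float], delta_m: List[float], delta_v: List[float], k: int
) -> Tuple[List[int], List[float], List[float], List[float]]:
    """Sparsify the model update and both moment updates with one mask.

    Assumption: the mask is chosen from the model update alone; the
    paper optimizes the mask differently (via a divergence bound).
    """
    idx = top_k_mask(delta_w, k)

    def pick(vec: List[float]) -> List[float]:
        return [vec[i] for i in idx]

    return idx, pick(delta_w), pick(delta_m), pick(delta_v)


def uplink_cost(k: int, shared: bool) -> int:
    """Count transmitted numbers: 3k values plus k indices per mask sent."""
    masks_sent = 1 if shared else 3
    return 3 * k + masks_sent * k


delta_w = [0.5, -0.1, 0.02, -0.9, 0.3]
delta_m = [0.05, 0.2, -0.01, 0.1, -0.3]
delta_v = [0.01, 0.02, 0.03, 0.04, 0.05]

idx, sw, sm, sv = sparsify_with_shared_mask(delta_w, delta_m, delta_v, k=2)
print(idx, sw, sm, sv)
print(uplink_cost(2, shared=True), uplink_cost(2, shared=False))
```

Under this toy cost model, sending one index set instead of three reduces the uplink payload from `6k` to `4k` numbers per round; real savings depend on how indices and values are encoded.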

Nothing Wasted: Full Contribution Enforcement in Federated Edge Learning

Oct 15, 2021

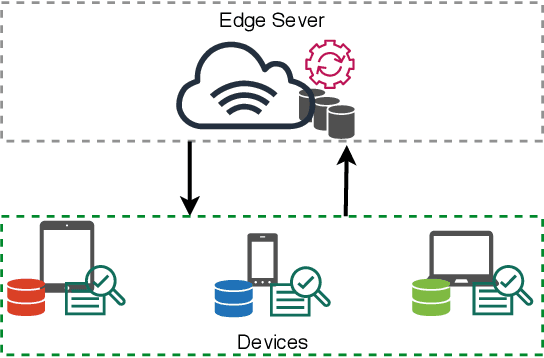

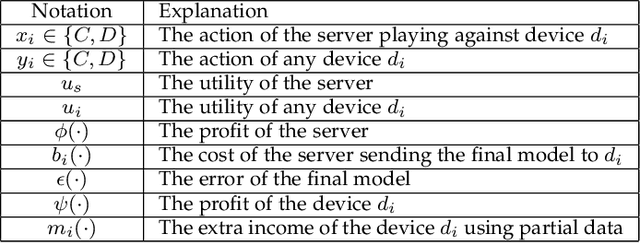

Abstract: The explosive amount of data generated at the network edge makes mobile edge computing an essential technology to support real-time applications, calling for powerful data processing and analysis provided by machine learning (ML) techniques. In particular, federated edge learning (FEL) has become prominent in protecting the privacy of data owners by keeping the data used to train ML models local. Existing studies on FEL either utilize in-process optimization or remove unqualified participants in advance. In this paper, we enhance the collaboration from all edge devices in FEL to guarantee that the ML model is trained using all available local data to accelerate the learning process. To that end, we propose a collective extortion (CE) strategy under the imperfect-information multi-player FEL game, which is proved to be effective in helping the server efficiently elicit the full contribution of all devices without suffering any economic loss. Technically, our proposed CE strategy extends the classical extortion strategy in controlling the proportionate share of expected utilities for a single opponent to the swiftly homogeneous control over a group of players, which further presents an attractive trait of being impartial for all participants. Moreover, the CE strategy enriches the game theory hierarchy, facilitating a wider application scope of the extortion strategy. Both theoretical analysis and experimental evaluations validate the effectiveness and fairness of our proposed scheme.
