Zaidao Wen

Pose Discrepancy Spatial Transformer Based Feature Disentangling for Partial Aspect Angles SAR Target Recognition

Mar 07, 2021

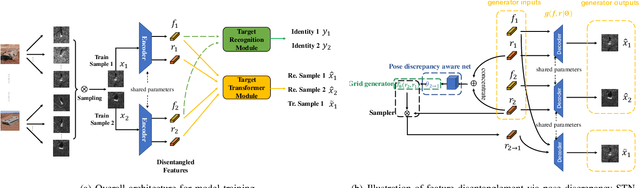

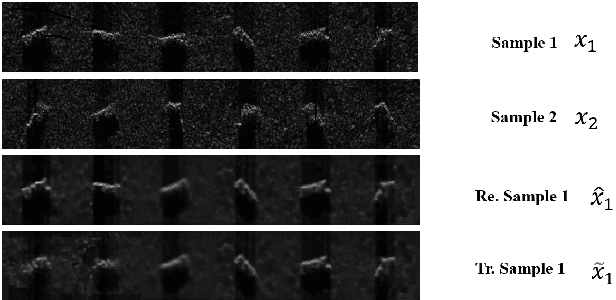

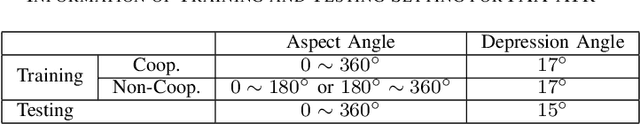

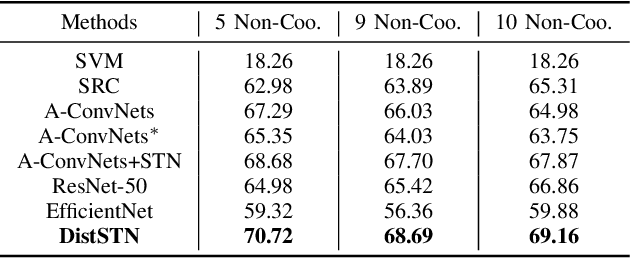

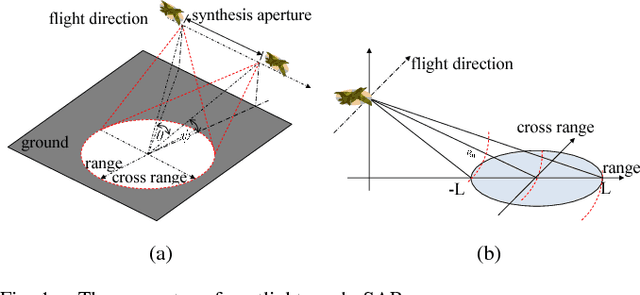

Abstract: This letter presents DistSTN, a novel framework for synthetic aperture radar (SAR) automatic target recognition (ATR). In contrast to conventional SAR ATR algorithms, DistSTN considers a more challenging practical scenario for non-cooperative targets, in which the aspect angles of the training samples are incomplete and limited to a partial range while those of the testing samples are unrestricted. To address this issue, instead of learning pose-invariant features, DistSTN introduces a feature disentangling model that separates the learned pose factors of a SAR target from its identity factors, so that the two can independently control the representation of the target image. To disentangle explainable pose factors, we develop a pose discrepancy spatial transformer module in DistSTN that characterizes the intrinsic transformation between the factors of two different targets with an explicit geometric model. Furthermore, DistSTN develops an amortized inference scheme that enables efficient feature extraction and recognition using an encoder-decoder mechanism. Experimental results on the moving and stationary target acquisition and recognition (MSTAR) benchmark demonstrate the effectiveness of the proposed approach: compared with other ATR algorithms, DistSTN achieves higher recognition accuracy.

Discriminative Transformation Learning for Fuzzy Sparse Subspace Clustering

Jul 18, 2017

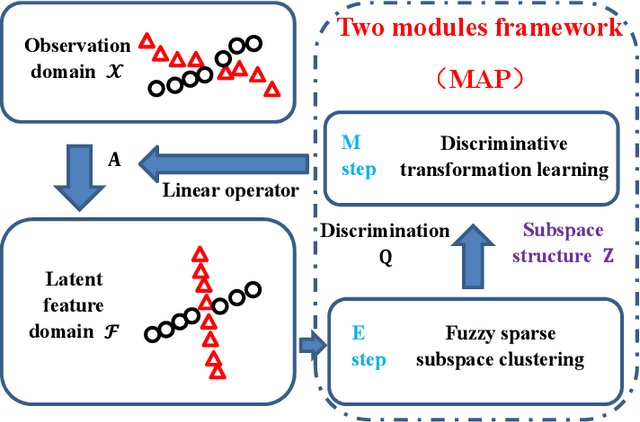

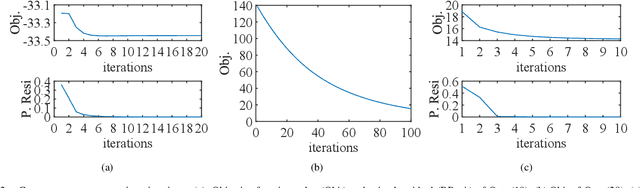

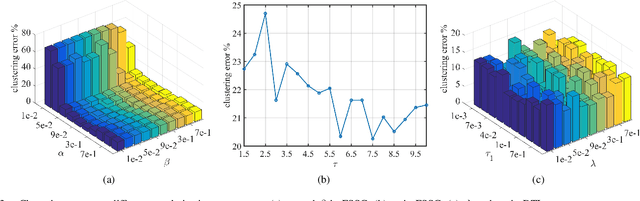

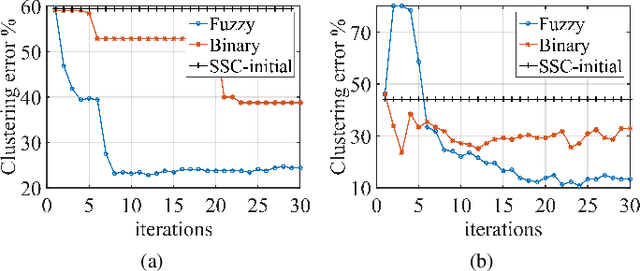

Abstract: This paper develops a novel iterative framework for subspace clustering in a learned discriminative feature domain. The framework consists of two modules: fuzzy sparse subspace clustering and discriminative transformation learning. In the first module, fuzzy latent labels containing discriminative information and latent representations capturing the subspace structure are estimated simultaneously in a feature domain. In the second module, the linear transformation operator that defines the feature domain is then updated to be more discriminative, to preserve the subspace structure, and to be robust to outliers. The two modules are carried out alternately, and both theoretical analysis and empirical evaluation demonstrate the effectiveness and advantages of the framework. In particular, experimental results on three benchmark databases for subspace clustering show that the proposed framework achieves significant improvements over other state-of-the-art approaches in terms of clustering accuracy.

Discriminative Nonlinear Analysis Operator Learning: When Cosparse Model Meets Image Classification

Apr 30, 2017

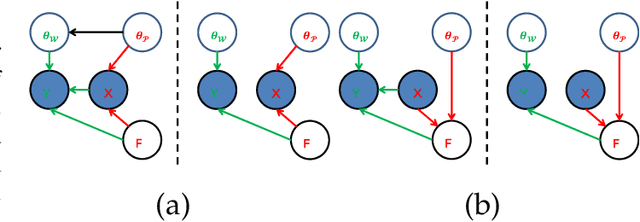

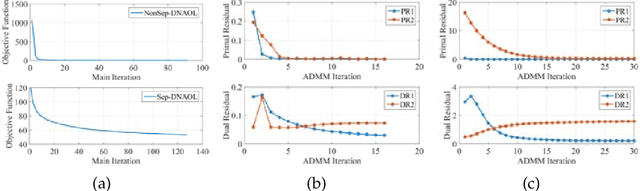

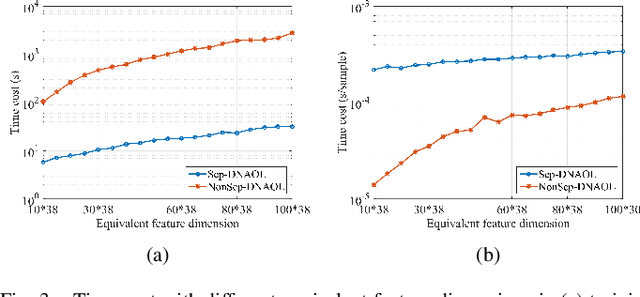

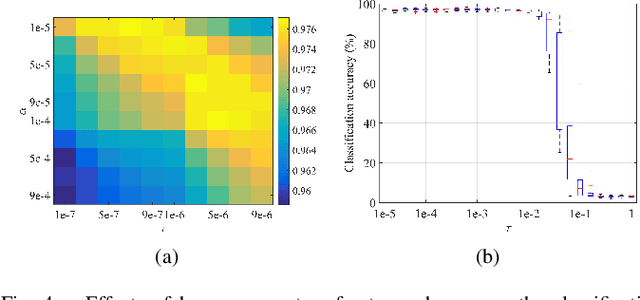

Abstract: Dictionary learning frameworks based on the linear synthesis model have achieved remarkable performance in image classification over the last decade. As a generative feature model, however, the synthesis model suffers from some intrinsic deficiencies. In this paper, we propose a novel parametric nonlinear analysis cosparse model (NACM) with which a unique feature vector can be extracted much more efficiently. Additionally, we provide a deeper analysis showing that NACM is capable of simultaneously learning a task-adapted feature transformation and a regularization that encode our preferences, domain prior knowledge, and task-oriented supervised information into the features. The proposed NACM is then applied to the classification task as a discriminative feature model, yielding a novel discriminative nonlinear analysis operator learning framework (DNAOL). Theoretical analysis and experimental results demonstrate that DNAOL not only achieves better, or at least competitive, classification accuracy compared with state-of-the-art algorithms, but also dramatically reduces the time complexity of both the training and testing phases.
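The efficiency argument in the abstract above can be illustrated with a generic analysis-model feature extractor. The sketch below is not the paper's NACM or DNAOL: it only assumes a fixed analysis operator (the hypothetical matrix `omega`) followed by a soft-threshold nonlinearity, so that the feature vector is obtained in a single pass instead of through the iterative sparse coding that a synthesis dictionary typically requires. The names `analysis_features`, `omega`, and `threshold` are illustrative assumptions.

```python
def soft_threshold(value, threshold):
    """Shrink a scalar toward zero by `threshold` (zero inside the dead zone)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def analysis_features(omega, x, threshold):
    """Return soft-thresholded analysis responses S_threshold(omega @ x).

    Assumption: `omega` is a list of rows of a fixed (hypothetical) analysis
    operator; a learned operator would take its place in practice.
    """
    # One matrix-vector product: no iterative sparse coding is needed.
    responses = [sum(w * xi for w, xi in zip(row, x)) for row in omega]
    # Small responses are set exactly to zero, giving a cosparse feature vector.
    return [soft_threshold(r, threshold) for r in responses]


# Toy operator: two difference rows and one sum row (illustrative only).
omega = [
    [1.0, -1.0, 0.0],
    [0.0, 1.0, -1.0],
    [1.0, 0.0, 1.0],
]
x = [2.0, 2.0, 0.5]
features = analysis_features(omega, x, threshold=0.1)
```

Because the first two entries of `x` are equal, the first difference row responds with zero and the corresponding feature is exactly zero, which is the cosparsity that an analysis model exploits.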

Target Oriented High Resolution SAR Image Formation via Semantic Information Guided Regularizations

Apr 24, 2017

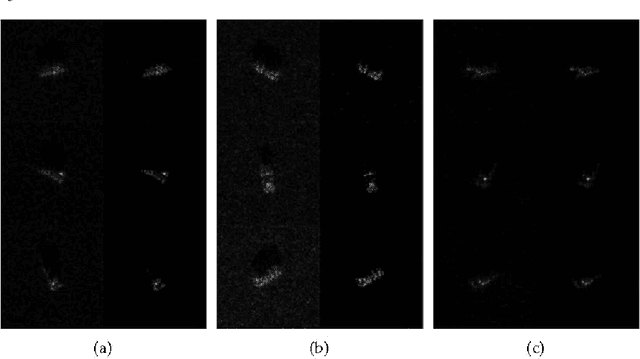

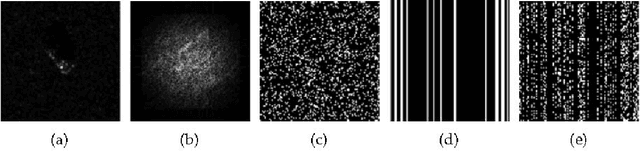

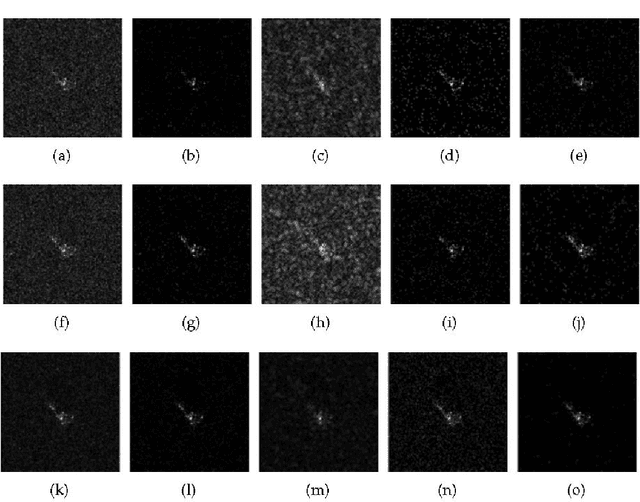

Abstract: The sparsity-regularized synthetic aperture radar (SAR) imaging framework has shown remarkable performance in generating feature-enhanced high-resolution images by means of a sparsity-inducing regularizer that exploits sparsity priors on visual features of the underlying image. However, since simple priors on low-level features are insufficient to describe the different semantic contents of the image, this type of regularizer cannot distinguish between the target of interest and irrelevant background clutter. As a consequence, the features belonging to the target and to the clutter are affected simultaneously in the generated image, regardless of their underlying semantic labels. To address this problem, we propose a novel semantic information guided framework for target-oriented SAR image formation, which aims at enhancing the scatterers of the target of interest while suppressing the background clutter. First, we develop a new semantics-specific regularizer for image formation by exploiting the statistical properties of different semantic categories in a target-scene SAR image. To infer the semantic label of each pixel in an unsupervised way, we further introduce a novel high-level prior-driven regularizer and some semantic causal rules derived from prior knowledge. Finally, our regularized framework for image formation is reduced to a simple iteratively reweighted $\ell_1$ minimization problem that can be conveniently solved by many off-the-shelf solvers. Experimental results demonstrate the effectiveness and superiority of our framework for SAR image formation in terms of target enhancement and clutter suppression, compared with state-of-the-art methods. Additionally, the proposed framework opens a new direction of applying machine learning strategies to image formation, which can benefit subsequent decision-making tasks.
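To make the iteratively reweighted $\ell_1$ step mentioned in the abstract above concrete, here is a minimal sketch of the generic technique on a toy denoising problem. It is not the paper's SAR imaging formulation: it assumes an identity forward operator, so that each weighted $\ell_1$ subproblem has a closed-form soft-threshold solution, and it uses the common reweighting rule $w_i = 1 / (|x_i| + \epsilon)$. The function names and the parameters `lam`, `eps`, and `num_iters` are illustrative assumptions.

```python
def soft_threshold(value, threshold):
    """Proximal operator of threshold * |value|."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def reweighted_l1_denoise(y, lam=0.5, eps=0.1, num_iters=10):
    """Iteratively reweighted l1 for min_x 0.5*||x - y||^2 + lam*sum_i w_i*|x_i|.

    Assumption: identity forward operator, so every weighted subproblem is
    solved exactly by elementwise soft thresholding.
    """
    x = list(y)  # start from the observation itself
    for _ in range(num_iters):
        # Large entries get small weights (weak shrinkage); small entries get
        # large weights (strong shrinkage).
        weights = [1.0 / (abs(xi) + eps) for xi in x]
        # Solve the weighted l1 subproblem in closed form.
        x = [soft_threshold(yi, lam * wi) for yi, wi in zip(y, weights)]
    return x


# Two strong entries standing in for target scatterers, three weak ones
# standing in for clutter (toy values).
y = [5.0, 0.3, -4.0, -0.2, 0.1]
x_hat = reweighted_l1_denoise(y)
```

In this toy run the weak entries are driven exactly to zero while the strong entries are only slightly shrunk, which is the qualitative behavior that reweighting is meant to provide. With a non-identity forward operator, each subproblem would instead be handed to a weighted $\ell_1$ solver.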
