Yuxi Cai

Cross-Layer Retrospective Retrieving via Layer Attention

Feb 28, 2023

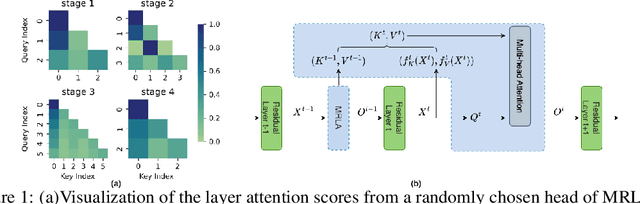

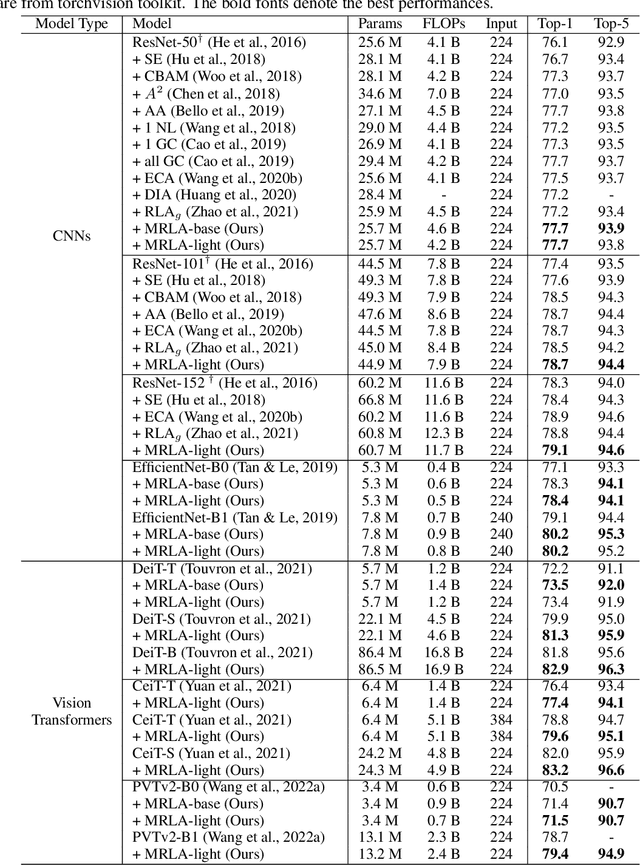

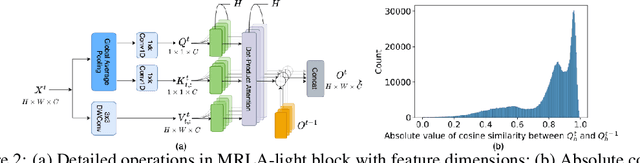

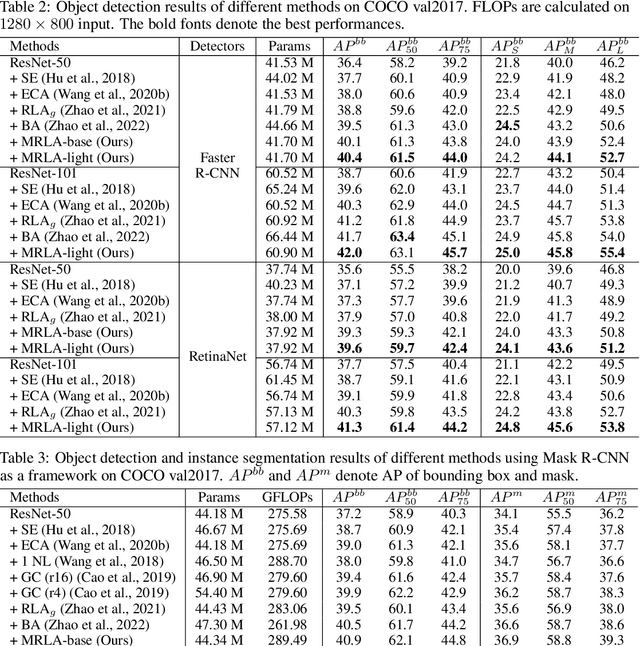

Abstract: A growing body of evidence shows that strengthening layer interactions can enhance the representation power of a deep neural network, while self-attention excels at learning interdependencies by retrieving query-activated information. Motivated by this, we devise a cross-layer attention mechanism, called multi-head recurrent layer attention (MRLA), that sends a query representation of the current layer to all previous layers to retrieve query-related information from different levels of receptive fields. A lightweight version of MRLA is also proposed to reduce the quadratic computation cost. The proposed layer attention mechanism can enrich the representation power of many state-of-the-art vision networks, including CNNs and vision transformers. Its effectiveness has been extensively evaluated in image classification, object detection and instance segmentation tasks, where improvements are consistently observed. For example, MRLA improves Top-1 accuracy on ResNet-50 by 1.6% while introducing only 0.16M parameters and 0.07B FLOPs. Surprisingly, it boosts box AP and mask AP by a large margin of 3-4% in dense prediction tasks. Our code is available at https://github.com/joyfang1106/MRLA.
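The retrieval idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation (see the linked repository for that). It assumes a single head, identity query/key/value projections, one pooled feature vector per layer, and that each layer attends to itself as well as to earlier layers. The function names `layer_attention` and `retrospective_outputs` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layer_attention(query, keys, values):
    """Scaled dot-product attention of one query over a list of layers.

    Assumption: query, keys and values are plain feature vectors
    (identity projections), not learned projections as in a real model.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    out = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return out, weights


def retrospective_outputs(layer_features):
    """For each layer t, retrieve information from layers 0..t.

    Layer t computes t + 1 scores, so T layers need T * (T + 1) / 2
    scores in total: the quadratic cost the abstract refers to.
    """
    outputs = []
    for t in range(len(layer_features)):
        history = layer_features[: t + 1]
        out, _ = layer_attention(layer_features[t], history, history)
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy per-layer features
    for t, out in enumerate(retrospective_outputs(features)):
        print(t, out)
```

In this toy setting the first layer has only itself to attend to, so its output equals its own feature vector. Later layers return a weighted mix of all features seen so far.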

Lightweight Spatial-Channel Adaptive Coordination of Multilevel Refinement Enhancement Network for Image Reconstruction

Sep 17, 2022

Abstract: Benefiting from the vigorous development of deep learning, many CNN-based image super-resolution methods have emerged and achieved better results than traditional algorithms. However, most algorithms struggle to adaptively adjust spatial regions and channel features at the same time, let alone exchange information between them. In addition, the exchange of information between attention modules has received even less attention from researchers. To solve these problems, we put forward a lightweight spatial-channel adaptive coordination of multilevel refinement enhancement network (MREN). Specifically, we construct a spatial-channel adaptive coordination block, which enables the network to learn the spatial region and channel feature information of interest under different receptive fields. In addition, information at corresponding feature processing levels is exchanged between the spatial part and the channel part through skip connections to coordinate the two. We establish a communication bridge between attention modules through a simple linear combination operation, so as to guide the network more accurately and continuously toward the information of interest. Extensive experiments on several standard test sets show that our MREN achieves superior performance over other advanced algorithms with a very small number of parameters and very low computational complexity.

Image Reconstruction of Multi Branch Feature Multiplexing Fusion Network with Mixed Multi-layer Attention

May 27, 2022

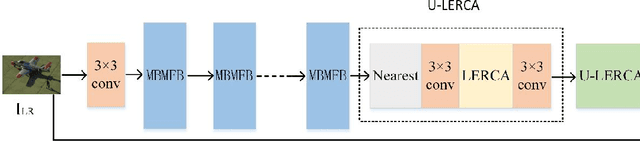

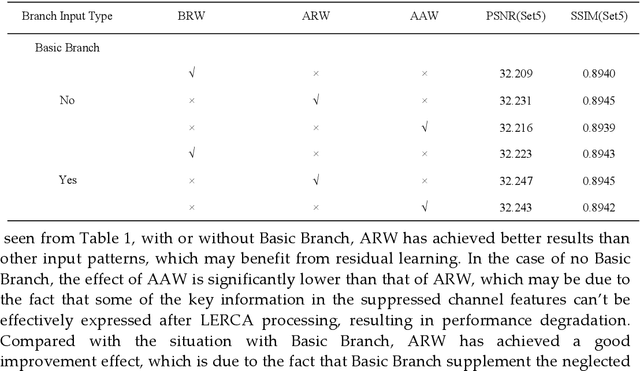

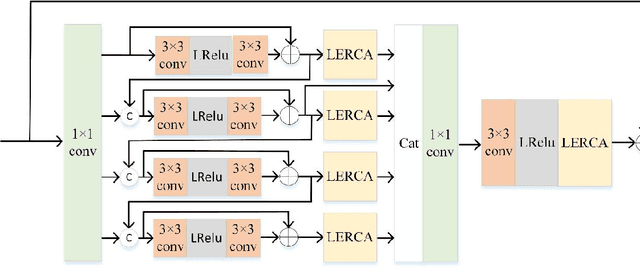

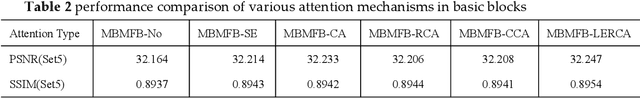

Abstract: Image super-resolution reconstruction achieves better results than traditional methods thanks to the powerful nonlinear representation ability of convolutional neural networks. However, existing algorithms still have problems, such as insufficient utilization of phased features, neglect of the importance of early phased feature fusion for network performance, and the inability of the network to pay more attention to high-frequency information during reconstruction. To solve these problems, we propose a multi-branch feature multiplexing fusion network with mixed multi-layer attention (MBMFN), which realizes the multiple utilization of features and the multistage fusion of different levels of features. To further improve the network's performance, we propose a lightweight enhanced residual channel attention (LERCA), which not only effectively avoids the loss of channel information but also makes the network pay more attention to key channel information and benefit from it. Finally, the attention mechanism is introduced into the reconstruction process to strengthen the restoration of edge texture and other details. Extensive experiments on several benchmark sets show that, compared with other advanced reconstruction algorithms, our algorithm produces highly competitive objective indicators and restores more image detail and texture information.

DL-AMP and DBTO: An Automatic Merge Planning and Trajectory Optimization and Its Application in Autonomous Driving

Jul 30, 2021

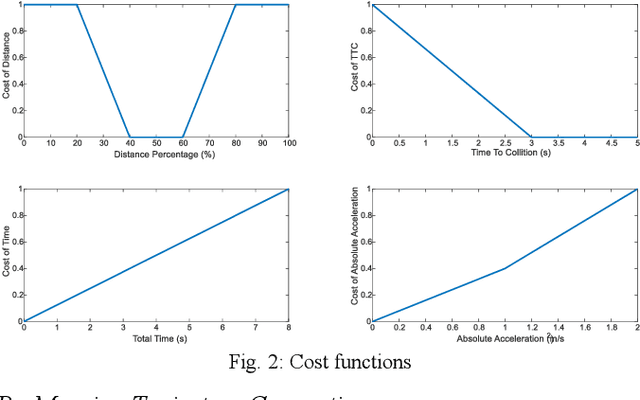

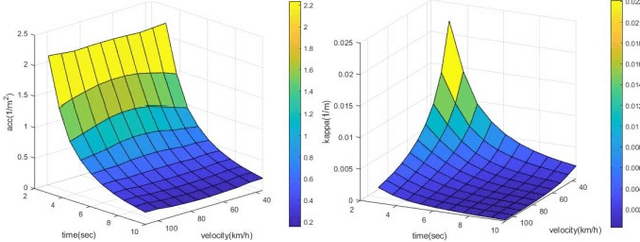

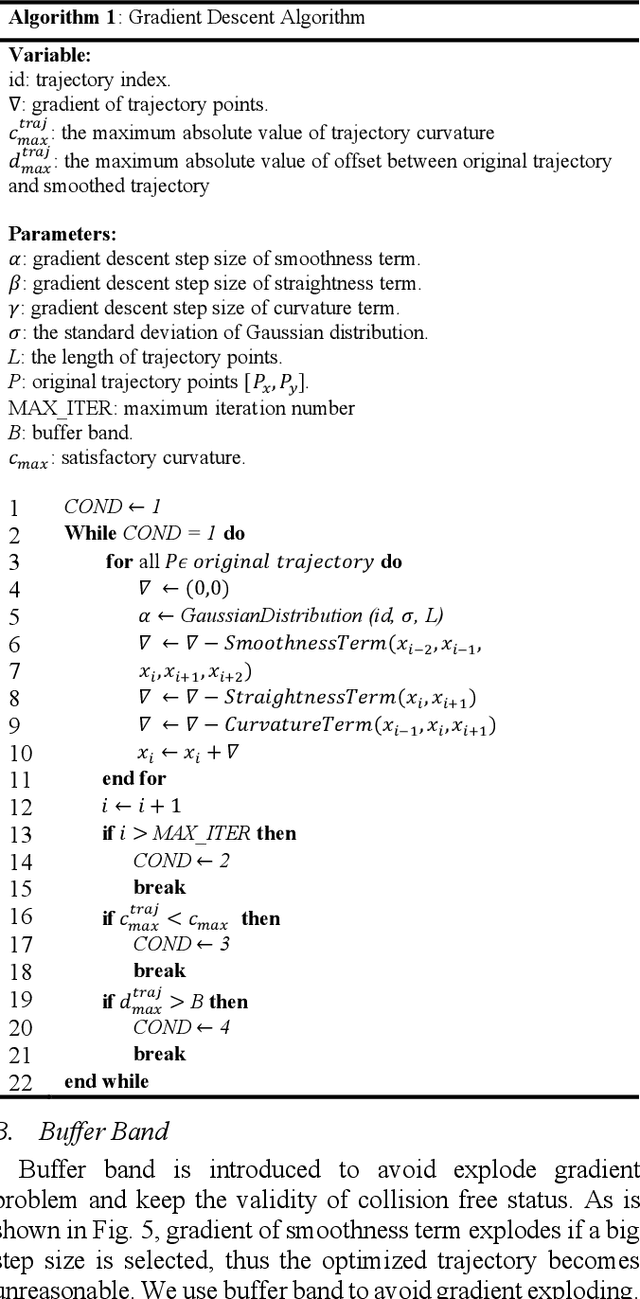

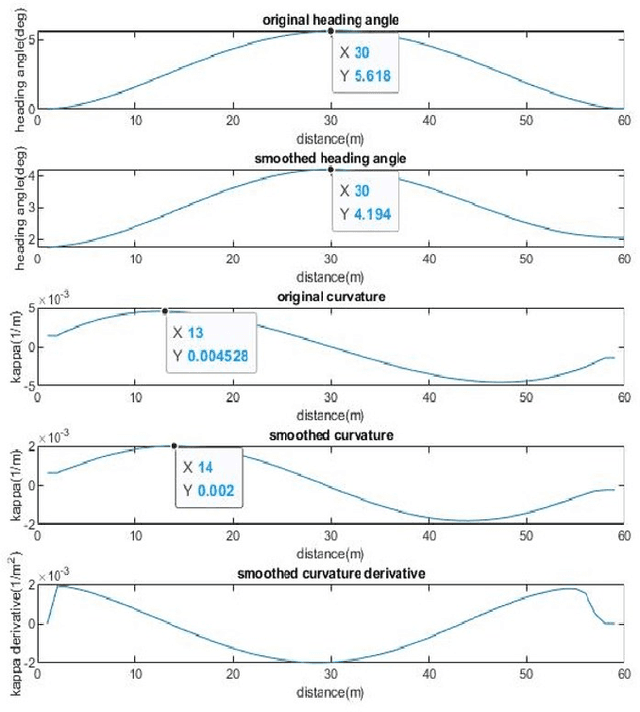

Abstract: This paper presents an automatic merging algorithm for autonomous driving vehicles, which decouples the specific motion planning problem into Dual-Layer Automatic Merge Planning (DL-AMP) and Descent-Based Trajectory Optimization (DBTO). This work leads to great improvements in finding the best merge opportunity, lateral and longitudinal merge planning and control, trajectory postprocessing and driving comfort.
