Yuta Itoh

Telextiles: End-to-end Remote Transmission of Fabric Tactile Sensation

May 06, 2024

Abstract: The tactile sensation of textiles is critical in determining the comfort of clothing. For remote use, such as online shopping, users cannot physically touch the textile of clothes, making it difficult to evaluate its tactile sensation. Tactile sensing and actuation devices are required to transmit the tactile sensation of textiles. The sensing device needs to recognize different garments, even with hand-held sensors. In addition, the existing actuation device can only present a limited number of known patterns and cannot transmit unknown tactile sensations of textiles. To address these issues, we propose Telextiles, an interface that can remotely transmit tactile sensations of textiles by creating a latent space that reflects the proximity of textiles through contrastive self-supervised learning. We confirm that textiles with similar tactile features are located close to each other in the latent space through a two-dimensional plot. We then compress the latent features for known textile samples into the 1D distance and apply the 16 textile samples to the rollers in the order of the distance. The roller is rotated to select the textile with the closest feature if an unknown textile is detected.

* 10 pages, 8 figures, Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology
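
The selection step described in the abstract (compress latent features to one dimension, order the 16 samples along the roller, rotate to the closest one) can be illustrated with a minimal sketch. The abstract does not say how the 1D compression is done, so the projection onto the first principal axis used here is an assumption, as are the random 8-dimensional features and all function names (`fit_1d_projection`, `project`, `select_slot`), which are hypothetical.

```python
import numpy as np

def fit_1d_projection(known_features):
    """Return the mean and first principal axis of the known latent features.

    Assumption: PCA stands in for the unspecified 1D compression.
    """
    mean = known_features.mean(axis=0)
    centered = known_features - mean
    # Rows of vt are principal axes, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[0]

def project(features, mean, axis):
    """Map latent feature(s) to a scalar coordinate along the principal axis."""
    return (features - mean) @ axis

def select_slot(unknown_feature, known_features, mean, axis):
    """Return (roller slot, sample index) of the known textile closest in 1D."""
    coords = project(known_features, mean, axis)
    order = np.argsort(coords)            # roller layout: samples sorted by 1D coordinate
    target = project(unknown_feature, mean, axis)
    slot = int(np.argmin(np.abs(coords[order] - target)))
    return slot, int(order[slot])

# Hypothetical data: 16 known textiles with 8-dimensional latent features.
rng = np.random.default_rng(0)
known = rng.normal(size=(16, 8))
mean, axis = fit_1d_projection(known)
order = np.argsort(project(known, mean, axis))

# A query identical to known sample 5 should map back to sample 5.
slot, sample = select_slot(known[5], known, mean, axis)
print("roller order:", order.tolist())
print("selected slot:", slot, "sample:", sample)
```

Sorting the samples by their 1D coordinate means that neighbouring roller slots hold textiles with similar features, so a small error in the query coordinate moves the roller to an adjacent, similar sample rather than an unrelated one.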

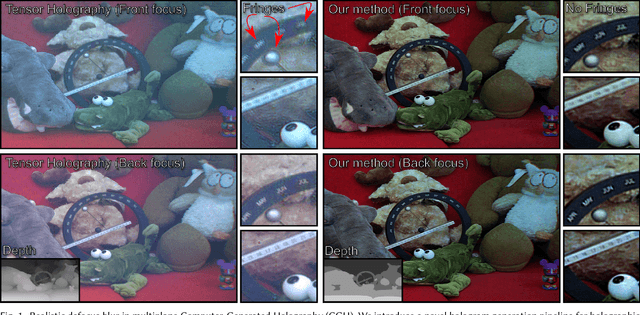

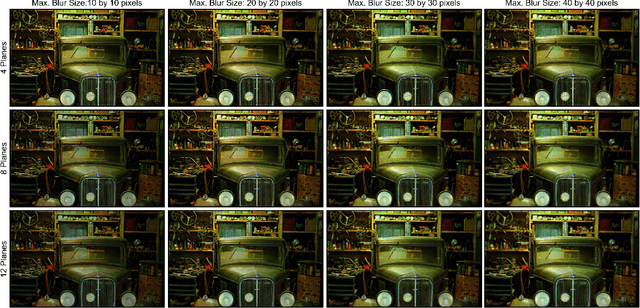

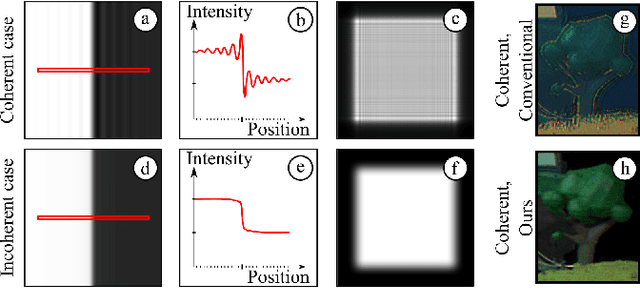

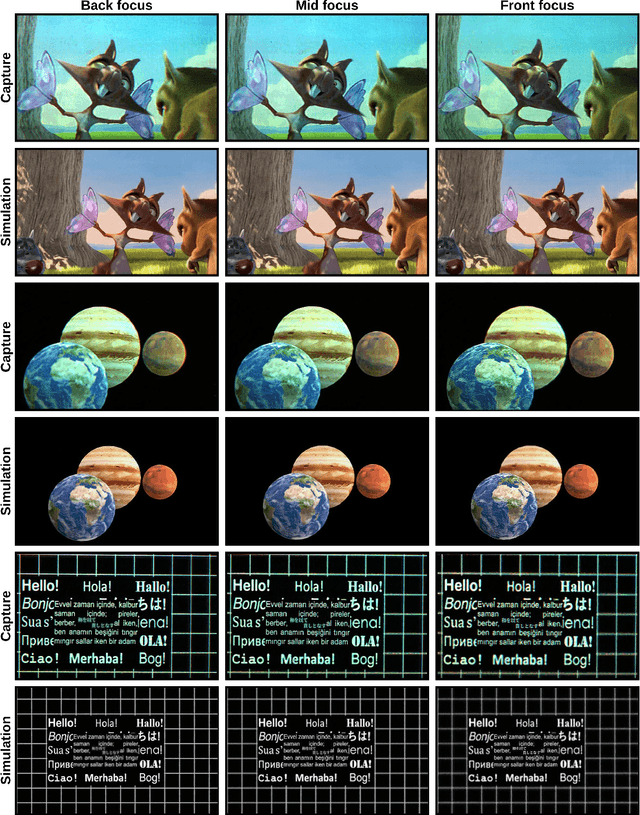

Realistic Defocus Blur for Multiplane Computer-Generated Holography

May 14, 2022

Abstract: This paper introduces a new multiplane CGH computation method to reconstruct artefact-free high-quality holograms with natural-looking defocus blur. Our method introduces a new targeting scheme and a new loss function. While the targeting scheme accounts for defocused parts of the scene at each depth plane, the new loss function analyzes focused and defocused parts separately in reconstructed images. Our method supports phase-only CGH calculations using various iterative (e.g., Gerchberg-Saxton, Gradient Descent) and non-iterative (e.g., Double Phase) CGH techniques. We achieve our best image quality using a modified gradient descent-based optimization recipe where we introduce a constraint inspired by the double phase method. We validate our method experimentally using our proof-of-concept holographic display, comparing various algorithms, including multi-depth scenes with sparse and dense contents.
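
For readers unfamiliar with the iterative phase-only techniques named in the abstract, the following is a minimal sketch of the classical single-plane Gerchberg-Saxton loop. It is not the paper's multiplane method, targeting scheme, or loss function. It assumes a simple Fourier (far-field) relation between the hologram and image planes, and the function name `gerchberg_saxton` and the test target are hypothetical.

```python
import numpy as np

def gerchberg_saxton(target_amplitude, iterations=50, seed=0):
    """Classical Gerchberg-Saxton for a phase-only Fourier hologram.

    Assumption: hologram and image planes are related by a 2D FFT.
    Returns the hologram phase in radians, within [-pi, pi].
    """
    rng = np.random.default_rng(seed)
    phase = rng.uniform(-np.pi, np.pi, size=target_amplitude.shape)
    for _ in range(iterations):
        # Hologram-plane constraint: unit amplitude, keep only the phase.
        hologram_field = np.exp(1j * phase)
        image_field = np.fft.fft2(hologram_field, norm="ortho")
        # Image-plane constraint: impose the target amplitude, keep the phase.
        image_field = target_amplitude * np.exp(1j * np.angle(image_field))
        phase = np.angle(np.fft.ifft2(image_field, norm="ortho"))
    return phase

# Hypothetical target: a bright square on a dark background.
target = np.zeros((64, 64))
target[24:40, 24:40] = 1.0
hologram_phase = gerchberg_saxton(target)
reconstruction = np.abs(np.fft.fft2(np.exp(1j * hologram_phase), norm="ortho"))
print(hologram_phase.shape, reconstruction.shape)
```

The loop alternates between the two constraints (phase-only at the hologram, target amplitude at the image), which is the shared structure that multiplane variants extend with a propagation model per depth plane.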

A Survey of Calibration Methods for Optical See-Through Head-Mounted Displays

Sep 13, 2017

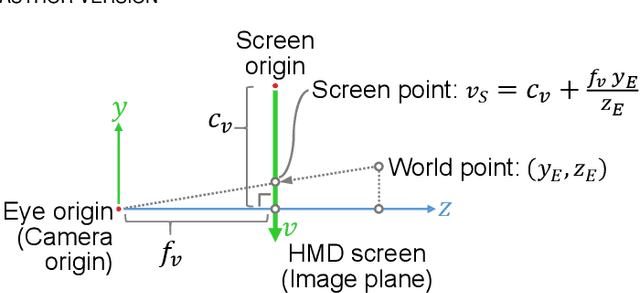

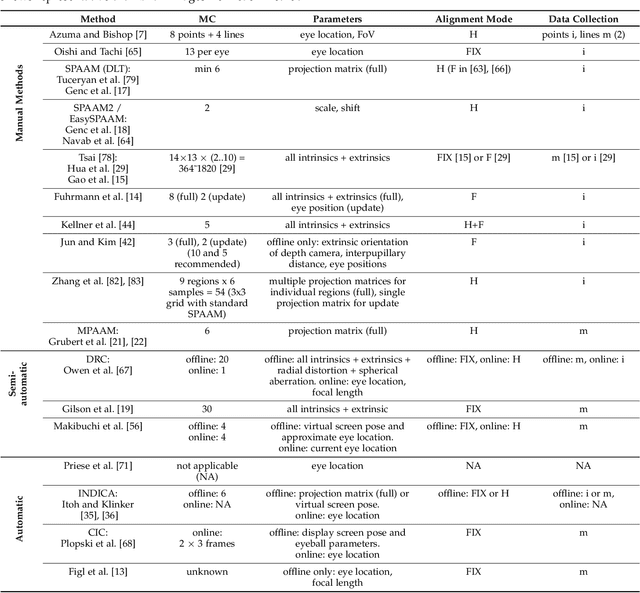

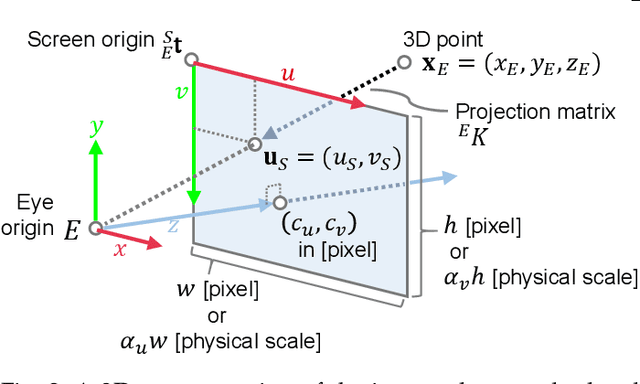

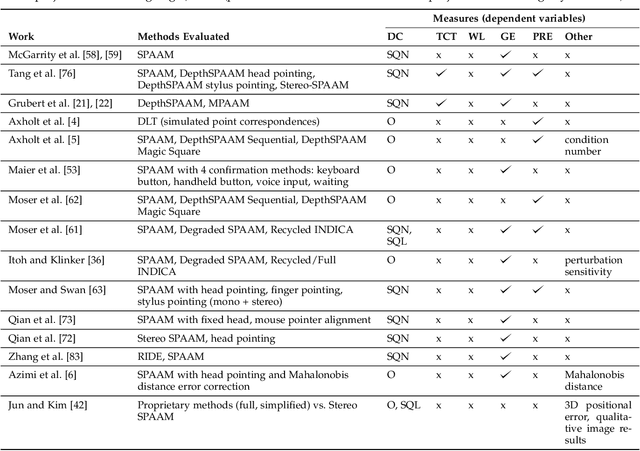

Abstract: Optical see-through head-mounted displays (OST HMDs) are a major output medium for Augmented Reality and have seen significant growth in popularity and usage among the general public due to the growing release of consumer-oriented models, such as the Microsoft HoloLens. Unlike Virtual Reality headsets, OST HMDs inherently support the addition of computer-generated graphics directly into the light path between a user's eyes and their view of the physical world. As with most Augmented and Virtual Reality systems, the physical position of an OST HMD is typically determined by an external or embedded 6-Degree-of-Freedom tracking system. However, in order to properly render virtual objects that are perceived as spatially aligned with the physical environment, it is also necessary to accurately measure the position of the user's eyes within the tracking system's coordinate frame. For over 20 years, researchers have proposed various calibration methods to determine this needed eye position. However, to date, there has not been a comprehensive overview of these procedures and their requirements. Hence, this paper surveys the field of calibration methods for OST HMDs. Specifically, it provides insights into the fundamentals of calibration techniques, and presents an overview of both manual and automatic approaches, as well as evaluation methods and metrics. Finally, it also identifies opportunities for future research.
