Hakan Urey

AutoColor: Learned Light Power Control for Multi-Color Holograms

May 02, 2023

Abstract: Multi-color holograms rely on simultaneous illumination from multiple light sources. These multi-color holograms could utilize light sources better than conventional single-color holograms and can improve the dynamic range of holographic displays. In this letter, we introduce AutoColor, the first learned method for estimating the optimal light source powers required for illuminating multi-color holograms. For this purpose, we establish the first multi-color hologram dataset using synthetic images and their depth information. We generate these synthetic images using a trending pipeline combining generative, large language, and monocular depth estimation models. Finally, we train our learned model using our dataset and experimentally demonstrate that AutoColor significantly decreases the number of steps required to optimize multi-color holograms from $>1000$ to $70$ iteration steps without compromising image quality.

Dynamic accommodation measurement using Purkinje reflections and ML algorithms

Apr 11, 2023

Abstract: We developed a prototype device for dynamic gaze and accommodation measurements based on 4 Purkinje reflections (PR) suitable for use in AR and ophthalmology applications. PR1&2 and PR3&4 are used for accurate gaze and accommodation measurements, respectively. Our eye model was developed in ZEMAX and matches the experiments well. Our model predicts the accommodation from 4 diopters to 1 diopter with better than 0.25D accuracy. We performed repeatability tests and obtained accurate gaze and accommodation estimations from subjects. We are generating a large synthetic data set using physically accurate models and machine learning.

Wearable multi-color RAPD screening device

Apr 11, 2023

Abstract: In this work, we developed a wearable, head-mounted device that automatically calculates the precise Relative Afferent Pupillary Defect (RAPD) value of a patient. The device consists of two RGB LEDs, two infrared cameras, and one microcontroller. In the RAPD test, parameters such as LED on-off durations, brightness level, and color of the light can be controlled by the user. Upon data acquisition, a computational unit processes the data, calculates the RAPD score, and visualizes the test results with a user-friendly interface. Multiprocessing methods are used in the GUI to optimize the processing pipeline. We have shown that our head-worn instrument is easy to use, fast, and suitable for early diagnostics and screening purposes for various neurological conditions such as RAPD, glaucoma, asymmetric glaucoma, and anisocoria.

Artificial Eye Model and Holographic Display Based IOL Simulator

Apr 02, 2023

Abstract: Cataract is a common ophthalmic disease in which a cloudy area forms in the lens of the eye, requiring surgical removal and replacement of the eye lens. Careful selection of the intraocular lens (IOL) is critical for the post-surgery satisfaction of the patient. Although there are various types of IOLs on the market with different properties, it is challenging for the patient to imagine how they will perceive the world after the surgery. We propose a novel holographic vision simulator which utilizes non-cataractous regions of the eye lens to allow cataract patients to experience post-operative visual acuity before surgery. Computer-generated holography display technology makes it possible to shape and steer the light beam through the relatively clear areas of the patient's lens. Another challenge for cataract surgeries is to match the right patient with the right IOL. To evaluate various IOLs, we developed an artificial human eye composed of a scleral lens, a glass retina, an iris, and a replaceable IOL holder. Next, we tested different IOLs (monofocal and multifocal) by capturing real-world scenes to demonstrate visual artifacts. Then, the artificial eye was implemented in the benchtop holographic simulator to evaluate various IOLs using different light sources and holographic contents.

* 6 pages, 6 figures, conference poster manuscript

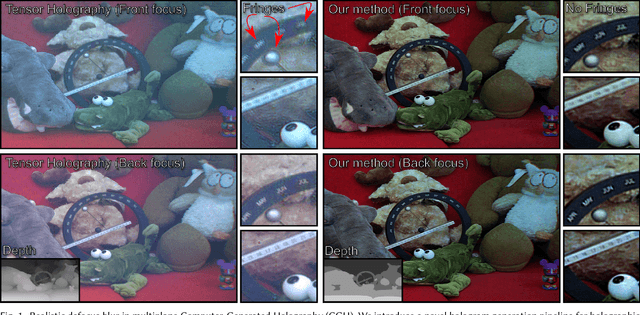

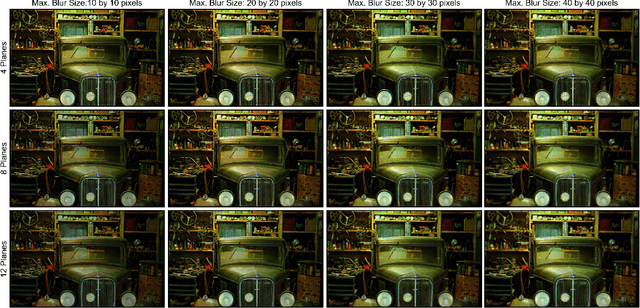

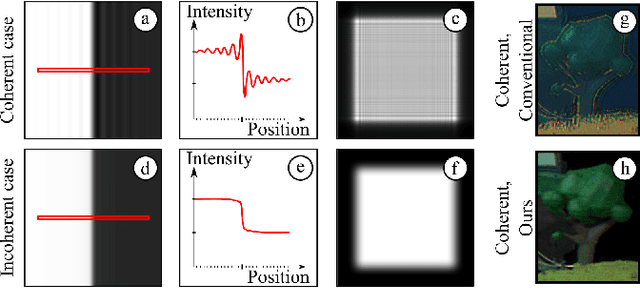

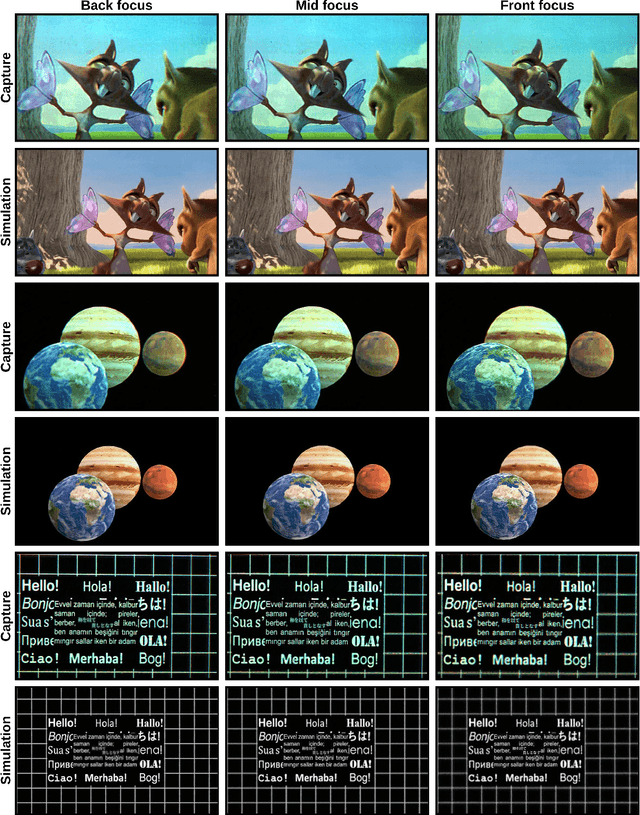

Realistic Defocus Blur for Multiplane Computer-Generated Holography

May 14, 2022

Abstract: This paper introduces a new multiplane CGH computation method to reconstruct artefact-free high-quality holograms with natural-looking defocus blur. Our method introduces a new targeting scheme and a new loss function. While the targeting scheme accounts for defocused parts of the scene at each depth plane, the new loss function analyzes focused and defocused parts separately in reconstructed images. Our method supports phase-only CGH calculations using various iterative (e.g., Gerchberg-Saxton, Gradient Descent) and non-iterative (e.g., Double Phase) CGH techniques. We achieve our best image quality using a modified gradient descent-based optimization recipe where we introduce a constraint inspired by the double phase method. We validate our method experimentally using our proof-of-concept holographic display, comparing various algorithms, including multi-depth scenes with sparse and dense contents.
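For readers unfamiliar with the non-iterative double phase technique named in the abstract, the following is a minimal sketch of the textbook encoding, not the paper's modified optimization recipe. It relies on the identity $A e^{i\phi} = \tfrac{1}{2}\left(e^{i(\phi + \arccos A)} + e^{i(\phi - \arccos A)}\right)$ for a normalized amplitude $0 \le A \le 1$. The function name `double_phase`, the list-of-rows field layout, and the checkerboard interleaving order are assumptions made for illustration.

```python
import cmath
import math


def double_phase(field):
    """Encode a complex 2D field (list of rows) as a phase-only checkerboard.

    Hypothetical helper for illustration; not taken from the paper.
    """
    # Normalize amplitudes so the largest one maps to 1.
    peak = max(abs(value) for row in field for value in row)
    if peak == 0:
        raise ValueError("field must contain at least one non-zero sample")

    hologram = []
    for y, row in enumerate(field):
        out_row = []
        for x, value in enumerate(row):
            amplitude = min(abs(value) / peak, 1.0)
            phase = cmath.phase(value)
            offset = math.acos(amplitude)
            # Assumed layout: even checkerboard sites take phase + offset,
            # odd sites take phase - offset.
            sign = 1 if (x + y) % 2 == 0 else -1
            out_row.append(phase + sign * offset)
        hologram.append(out_row)
    return hologram


# Usage: a 1x2 field with amplitudes 1.0 and 0.5.
example = double_phase([[1.0 + 0j, 0.5j]])
```

Averaging the two phase-only values that a sample would receive at neighbouring sites recovers the original normalized complex value, which is why the checkerboard approximates a complex-valued hologram on a phase-only modulator.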

Learned holographic light transport

Aug 01, 2021

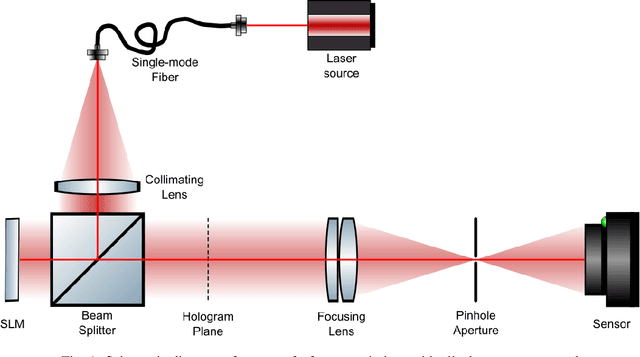

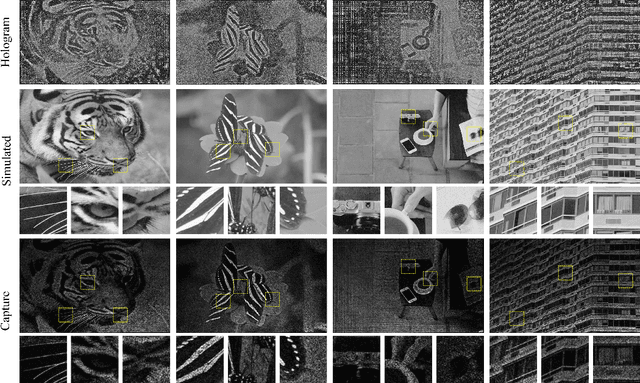

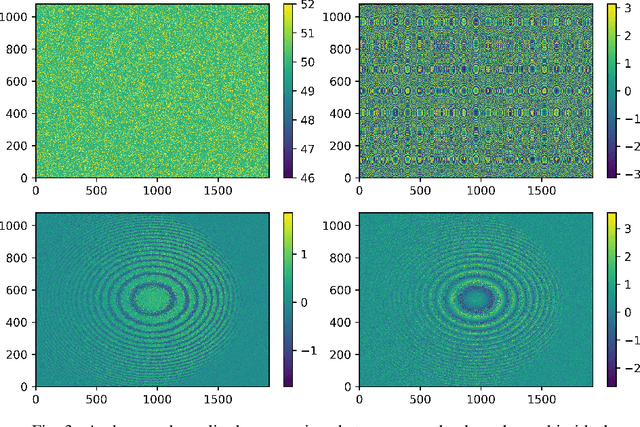

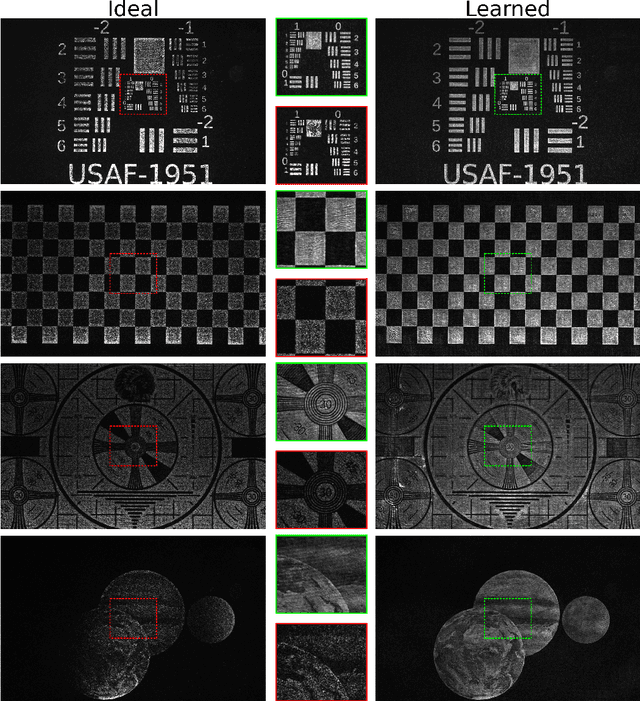

Abstract: Computer-Generated Holography (CGH) algorithms often fall short in matching simulations with results from a physical holographic display. Our work addresses this mismatch by learning the holographic light transport in holographic displays. Using a camera and a holographic display, we capture the image reconstructions of optimized holograms that rely on ideal simulations to generate a dataset. Inspired by the ideal simulations, we learn a complex-valued convolution kernel that can propagate given holograms to captured photographs in our dataset. Our method can dramatically improve simulation accuracy and image quality in holographic displays while paving the way for physically informed learning approaches.
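As a rough illustration of what propagating a hologram with a complex-valued convolution kernel can look like, the sketch below applies a given kernel to a phase-only hologram and returns the resulting intensity. It covers only the forward pass; fitting the kernel to captured photographs is not shown. The function name `propagate`, the circular boundary handling, and the use of squared magnitude as the image are assumptions, not details confirmed by the abstract.

```python
import cmath


def propagate(phase, kernel):
    """Circularly convolve exp(i * phase) with a complex kernel.

    Hypothetical forward model for illustration: returns the intensity
    (squared magnitude) of the convolved field as a list of rows.
    """
    rows, cols = len(phase), len(phase[0])
    # Phase-only hologram: unit amplitude, given phase at every pixel.
    field = [[cmath.exp(1j * p) for p in row] for row in phase]

    intensity = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            total = 0j
            for ky, kernel_row in enumerate(kernel):
                for kx, weight in enumerate(kernel_row):
                    # Wrap indices so the convolution is circular (assumed).
                    total += weight * field[(y - ky) % rows][(x - kx) % cols]
            out_row.append(abs(total) ** 2)
        intensity.append(out_row)
    return intensity


# Usage: a 1x1 identity kernel leaves a unit-amplitude field unchanged,
# so every pixel of the returned intensity is 1.
image = propagate([[0.0, 1.0], [2.0, 3.0]], [[1 + 0j]])
```

In a learned setting, the kernel entries would be the trainable parameters, adjusted so that the predicted intensity matches the camera captures.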
