Koray Kavaklı

AutoColor: Learned Light Power Control for Multi-Color Holograms

May 02, 2023

Abstract: Multi-color holograms rely on simultaneous illumination from multiple light sources. These multi-color holograms could utilize light sources better than conventional single-color holograms and can improve the dynamic range of holographic displays. In this letter, we introduce AutoColor, the first learned method for estimating the optimal light source powers required for illuminating multi-color holograms. For this purpose, we establish the first multi-color hologram dataset using synthetic images and their depth information. We generate these synthetic images using a trending pipeline combining generative, large language, and monocular depth estimation models. Finally, we train our learned model using our dataset and experimentally demonstrate that AutoColor significantly decreases the number of steps required to optimize multi-color holograms from $>1000$ to $70$ iteration steps without compromising image quality.

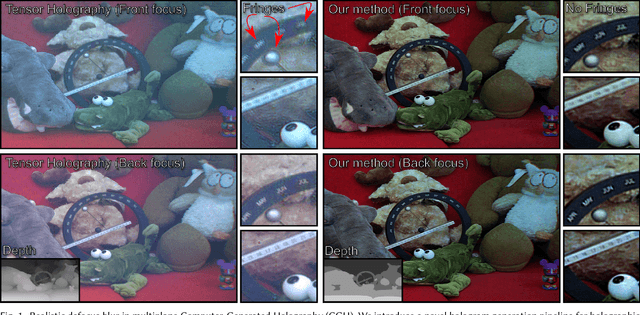

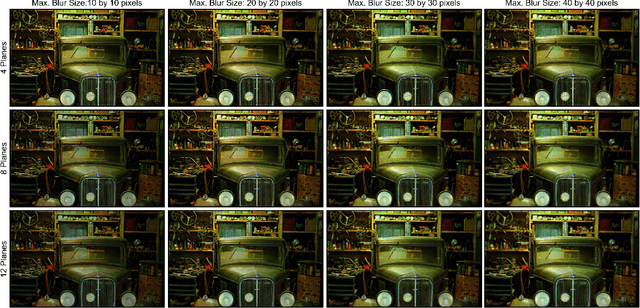

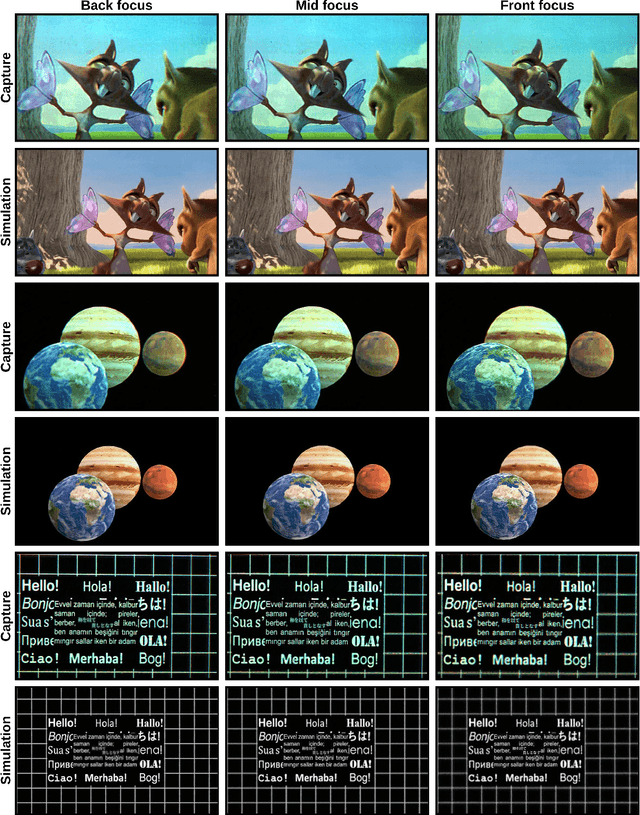

Realistic Defocus Blur for Multiplane Computer-Generated Holography

May 14, 2022

Abstract: This paper introduces a new multiplane CGH computation method to reconstruct artefact-free high-quality holograms with natural-looking defocus blur. Our method introduces a new targeting scheme and a new loss function. While the targeting scheme accounts for defocused parts of the scene at each depth plane, the new loss function analyzes focused and defocused parts separately in reconstructed images. Our method supports phase-only CGH calculations using various iterative (e.g., Gerchberg-Saxton, Gradient Descent) and non-iterative (e.g., Double Phase) CGH techniques. We achieve our best image quality using a modified gradient descent-based optimization recipe where we introduce a constraint inspired by the double phase method. We validate our method experimentally using our proof-of-concept holographic display, comparing various algorithms, including multi-depth scenes with sparse and dense contents.
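For readers unfamiliar with the double phase method mentioned in the abstract, the following is a minimal sketch of the standard decomposition, not the paper's modified optimization recipe. It relies on the identity $A e^{i\varphi} = \tfrac{1}{2}\left(e^{i(\varphi + \arccos A)} + e^{i(\varphi - \arccos A)}\right)$ for a normalized amplitude $0 \le A \le 1$. The function names `double_phase` and `encode_checkerboard`, and the checkerboard layout itself, are illustrative assumptions rather than anything taken from the paper.

```python
import cmath
import math


def double_phase(amplitude, phase):
    """Split one complex sample A*exp(i*phase), 0 <= A <= 1, into two phase values."""
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must be normalized to [0, 1]")
    offset = math.acos(amplitude)
    return phase + offset, phase - offset


def encode_checkerboard(field):
    """Interleave the two phase values on a checkerboard (hypothetical layout helper).

    `field` is a list of rows of complex samples with magnitude at most 1.
    """
    encoded = []
    for row_index, row in enumerate(field):
        encoded_row = []
        for col_index, sample in enumerate(row):
            # Clamp to guard against magnitudes that exceed 1 by rounding error.
            amplitude = min(abs(sample), 1.0)
            theta_a, theta_b = double_phase(amplitude, cmath.phase(sample))
            use_first = (row_index + col_index) % 2 == 0
            encoded_row.append(theta_a if use_first else theta_b)
        encoded.append(encoded_row)
    return encoded
```

Averaging the two phase-only terms returned by `double_phase` recovers the original complex sample, which is why a phase-only modulator can approximate a complex field when neighbouring pixels are blended optically.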

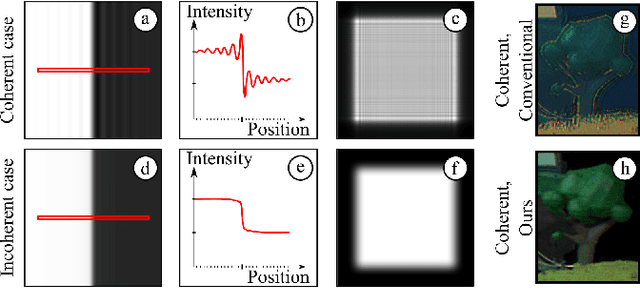

Learned holographic light transport

Aug 01, 2021

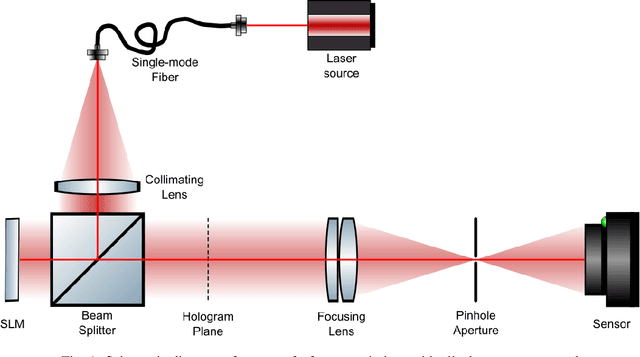

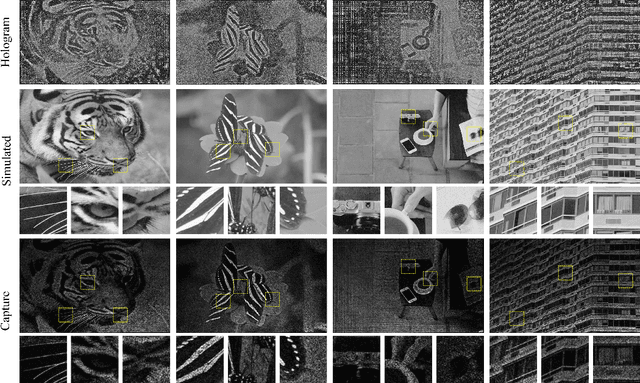

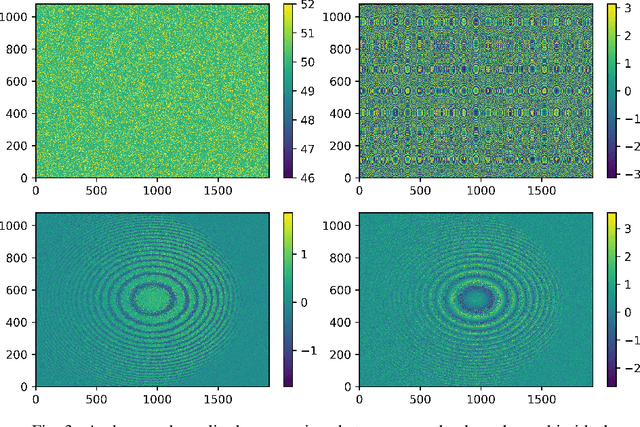

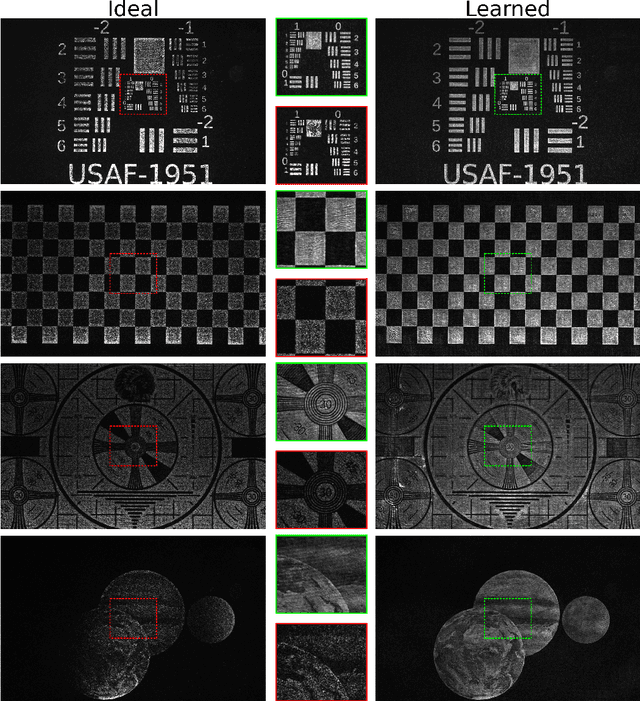

Abstract: Computer-Generated Holography (CGH) algorithms often fall short in matching simulations with results from a physical holographic display. Our work addresses this mismatch by learning the holographic light transport in holographic displays. Using a camera and a holographic display, we capture the image reconstructions of optimized holograms that rely on ideal simulations to generate a dataset. Inspired by the ideal simulations, we learn a complex-valued convolution kernel that can propagate given holograms to captured photographs in our dataset. Our method can dramatically improve simulation accuracy and image quality in holographic displays while paving the way for physically informed learning approaches.
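To illustrate what propagating a hologram with a complex-valued convolution kernel can look like, here is a minimal forward-model sketch. It assumes a phase-only hologram, circular (wrap-around) boundary handling, and an already-known kernel; the abstract does not specify these details, and the learning step that fits the kernel to captured photographs is not shown. The function name `propagate` is hypothetical.

```python
import cmath


def propagate(phase, kernel):
    """Circularly convolve a phase-only hologram with a complex kernel.

    `phase` is a list of rows of phase values in radians, `kernel` is a list
    of rows of complex weights. Returns the intensity |field * kernel|^2.
    """
    rows, cols = len(phase), len(phase[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    # Phase-only hologram: unit amplitude, given phase.
    field = [[cmath.exp(1j * value) for value in row] for row in phase]
    intensity = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            total = 0j
            for ky in range(k_rows):
                for kx in range(k_cols):
                    # Centre the kernel and wrap around the hologram borders.
                    sy = (y - ky + k_rows // 2) % rows
                    sx = (x - kx + k_cols // 2) % cols
                    total += kernel[ky][kx] * field[sy][sx]
            out_row.append(abs(total) ** 2)
        intensity.append(out_row)
    return intensity
```

In a learned setting, the entries of `kernel` would be the trainable parameters, adjusted so that the simulated intensity matches the camera captures. For realistic resolutions an FFT-based convolution would replace the nested loops used here for clarity.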
