Yuefei Lyu

Navigating the Black Box: Leveraging LLMs for Effective Text-Level Graph Injection Attacks

Jun 16, 2025

Abstract: Text-attributed graphs (TAGs) integrate textual data with graph structures, providing valuable insights in applications such as social network analysis and recommendation systems. Graph Neural Networks (GNNs) effectively capture both topological structure and textual information in TAGs but are vulnerable to adversarial attacks. Existing graph injection attack (GIA) methods assume that attackers can directly manipulate the embedding layer, producing non-explainable node embeddings. Furthermore, the effectiveness of these attacks often relies on surrogate models with high training costs. This paper therefore introduces ATAG-LLM, a novel black-box GIA framework tailored for TAGs. Our approach leverages large language models (LLMs) to generate interpretable text-level node attributes directly, ensuring attacks remain feasible in real-world scenarios. We design strategies for LLM prompting that balance exploration and reliability to guide text generation, and propose a similarity assessment method to evaluate how effectively attack text disrupts graph homophily. The method efficiently perturbs the target node with minimal training costs in a strict black-box setting, yielding a text-level graph injection attack for TAGs. Experiments on real-world TAG datasets validate the superior performance of ATAG-LLM compared to state-of-the-art embedding-level and text-level attack methods.

Subgroup Fairness in Graph-based Spam Detection

Apr 24, 2022

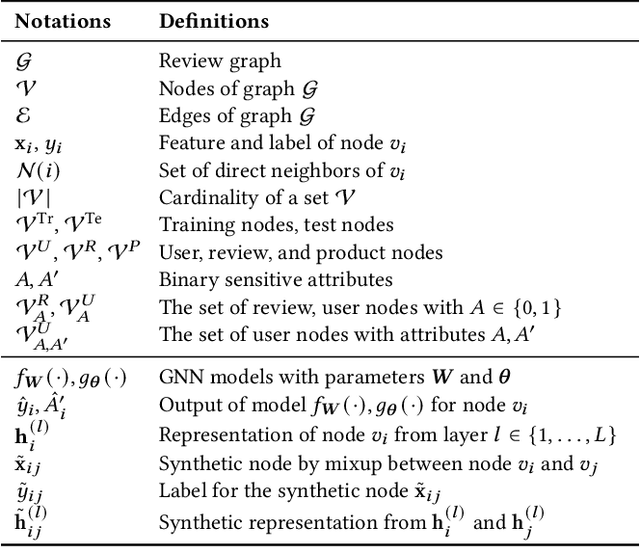

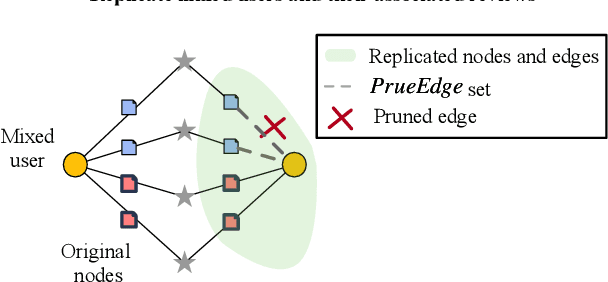

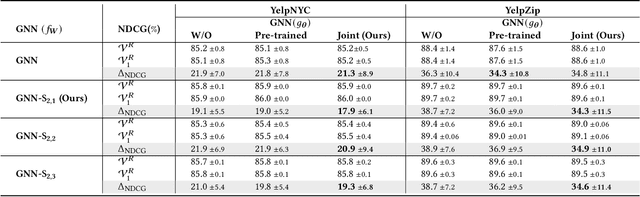

Abstract: Fake reviews are prevalent on review websites such as Amazon and Yelp. Graph neural networks (GNNs) are the state-of-the-art method for detecting suspicious reviewers by exploiting the topology of the graph connecting reviewers, reviews, and target products. However, discrepancies in detection accuracy across different groups of reviewers lead to discriminatory treatment of reviewers, reducing engagement with and trust in such websites. The complex dependencies over the review graph make it difficult to tease out subgroups of reviewers that are hidden within larger groups and are treated unfairly. No previous study defines and discovers these subtle subgroups to improve equitable treatment of reviewers. This paper addresses the challenges of defining, discovering, and utilizing subgroup memberships for fair spam detection. We first define a subgroup membership that can lead to discrepant accuracy across the subgroups. Since the subgroup membership is usually not observable yet is important for guiding the GNN detector to balance the treatment, we design a model that jointly infers the hidden subgroup memberships and exploits the memberships to calibrate the target GNN's detection accuracy across subgroups. Comprehensive results on two large Yelp review datasets demonstrate that the proposed model can be trained to treat the subgroups more fairly.
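
The accuracy discrepancy across subgroups mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's model: it assumes subgroup memberships are already known (the paper infers them), and the function names `subgroup_accuracy` and `accuracy_gap` are hypothetical helpers introduced here for illustration only.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def subgroup_accuracy(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[Hashable],
) -> Dict[Hashable, float]:
    """Return detection accuracy computed separately for each subgroup.

    Assumption: `groups[i]` is the known subgroup label of reviewer i.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group: Dict[Hashable, float]) -> float:
    """Largest difference in accuracy between any two subgroups."""
    return max(per_group.values()) - min(per_group.values())


# Toy example: the detector is perfect on subgroup "a" but not on "b".
per_group = subgroup_accuracy(
    y_true=[1, 0, 1, 1],
    y_pred=[1, 0, 0, 1],
    groups=["a", "a", "b", "b"],
)
gap = accuracy_gap(per_group)
```

A gap near zero would indicate that the detector treats the subgroups similarly under this simple measure; the paper's own fairness criterion may differ.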

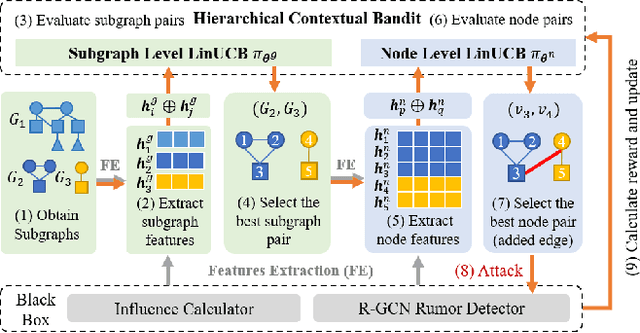

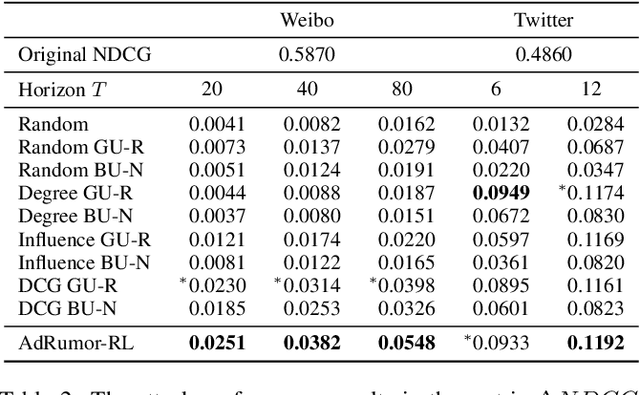

Interpretable and Effective Reinforcement Learning for Attacking against Graph-based Rumor Detection

Jan 15, 2022

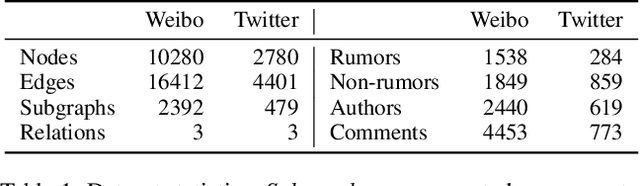

Abstract: Social networks are polluted by rumors, which can be detected by machine learning models. However, the models are fragile, and understanding their vulnerabilities is critical to rumor detection. Certain vulnerabilities are due to dependencies on the graphs and suspiciousness ranking and are difficult for end-to-end methods to learn from limited noisy data. With a black-box detector, we design features capturing the dependencies to allow a reinforcement learning agent to learn an effective and interpretable attack policy based on the detector output. To speed up learning, we devise: (i) a credit assignment method that decomposes delayed rewards to individual attacking steps proportional to their effects; (ii) a time-dependent control variate to reduce variance due to large graphs and many attacking steps. On two social rumor datasets, we demonstrate: (i) the effectiveness of the attacks compared to rule-based attacks and end-to-end approaches; (ii) the usefulness of the proposed credit assignment strategy and control variate; (iii) interpretability of the policy when generating strong attacks.
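
The idea of decomposing a delayed reward across attacking steps in proportion to their effects can be sketched as follows. This is an illustrative assumption, not the paper's exact formulation: the helper name `decompose_reward`, the use of effect magnitudes, and the uniform fallback when all effects are zero are choices made here for the example.

```python
from typing import List


def decompose_reward(final_reward: float, step_effects: List[float]) -> List[float]:
    """Split one delayed reward into per-step credits.

    Assumption: `step_effects[t]` measures how much step t changed the
    detector output; each step receives a share of `final_reward`
    proportional to the magnitude of its effect.
    """
    if not step_effects:
        return []
    magnitudes = [abs(effect) for effect in step_effects]
    total = sum(magnitudes)
    if total == 0.0:
        # No step had a measurable effect: fall back to an even split.
        share = final_reward / len(step_effects)
        return [share] * len(step_effects)
    return [final_reward * magnitude / total for magnitude in magnitudes]


# Toy example: the second step had three times the effect of the first.
credits = decompose_reward(10.0, [1.0, 3.0])
```

The per-step credits sum to the original delayed reward, so the total return of an episode is unchanged while each step receives a more immediate learning signal.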
