Subgroup Fairness in Graph-based Spam Detection

Paper and Code

Apr 24, 2022

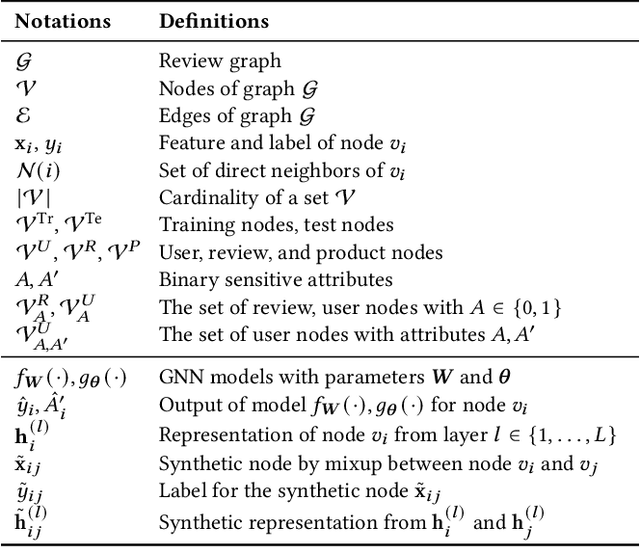

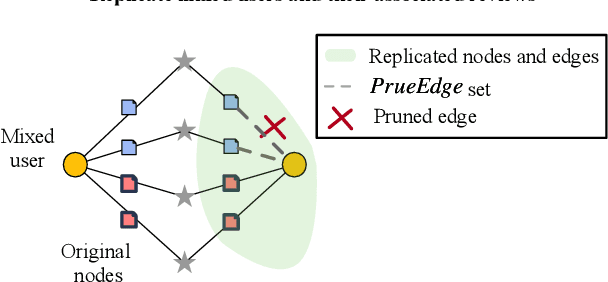

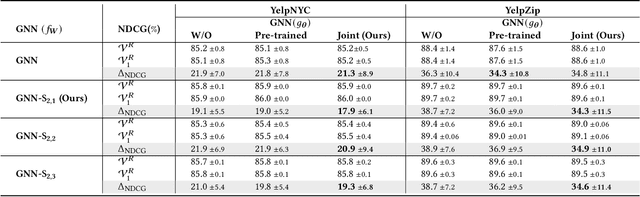

Fake reviews are prevalent on review websites such as Amazon and Yelp. Graph neural networks (GNNs) are the state-of-the-art method for detecting suspicious reviewers, exploiting the topology of the graph that connects reviewers, reviews, and target products. However, detection accuracy differs across groups of reviewers, and this discrepancy leads to discriminatory treatment of some reviewers, which reduces engagement with, and the trustworthiness of, such websites. The complex dependencies in the review graph make it difficult to tease out subgroups of reviewers that are hidden within larger groups and are treated unfairly. No previous study has defined and discovered such subtle subgroups to improve the equitable treatment of reviewers. This paper addresses the challenges of defining, discovering, and utilizing subgroup memberships for fair spam detection. We first define a subgroup membership that can lead to discrepant accuracy across subgroups. Because the subgroup membership is usually not observable, yet is important for guiding the GNN detector to balance its treatment, we design a model that jointly infers the hidden subgroup memberships and exploits them to calibrate the target GNN's detection accuracy across subgroups. Comprehensive results on two large Yelp review datasets demonstrate that the proposed model can be trained to treat the subgroups more fairly.
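To make the notion of discrepant accuracy across subgroups concrete, the following is a minimal sketch and not the paper's model or evaluation metric. It assumes that subgroup labels are already known, whereas the paper treats them as hidden and infers them. The function names `subgroup_accuracy` and `accuracy_gap` and the toy data are hypothetical.

```python
from collections import defaultdict


def subgroup_accuracy(labels, predictions, groups):
    """Return {group: accuracy} for parallel sequences of labels, predictions, and group ids."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, y_hat, g in zip(labels, predictions, groups):
        total[g] += 1
        correct[g] += int(y == y_hat)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    values = per_group.values()
    return max(values) - min(values)


# Toy data (illustrative only): 1 = spammer, 0 = benign reviewer.
labels      = [1, 0, 1, 0, 1, 0, 1, 0]
predictions = [1, 0, 1, 0, 0, 0, 1, 1]
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = subgroup_accuracy(labels, predictions, groups)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A gap near zero would indicate that the detector treats the subgroups similarly under this simple accuracy measure; a large gap is the kind of discrepancy the abstract describes.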
