Interpretable and Effective Reinforcement Learning for Attacking against Graph-based Rumor Detection


Jan 15, 2022

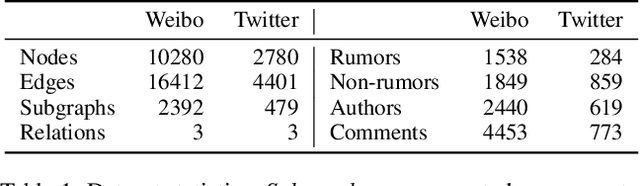

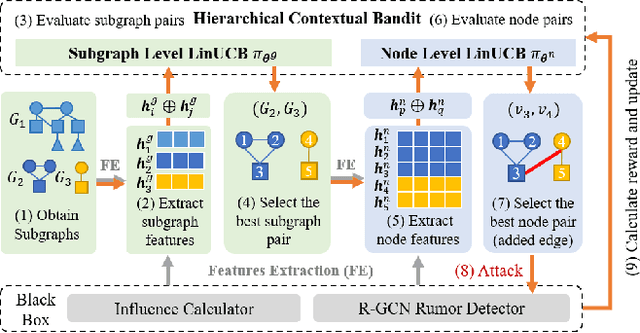

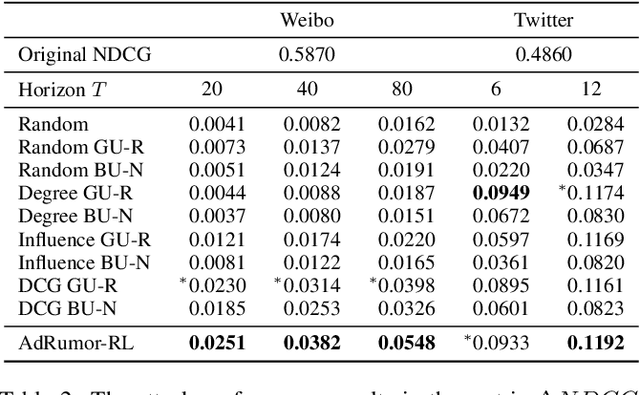

Social networks are polluted by rumors, which can be detected by machine learning models. However, these models are fragile, and understanding their vulnerabilities is critical to rumor detection. Certain vulnerabilities stem from dependencies on the graph structure and on suspiciousness ranking, and are difficult for end-to-end methods to learn from limited, noisy data. Treating the detector as a black box, we design features that capture these dependencies so that a reinforcement learning agent can learn an effective and interpretable attack policy from the detector's output. To speed up learning, we devise: (i) a credit assignment method that decomposes delayed rewards into rewards for individual attacking steps, proportional to their effects; (ii) a time-dependent control variate that reduces the variance caused by large graphs and many attacking steps. On two social rumor datasets, we demonstrate: (i) the effectiveness of the attacks compared to rule-based attacks and end-to-end approaches; (ii) the usefulness of the proposed credit assignment strategy and control variate; (iii) the interpretability of the policy when generating strong attacks.
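The two speed-up ideas named in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the use of a non-negative per-step "effect" score, the even-split fallback, and the per-time-step mean baseline are all assumptions chosen for illustration. The abstract does not say how step effects are measured or which form the control variate takes.

```python
from typing import List


def decompose_reward(final_reward: float, step_effects: List[float]) -> List[float]:
    """Split one delayed reward across steps in proportion to each step's effect.

    Assumption: step_effects are non-negative scores, one per attacking step.
    """
    if not step_effects:
        return []
    total = sum(step_effects)
    if total == 0:
        # No measurable effect anywhere: fall back to an even split (an assumption).
        return [final_reward / len(step_effects)] * len(step_effects)
    return [final_reward * effect / total for effect in step_effects]


def returns_to_go(rewards: List[float]) -> List[float]:
    """Undiscounted sum of rewards from each step to the end of the episode."""
    out = []
    running = 0.0
    for reward in reversed(rewards):
        running += reward
        out.append(running)
    return out[::-1]


def time_dependent_baseline(batch_returns: List[List[float]]) -> List[float]:
    """One baseline value per time step: the batch mean of the return-to-go.

    Assumption: all trajectories in the batch have the same length.
    """
    horizon = len(batch_returns[0])
    count = len(batch_returns)
    return [sum(traj[t] for traj in batch_returns) / count for t in range(horizon)]


def advantages(batch_rewards: List[List[float]]) -> List[List[float]]:
    """Return-to-go minus the time-dependent baseline, for every step of every trajectory."""
    batch_returns = [returns_to_go(rewards) for rewards in batch_rewards]
    baseline = time_dependent_baseline(batch_returns)
    return [[g - b for g, b in zip(traj, baseline)] for traj in batch_returns]
```

In a policy-gradient setup, the per-step rewards from `decompose_reward` would feed `advantages`, whose output weights the log-probability gradient of each attacking step. Subtracting a baseline that depends only on the time step keeps the gradient estimate unbiased while reducing its variance.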
