Yuchen Qiu

School of Electrical and Computer Engineering, University of Oklahoma, Norman, USA

Developing a Novel Image Marker to Predict the Responses of Neoadjuvant Chemotherapy for Ovarian Cancer Patients

Sep 13, 2023

Abstract:Objective: Neoadjuvant chemotherapy (NACT) is a treatment option for patients with advanced-stage ovarian cancer. However, because of tumor heterogeneity, patients' responses to NACT vary significantly among different subgroups. To address this clinical challenge, this study aims to develop a novel image marker that predicts NACT response with high accuracy at an early stage. Methods: We first computed a total of 1373 radiomics features to quantify tumor characteristics; these fall into three categories: geometric, intensity, and texture features. Second, all these features were reduced by principal component analysis to generate a compact and informative feature cluster. Using this cluster as the input, an SVM-based classifier was developed and optimized to create a final marker indicating the likelihood that the patient responds to NACT. To validate this scheme, data from a total of 42 ovarian cancer patients were retrospectively collected. Nested leave-one-out cross-validation was adopted for model performance assessment. Results: The new method yielded an AUC (area under the receiver operating characteristic [ROC] curve) of 0.745. The model also achieved an overall accuracy of 76.2%, a positive predictive value of 70%, and a negative predictive value of 78.1%. Conclusion: This study provides meaningful information for the development of radiomics-based image markers in NACT response prediction.
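The three summary metrics reported in the abstract above all derive from a 2x2 confusion matrix. The sketch below shows how they are computed. The abstract does not report the underlying counts, so the values passed in are a hypothetical assignment for 42 patients that happens to be consistent with the reported percentages; the function name `summarize_confusion` is also illustrative.

```python
def summarize_confusion(tp, fp, tn, fn):
    """Return accuracy, positive predictive value, and negative predictive value."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,  # fraction of all cases classified correctly
        "ppv": tp / (tp + fp),          # fraction of predicted responders who respond
        "npv": tn / (tn + fn),          # fraction of predicted non-responders who do not
    }


# Hypothetical counts (NOT taken from the paper) that sum to 42 patients.
metrics = summarize_confusion(tp=7, fp=3, tn=25, fn=7)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

With only 42 cases, each patient shifts the accuracy by about 2.4 percentage points, which is worth keeping in mind when reading the reported figures.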

Evaluating the Effectiveness of 2D and 3D Features for Predicting Tumor Response to Chemotherapy

Apr 14, 2023

Abstract:2D and 3D tumor features are widely used in a variety of medical image analysis tasks. However, for chemotherapy response prediction, the relative effectiveness of different kinds of 2D and 3D features has not been comprehensively assessed, especially in ovarian cancer-related applications. This investigation aims to provide such a comprehensive evaluation. For this purpose, CT images were collected retrospectively from 188 advanced-stage ovarian cancer patients. All the metastatic tumors that occurred in each patient were segmented and then processed by a set of six filters. Next, three categories of features, namely geometric, density, and texture features, were calculated from both the filtered results and the original segmented tumors, generating a total of 1595 and 1403 features for the 3D and 2D tumors, respectively. In addition to the conventional single-slice 2D and full-volume 3D tumor features, we also computed incomplete-3D tumor features, which were obtained by sequentially adding one individual CT slice at a time and calculating the corresponding features. Support vector machine (SVM) based prediction models were developed and optimized for each feature set. Five-fold cross-validation was used to assess the performance of each individual model. The results show that the 2D feature-based model achieved an AUC (area under the receiver operating characteristic [ROC] curve) of 0.84 ± 0.02. As more slices were added, the AUC first rose to a maximum and then gradually decreased to 0.86 ± 0.02. The maximum AUC, 0.91 ± 0.01, was obtained when two adjacent slices were added. This initial result provides meaningful information for optimizing machine learning-based decision-making support tools in the future.
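As a rough illustration of how an "AUC ± spread" figure can be produced from cross-validation, the sketch below computes the AUC of each fold as the fraction of (positive, negative) pairs that the model ranks correctly, then summarizes the folds. It assumes the ± value is a sample standard deviation across folds, which the abstract does not state; the function names are illustrative and the scores in the usage lines are toy values, not study data.

```python
from statistics import mean, stdev


def auc(labels, scores):
    """AUC as the probability that a positive case outscores a negative one."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    # Count correctly ranked pairs; ties count as half a win.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def summarize_folds(fold_results):
    """Return (mean AUC, sample standard deviation) over (labels, scores) folds."""
    fold_aucs = [auc(labels, scores) for labels, scores in fold_results]
    return mean(fold_aucs), stdev(fold_aucs)


# Toy folds for illustration only.
toy_folds = [
    ([0, 0, 1, 1], [0.10, 0.40, 0.35, 0.80]),
    ([0, 1, 0, 1], [0.20, 0.90, 0.30, 0.70]),
]
mean_auc, spread = summarize_folds(toy_folds)
print(f"AUC = {mean_auc:.2f} +/- {spread:.2f}")
```

The pairwise definition is quadratic in the number of cases, which is acceptable for cohorts of a few hundred patients; a rank-based formula gives the same value more efficiently on larger sets.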

Transformers Improve Breast Cancer Diagnosis from Unregistered Multi-View Mammograms

Jun 21, 2022

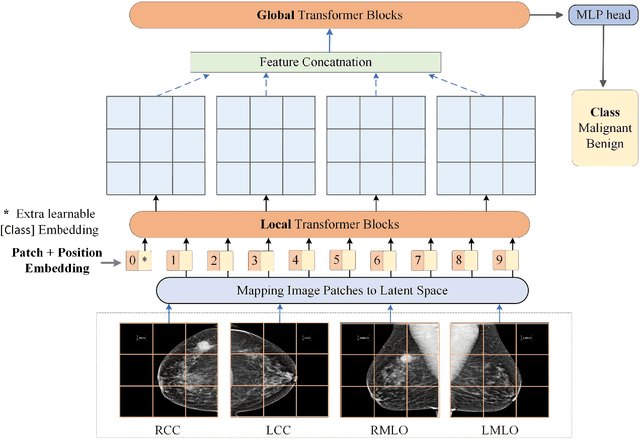

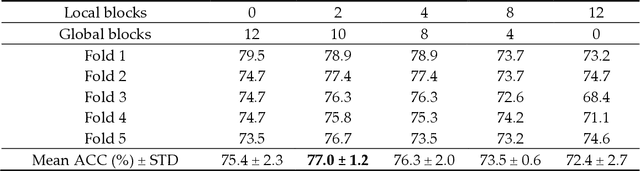

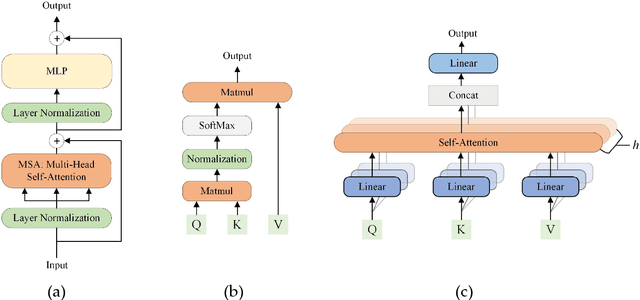

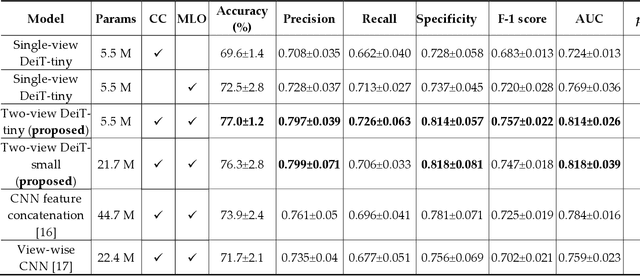

Abstract:Deep convolutional neural networks (CNNs) have been widely used in various medical imaging tasks. However, due to the intrinsic locality of the convolution operation, CNNs generally cannot model long-range dependencies well, and these dependencies are important for accurately identifying or mapping corresponding breast lesion features computed from multiple unregistered mammograms. This motivates us to leverage the architecture of Multi-view Vision Transformers to capture long-range relationships among multiple mammograms acquired from the same patient in one examination. For this purpose, we employ local Transformer blocks to separately learn patch relationships within each of the four mammograms, which are acquired from two views (CC/MLO) of both breasts (right/left). The outputs from the different views and sides are concatenated and fed into global Transformer blocks to jointly learn patch relationships across the four images. To evaluate the proposed model, we retrospectively assembled a dataset of 949 sets of mammograms, comprising 470 malignant cases and 479 normal or benign cases. We trained and evaluated the model using five-fold cross-validation. Without any arduous preprocessing steps (e.g., optimal window cropping, chest wall or pectoral muscle removal, two-view image registration, etc.), our four-image (two-view-two-side) Transformer-based model achieves a case classification performance of AUC = 0.818 (area under the ROC curve), which significantly outperforms the AUC = 0.784 achieved by the state-of-the-art multi-view CNNs (p = 0.009). It also outperforms two one-view-two-side models that achieve AUCs of 0.724 (CC view) and 0.769 (MLO view), respectively. The study demonstrates the potential of using Transformers to develop high-performing computer-aided diagnosis schemes that combine four mammograms.
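The local-then-global data flow described in the abstract above can be sketched with a deliberately stripped-down self-attention function. This is only an illustration of how tokens move through the two stages, not the authors' model: it uses a single head with identity projections (no learned weights), omits positional embeddings, MLP layers, and the classification head, and the view names in `VIEW_ORDER` are hypothetical labels.

```python
import math

VIEW_ORDER = ("L-CC", "L-MLO", "R-CC", "R-MLO")  # hypothetical view names


def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity projections."""
    dim = len(tokens[0])
    attended = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        peak = max(scores)  # subtract the maximum for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        norm = sum(weights)
        attended.append([
            sum(w / norm * value[j] for w, value in zip(weights, tokens))
            for j in range(dim)
        ])
    return attended


def four_view_forward(views):
    """Apply local attention within each image, then global attention across all."""
    # Local stage: patches attend only to patches from the same mammogram.
    local = {name: self_attention(views[name]) for name in VIEW_ORDER}
    # Concatenate the four token sequences into one long sequence.
    merged = [token for name in VIEW_ORDER for token in local[name]]
    # Global stage: every patch can attend to patches from any of the four images.
    return self_attention(merged)


# Toy input: 3 patch tokens of dimension 2 per image.
example = {
    name: [[0.1 * i, 0.2 * k] for k in range(3)]
    for i, name in enumerate(VIEW_ORDER)
}
fused = four_view_forward(example)
print(len(fused), "tokens of dimension", len(fused[0]))
```

Because the global stage operates on a plain concatenation of tokens, nothing in this flow requires the four images to be spatially registered, which is the property the abstract highlights.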

Virtual Adversarial Training for Semi-supervised Breast Mass Classification

Jan 25, 2022

Abstract:This study aims to develop a novel computer-aided diagnosis (CAD) scheme for mammographic breast mass classification using semi-supervised learning. Although supervised deep learning has achieved huge success across various medical image analysis tasks, that success relies on large amounts of high-quality annotations, which can be challenging to acquire in practice. To overcome this limitation, we propose employing a semi-supervised method, virtual adversarial training (VAT), to learn useful information from unlabeled data for better classification of breast masses. Accordingly, our VAT-based models have two types of losses, namely supervised and virtual adversarial losses. The former acts as in supervised classification, while the latter aims at enhancing model robustness against virtual adversarial perturbation, thus improving model generalizability. To evaluate the performance of our VAT-based CAD scheme, we retrospectively assembled a total of 1024 breast mass images, with equal numbers of benign and malignant masses. A large CNN and a small CNN were used in this investigation, and both were trained with and without the adversarial loss. When the labeled ratios were 40% and 80%, VAT-based CNNs delivered the highest classification accuracy of 0.740 and 0.760, respectively. The experimental results suggest that the VAT-based CAD scheme can effectively utilize meaningful knowledge from unlabeled data to better classify mammographic breast mass images.
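To make the two-part loss concrete, here is a minimal sketch that swaps the CNNs of the study for a linear logistic model. For such a model the logit changes only along the weight vector, so the worst-case perturbation within a given radius is one of the two directions ±w/||w||, and no iterative approximation is needed; a deep network would instead require an approximate search for that direction. The radius, the weighting factor `alpha`, and all function names are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def clamp(p):
    """Keep probabilities away from 0 and 1 so the logarithms stay finite."""
    return min(max(p, 1e-12), 1.0 - 1e-12)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def cross_entropy(p, y):
    p = clamp(p)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def kl_bernoulli(p, q):
    p, q = clamp(p), clamp(q)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def virtual_adversarial_loss(w, b, x, radius):
    """Largest KL divergence between predictions at x and at x + r, ||r|| <= radius."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm == 0.0:
        return 0.0
    p_clean = predict(w, b, x)  # acts as the "virtual" label, so no true label is needed
    divergences = []
    for sign in (1.0, -1.0):
        x_adv = [xi + sign * radius * wi / norm for xi, wi in zip(x, w)]
        divergences.append(kl_bernoulli(p_clean, predict(w, b, x_adv)))
    return max(divergences)


def total_loss(w, b, labeled, unlabeled, radius=0.5, alpha=1.0):
    """Supervised cross-entropy plus alpha times the virtual adversarial loss."""
    supervised = sum(cross_entropy(predict(w, b, x), y) for x, y in labeled) / len(labeled)
    inputs = [x for x, _ in labeled] + list(unlabeled)
    adversarial = sum(virtual_adversarial_loss(w, b, x, radius) for x in inputs) / len(inputs)
    return supervised + alpha * adversarial


# Toy data for illustration only.
w, b = [1.0, -2.0], 0.1
labeled = [([0.5, 0.2], 1), ([-0.3, 0.8], 0)]
unlabeled = [[0.1, 0.1], [1.0, -1.0]]
print(total_loss(w, b, labeled, unlabeled))
```

The adversarial term never reads a label, which is why unlabeled images can contribute to training: the model is simply penalized when a small input change moves its prediction.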

Recent advances and clinical applications of deep learning in medical image analysis

May 27, 2021

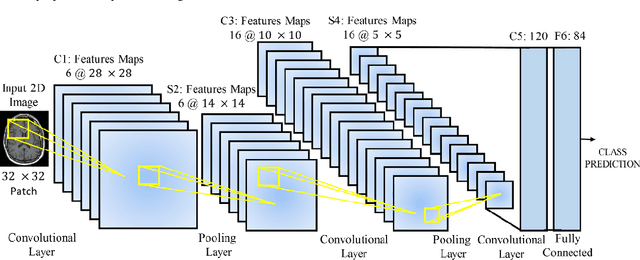

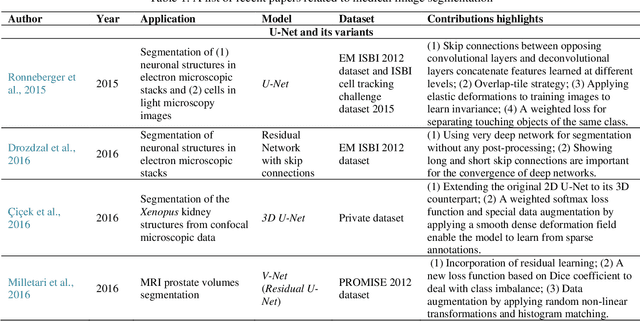

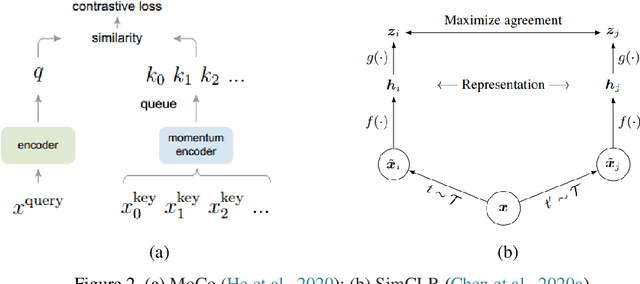

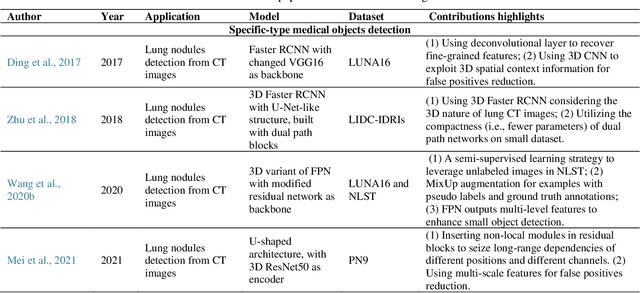

Abstract:Deep learning has become the mainstream technology in computer vision, and it has received extensive research interest in developing new medical image processing algorithms to support disease detection and diagnosis. Compared with conventional machine learning technologies, the major advantage of deep learning is that models can automatically identify and recognize representative features through a hierarchical model architecture, avoiding the laborious development of hand-crafted features. In this paper, we review and summarize more than 200 recently published papers to provide a comprehensive overview of applying deep learning methods in various medical image analysis tasks. In particular, we emphasize the latest progress and contributions of state-of-the-art unsupervised and semi-supervised deep learning in medical image analysis, summarized by application scenario: lesion classification, segmentation, detection, and image registration. We also discuss the major technical challenges and suggest possible solutions for future research efforts.

Improving performance of CNN to predict likelihood of COVID-19 using chest X-ray images with preprocessing algorithms

Jun 11, 2020

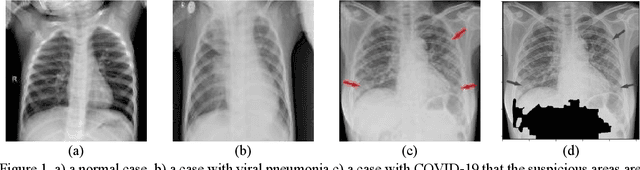

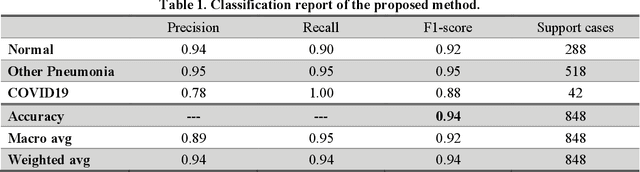

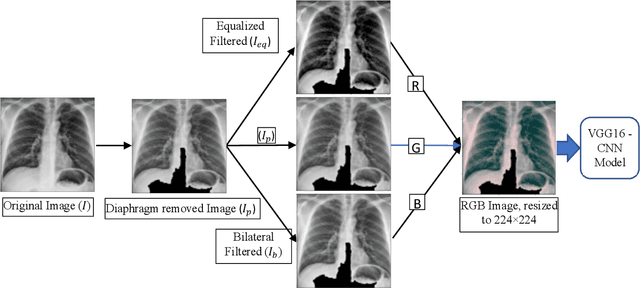

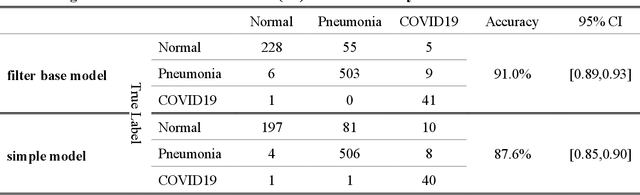

Abstract:With the rapid worldwide spread of coronavirus disease (COVID-19), chest X-ray radiography has been used in hospitals to detect COVID-19 infected pneumonia and to assess its severity or monitor its prognosis, owing to its low cost, low radiation dose, and wide accessibility. However, how to detect COVID-19 infected pneumonia more accurately and efficiently, and how to distinguish it from other community-acquired pneumonia, remains a challenge. To address this challenge, this study develops and tests a new computer-aided diagnosis (CAD) scheme. It includes several image pre-processing algorithms that remove diaphragms, normalize the image contrast-to-noise ratio, and generate three input images, which are then fed to a transfer learning based convolutional neural network (a VGG16-based CNN model) that classifies chest X-ray images into three classes: COVID-19 infected pneumonia, other community-acquired pneumonia, and normal (non-pneumonia) cases. For this purpose, a publicly available dataset of 8,474 chest X-ray images is used, which includes 415 confirmed COVID-19 infected pneumonia, 5,179 community-acquired pneumonia, and 2,880 non-pneumonia cases. The dataset is divided into two subsets containing 90% and 10% of the images, used to train and test the CNN-based CAD scheme, respectively. The testing results show 94.0% overall accuracy in classifying the three classes and 98.6% accuracy in detecting COVID-19 infected cases. Thus, the study demonstrates the feasibility of developing a CAD scheme for chest X-ray images that provides radiologists with a useful decision-making support tool for detecting and diagnosing COVID-19 infected pneumonia.
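The class counts quoted above are heavily imbalanced (COVID-19 cases make up under 5% of the images), so how the 90%/10% division is drawn matters. The sketch below holds out 10% of each class separately so that the test set keeps roughly the same class proportions. The abstract does not say whether the split was stratified, so this is an assumption, as are the class keys, the seed, and the function name.

```python
import random

# Class counts as reported in the abstract; the key names are illustrative.
CLASS_COUNTS = {"covid19": 415, "other_pneumonia": 5179, "normal": 2880}


def stratified_split(class_counts, test_percent=10, seed=0):
    """Hold out test_percent of each class; return (train, test) lists of (label, index)."""
    rng = random.Random(seed)
    train, test = [], []
    for label, count in class_counts.items():
        ids = [(label, i) for i in range(count)]
        rng.shuffle(ids)
        n_test = count * test_percent // 100  # integer arithmetic avoids rounding surprises
        test.extend(ids[:n_test])
        train.extend(ids[n_test:])
    return train, test


train_ids, test_ids = stratified_split(CLASS_COUNTS)
print(len(train_ids), "training images,", len(test_ids), "test images")
covid_share = CLASS_COUNTS["covid19"] / sum(CLASS_COUNTS.values())
print(f"COVID-19 share of the dataset: {covid_share:.1%}")
```

With a purely random split, the number of COVID-19 cases in a test set of this size could vary noticeably from one draw to the next, which is one reason a per-class holdout is a common choice for imbalanced data.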
