Yuanmeng Zhang

Edit As You Wish: Video Description Editing with Multi-grained Commands

May 15, 2023

Abstract: Automatically narrating a video with natural language can assist people in grasping and managing massive videos on the Internet. Video uploaders may have varied preferences for writing the desired video description to attract more potential followers, e.g. catching customers' attention for product videos. The Controllable Video Captioning task is therefore proposed to generate a description conditioned on the user demand and video content. However, existing works suffer from two shortcomings: 1) the control signal is fixed and can only express single-grained control; 2) the video description cannot be further edited to meet dynamic user demands. In this paper, we propose a novel Video Description Editing (VDEdit) task to automatically revise an existing video description guided by flexible user requests. Inspired by human writing-revision habits, we design the user command as a {operation, position, attribute} triplet to cover multi-grained user requirements, which can express coarse-grained control (e.g. expand the description) as well as fine-grained control (e.g. add specified details at a specified position) in a unified format. To facilitate the VDEdit task, we first automatically construct a large-scale open-domain benchmark dataset, VATEX-EDIT, describing diverse human activities. Considering the real-life application scenario, we further manually collect an e-commerce benchmark dataset called EMMAD-EDIT. We propose a unified framework to convert the {operation, position, attribute} triplet into a textual control sequence to handle multi-grained editing commands. For VDEdit evaluation, we adopt comprehensive metrics to measure three aspects of model performance: caption quality, caption-command consistency, and caption-video alignment.
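To make the idea of turning an {operation, position, attribute} triplet into a textual control sequence more concrete, here is a minimal Python sketch. The abstract does not specify the actual format, so everything below is an assumption made for illustration: the `EditCommand` class, the `to_control_sequence` function, the `<op>`/`<pos>`/`<attr>` marker tokens, the operation name `"add"`, and the `any` placeholder for unspecified fields are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EditCommand:
    """Hypothetical container for an {operation, position, attribute} triplet."""
    operation: str                   # e.g. "add" (operation names are assumed)
    position: Optional[int] = None   # word index to edit at; None = unspecified
    attribute: Optional[str] = None  # detail to add or remove; None = unspecified


def to_control_sequence(cmd: EditCommand) -> str:
    """Serialize a command into one flat text string.

    Unspecified fields become the placeholder "any", so a coarse-grained
    command (operation only) and a fine-grained command (all three fields)
    share a single format. The marker tokens are illustrative only.
    """
    position = "any" if cmd.position is None else str(cmd.position)
    attribute = "any" if cmd.attribute is None else cmd.attribute
    return f"<op> {cmd.operation} <pos> {position} <attr> {attribute}"


# Coarse-grained: only the operation is given.
coarse = to_control_sequence(EditCommand("add"))

# Fine-grained: operation, position, and attribute are all given.
fine = to_control_sequence(EditCommand("add", position=3, attribute="red"))
```

In a sketch like this, the resulting string would be fed to the editing model alongside the existing description and the video features. How the paper actually encodes and consumes the command is not stated in the abstract.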

CapOnImage: Context-driven Dense-Captioning on Image

Apr 27, 2022

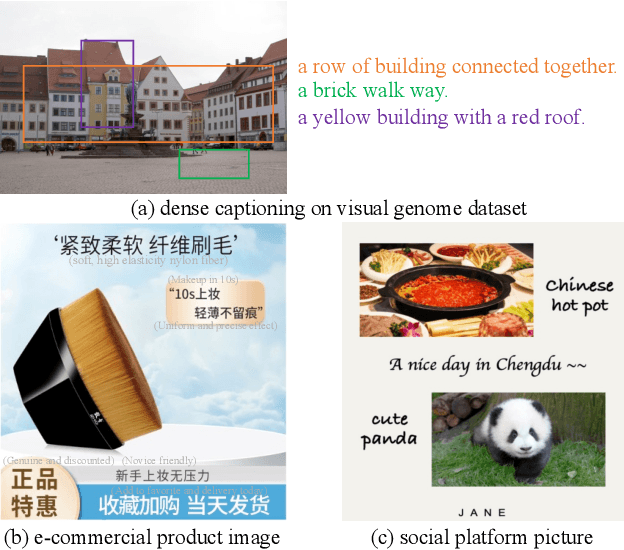

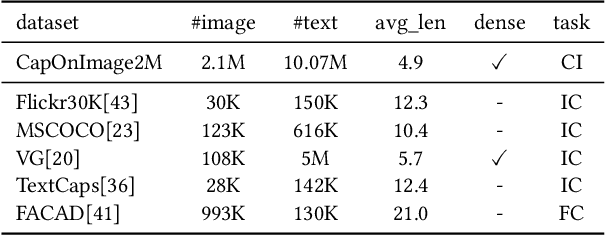

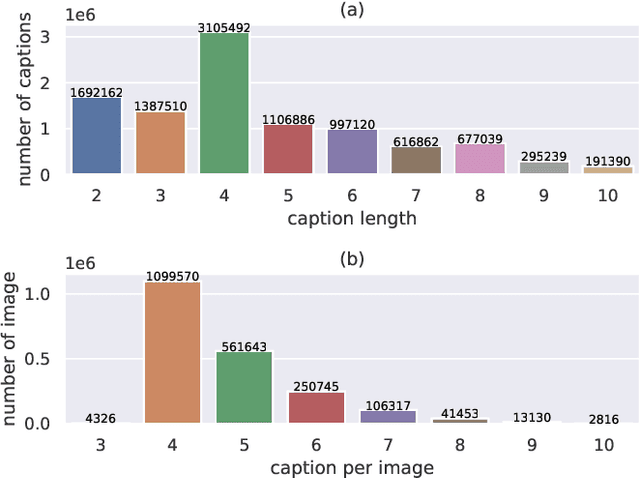

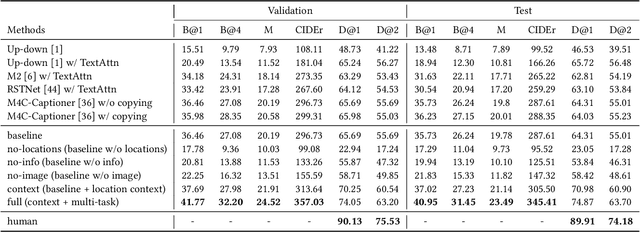

Abstract: Existing image captioning systems are dedicated to generating narrative captions for images, which are spatially detached from the image in presentation. However, texts can also be used as decorations on the image to highlight the key points and increase the attractiveness of images. In this work, we introduce a new task called captioning on image (CapOnImage), which aims to generate dense captions at different locations of the image based on contextual information. To fully exploit the surrounding visual context to generate the most suitable caption for each location, we propose a multi-modal pre-training model with multi-level pre-training tasks that progressively learn the correspondence between texts and image locations from easy to difficult. Since the model may generate redundant captions for nearby locations, we further enhance the location embedding with neighbor locations as context. For this new task, we also introduce a large-scale benchmark called CapOnImage2M, which contains 2.1 million product images, each with an average of 4.8 spatially localized captions. Compared with other image captioning model variants, our model achieves the best results in both captioning accuracy and diversity. We will make code and datasets public to facilitate future research.

MultiHead MultiModal Deep Interest Recommendation Network

Oct 19, 2021

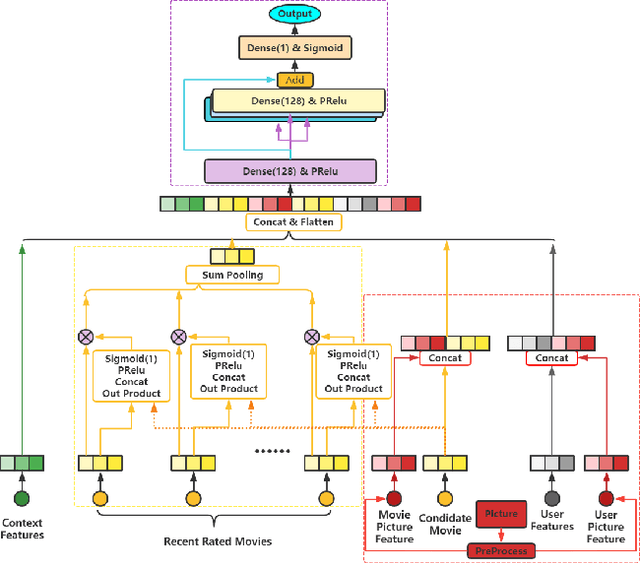

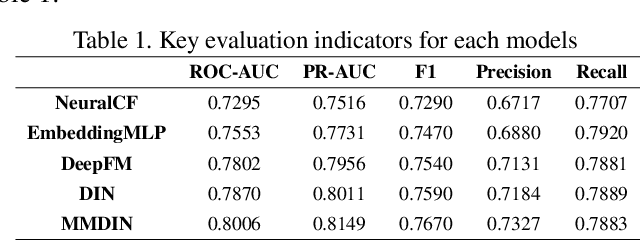

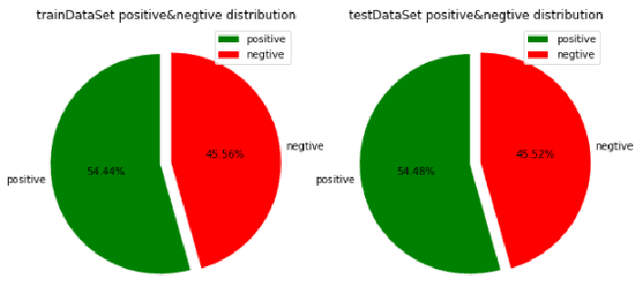

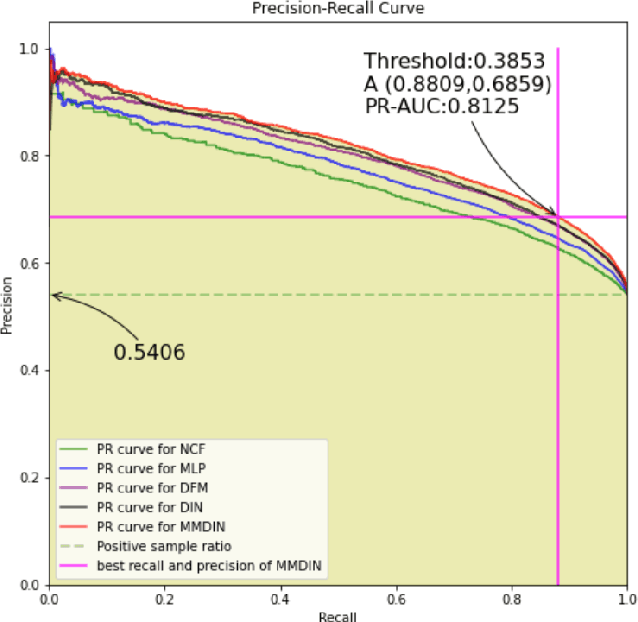

Abstract: With the development of information technology, people constantly produce large amounts of information. How to surface the information that users are interested in has become a major concern for users and business managers alike. To address this problem, researchers have moved from traditional machine learning to deep learning recommendation systems, continually refining models and exploring solutions. Because most of this work has focused on optimizing the network structure of recommendation models, less attention has gone to enriching the features those models use, which leaves room for further optimization. Building on the DIN model, this paper adds multi-head and multi-modal modules, which enrich the feature sets available to the model and strengthen its feature-crossing and fitting capabilities. Experiments show that the multi-head multi-modal DIN improves recommendation prediction and outperforms current state-of-the-art methods on various comprehensive indicators.
