Ansi Zhang

MultiHead MultiModal Deep Interest Recommendation Network

Oct 19, 2021

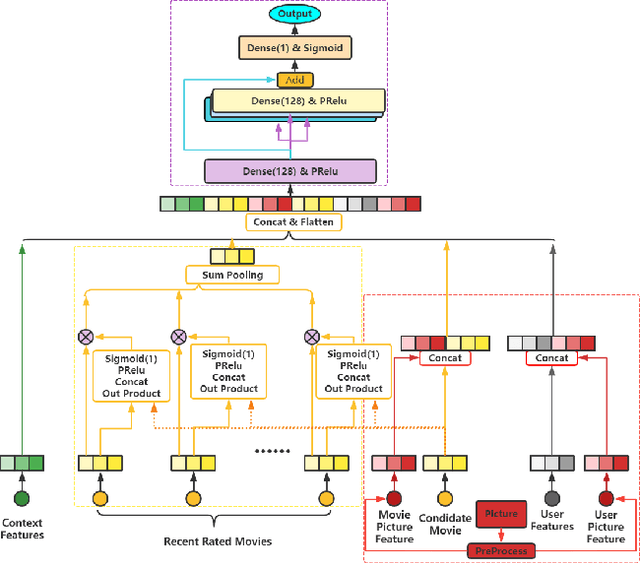

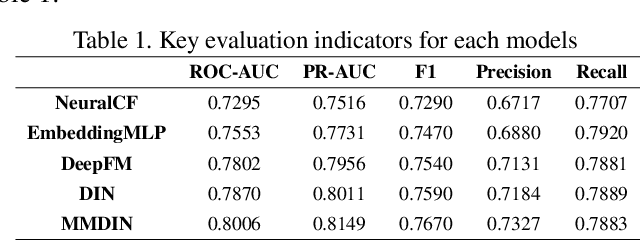

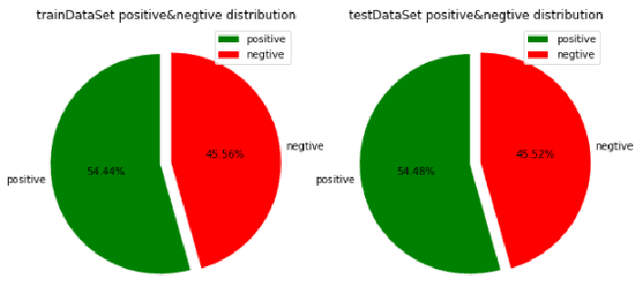

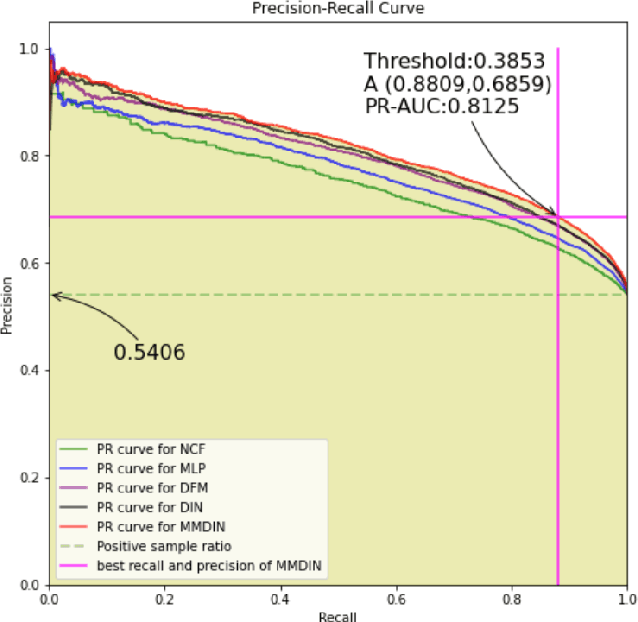

Abstract: As information technology develops, people produce large amounts of information at all times. How to retrieve the information that users are interested in from this volume has become a major concern for users and business managers alike. To address this problem, researchers have continued to refine models and explore solutions, moving from traditional machine learning to deep learning recommendation systems. Because most of this work has optimized the network structure of recommendation models, less research has gone into enriching the features those models use, and there is still room for further optimization. Building on the Deep Interest Network (DIN) model, this paper adds multi-head and multi-modal modules, which enrich the feature sets the model can use and strengthen its feature-crossing and fitting capabilities. Experiments show that the multi-head multi-modal DIN improves recommendation prediction and outperforms current state-of-the-art methods on a range of comprehensive metrics.
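
The idea of multi-head attention over a user's behavior history can be illustrated with a minimal sketch. This is not the paper's architecture: the function names (`split_heads`, `multi_head_interest`) are hypothetical, and scaled dot-product attention with a softmax stands in for whatever scoring unit the authors use. It assumes each behavior embedding already concatenates the modalities of interest.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def split_heads(vec, num_heads):
    """Cut one embedding into num_heads equal-sized slices (hypothetical helper)."""
    if len(vec) % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    size = len(vec) // num_heads
    return [vec[i * size:(i + 1) * size] for i in range(num_heads)]


def multi_head_interest(behaviors, candidate, num_heads):
    """Pool behavior embeddings into one interest vector for a candidate item.

    Assumption: each head scores behaviors against the candidate with a
    scaled dot product and normalizes the scores with a softmax.
    """
    cand_heads = split_heads(candidate, num_heads)
    pooled = []
    for h in range(num_heads):
        head_vecs = [split_heads(b, num_heads)[h] for b in behaviors]
        scale = math.sqrt(len(cand_heads[h]))
        scores = [dot(v, cand_heads[h]) / scale for v in head_vecs]
        # Numerically stable softmax over the behavior sequence.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of this head's slices, one output value per dimension.
        for d in range(len(cand_heads[h])):
            pooled.append(sum(w * v[d] for w, v in zip(weights, head_vecs)))
    return pooled


behaviors = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
candidate = [1.0, 0.0, 0.0, 1.0]
interest = multi_head_interest(behaviors, candidate, num_heads=2)
```

Each head attends to a different slice of the embedding, so the pooled vector keeps the original embedding size while letting different slices weight the behavior history differently.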

RRLFSOR: An Efficient Self-Supervised Learning Strategy of Graph Convolutional Networks

Aug 17, 2021

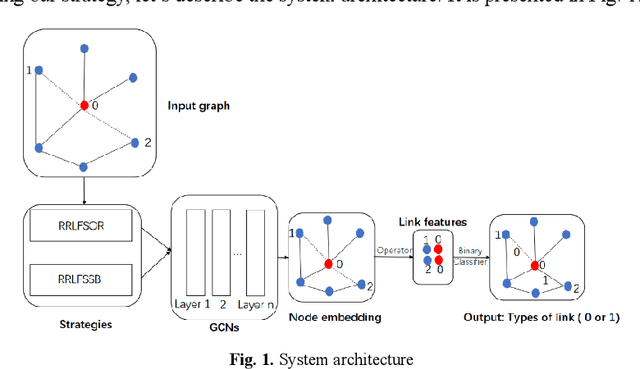

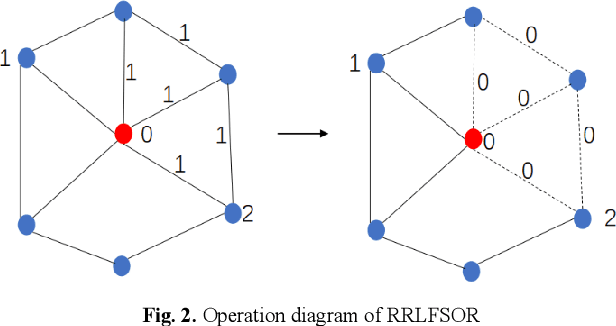

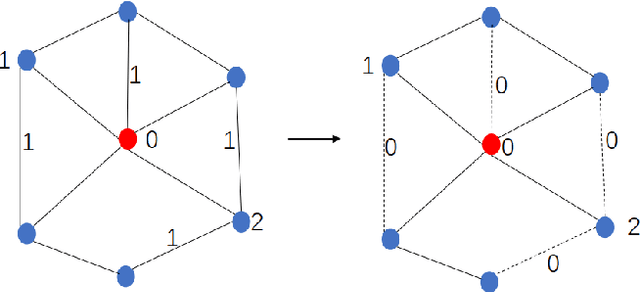

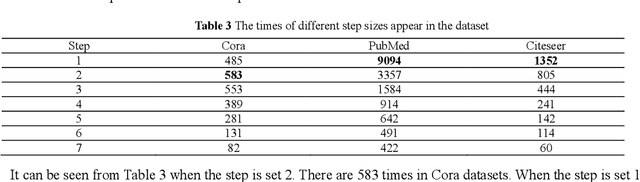

Abstract: To further improve the performance and self-learning ability of graph convolutional networks (GCNs), this paper proposes an efficient self-supervised learning strategy for GCNs, named randomly removed links with a fixed step at one region (RRLFSOR). We also propose a second self-supervised learning strategy for GCNs, named randomly removing links with a fixed step at some blocks (RRLFSSB), to solve the problem that adjacent nodes have no selected step. Experiments on transductive link prediction tasks show that our strategies consistently outperform the baseline models, by up to 21.34% in accuracy, on three benchmark datasets.
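
The abstract does not spell out the removal procedure, so the following is only one possible reading of "removing links with a fixed step at one region", offered as a rough sketch. The function name, its parameters, and the choice to treat a region as a contiguous slice of the edge list are all assumptions made for illustration. The removed links could then serve as self-supervised targets for link prediction.

```python
import random


def remove_links_fixed_step(edges, region_start, region_size, step, seed=None):
    """Split edges into (kept, removed).

    Assumed interpretation: inside one contiguous region of the edge list,
    pick a random starting offset and remove every `step`-th link from there.
    """
    if step < 1 or region_size < 0 or region_start < 0:
        raise ValueError("step must be >= 1; region bounds must be non-negative")
    rng = random.Random(seed)
    region_end = min(region_start + region_size, len(edges))
    offset = rng.randrange(step)  # the random part of the selection
    removed_idx = set(range(region_start + offset, region_end, step))
    kept = [e for i, e in enumerate(edges) if i not in removed_idx]
    removed = [e for i, e in enumerate(edges) if i in removed_idx]
    return kept, removed


# A toy path graph with ten links: (0, 1), (1, 2), ..., (9, 10).
edges = [(i, i + 1) for i in range(10)]
kept, removed = remove_links_fixed_step(
    edges, region_start=2, region_size=6, step=3, seed=0
)
```

Under this reading, the model would be trained on the graph built from `kept` and asked to recover the links in `removed`.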
