Youngnam Lee

Prescribing Deep Attentive Score Prediction Attracts Improved Student Engagement

May 19, 2020

Abstract: Intelligent Tutoring Systems (ITSs) have been developed to provide students with personalized learning experiences by adaptively generating learning paths optimized for each individual. Within the vast scope of ITS research, score prediction stands out as an area of study that enables students to set individually realistic goals based on their current position. Given the expected score provided by the ITS, a student can immediately compare their expected score with their actual score, which directly reflects how reliable the ITS appears to be. In other words, improving the accuracy of predicted scores raises the confidence that a student has in an ITS, which in turn should improve student engagement. However, previous studies have concentrated solely on improving the performance of a prediction model, with little focus on the benefits generated by its practical application. In this paper, we provide empirical evidence that the accuracy of a score prediction model deployed in a real-world setting significantly impacts user engagement. To that end, we apply a state-of-the-art deep attentive neural network-based score prediction model to Santa, a multi-platform English ITS with approximately 780K users in South Korea that focuses exclusively on the TOEIC (Test of English for International Communication) standardized examinations. We run a controlled A/B test on the ITS with two models, based on collaborative filtering and deep attentive neural networks respectively, to verify whether the more accurate model yields higher student engagement. The results show that the attentive model not only induces high student morale (e.g. higher diagnostic test completion ratio, number of questions answered, etc.) but also encourages active engagement (e.g. higher purchase rate, improved total profit, etc.) on Santa.

Towards an Appropriate Query, Key, and Value Computation for Knowledge Tracing

Feb 14, 2020

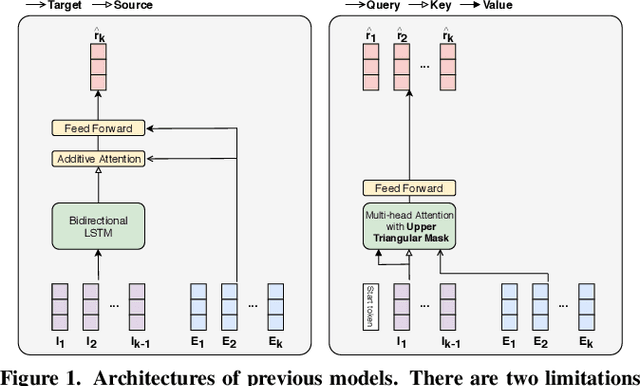

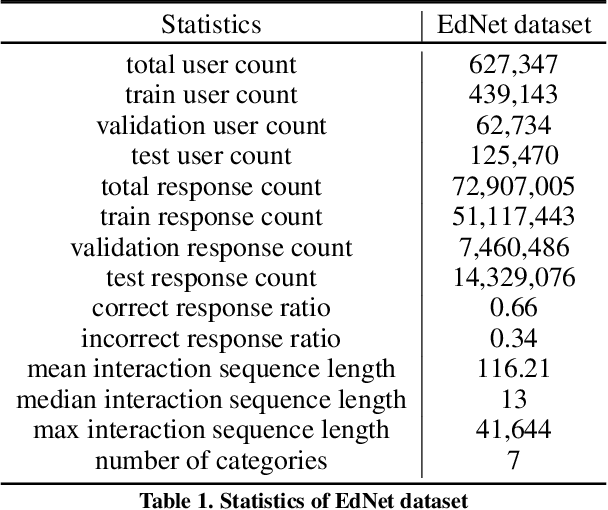

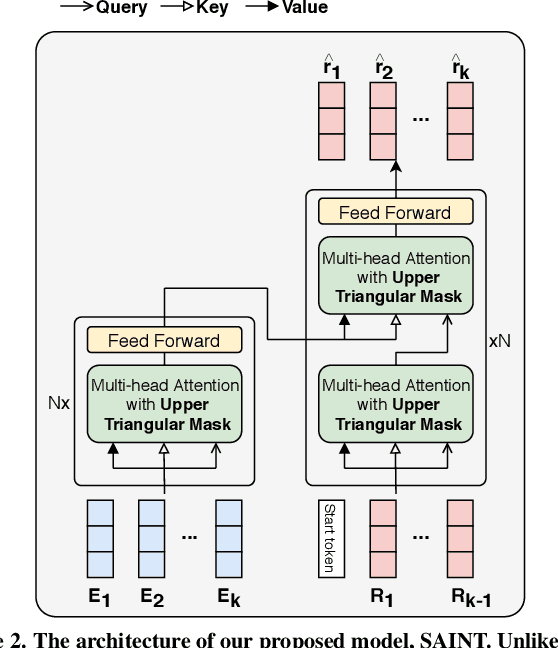

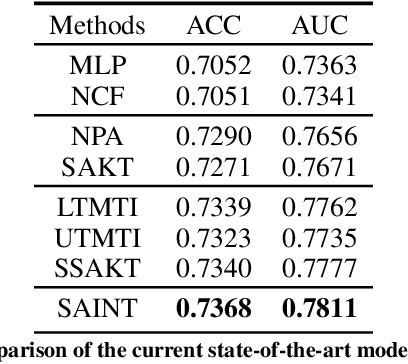

Abstract: Knowledge tracing, the act of modeling a student's knowledge through learning activities, is an extensively studied problem in the field of computer-aided education. Although models with attention mechanisms have outperformed traditional approaches such as Bayesian knowledge tracing and collaborative filtering, they share two limitations. Firstly, the models rely on shallow attention layers and fail to capture complex relations among exercises and responses over time. Secondly, different combinations of queries, keys and values for the self-attention layer in knowledge tracing have not been extensively explored. The usual practice of using exercises as queries and interactions (exercise-response pairs) as keys and values lacks empirical support. In this paper, we propose a novel Transformer-based model for knowledge tracing, SAINT: Separated Self-AttentIve Neural Knowledge Tracing. SAINT has an encoder-decoder structure in which the exercise and response embedding sequences enter the encoder and the decoder, respectively, which allows attention layers to be stacked multiple times. To the best of our knowledge, this is the first work to suggest an encoder-decoder model for knowledge tracing that applies deep self-attentive layers to exercises and responses separately. Empirical evaluations on a large-scale knowledge tracing dataset show that SAINT achieves state-of-the-art performance in knowledge tracing, improving AUC by 1.8% over the current state-of-the-art models.
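The "usual practice" that the abstract questions, exercises as queries and interactions as keys and values, can be illustrated with a minimal pure-Python sketch of causally masked scaled dot-product attention. This is not the SAINT architecture: it omits learned projections, multiple heads, and the encoder-decoder split. The function name and the toy inputs are hypothetical, and the sketch assumes the interaction sequence is shifted by one step so that position i never sees the response to exercise i.

```python
import math


def causal_attention(queries, keys, values):
    """Scaled dot-product attention where position i attends only to positions <= i.

    Assumption for illustration: queries[i] embeds the exercise asked at step i,
    while keys[j] / values[j] embed the interaction *before* step j (shifted by
    one, with a start token at j = 0), so no response leaks into its own query.
    """
    dim = len(queries[0])
    outputs = []
    for i, query in enumerate(queries):
        # Scores against positions 0..i only; later positions are masked out.
        scores = [
            sum(q * k for q, k in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        # Numerically stable softmax over the visible positions.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the visible value vectors.
        outputs.append([
            sum(w * values[j][k] for j, w in enumerate(weights))
            for k in range(len(values[0]))
        ])
    return outputs
```

Calling `causal_attention(exercise_emb, interaction_emb, interaction_emb)` on two equal-length lists of vectors returns one context vector per step; swapping which sequence supplies the queries and which supplies the keys and values is the kind of design choice the paper examines.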

Deep Attentive Study Session Dropout Prediction in Mobile Learning Environment

Feb 14, 2020

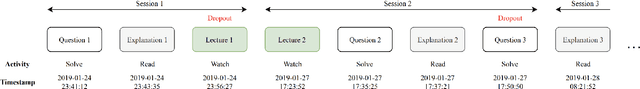

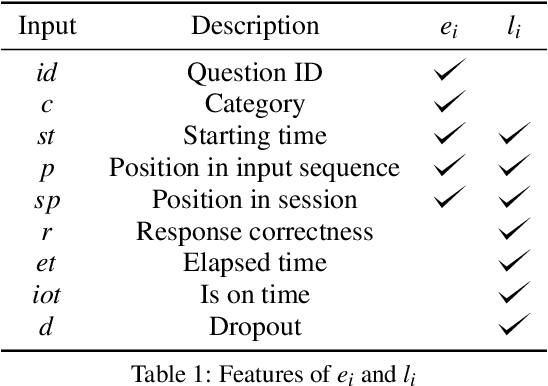

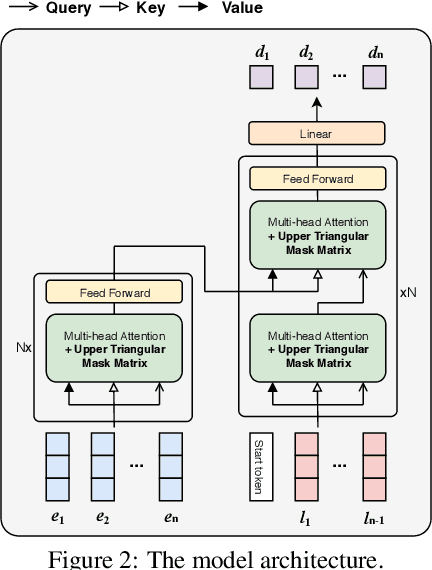

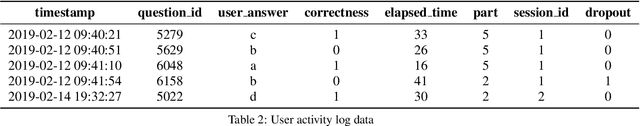

Abstract: Student dropout prediction provides an opportunity to improve student engagement, which maximizes the overall effectiveness of learning experiences. However, research on student dropout has mainly addressed school dropout or course dropout, and study session dropout in a mobile learning environment has not been considered thoroughly. In this paper, we investigate the study session dropout prediction problem in a mobile learning environment. First, we define the concepts of study session, study session dropout, and the study session dropout prediction task in a mobile learning environment. Based on these definitions, we propose a novel Transformer-based model for predicting study session dropout, DAS: Deep Attentive Study Session Dropout Prediction in Mobile Learning Environment. DAS has an encoder-decoder structure composed of stacked multi-head attention and point-wise feed-forward networks. The deep attentive computations in DAS are capable of capturing complex relations among dynamic student interactions. To the best of our knowledge, this is the first attempt to investigate study session dropout in a mobile learning environment. Empirical evaluations on a large-scale dataset show that DAS achieves the best performance, with a significant improvement in area under the receiver operating characteristic curve compared to baseline models.
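The evaluation metric named in the abstract, area under the receiver operating characteristic curve, can be computed directly from its pairwise definition. The following is a minimal sketch for small inputs, not the authors' evaluation code; the function name `roc_auc` and the label convention (1 = dropout, 0 = no dropout) are assumptions made for illustration.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A pair counts 1 if the positive example has the higher score, 0.5 on a
    tie, and 0 otherwise. This O(P * N) form is meant for clarity, not speed.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

A model that ranks every dropout session above every non-dropout session scores 1.0, and a model that assigns the same score to everything scores 0.5.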

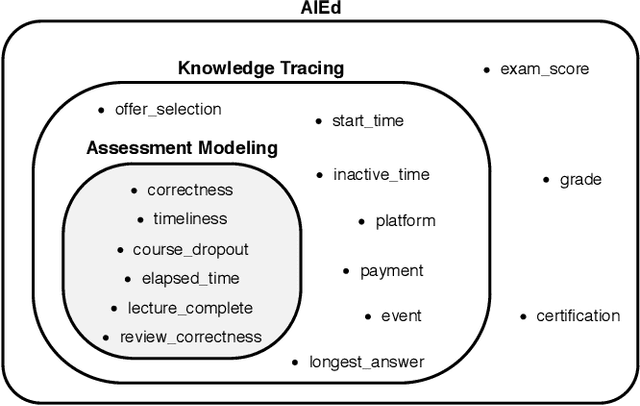

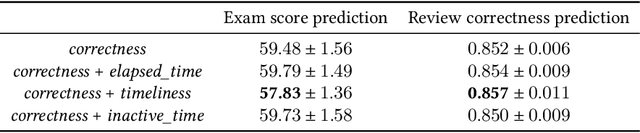

Assessment Modeling: Fundamental Pre-training Tasks for Interactive Educational Systems

Jan 01, 2020

Abstract: Interactive Educational Systems (IESs) have developed rapidly in recent years to address issues of quality and affordability in education. As in other domains of AI, there are specific AIEd tasks for which labels are scarce. For instance, labels like exam score and grade are considered important in educational and social contexts. However, obtaining these labels is costly as they require student actions taken outside the system. Likewise, while student events like course dropout and review correctness are automatically recorded by IESs, they are few in number as the events occur sporadically in practice. A common way of circumventing the label-scarcity problem is the pre-train/fine-tune method. Accordingly, existing works pre-train a model to learn representations of the contents of learning items. However, such methods fail to utilize the available student interaction data and to model student learning behavior. To this end, we propose assessment modeling, fundamental pre-training tasks for IESs. An assessment is a feature of student-system interactions which can act as a pedagogical evaluation, such as student response correctness or timeliness. Assessment modeling is the prediction of assessments conditioned on the surrounding context of interactions. Although it is natural to pre-train on interactive features available in large amounts, narrowing down the prediction targets to assessments retains relevance to the label-scarce educational problems while reducing irrelevant noise. To the best of our knowledge, this is the first work investigating an appropriate pre-training method for predicting educational features from student-system interactions. While the effectiveness of different combinations of assessments is open for exploration, we suggest assessment modeling as a guiding principle for selecting proper pre-training tasks for the label-scarce educational problems.
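One way to picture "prediction of assessments conditioned on the surrounding context" is as a masking step that hides assessment fields at some positions and keeps them as prediction targets. The sketch below is only an illustration under assumptions: the abstract does not specify a masking scheme, and the field names (`question_id`, `correct`, `timely`), the `MASK` placeholder, and the `mask_prob` parameter are all hypothetical.

```python
import random

MASK = None  # hypothetical placeholder for a hidden assessment value


def mask_assessments(interactions, assessment_keys=("correct", "timely"),
                     mask_prob=0.5, seed=0):
    """Hide assessment fields at randomly chosen steps.

    Returns (masked_sequence, targets), where targets is a list of
    (step_index, {assessment_key: original_value}) pairs that a model would
    be trained to predict from the unmasked context. Non-assessment fields
    such as the question id are always left visible.
    """
    rng = random.Random(seed)
    masked, targets = [], []
    for step, interaction in enumerate(interactions):
        item = dict(interaction)  # copy so the input sequence is not mutated
        if rng.random() < mask_prob:
            targets.append((step, {key: interaction[key] for key in assessment_keys}))
            for key in assessment_keys:
                item[key] = MASK
        masked.append(item)
    return masked, targets
```

Restricting `assessment_keys` to a subset (for example, only `correct`) corresponds to choosing a different combination of assessments as the pre-training target, which the abstract leaves open for exploration.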

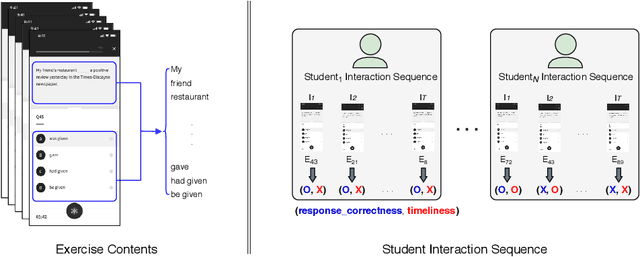

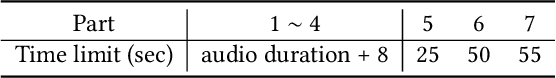

EdNet: A Large-Scale Hierarchical Dataset in Education

Dec 06, 2019

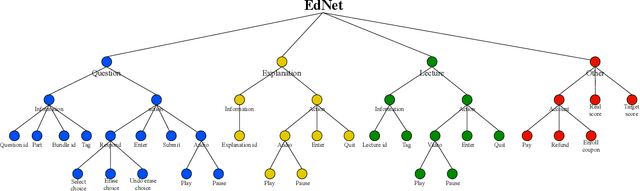

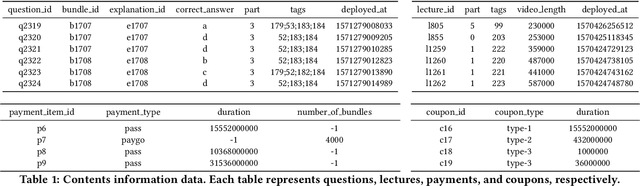

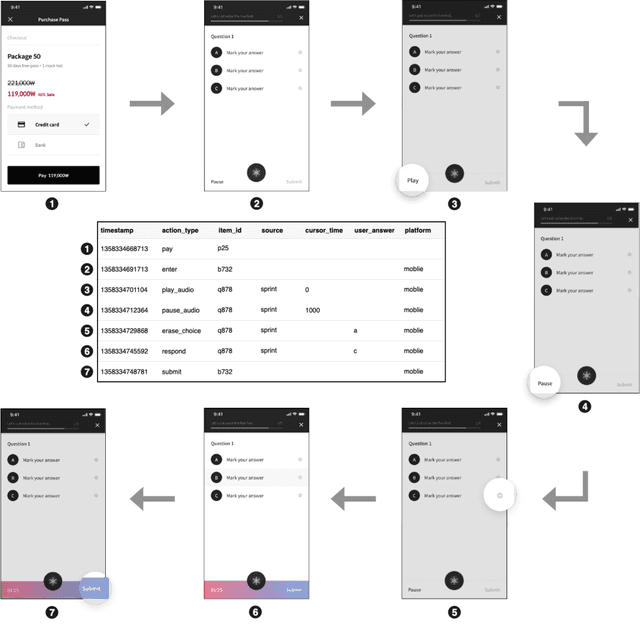

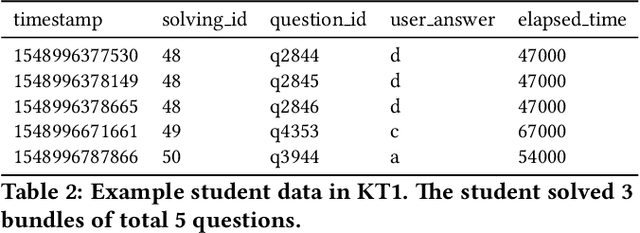

Abstract: With advances in Artificial Intelligence in Education (AIEd) and the ever-growing scale of Interactive Educational Systems (IESs), data-driven approaches have become a common recipe for various tasks such as knowledge tracing and learning path recommendation. Unfortunately, collecting real students' interaction data is often challenging, which results in the lack of a public large-scale benchmark dataset reflecting a wide variety of student behaviors in modern IESs. Although several datasets, such as ASSISTments, Junyi Academy, Synthetic and STATICS, are publicly available and widely used, they are not large enough to leverage the full potential of state-of-the-art data-driven models, and they limit the recorded behaviors to question-solving activities. To this end, we introduce EdNet, a large-scale hierarchical dataset of diverse student activities collected by Santa, a multi-platform self-study solution equipped with an artificial intelligence tutoring system. EdNet contains 131,441,538 interactions from 784,309 students collected over more than 2 years, which is the largest among the ITS datasets released to the public so far. Unlike existing datasets, EdNet provides a wide variety of student actions ranging from question-solving to lecture consumption and item purchasing. Also, EdNet has a hierarchical structure in which the student actions are divided into 4 different levels of abstraction. The features of EdNet are domain-agnostic, allowing EdNet to be extended to different domains easily. The dataset is publicly released under the Creative Commons Attribution-NonCommercial 4.0 International license for research purposes. We plan to host challenges in multiple AIEd tasks with EdNet to provide a common ground for fair comparison between different state-of-the-art models and to encourage the development of practical and effective methods.

Creating A Neural Pedagogical Agent by Jointly Learning to Review and Assess

Jul 01, 2019

Abstract: Machine learning plays an increasing role in intelligent tutoring systems as both the amount of data available and specialization among students grow. Nowadays, these systems are frequently deployed on mobile applications. Users on such mobile education platforms are dynamic, frequently being added, accessing the application with varying levels of focus, and changing while using the service. The education material itself, on the other hand, is often static and is an exhaustible resource whose use in tasks such as problem recommendation must be optimized. The ability to update user models with respect to educational material in real-time is thus essential; however, existing approaches require time-consuming re-training of user features whenever new data is added. In this paper, we introduce a neural pedagogical agent for real-time user modeling in the task of predicting user response correctness, a central task for mobile education applications. Our model, inspired by work in natural language processing on sequence modeling and machine translation, updates user features in real-time via bidirectional recurrent neural networks with an attention mechanism over embedded question-response pairs. We experiment on the mobile education application SantaTOEIC, which has 559k users, 66M response data points as well as a set of 10k study problems each expert-annotated with topic tags and gathered since 2016. Our model outperforms existing approaches over several metrics in predicting user response correctness, notably outperforming other methods on new users without large question-response histories. Additionally, our attention mechanism and annotated tag set allow us to create an interpretable education platform, with a smart review system that addresses the aforementioned issue of varied user attention and problem exhaustion.
