Youngjoo Jo

SC-FEGAN: Face Editing Generative Adversarial Network with User's Sketch and Color

Feb 18, 2019

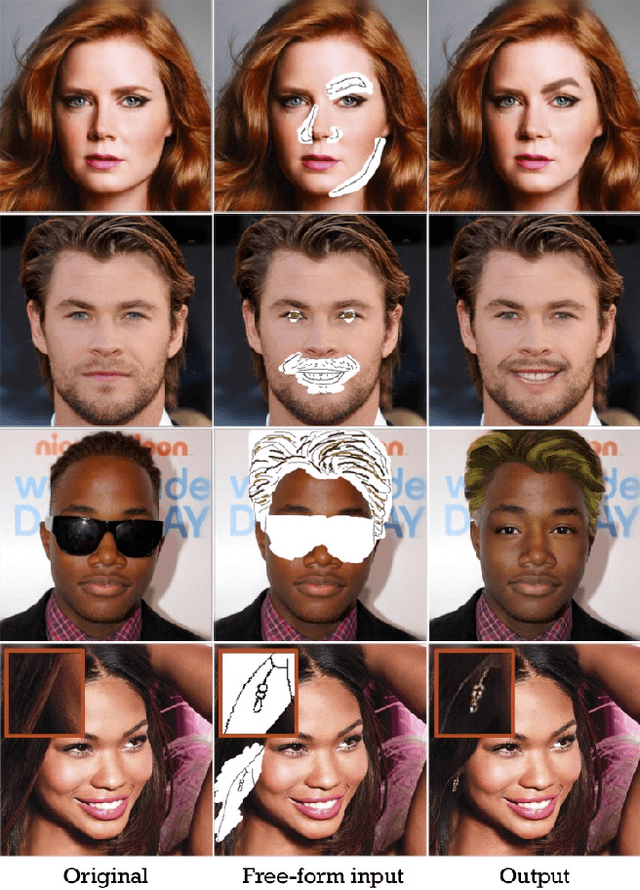

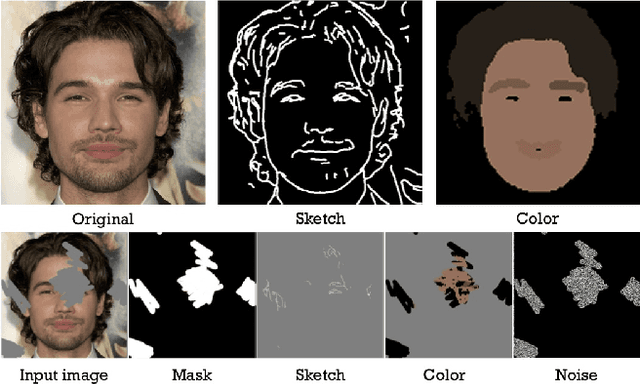

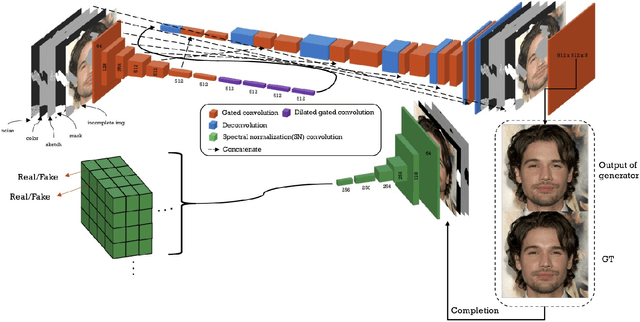

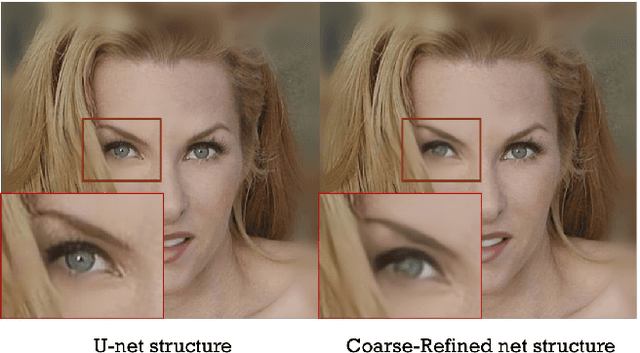

Abstract: We present a novel image editing system that generates images from a free-form mask, sketch, and color provided by the user as input. Our system consists of an end-to-end trainable convolutional network. In contrast to existing methods, our system fully utilizes free-form user input with color and shape. This allows the system to respond to the user's sketch and color input, using it as a guideline to generate an image. In this work, we trained the network with an additional style loss, which made it possible to generate realistic results even when large portions of the image are removed. Our proposed network architecture, SC-FEGAN, is well suited to generating high-quality synthetic images from intuitive user inputs.

Rank of Experts: Detection Network Ensemble

Dec 01, 2017

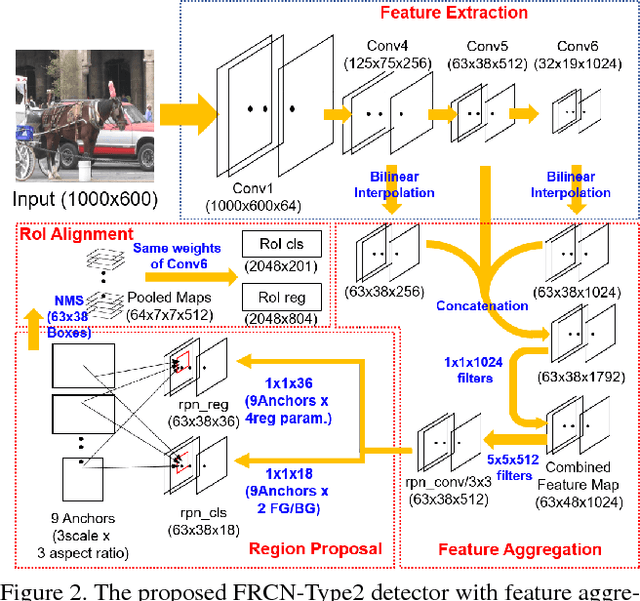

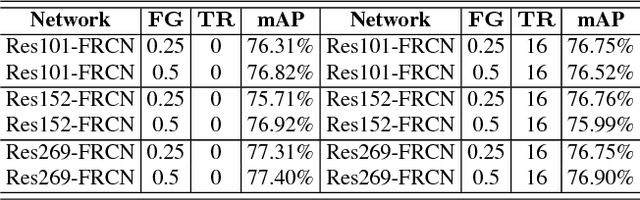

Abstract: Recent advances in convolutional detectors show impressive performance improvements for large-scale object detection. In general, however, detection performance decreases as the number of object classes to be detected increases, and training a single dominant model for all classes is a practically challenging problem due to the limitations of detection models and datasets. In most cases, therefore, modern convolutional detectors show distinct performance differences for each object class. In this paper, in order to build an ensemble detector for large-scale object detection, we present a conceptually simple but very effective class-wise ensemble detection method named Rank of Experts. We first decompose the intractable problem of finding the best detections for all object classes into small subproblems of finding the best ones for each object class. We then solve the detection problem by ranking detectors in order of their average precision for each class, and aggregating the responses of the top-ranked detectors (i.e., experts) for class-wise ensemble detection. The main benefit of our method is that it is easy to implement and does not require any joint training of experts for the ensemble. Based on the proposed Rank of Experts, we won 2nd place in the ILSVRC 2017 object detection competition.
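The class-wise ranking idea in the abstract above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `rank_experts` and `ensemble_detections`, the `top_k` parameter, the data layout, and the choice to simply pool and sort the experts' detections are all assumptions made for illustration. The abstract does not say how responses are aggregated; a real pipeline would likely follow the pooling with a step such as non-maximum suppression.

```python
from collections import defaultdict


def rank_experts(ap_table, top_k=2):
    """Pick the top_k detectors per class by average precision (AP).

    ap_table: {detector_name: {class_name: AP}} (hypothetical layout).
    Returns {class_name: [detector names, best first]}.
    """
    classes = set()
    for per_class_ap in ap_table.values():
        classes.update(per_class_ap)

    experts = {}
    for cls in sorted(classes):
        # Rank detectors by their AP on this class; a missing AP counts as 0.
        ranked = sorted(
            ap_table,
            key=lambda det: ap_table[det].get(cls, 0.0),
            reverse=True,
        )
        experts[cls] = ranked[:top_k]
    return experts


def ensemble_detections(detections, experts):
    """Keep, for each class, only detections produced by that class's experts.

    detections: {detector_name: [(class_name, score, box), ...]}.
    Returns {class_name: [(score, box, detector_name), ...]} sorted by score.
    Pooling and sorting is an assumed, simplified aggregation step.
    """
    pooled = defaultdict(list)
    for det_name, dets in detections.items():
        for cls, score, box in dets:
            if det_name in experts.get(cls, []):
                pooled[cls].append((score, box, det_name))
    for cls in pooled:
        pooled[cls].sort(key=lambda item: item[0], reverse=True)
    return dict(pooled)
```

Because each class is ranked independently, the detectors can be trained separately and the per-class AP values can be measured on a held-out validation set, which matches the abstract's point that no joint training of the experts is needed.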
