Seung-Hwan Bae

SRF-GAN: Super-Resolved Feature GAN for Multi-Scale Representation

Nov 17, 2020

Abstract: Recent convolutional object detectors exploit multi-scale feature representations augmented with a top-down pathway in order to detect objects at different scales and learn stronger semantic feature responses. In general, during top-down feature propagation, the coarser feature maps are upsampled and combined with the features forwarded from the bottom-up pathway, and the combined, semantically stronger features are fed to the detector's heads. However, simple interpolation methods (e.g. nearest neighbor and bilinear) are still used to increase feature resolution, even though they produce noisy and blurred features. In this paper, we propose a novel generator for super-resolving the features of convolutional object detectors. To achieve this, we first design a super-resolved feature GAN (SRF-GAN) consisting of a detection-based generator and a feature patch discriminator. In addition, we present SRF-GAN losses that jointly encourage high-quality super-resolved features and improved detection accuracy. Our SRF generator can substitute for traditional interpolation methods and can easily be fine-tuned together with other conventional detectors. To prove this, we have implemented SRF-GAN on several recent one-stage and two-stage detectors and improved the detection accuracy of those detectors. Code is available at https://github.com/SHLee-cv/SRF-GAN.
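
To make the top-down step concrete, here is a minimal sketch of the baseline the abstract describes: coarser maps are upsampled with nearest-neighbor interpolation and combined with the bottom-up (lateral) features. It assumes element-wise addition as the combination (an FPN-style choice, not stated in the abstract), and the names `nearest_upsample_2x` and `top_down_merge` are hypothetical. The `upsample` argument marks the slot where an SRF-style generator would substitute for interpolation; the generator itself is not shown.

```python
from typing import Callable, List

import numpy as np

# A feature upsampler maps a (C, H, W) map to a (C, 2H, 2W) map.
Upsampler = Callable[[np.ndarray], np.ndarray]


def nearest_upsample_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbor 2x upsampling: each cell is copied into a 2x2 block."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def top_down_merge(laterals: List[np.ndarray],
                   upsample: Upsampler = nearest_upsample_2x) -> List[np.ndarray]:
    """Top-down pathway over bottom-up features ordered finest -> coarsest.

    The coarsest map passes through unchanged; each finer level is its lateral
    feature plus the upsampled merged map from the level above (element-wise
    sum, an assumed FPN-style fusion). `upsample` is the hypothetical slot a
    learned super-resolution generator would fill instead of interpolation.
    """
    merged = [laterals[-1]]
    for lateral in reversed(laterals[:-1]):
        merged.append(lateral + upsample(merged[-1]))
    return merged[::-1]  # restore finest -> coarsest order


if __name__ == "__main__":
    C = 4
    laterals = [np.ones((C, 32, 32)), np.ones((C, 16, 16)), np.ones((C, 8, 8))]
    outs = top_down_merge(laterals)
    print([o.shape for o in outs])
```

Because the upsampler is passed in as a function, swapping interpolation for a learned module changes only that argument, which mirrors the abstract's claim that the SRF generator can substitute for traditional interpolation.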

Object Detection based on Region Decomposition and Assembly

Jan 24, 2019

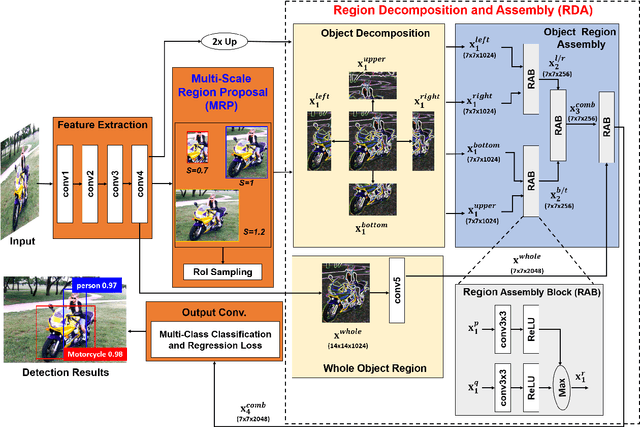

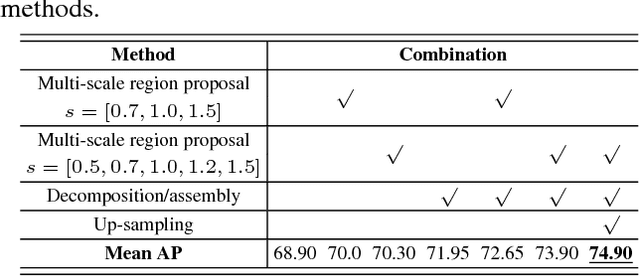

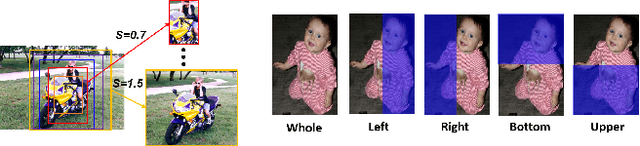

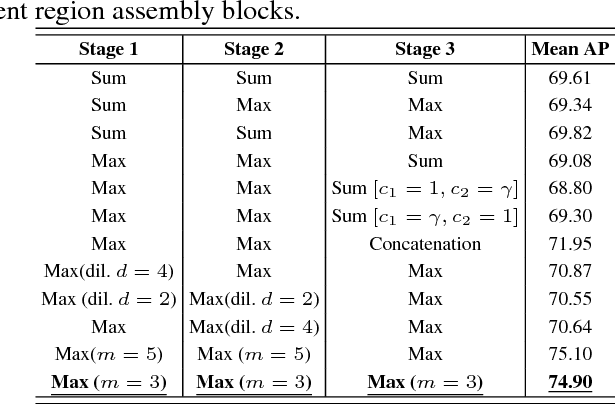

Abstract: Region-based object detection infers object regions for one or more categories in an image. Owing to recent advances in deep learning and region proposal methods, object detectors based on convolutional neural networks (CNNs) have flourished and produced promising detection results. However, detection accuracy is often degraded by the low discriminability of object CNN features caused by occlusions and inaccurate region proposals. In this paper, we therefore propose a region decomposition and assembly detector (R-DAD) for more accurate object detection. In the proposed R-DAD, we first decompose an object region into multiple small regions. To capture the entire appearance and the part details of the object jointly, we extract CNN features within the whole object region and within the decomposed regions. We then learn the semantic relations between the object and its parts by combining the multi-region features stage by stage with region assembly blocks, and use the combined, high-level semantic features for object classification and localization. In addition, for more accurate region proposals, we propose a multi-scale proposal layer that can generate object proposals of various scales. We integrate R-DAD into several feature extractors and demonstrate distinct performance improvements on PASCAL07/12 and MSCOCO18 compared to recent convolutional detectors.
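
The decomposition and multi-region feature extraction steps can be sketched as follows. This is an illustration under stated assumptions, not the R-DAD implementation: it assumes each object box is split into left, right, top, and bottom halves (the abstract only says "multiple small regions"), and it uses plain average pooling over the feature-map cells a box covers as a simplified stand-in for RoI pooling. All function names are hypothetical, and the learned region assembly blocks are not shown.

```python
from typing import Dict, Tuple

import numpy as np

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def decompose_region(box: Box) -> Dict[str, Box]:
    """Whole region plus four half regions (an assumed decomposition)."""
    x1, y1, x2, y2 = box
    xm, ym = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    return {
        "whole": box,
        "left": (x1, y1, xm, y2),
        "right": (xm, y1, x2, y2),
        "top": (x1, y1, x2, ym),
        "bottom": (x1, ym, x2, y2),
    }


def region_mean_feature(fmap: np.ndarray, box: Box, stride: int) -> np.ndarray:
    """Average-pool a (C, H, W) feature map over the cells covered by `box`.

    The box is projected onto the feature grid by dividing by `stride`;
    indices are clipped to the map, and at least one cell is always pooled.
    """
    _, h, w = fmap.shape
    c1 = min(max(int(np.floor(box[0] / stride)), 0), w - 1)
    r1 = min(max(int(np.floor(box[1] / stride)), 0), h - 1)
    c2 = min(max(int(np.ceil(box[2] / stride)), c1 + 1), w)
    r2 = min(max(int(np.ceil(box[3] / stride)), r1 + 1), h)
    return fmap[:, r1:r2, c1:c2].mean(axis=(1, 2))


def multi_region_features(fmap: np.ndarray, box: Box, stride: int) -> Dict[str, np.ndarray]:
    """One pooled C-dim feature per region: the whole object and each part."""
    return {name: region_mean_feature(fmap, sub, stride)
            for name, sub in decompose_region(box).items()}


if __name__ == "__main__":
    fmap = np.random.rand(8, 16, 16)  # C=8 channels on a 16x16 grid
    feats = multi_region_features(fmap, (32.0, 16.0, 96.0, 112.0), stride=8)
    print({k: v.shape for k, v in feats.items()})
```

In the detector described above, these per-region features would then be combined stage by stage by learned region assembly blocks; here they are simply returned side by side.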

Rank of Experts: Detection Network Ensemble

Dec 01, 2017

Abstract: Recent advances in convolutional detectors show impressive performance improvements for large-scale object detection. However, detection performance usually decreases as the number of object classes to be detected increases, and training a single model that dominates for all classes is a practically challenging problem because of the limitations of detection models and datasets. Therefore, in most cases, modern convolutional detectors show distinct performance differences from one object class to another. In this paper, to build an ensemble detector for large-scale object detection, we present a conceptually simple but very effective class-wise ensemble detection method named Rank of Experts. We first decompose the intractable problem of finding the best detections for all object classes into small subproblems of finding the best ones for each object class. We then solve the detection problem by ranking detectors by their average precision (AP) for each class, and aggregate the responses of the top-ranked detectors (i.e. experts) for class-wise ensemble detection. The main benefits of our method are that it is easy to implement and does not require any joint training of the experts for the ensemble. Based on the proposed Rank of Experts, we won 2nd place in the ILSVRC 2017 object detection competition.
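
The class-wise ranking and aggregation described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes per-class AP values measured on a validation set, a fixed number `k` of experts per class, and greedy non-maximum suppression (NMS) as the way the experts' responses are aggregated; the abstract does not specify the aggregation rule. All function names are hypothetical.

```python
from typing import Dict, List, Tuple

# (x1, y1, x2, y2, score) for one detection of a single class
Detection = Tuple[float, float, float, float, float]


def rank_experts(ap: Dict[str, Dict[str, float]], k: int) -> Dict[str, List[str]]:
    """ap[detector][cls] is the validation AP; return the top-k detectors per class."""
    classes = sorted({c for per_cls in ap.values() for c in per_cls})
    return {
        c: sorted(ap, key=lambda d: ap[d].get(c, 0.0), reverse=True)[:k]
        for c in classes
    }


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], thr: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep the highest-scoring box and drop boxes overlapping it."""
    keep: List[Detection] = []
    for d in sorted(dets, key=lambda x: x[4], reverse=True):
        if all(iou(d, kept) < thr for kept in keep):
            keep.append(d)
    return keep


def class_wise_ensemble(
    dets: Dict[str, Dict[str, List[Detection]]],
    experts: Dict[str, List[str]],
    thr: float = 0.5,
) -> Dict[str, List[Detection]]:
    """dets[detector][cls] holds one image's detections; pool only the experts' boxes."""
    out: Dict[str, List[Detection]] = {}
    for cls, chosen in experts.items():
        pooled = [d for name in chosen for d in dets.get(name, {}).get(cls, [])]
        out[cls] = nms(pooled, thr)
    return out


if __name__ == "__main__":
    ap = {"A": {"cat": 0.7, "dog": 0.4},
          "B": {"cat": 0.5, "dog": 0.6},
          "C": {"cat": 0.6, "dog": 0.3}}
    experts = rank_experts(ap, k=2)
    dets = {"A": {"cat": [(0, 0, 10, 10, 0.9)]},
            "B": {"cat": [(50, 50, 60, 60, 0.99)]},
            "C": {"cat": [(1, 1, 10, 10, 0.8), (20, 20, 30, 30, 0.7)]}}
    print(experts)
    print(class_wise_ensemble(dets, experts))
```

Because each class only consults its own experts, detector B's cat detection is ignored in the usage example even though its score is highest; no joint training is involved, only a lookup of per-class AP.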
