Yonghyeok Seo

A Single Multi-Task Deep Neural Network with Post-Processing for Object Detection with Reasoning and Robotic Grasp Detection

Sep 16, 2019

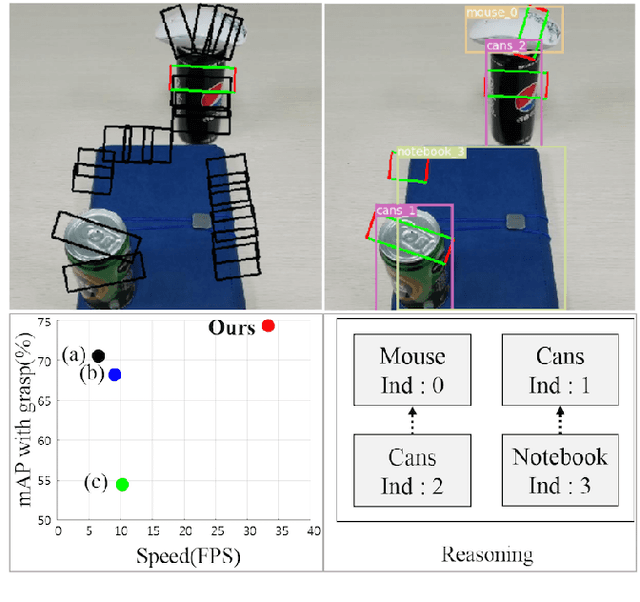

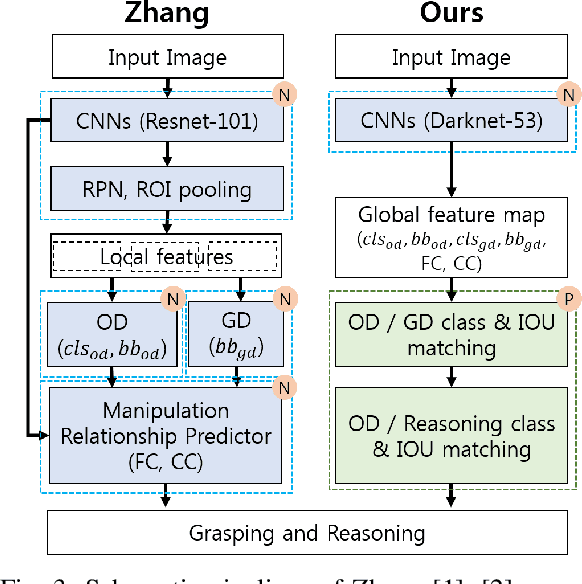

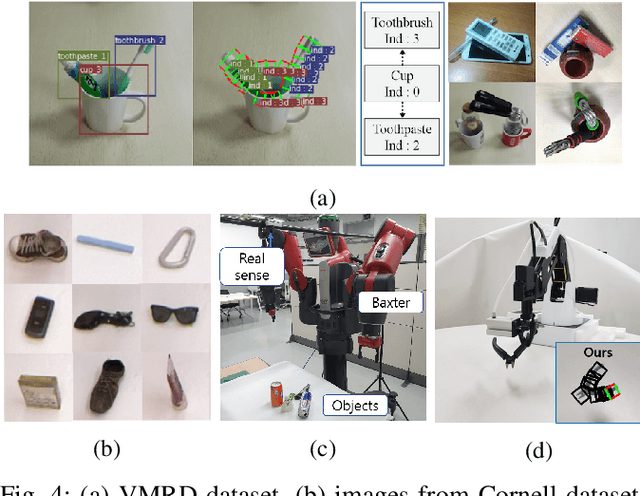

Abstract: Recently, robotic grasp detection (GD) and object detection (OD) with reasoning have been investigated using deep neural networks (DNNs). Previous works have combined these tasks using separate networks so that robots can grasp specific target objects in cluttered, stacked, complex piles of novel objects using a single RGB-D camera. We propose a single multi-task DNN that yields information on GD, OD, and relationship reasoning among objects with simple post-processing. Our proposed methods yielded state-of-the-art performance with accuracies of 98.6% and 74.2% and computation speeds of 33 and 62 frames per second on VMRD and Cornell datasets, respectively. Our methods also yielded a 95.3% grasp success rate for single novel object grasping with a 4-axis robot arm and an 86.7% grasp success rate for cluttered novel objects with a Baxter robot.

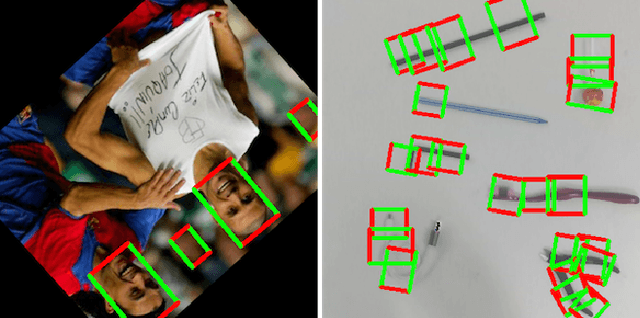

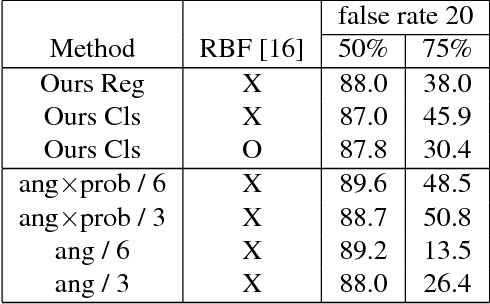

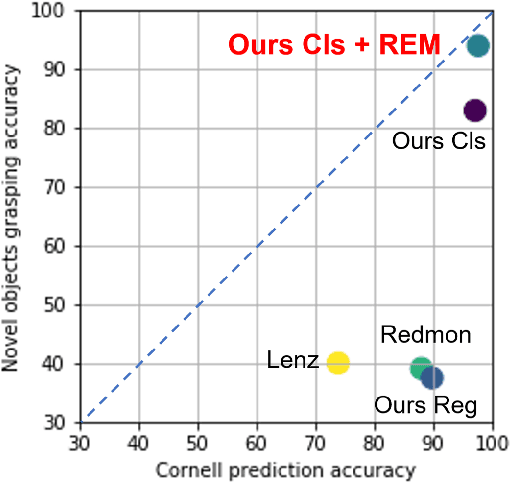

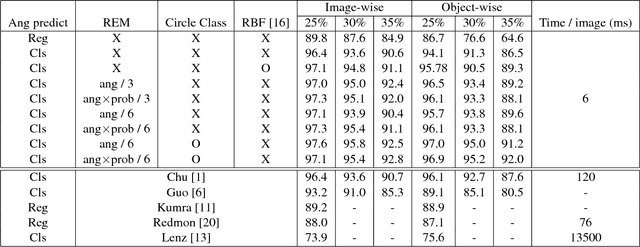

Rotation Ensemble Module for Detecting Rotation-Invariant Features

Dec 19, 2018

Abstract: Deep learning has improved many computer vision tasks by using data-driven features instead of hand-crafted features. However, geometric transformations of input images often degrade the performance of deep learning based methods. In particular, rotation-invariant features are important in computer vision tasks such as face detection, biological feature detection in microscopy images, and robotic grasp detection, since the input image can be fed into the network at any rotation angle. In this paper, we propose a rotation ensemble module (REM) to efficiently train and utilize rotation-invariant features in a deep neural network for computer vision tasks. We evaluated our proposed REM on face detection with the FDDB dataset, on robotic grasp detection with the Cornell dataset, and on real robotic grasp tasks with several novel objects. REM-based face detection deep neural networks yielded up to 50.8% accuracy on the FDDB dataset at false rate 20 with IOU 75%, which is about 10.7% higher than the baseline. Robotic grasp detection deep neural networks with our REM also yielded up to 97.6% accuracy on the Cornell dataset, which is higher than the current state-of-the-art performance. In a robotic grasp task using a real 4-axis robotic arm with several novel objects, our REM-based robotic grasp achieved a success rate of up to 93.8%, which is significantly higher than that of the baseline robotic grasps (11.0-56.3%).
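
The "IOU 75%" threshold in the abstract refers to intersection over union, the ratio of the overlap between a predicted region and a ground-truth region to the area of their union. The following is a minimal sketch, assuming axis-aligned boxes given as `(x1, y1, x2, y2)`; the function name `box_iou` and the box format are illustrative assumptions, and the paper's exact evaluation protocol is not described here.

```python
def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2).

    Hypothetical helper for illustration; not taken from the paper.
    """
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Width and height of the overlapping rectangle (zero if disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union = sum of both areas minus the overlap counted twice.
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


def is_match(pred, truth, threshold=0.75):
    """A detection counts as correct when IoU reaches the threshold."""
    return box_iou(pred, truth) >= threshold
```

Under this definition, a prediction shifted by half its width relative to the ground truth would fall well below a 75% threshold, which is why this setting is a strict test of localization.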

Real-Time, Highly Accurate Robotic Grasp Detection using Fully Convolutional Neural Networks with High-Resolution Images

Sep 16, 2018

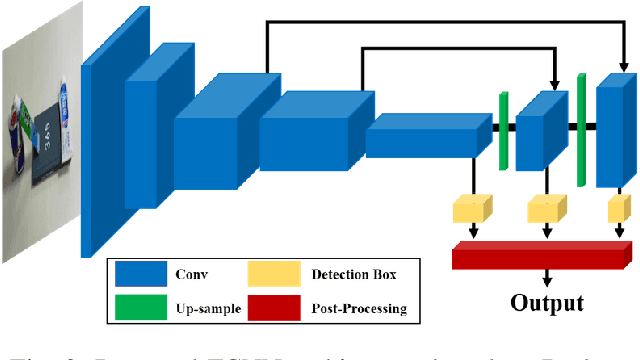

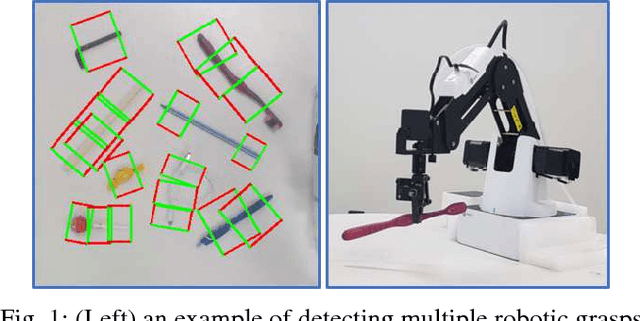

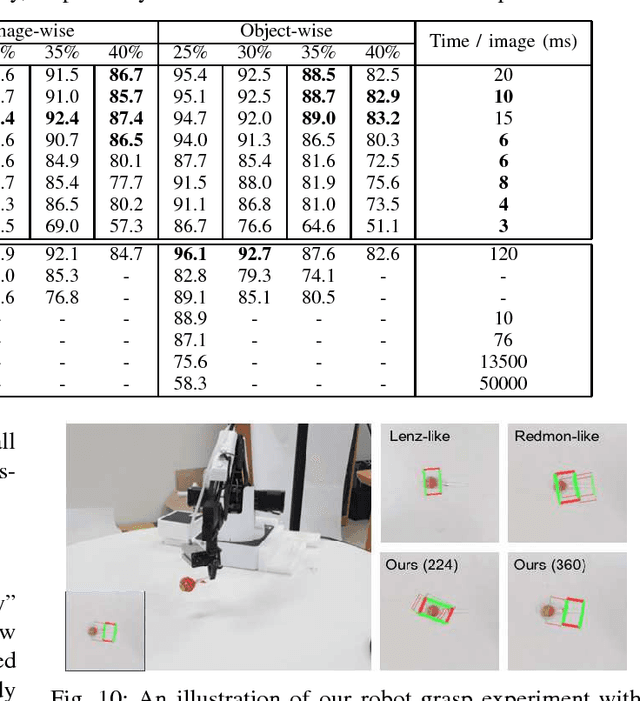

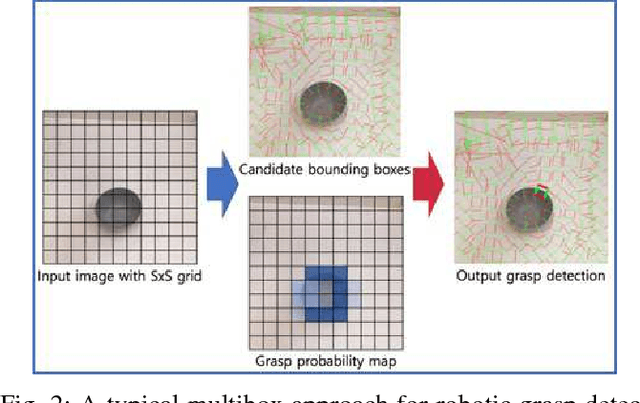

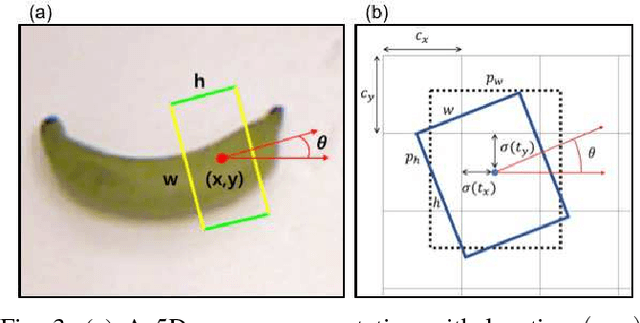

Abstract: Robotic grasp detection for novel objects is a challenging task, but over the last few years, deep learning based approaches have achieved remarkable performance improvements, up to 96.1% accuracy, with RGB-D data. In this paper, we propose fully convolutional neural network (FCNN) based methods for robotic grasp detection. Our methods achieved state-of-the-art detection accuracy (up to 96.6%) with state-of-the-art real-time computation time for high-resolution images (6-20 ms per 360x360 image) on the Cornell dataset. Because the network is fully convolutional, our proposed method can be applied to images of any size to detect multiple grasps on multiple objects. The proposed methods were evaluated using a 4-axis robot arm with a small parallel gripper and an RGB-D camera for grasping challenging small, novel objects. With accurate vision-robot coordinate calibration through our proposed learning-based, fully automatic approach, our proposed method yielded a 90% success rate.
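
To relate the per-image computation time quoted above to a frame rate, the conversion can be sketched as follows. This assumes images are processed one at a time with no batching or pipelining, which the abstract does not state; the function name `latency_ms_to_fps` is hypothetical.

```python
def latency_ms_to_fps(latency_ms):
    """Convert a per-image latency in milliseconds to frames per second.

    Assumes strictly sequential processing of single images.
    """
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# The 6-20 ms range quoted in the abstract, under the assumption above.
fastest = latency_ms_to_fps(6)   # about 166.7 frames per second
slowest = latency_ms_to_fps(20)  # 50 frames per second
```

Under that assumption, the quoted range corresponds to roughly 50 to 167 frames per second.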
