Rotation Ensemble Module for Detecting Rotation-Invariant Features


Dec 19, 2018

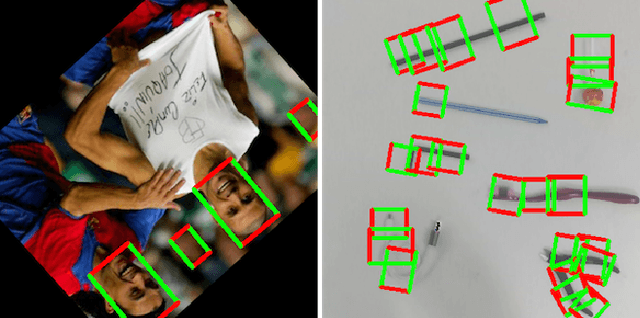

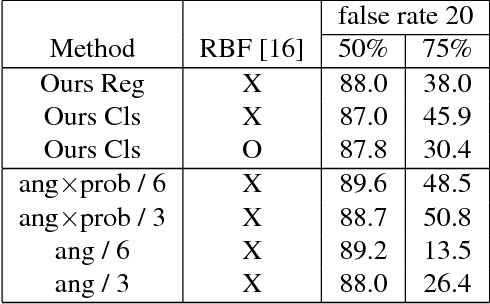

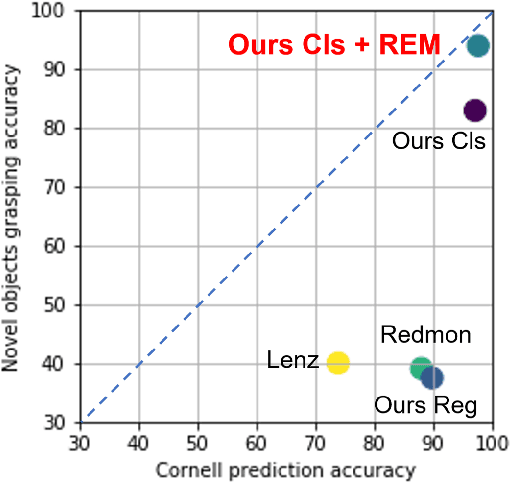

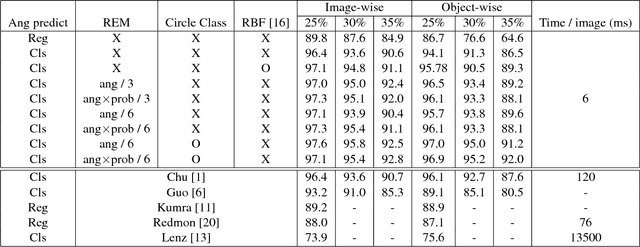

Deep learning has improved many computer vision tasks by using data-driven features instead of hand-crafted ones. However, geometric transformations of input images often degrade the performance of deep learning based methods. In particular, rotation-invariant features are important in computer vision tasks such as face detection, biological feature detection in microscopy images, and robot grasp detection, since the input image can be fed into the network at any rotation angle. In this paper, we propose a rotation ensemble module (REM) to efficiently train and utilize rotation-invariant features in a deep neural network for computer vision tasks. We evaluated the proposed REM on face detection with the FDDB dataset, on robotic grasp detection with the Cornell dataset, and on real robotic grasp tasks with several novel objects. REM-based face detection networks yielded up to 50.8% accuracy on the FDDB dataset at a false rate of 20 with an IoU of 75%, which is about 10.7% higher than the baseline. Robotic grasp detection networks with our REM yielded up to 97.6% accuracy on the Cornell dataset, which is higher than the current state-of-the-art performance. In a robotic grasp task using a real 4-axis robotic arm with several novel objects, our REM-based robotic grasping achieved up to 93.8%, which is significantly higher than the baseline robotic grasps (11.0-56.3%).
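To make the notion of a rotation-invariant feature concrete, the following is a minimal sketch of the general idea of pooling a response over rotated copies of the input. It is not the paper's REM, whose architecture the abstract does not describe. The names `rot90`, `horizontal_edge`, and `rotation_ensemble` are hypothetical, and the sketch assumes only 90-degree rotations and a toy edge feature on a small grid.

```python
from typing import Callable, List

Image = List[List[float]]


def rot90(img: Image) -> Image:
    """Rotate a 2-D grid by 90 degrees counter-clockwise."""
    # Transpose the grid, then reverse the row order.
    return [list(row) for row in zip(*img)][::-1]


def horizontal_edge(img: Image) -> float:
    """Toy feature (assumption): total left-to-right intensity increase.

    This response depends on orientation, so it is NOT rotation-invariant.
    """
    return sum(
        max(0.0, row[c + 1] - row[c])
        for row in img
        for c in range(len(row) - 1)
    )


def rotation_ensemble(img: Image, feature: Callable[[Image], float]) -> float:
    """Max-pool a feature over the four 90-degree rotations of the input.

    Rotating the input by 90 degrees only permutes the four rotated copies,
    so the pooled response is unchanged.
    """
    responses = []
    current = img
    for _ in range(4):
        responses.append(feature(current))
        current = rot90(current)
    return max(responses)


# A vertical edge on the right side of a 3x3 image.
image = [
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
]
rotated = rot90(image)

plain_original = horizontal_edge(image)
plain_rotated = horizontal_edge(rotated)
pooled_original = rotation_ensemble(image, horizontal_edge)
pooled_rotated = rotation_ensemble(rotated, horizontal_edge)
```

In this toy case the plain feature responds differently to the original and the rotated image, while the pooled response is the same for both. Invariance here holds only for multiples of 90 degrees; handling arbitrary angles, as the abstract targets, would need a finer set of rotations or a learned mechanism.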
