Yinyi Guo

Aligning Audio Captions with Human Preferences

Sep 18, 2025

Abstract: Current audio captioning systems rely heavily on supervised learning with paired audio-caption datasets, which are expensive to curate and may not reflect human preferences in real-world scenarios. To address this limitation, we propose a preference-aligned audio captioning framework based on Reinforcement Learning from Human Feedback (RLHF). To effectively capture nuanced human preferences, we train a Contrastive Language-Audio Pretraining (CLAP)-based reward model using human-labeled pairwise preference data. This reward model is integrated into a reinforcement learning framework to fine-tune any baseline captioning system without relying on ground-truth caption annotations. Extensive human evaluations across multiple datasets show that our method produces captions preferred over those from baseline models, particularly in cases where the baseline models fail to provide correct and natural captions. Furthermore, our framework achieves performance comparable to supervised approaches with ground-truth data, demonstrating its effectiveness in aligning audio captioning with human preferences and its scalability in real-world scenarios.
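As a rough illustration of how a reward model can be trained from pairwise preference labels, the sketch below uses the standard Bradley-Terry style objective. The abstract does not state the exact loss, so this choice is an assumption, and the function name `pairwise_preference_loss` is hypothetical. In practice the two rewards would come from the CLAP-based reward model scoring the preferred and rejected captions for the same audio clip.

```python
import math


def pairwise_preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(r_preferred - r_rejected)).

    Assumption: this is a common pairwise objective, not necessarily the
    one used in the paper.
    """
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); compute it in a numerically stable way.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# The loss is small when the preferred caption scores higher, large otherwise.
loss_agree = pairwise_preference_loss(2.0, 0.0)
loss_disagree = pairwise_preference_loss(0.0, 2.0)
```

Minimizing this loss pushes the reward of the human-preferred caption above that of the rejected one, which is what lets the reward model stand in for ground-truth captions during reinforcement learning.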

Spatial Audio Motion Understanding and Reasoning

Sep 18, 2025

Abstract: Spatial audio reasoning enables machines to interpret auditory scenes by understanding events and their spatial attributes. In this work, we focus on spatial audio understanding with an emphasis on reasoning about moving sources. First, we introduce a spatial audio encoder that processes spatial audio to detect multiple overlapping events and estimate their spatial attributes, Direction of Arrival (DoA) and source distance, at the frame level. To generalize to unseen events, we incorporate an audio grounding model that aligns audio features with semantic audio class text embeddings via a cross-attention mechanism. Second, to answer complex queries about dynamic audio scenes involving moving sources, we condition a large language model (LLM) on structured spatial attributes extracted by our model. Finally, we introduce a spatial audio motion understanding and reasoning benchmark dataset and demonstrate our framework's performance against the baseline model.
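To make the idea of conditioning an LLM on structured spatial attributes more concrete, here is a minimal sketch that condenses a frame-level DoA and distance track into a few motion attributes. Everything in it is an assumption for illustration: the function name `summarize_motion`, the output fields, the thresholds, and the convention that azimuth increases counterclockwise are not taken from the paper.

```python
def summarize_motion(event, azimuths_deg, distances_m,
                     min_turn_deg=10.0, min_range_m=0.5):
    """Condense per-frame azimuth (degrees) and distance (meters) into motion attributes.

    Hypothetical format; assumes azimuth increases counterclockwise.
    """
    # Sum wrapped frame-to-frame steps so that 350 -> 0 counts as +10, not -350.
    net_turn = 0.0
    for prev, cur in zip(azimuths_deg, azimuths_deg[1:]):
        net_turn += (cur - prev + 180.0) % 360.0 - 180.0

    if net_turn > min_turn_deg:
        rotation = "counterclockwise"
    elif net_turn < -min_turn_deg:
        rotation = "clockwise"
    else:
        rotation = "steady"

    # Compare first and last distance to decide whether the source approaches.
    range_change = distances_m[-1] - distances_m[0]
    if range_change < -min_range_m:
        range_trend = "approaching"
    elif range_change > min_range_m:
        range_trend = "receding"
    else:
        range_trend = "steady"

    return {
        "event": event,
        "net_azimuth_change_deg": net_turn,
        "rotation": rotation,
        "range_trend": range_trend,
    }


track = summarize_motion("car", [350.0, 0.0, 10.0, 20.0], [5.0, 4.0, 3.0, 2.0])
```

A dictionary like `track` could then be serialized into the LLM prompt alongside the user's question about the scene.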

Comprehensive Audio Query Handling System with Integrated Expert Models and Contextual Understanding

Dec 05, 2024

Abstract: This paper presents a comprehensive chatbot system designed to handle a wide range of audio-related queries by integrating multiple specialized audio processing models. The proposed system uses an intent classifier, trained on a diverse audio query dataset, to route queries about audio content to expert models such as Automatic Speech Recognition (ASR), Speaker Diarization, Music Identification, and Text-to-Audio generation. A 3.8B-parameter LLM then takes inputs from an Audio Context Detection (ACD) module, which extracts audio event information from the audio, and post-processes the text-domain outputs from the expert models to compute the final response to the user. We evaluated the system on custom audio tasks and MMAU sound set benchmarks. The custom datasets were motivated by target use cases not covered in industry benchmarks and included ACD-timestamp-QA (Question Answering) as well as ACD-temporal-QA datasets to evaluate timestamp and temporal reasoning questions, respectively. First, we determined that a BERT-based intent classifier outperforms an LLM few-shot intent classifier in routing queries. Experiments further show that our approach significantly improves accuracy on some custom tasks compared to state-of-the-art Large Audio Language Models and outperforms models in the 7B parameter size range on the sound test set of the MMAU benchmark, thereby offering an attractive option for on-device deployment.

Confidence Calibration for Audio Captioning Models

Sep 13, 2024

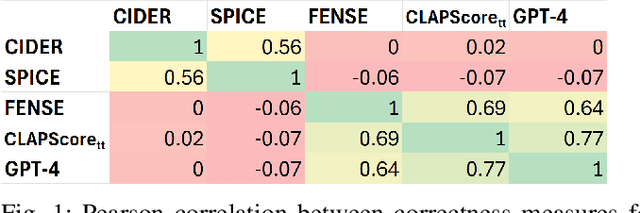

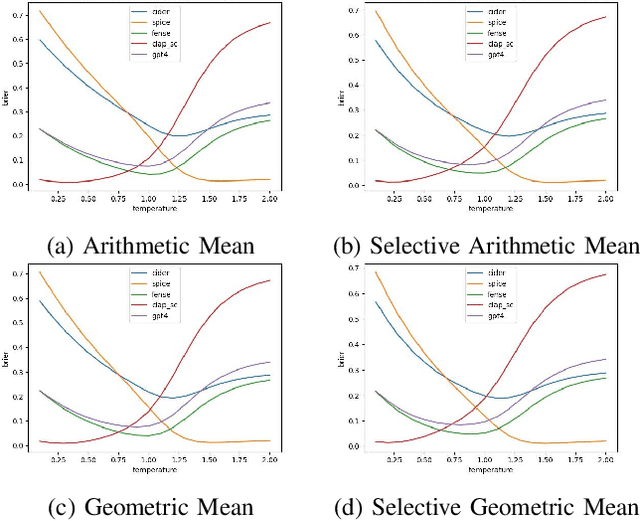

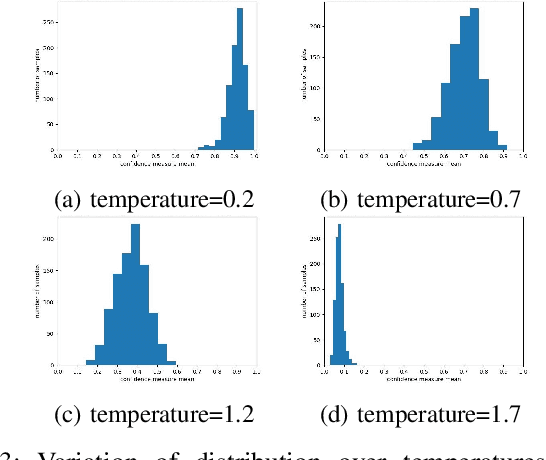

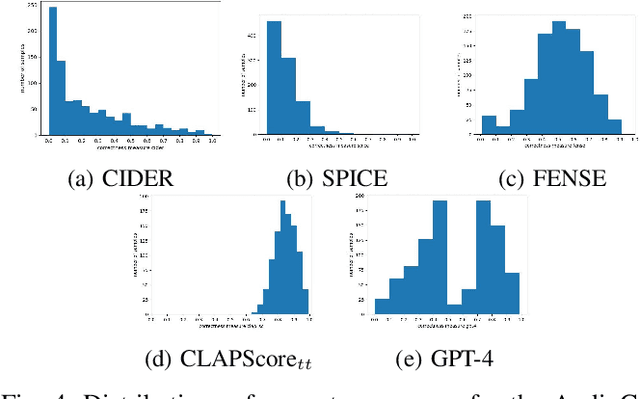

Abstract: Systems that automatically generate text captions for audio, images, and video lack a confidence indicator of the relevance and correctness of the generated sequences. To address this, we build on existing methods of confidence measurement for text by introducing selective pooling of token probabilities, which aligns better with traditional correctness measures than conventional pooling does. Further, we propose directly measuring the similarity between input audio and text in a shared embedding space. To measure self-consistency, we adapt semantic entropy for audio captioning, and find that these two methods align even better than pooling-based metrics with the correctness measure that calculates acoustic similarity between captions. Finally, we explain why temperature scaling of confidences improves calibration.
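Two of the quantities mentioned above can be sketched in a few lines. The example below is a simplified illustration, not the paper's implementation: `semantic_entropy` assumes the sampled captions have already been grouped into meaning clusters (the clustering step is omitted) and uses a count-based estimate, and `temperature_scaled_probs` is the standard softmax with a temperature. Both function names are hypothetical.

```python
import math
from collections import Counter


def semantic_entropy(cluster_ids):
    """Entropy over meaning clusters of sampled captions.

    Assumption: cluster_ids[i] is the semantic cluster of the i-th sampled
    caption; low entropy means the samples agree with each other.
    """
    counts = Counter(cluster_ids)
    total = len(cluster_ids)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def temperature_scaled_probs(logits, temperature):
    """Softmax of logits / temperature; temperature > 1 flattens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    norm = sum(exps)
    return [e / norm for e in exps]


consistent = semantic_entropy([0, 0, 0, 0])    # all samples share one meaning
inconsistent = semantic_entropy([0, 1, 2, 3])  # every sample means something else
softened = temperature_scaled_probs([2.0, 0.0], temperature=2.0)
```

Lower semantic entropy suggests higher self-consistency, and a temperature above 1 reduces overconfident probabilities before they are pooled into a confidence score.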

Enhancing Temporal Understanding in Audio Question Answering for Large Audio Language Models

Sep 10, 2024

Abstract: The Audio Question Answering task includes audio event classification, audio captioning, and open-ended reasoning. Recently, Audio Question Answering has garnered attention due to the advent of Large Audio Language Models (LALMs). Current literature focuses on constructing LALMs by integrating audio encoders with text-only Large Language Models through a projection module. While LALMs excel in general audio understanding, they are limited in temporal reasoning, which may hinder their commercial applications and on-device deployment. This paper addresses these challenges and limitations in audio temporal reasoning. First, we introduce a data augmentation technique for generating reliable audio temporal questions and answers using an LLM. Second, we propose a continued finetuning curriculum learning strategy to specialize in temporal reasoning without compromising performance on finetuned tasks. Finally, we develop a reliable and transparent automated metric, assisted by an LLM, to measure the correlation between LALM responses and ground truth data. We demonstrate the effectiveness of our proposed techniques using SOTA LALMs on public audio benchmark datasets.

Parameter Efficient Audio Captioning With Faithful Guidance Using Audio-text Shared Latent Representation

Sep 06, 2023

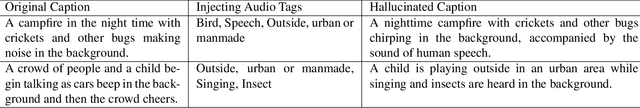

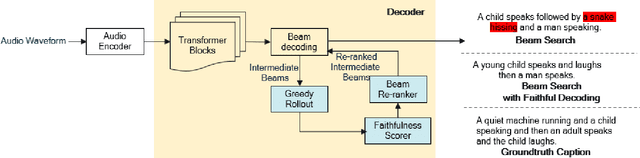

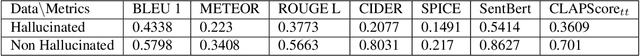

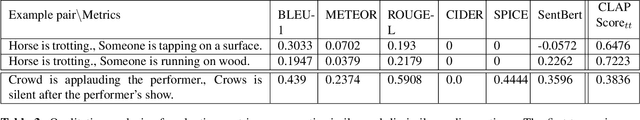

Abstract: There has been significant research on developing pretrained transformer architectures for multimodal-to-text generation tasks. Despite performance improvements, such models are frequently overparameterized and hence suffer from hallucination and a large memory footprint, making them challenging to deploy on edge devices. In this paper, we address both of these issues for the application of automated audio captioning. First, we propose a data augmentation technique for generating hallucinated audio captions and show that similarity based on an audio-text shared latent space is suitable for detecting hallucination. Then, we propose a parameter-efficient, inference-time faithful decoding algorithm that enables smaller audio captioning models to achieve performance equivalent to larger models trained with more data. During the beam decoding step, the smaller model utilizes an audio-text shared latent representation to semantically align the generated text with the corresponding input audio. Faithful guidance is introduced into the beam probability by incorporating the cosine similarity between latent representation projections of greedily rolled-out intermediate beams and the audio clip. We show the efficacy of our algorithm on benchmark datasets and evaluate the proposed scheme against baselines using conventional audio captioning and semantic similarity metrics while illustrating tradeoffs between performance and complexity.
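The guidance step can be pictured as re-scoring each candidate beam with an audio-text similarity term. The sketch below is a simplified illustration under stated assumptions: the additive combination, the `weight` parameter, and the names `cosine_similarity` and `rerank_beams` are hypothetical, and the embeddings are taken as given rather than produced by a real audio-text model or a greedy rollout.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rerank_beams(beams, audio_embedding, weight=1.0):
    """Order beams by log-probability plus weighted audio-text similarity.

    beams: list of (text, log_prob, text_embedding) tuples.
    Assumption: an additive combination; the paper may combine the terms differently.
    """
    scored = [
        (log_prob + weight * cosine_similarity(text_emb, audio_embedding), text)
        for text, log_prob, text_emb in beams
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored]


beams = [
    ("a dog barks", -1.0, [1.0, 0.0]),
    ("rain falls", -0.8, [0.0, 1.0]),
]
audio_embedding = [1.0, 0.0]
guided = rerank_beams(beams, audio_embedding, weight=1.0)
unguided = rerank_beams(beams, audio_embedding, weight=0.0)
```

With guidance enabled, the caption whose embedding matches the audio moves ahead of a caption that is slightly more probable under the language model alone, which is the intended effect of penalizing hallucinated text.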

Incremental Learning Algorithm for Sound Event Detection

Mar 26, 2020

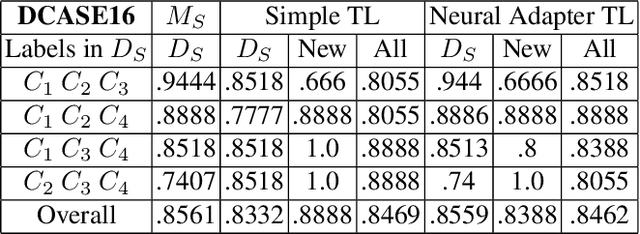

Abstract: This paper presents a new learning strategy for Sound Event Detection (SED) systems to tackle the issues of i) knowledge migration from a pre-trained model to a new target model and ii) learning new sound events without forgetting the previously learned ones and without retraining from scratch. To migrate the previously learned knowledge from the source model to the target one, a neural adapter is employed on top of the source model. The source model and the target model are merged via this neural adapter layer. The neural adapter layer helps the target model learn new sound events with minimal training data while maintaining performance on the previously learned sound events similar to that of the source model. Our extensive analysis on the DCASE16 and US-SED datasets reveals the effectiveness of the proposed method in transferring knowledge between source and target models without introducing any performance degradation on the previously learned sound events while obtaining competitive detection performance on the newly learned sound events.

* IEEE ICME 2020 Camera Ready Version
