Yingyuan Yang

Trunk-branch Contrastive Network with Multi-view Deformable Aggregation for Multi-view Action Recognition

Feb 23, 2025

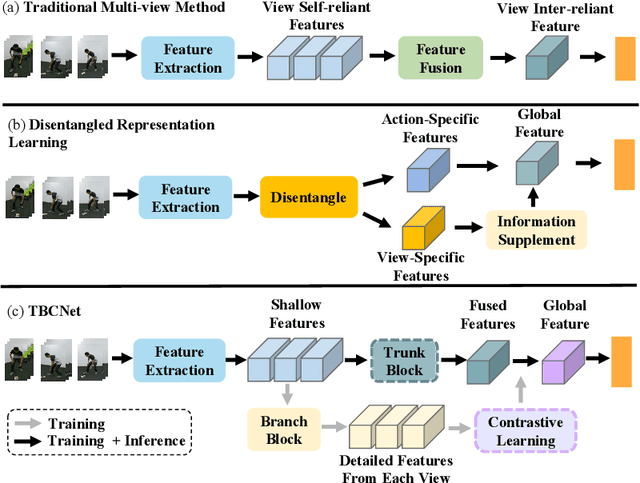

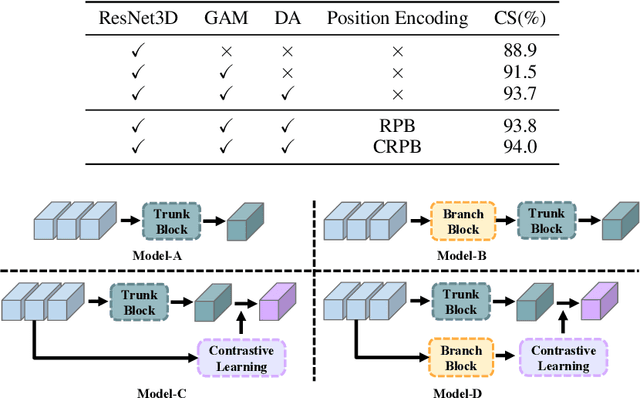

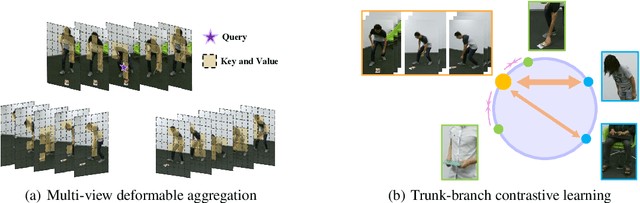

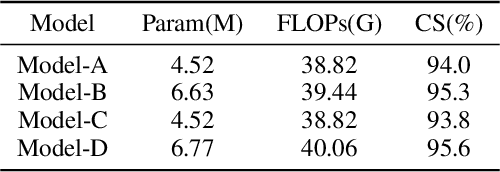

Abstract: Multi-view action recognition aims to identify actions in a given multi-view scene. Traditional studies first extract refined features from each view and then perform pairwise interaction and integration, but they potentially overlook critical local features in each view. When observing objects from multiple perspectives, individuals typically form a comprehensive impression and subsequently fill in specific details. Drawing inspiration from this cognitive process, we propose a novel trunk-branch contrastive network (TBCNet) for RGB-based multi-view action recognition. Distinctively, TBCNet first obtains fused features in the trunk block and then implicitly supplements vital details provided by the branch block via contrastive learning, generating a more informative and comprehensive action representation. Within this framework, we construct two core components: multi-view deformable aggregation (MVDA) and trunk-branch contrastive learning. MVDA, employed in the trunk block, facilitates multi-view feature fusion and adaptive cross-view spatio-temporal correlation: a global aggregation module emphasizes significant spatial information, and a composite relative position bias captures the intra- and cross-view relative positions. Moreover, a trunk-branch contrastive loss is constructed between aggregated features and refined details from each view. By incorporating two distinct weights for positive and negative samples, a weighted trunk-branch contrastive loss is proposed to extract valuable information and emphasize subtle inter-class differences. The effectiveness of TBCNet is verified by extensive experiments on four datasets: NTU-RGB+D 60, NTU-RGB+D 120, PKU-MMD, and N-UCLA. Compared to other RGB-based methods, our approach achieves state-of-the-art performance in cross-subject and cross-setting protocols.

Subpixel Edge Localization Based on Converted Intensity Summation under Stable Edge Region

Feb 23, 2025

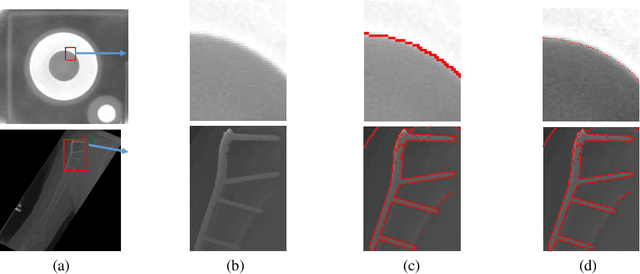

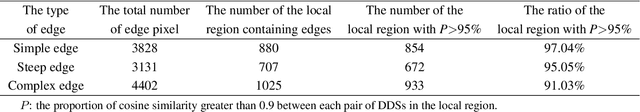

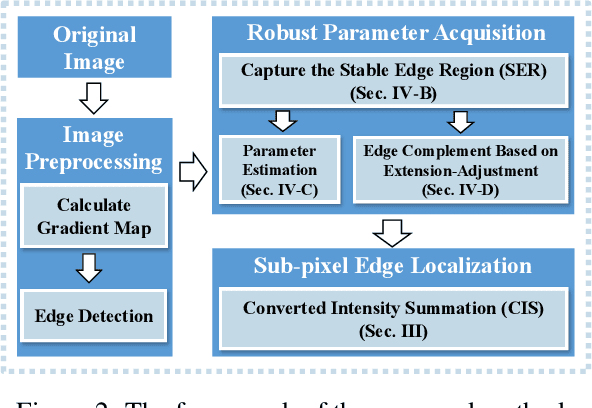

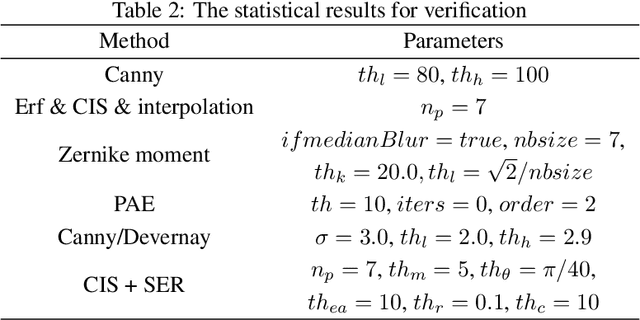

Abstract: To satisfy the rigorous requirements of precise edge detection in critical high-accuracy measurements, this article proposes a series of efficient approaches for localizing subpixel edges. In contrast to fitting-based methods, which consider pixel intensity as a sample value derived from a specific model, we take an innovative perspective by assuming that the intensity at the pixel level can be interpreted as a local integral mapping in the intensity model for subpixel localization. Consequently, we propose a straightforward subpixel edge localization method called Converted Intensity Summation (CIS). To address the limited robustness associated with focusing solely on the localization of individual edge points, a Stable Edge Region (SER) based algorithm is presented to alleviate local interference near edges. Given the observation that edge statistics are consistent within a local region, the algorithm seeks correlated stable regions in the vicinity of edges to facilitate the acquisition of robust parameters and achieve higher-precision positioning. In addition, an edge complement method based on extension-adjustment is introduced to rectify irregular edges through the efficient migration of SERs. A large number of experiments are conducted on both synthetic and real image datasets, which cover common edge patterns as well as various real scenarios such as industrial PCB images, remote sensing images, and medical images. It is verified that CIS can achieve higher accuracy than the state-of-the-art method while requiring less execution time. Moreover, by integrating SER into CIS, the proposed algorithm further improves the anti-interference capability and positioning accuracy.
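
The integral view of pixel intensity can be illustrated with a one-dimensional ideal step edge. The sketch below is not the CIS algorithm from the paper. It only assumes a noise-free step with known low and high levels, where each pixel stores the mean intensity over its unit-width footprint. Under those assumptions, summing the normalized intensities recovers the edge position with subpixel precision. The function names and the sampling model are hypothetical.

```python
import numpy as np

def render_step_edge(edge_pos, n_pixels, low=0.0, high=1.0):
    """Render a 1D step edge under an area-integration pixel model (assumed).

    Pixel i covers [i, i + 1) and stores the mean intensity over that
    interval. Intensity is `high` left of edge_pos and `low` right of it.
    """
    left = np.arange(n_pixels, dtype=float)
    # Fraction of each pixel that lies on the high side of the edge.
    frac_high = np.clip(edge_pos - left, 0.0, 1.0)
    return low + (high - low) * frac_high

def locate_edge_by_summation(pixels, low, high):
    """Estimate the edge position by summing normalized intensities."""
    normalized = (np.asarray(pixels, dtype=float) - low) / (high - low)
    return float(normalized.sum())

# Synthetic example: an edge at x = 3.3 in an 8-pixel row.
row = render_step_edge(3.3, 8, low=20.0, high=200.0)
estimate = locate_edge_by_summation(row, low=20.0, high=200.0)
```

With noise, blur, or unknown intensity levels, this simple sum would no longer be exact, which is the kind of interference the abstract addresses with stable edge regions.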

Towards Adversarial-Resilient Deep Neural Networks for False Data Injection Attack Detection in Power Grids

Feb 17, 2021

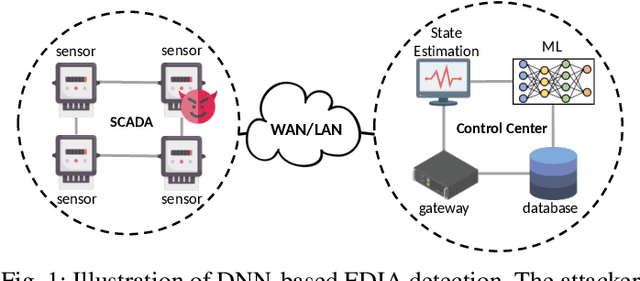

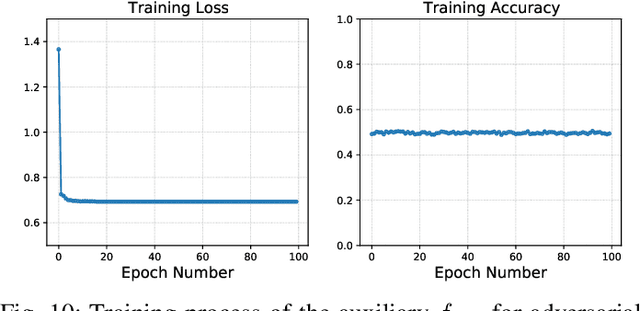

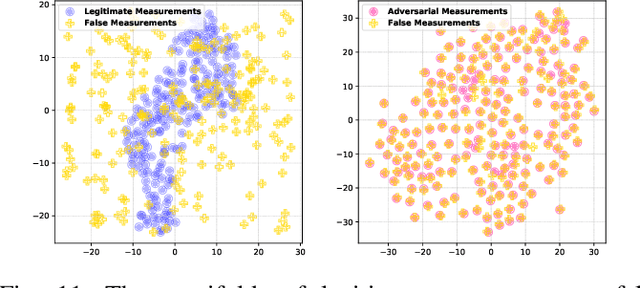

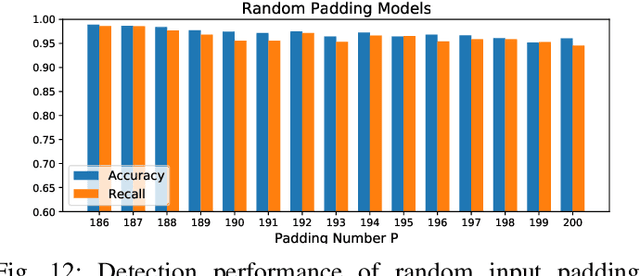

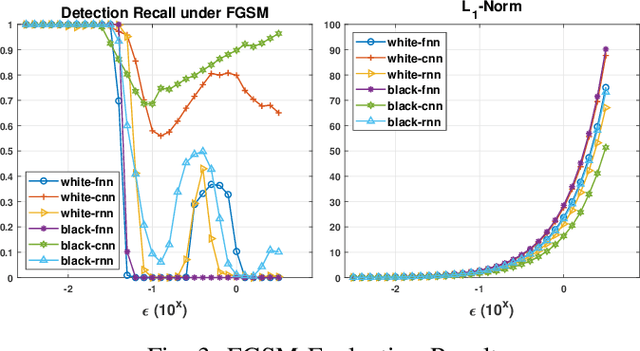

Abstract: False data injection attack (FDIA) is a critical security issue in power system state estimation. In recent years, machine learning (ML) techniques, especially deep neural networks (DNNs), have been proposed in the literature for FDIA detection. However, they have not considered the risk of adversarial attacks, which have been shown to threaten the reliability of DNNs in different ML applications. In this paper, we evaluate the vulnerability of DNNs used for FDIA detection through adversarial attacks and study the defensive approaches. We analyze several representative adversarial defense mechanisms and demonstrate that they have intrinsic limitations in FDIA detection. We then design an adversarial-resilient DNN detection framework for FDIA by introducing random input padding in both the training and inference phases. Extensive simulations based on an IEEE standard power system show that our framework greatly reduces the effectiveness of adversarial attacks while having little impact on the detection performance of the DNNs.
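
One way to picture random input padding is sketched below. The abstract does not give the padding scheme, so everything here is an assumption: the measurement vector is placed at a random offset inside a longer vector, and the remaining slots are filled with random values. The function name `random_pad`, the padded length, and the Gaussian filler are hypothetical.

```python
import numpy as np

def random_pad(x, padded_len, rng, noise_scale=1.0):
    """Embed x at a random offset in a longer vector (illustrative scheme).

    Returns the padded vector and the offset where x was placed.
    """
    x = np.asarray(x, dtype=float)
    if padded_len < x.size:
        raise ValueError("padded_len must be at least len(x)")
    # Generator.integers excludes the upper bound, hence the + 1.
    offset = int(rng.integers(0, padded_len - x.size + 1))
    # Fill every slot with random values, then overwrite the slice with x.
    padded = rng.normal(0.0, noise_scale, size=padded_len)
    padded[offset:offset + x.size] = x
    return padded, offset

rng = np.random.default_rng(0)
measurements = np.array([1.02, 0.98, 1.01, 0.97])
padded, offset = random_pad(measurements, padded_len=10, rng=rng)
```

Under this assumed scheme, the same function would be applied to each training sample and again at inference, so the layout seen by the detector changes from call to call.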

Exploiting Vulnerabilities of Deep Learning-based Energy Theft Detection in AMI through Adversarial Attacks

Oct 16, 2020

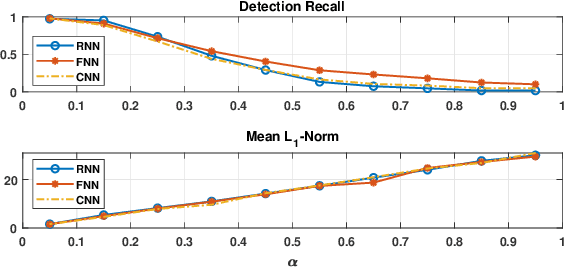

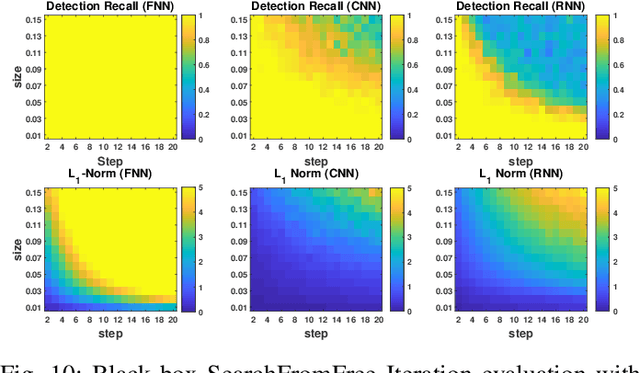

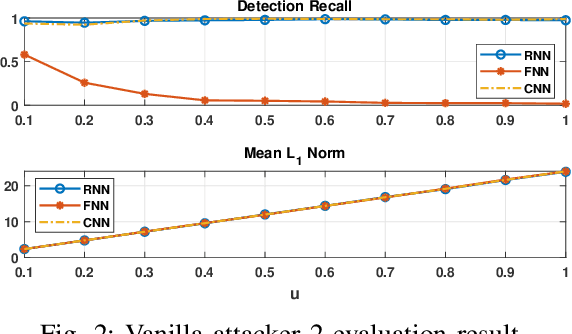

Abstract: Effective detection of energy theft can prevent revenue losses for utility companies and is also important for smart grid security. In recent years, enabled by massive fine-grained smart meter data, deep learning (DL) approaches have become popular in the literature for detecting energy theft in the advanced metering infrastructure (AMI). However, as neural networks have been shown to be vulnerable to adversarial examples, the security of the DL models is of concern. In this work, we study the vulnerabilities of DL-based energy theft detection through adversarial attacks, including single-step attacks and iterative attacks. From the attacker's point of view, we design the SearchFromFree framework, which consists of 1) a random adversarial measurement initialization approach to maximize the stolen profit and 2) a step-size searching scheme to increase the performance of black-box iterative attacks. The evaluation based on three types of neural networks shows that the adversarial attacker can report extremely low consumption measurements to the utility without being detected by the DL models. We finally discuss the potential defense mechanisms against adversarial attacks in energy theft detection.

SearchFromFree: Adversarial Measurements for Machine Learning-based Energy Theft Detection

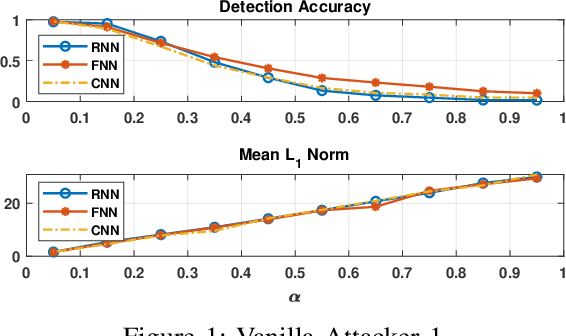

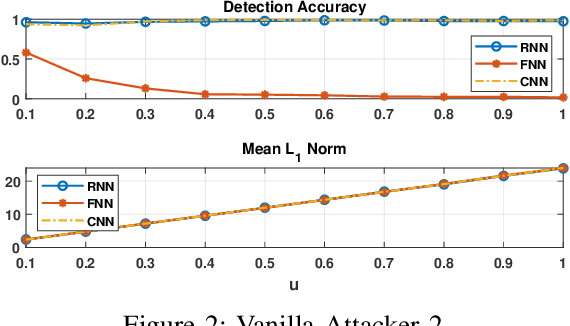

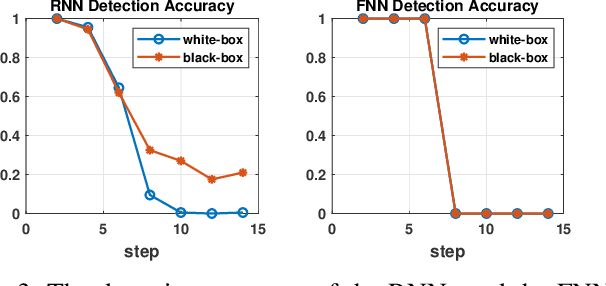

Jun 02, 2020

Abstract: Energy theft causes large economic losses to utility companies around the world. In recent years, energy theft detection approaches based on machine learning (ML) techniques, especially neural networks, have become popular in the research community and have been shown to achieve state-of-the-art detection performance. However, in this work, we demonstrate that well-trained ML models for energy theft detection are highly vulnerable to adversarial attacks. In particular, we design an adversarial measurement generation approach that enables the attacker to report extremely low power consumption measurements to utilities while bypassing the ML energy theft detection. We evaluate our approach with three kinds of neural networks based on a real-world smart meter dataset. The evaluation results demonstrate that our approach is able to significantly decrease the ML models' detection accuracy, even for black-box attackers.

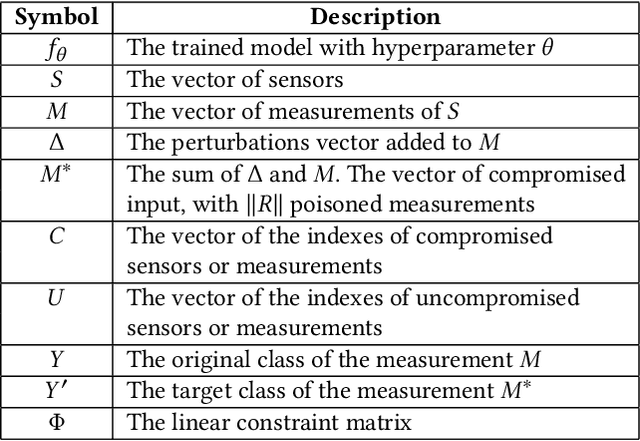

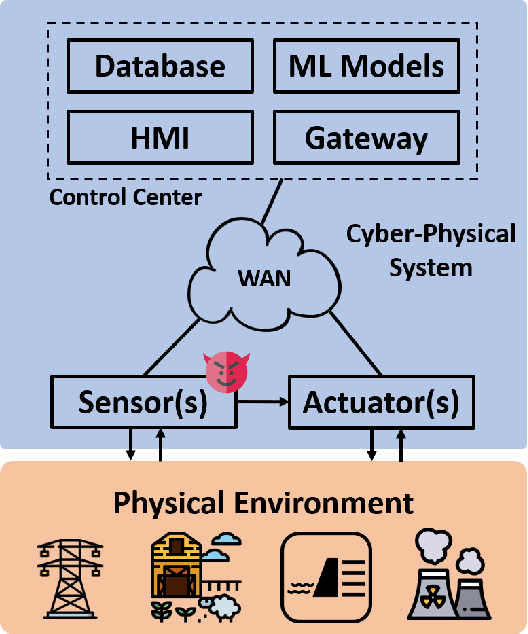

ConAML: Constrained Adversarial Machine Learning for Cyber-Physical Systems

Mar 12, 2020

Abstract: Recent research has demonstrated that superficially well-trained machine learning (ML) models are highly vulnerable to adversarial examples. As ML techniques are rapidly being adopted in cyber-physical systems (CPSs), the security of these applications is of concern. However, current studies on adversarial machine learning (AML) mainly focus on computer vision and related fields. The risks that adversarial examples pose to CPS applications have not been well investigated. In particular, due to the distributed nature of data sources and the inherent physical constraints imposed by CPSs, the widely used threat models in previous research and the state-of-the-art AML algorithms are no longer practical when applied to CPS applications. We study the vulnerabilities of ML applied in CPSs by proposing Constrained Adversarial Machine Learning (ConAML), which generates adversarial examples, used as ML model input, that meet the intrinsic constraints of the physical systems. We first summarize the differences between AML in CPSs and AML in existing cyber systems and propose a general threat model for ConAML. We then design a best-effort search algorithm to iteratively generate adversarial examples with linear physical constraints. As proofs of concept, we evaluate the vulnerabilities of ML models used in the electric power grid and water treatment systems. The results show that our ConAML algorithms can effectively generate adversarial examples that significantly decrease the performance of the ML models even under practical physical constraints.
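
To make the notion of a linear physical constraint concrete, the sketch below keeps a perturbed input consistent with an equality constraint of the form A x = b. It is not the best-effort search algorithm described in the abstract. It swaps in a plain null-space projection, a standard linear algebra technique, purely for illustration. The matrix `A`, the example values, and the function name are hypothetical.

```python
import numpy as np

def project_onto_constraint_nullspace(delta, A):
    """Remove the component of delta that would change A @ x."""
    A = np.atleast_2d(np.asarray(A, dtype=float))
    # I - pinv(A) @ A is the orthogonal projector onto the null space of A.
    projector = np.eye(A.shape[1]) - np.linalg.pinv(A) @ A
    return projector @ np.asarray(delta, dtype=float)

# Toy constraint (assumed): two inflows must equal one outflow,
# i.e. x0 + x1 - x2 = 0.
A = np.array([[1.0, 1.0, -1.0]])
x = np.array([2.0, 3.0, 5.0])

# An unconstrained perturbation, e.g. from a gradient-based attack step.
raw_delta = np.array([0.5, -0.2, 0.4])

# The projected perturbation leaves A @ x unchanged.
safe_delta = project_onto_constraint_nullspace(raw_delta, A)
x_adv = x + safe_delta
```

An iterative attack could apply such a projection after every step so that each intermediate input still satisfies the constraint, though the paper's own procedure may differ.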