SearchFromFree: Adversarial Measurements for Machine Learning-based Energy Theft Detection

Paper and Code

Jun 02, 2020

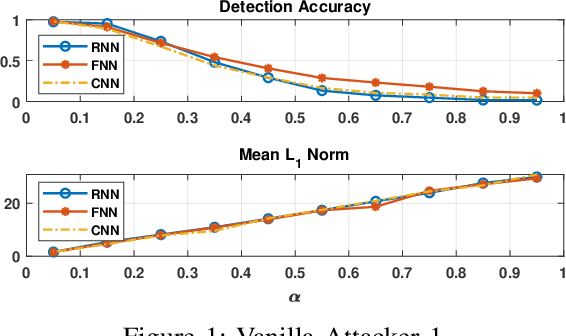

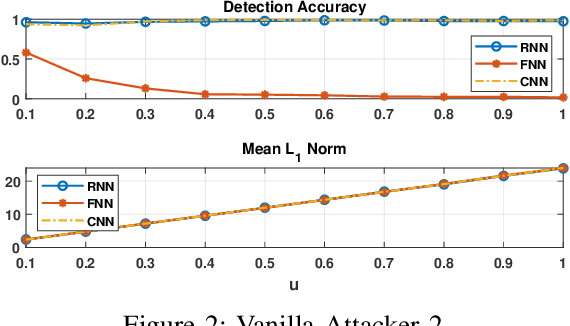

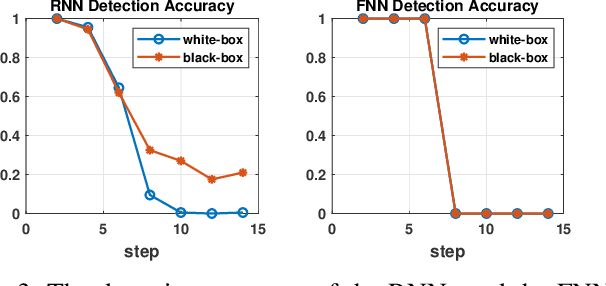

Energy theft causes large economic losses to utility companies around the world. In recent years, energy theft detection approaches based on machine learning (ML) techniques, especially neural networks, have become popular in the research community and have been shown to achieve state-of-the-art detection performance. However, in this work, we demonstrate that well-trained ML models for energy theft detection are highly vulnerable to adversarial attacks. In particular, we design an adversarial measurement generation approach that enables an attacker to report extremely low power consumption measurements to utilities while bypassing ML-based energy theft detection. We evaluate our approach on three kinds of neural networks using a real-world smart meter dataset. The evaluation results demonstrate that our approach significantly decreases the ML models' detection accuracy, even for black-box attackers.
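To make the threat model concrete, the following is a minimal sketch of the general idea of evading a detector with low reported readings. It is not the algorithm from the paper, which the abstract does not specify. Everything here is an assumption for illustration: the detector is a hypothetical logistic model with made-up weights `W` and bias `B`, the attacker has white-box access to it, and the helper names `theft_probability` and `search_from_zero` are invented.

```python
import numpy as np

# Hypothetical stand-in detector (assumption): a logistic model over 24 hourly
# readings in kWh. Higher reported usage lowers the theft score.
# The weights and bias are made up for illustration only.
W = np.linspace(0.1, 1.0, 24)
B = 3.0


def theft_probability(x):
    """Probability the toy detector assigns to the 'theft' class."""
    return 1.0 / (1.0 + np.exp(-(B - W @ x)))


def search_from_zero(step=0.01, threshold=0.5, max_steps=1000):
    """Start from all-zero readings and raise them just enough to evade the
    toy detector, keeping the total reported consumption small."""
    x = np.zeros_like(W)
    for _ in range(max_steps):
        if theft_probability(x) < threshold:
            break  # detector now labels the readings as benign
        # The gradient of the logit (B - W @ x) with respect to x is -W,
        # so moving along +W lowers the theft score.
        x = np.clip(x + step * W, 0.0, None)  # readings cannot be negative
    return x


true_usage = np.full(24, 1.5)  # assumed honest daily profile, 36 kWh in total
adv = search_from_zero()

print("theft probability of all-zero report:", theft_probability(np.zeros(24)))
print("theft probability of adversarial report:", theft_probability(adv))
print("reported kWh:", adv.sum(), "vs. true kWh:", true_usage.sum())
```

In this toy setting the all-zero report is flagged, while the adversarial report passes the detector with a total far below the honest profile. A real attack on a neural network would replace the closed-form gradient with one obtained from the model, or from a surrogate model in the black-box case.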
