Yik San Cheng

DINeuro: Distilling Knowledge from 2D Natural Images via Deformable Tubular Transferring Strategy for 3D Neuron Reconstruction

Oct 29, 2024

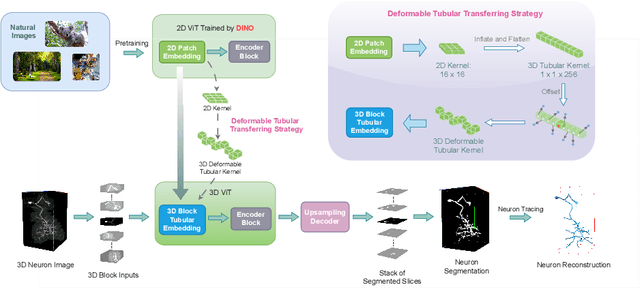

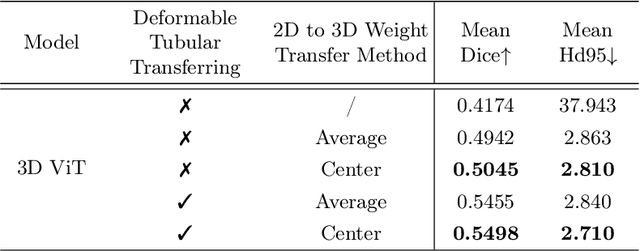

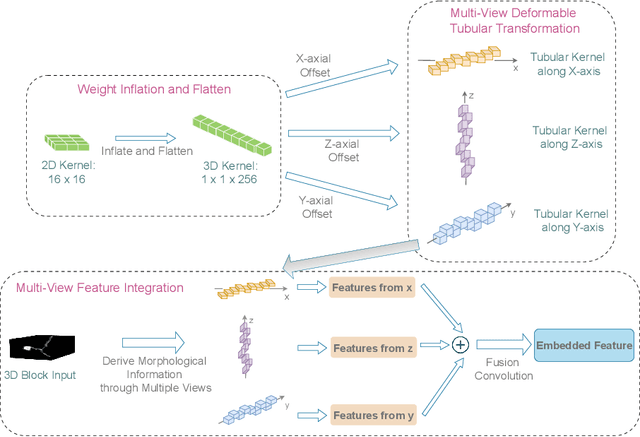

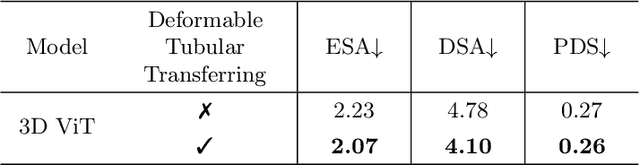

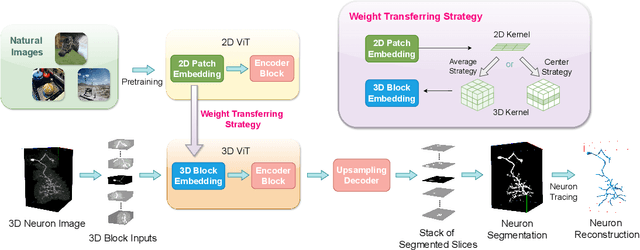

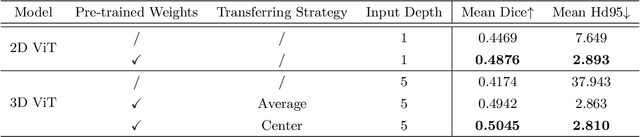

Abstract: Reconstructing neuron morphology from 3D light microscope imaging data is critical to aid neuroscientists in analyzing brain networks and neuroanatomy. With the boost from deep learning techniques, a variety of learning-based segmentation models have been developed to enhance the signal-to-noise ratio of raw neuron images as a pre-processing step in the reconstruction workflow. However, most existing models directly encode the latent representative features of volumetric neuron data but neglect their intrinsic morphological knowledge. To address this limitation, we design a novel framework that distills the prior knowledge from a 2D Vision Transformer pre-trained on extensive 2D natural images to facilitate neuronal morphological learning of our 3D Vision Transformer. To bridge the knowledge gap between the 2D natural image and 3D microscopic morphologic domains, we propose a deformable tubular transferring strategy that adapts the pre-trained 2D natural knowledge to the inherent tubular characteristics of neuronal structure in the latent embedding space. The experimental results on the Janelia dataset of the BigNeuron project demonstrate that our method achieves a segmentation performance improvement of 4.53% in mean Dice and 3.56% in mean 95% Hausdorff distance.
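For readers unfamiliar with the Dice score mentioned in the abstract, the following is a minimal sketch of how the Dice coefficient is commonly computed for a pair of binary segmentation masks. It is not taken from the paper: the function name `dice_coefficient`, the flat-list input format, and the convention for two empty masks are assumptions made for illustration.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |P ∩ T| / (|P| + |T|) for two flat binary masks.

    `pred` and `target` are equal-length sequences of 0/1 voxel labels
    (a 3D volume flattened to one dimension; hypothetical input format).
    """
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    prediction = [1, 1, 0, 0]
    ground_truth = [1, 0, 0, 0]
    print(dice_coefficient(prediction, ground_truth))
```

A "mean Dice" would then be the average of this value over the test volumes; the exact averaging protocol used in the paper is not stated in the abstract.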

Boosting 3D Neuron Segmentation with 2D Vision Transformer Pre-trained on Natural Images

May 04, 2024

Abstract: Neuron reconstruction, one of the fundamental tasks in neuroscience, rebuilds neuronal morphology from 3D light microscope imaging data. It plays a critical role in analyzing the structure-function relationship of neurons in the nervous system. However, due to the scarcity of neuron datasets and high-quality SWC annotations, it is still challenging to develop robust segmentation methods for single neuron reconstruction. To address this limitation, we aim to distill the consensus knowledge from massive natural image data to aid the segmentation model in learning the complex neuron structures. Specifically, in this work, we propose a novel training paradigm that leverages a 2D Vision Transformer model pre-trained on large-scale natural images to initialize our Transformer-based 3D neuron segmentation model with a tailored 2D-to-3D weight transferring strategy. Our method builds a knowledge-sharing connection between the abundant natural and the scarce neuron image domains to improve the 3D neuron segmentation ability in a data-efficient manner. Evaluated on a popular benchmark, BigNeuron, our method enhances neuron segmentation performance by 8.71% over the model trained from scratch with the same amount of training samples.
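The abstract does not spell out the tailored 2D-to-3D weight transferring strategy. As a rough illustration of the general idea only, the sketch below uses a generic "inflation" heuristic known from video models: a 2D kernel is repeated along a new depth axis and divided by the depth, so that an input that is constant along depth yields the same response as the original 2D kernel. This is a swapped-in, simplified technique, not the authors' method; `inflate_kernel_2d_to_3d` and its arguments are hypothetical names.

```python
def inflate_kernel_2d_to_3d(kernel_2d, depth):
    """Inflate a 2D kernel (list of rows) into a 3D kernel with `depth` slices.

    Each slice is the 2D kernel scaled by 1/depth, so summing the slices
    along the depth axis recovers the original 2D weights.
    Generic inflation heuristic; NOT the paper's tailored strategy.
    """
    if depth < 1:
        raise ValueError("depth must be a positive integer")
    return [
        [[weight / depth for weight in row] for row in kernel_2d]
        for _ in range(depth)
    ]


if __name__ == "__main__":
    # Toy 2x2 "pre-trained" kernel (made-up values for illustration).
    pretrained_2d = [[1.0, 2.0], [3.0, 4.0]]
    kernel_3d = inflate_kernel_2d_to_3d(pretrained_2d, depth=4)
    print(len(kernel_3d), len(kernel_3d[0]), len(kernel_3d[0][0]))
```

In a Vision Transformer setting, a scheme of this kind would most naturally apply to the patch-embedding projection, while attention and MLP weights are independent of input dimensionality; how the paper handles each component is not described in the abstract.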
