Yicheng Zhu

SatFusion: A Unified Framework for Enhancing Satellite IoT Images via Multi-Temporal and Multi-Source Data Fusion

Oct 09, 2025

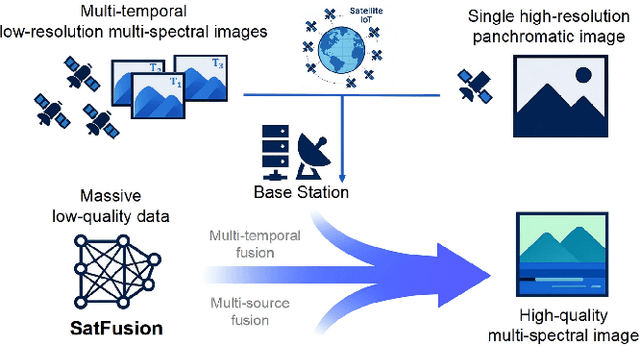

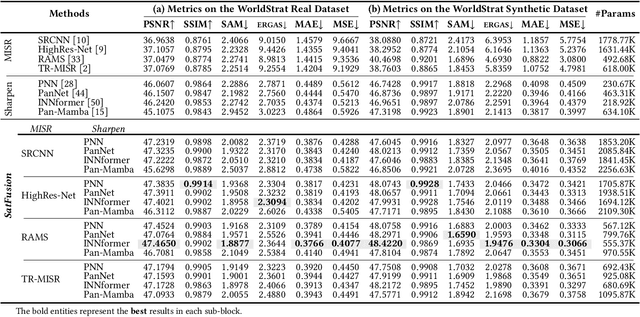

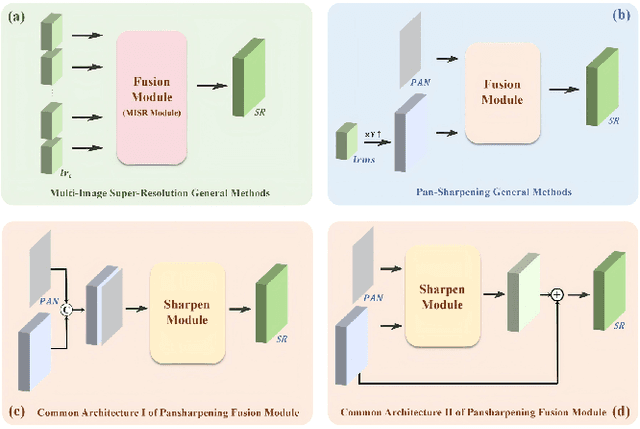

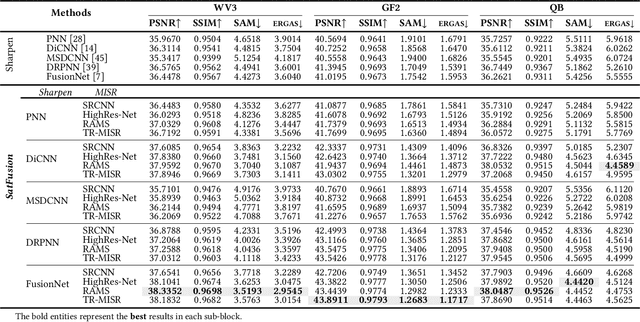

Abstract: With the rapid advancement of the digital society, the proliferation of satellites in the Satellite Internet of Things (Sat-IoT) has led to the continuous accumulation of large-scale multi-temporal and multi-source images across diverse application scenarios. However, existing methods fail to fully exploit the complementary information embedded in both temporal and source dimensions. For example, Multi-Image Super-Resolution (MISR) enhances reconstruction quality by leveraging temporal complementarity across multiple observations, yet the limited fine-grained texture details in input images constrain its performance. Conversely, pansharpening integrates multi-source images by injecting high-frequency spatial information from panchromatic data, but typically relies on pre-interpolated low-resolution inputs and assumes noise-free alignment, making it highly sensitive to noise and misregistration. To address these issues, we propose SatFusion: A Unified Framework for Enhancing Satellite IoT Images via Multi-Temporal and Multi-Source Data Fusion. Specifically, SatFusion first employs a Multi-Temporal Image Fusion (MTIF) module to achieve deep feature alignment with the panchromatic image. Then, a Multi-Source Image Fusion (MSIF) module injects fine-grained texture information from the panchromatic data. Finally, a Fusion Composition module adaptively integrates the complementary advantages of both modalities while dynamically refining spectral consistency, supervised by a weighted combination of multiple loss functions. Extensive experiments on the WorldStrat, WV3, QB, and GF2 datasets demonstrate that SatFusion significantly improves fusion quality, robustness under challenging conditions, and generalizability to real-world Sat-IoT scenarios. The code is available at: https://github.com/dllgyufei/SatFusion.git.
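The abstract mentions supervision by "a weighted combination of multiple loss functions" without naming the terms or weights. The sketch below only illustrates the general idea on flat lists of pixel values. The choice of MAE and MSE as the two terms, the function names, and the 0.5/0.5 weights are all assumptions made for illustration and are not taken from the paper or its repository.

```python
def mae(pred, target):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse(pred, target):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def combined_loss(pred, target, weights):
    """Weighted sum of individual loss terms.

    `weights` maps a term name to its coefficient. The terms used here
    (MAE and MSE) are illustrative assumptions, not the paper's losses.
    """
    terms = {"mae": mae(pred, target), "mse": mse(pred, target)}
    return sum(weights[name] * value for name, value in terms.items())


# Toy example: three pixel values, one of them off by 0.5.
pred = [0.0, 0.5, 1.0]
target = [0.0, 1.0, 1.0]
total = combined_loss(pred, target, {"mae": 0.5, "mse": 0.5})
```

In a real training setup the weights would typically be hyperparameters tuned per dataset, and the terms would operate on image tensors rather than lists.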

AutoBio: A Simulation and Benchmark for Robotic Automation in Digital Biology Laboratory

May 20, 2025

Abstract: Vision-language-action (VLA) models have shown promise as generalist robotic policies by jointly leveraging visual, linguistic, and proprioceptive modalities to generate action trajectories. While recent benchmarks have advanced VLA research in domestic tasks, professional science-oriented domains remain underexplored. We introduce AutoBio, a simulation framework and benchmark designed to evaluate robotic automation in biology laboratory environments, an application domain that combines structured protocols with demanding precision and multimodal interaction. AutoBio extends existing simulation capabilities through a pipeline for digitizing real-world laboratory instruments, specialized physics plugins for mechanisms ubiquitous in laboratory workflows, and a rendering stack that supports dynamic instrument interfaces and transparent materials through physically based rendering. Our benchmark comprises biologically grounded tasks spanning three difficulty levels, enabling standardized evaluation of language-guided robotic manipulation in experimental protocols. We provide infrastructure for demonstration generation and seamless integration with VLA models. Baseline evaluations with two SOTA VLA models reveal significant gaps in precision manipulation, visual reasoning, and instruction following in scientific workflows. By releasing AutoBio, we aim to catalyze research on generalist robotic systems for complex, high-precision, and multimodal professional environments. The simulator and benchmark are publicly available to facilitate reproducible research.

FDDet: Frequency-Decoupling for Boundary Refinement in Temporal Action Detection

Apr 01, 2025

Abstract: Temporal action detection aims to locate and classify actions in untrimmed videos. While recent works focus on designing powerful feature processors for pre-trained representations, they often overlook the inherent noise and redundancy within these features. Large-scale pre-trained video encoders tend to introduce background clutter and irrelevant semantics, leading to context confusion and imprecise boundaries. To address this, we propose a frequency-aware decoupling network that improves action discriminability by filtering out noisy semantics captured by pre-trained models. Specifically, we introduce an adaptive temporal decoupling scheme that suppresses irrelevant information while preserving fine-grained atomic action details, yielding more task-specific representations. In addition, we enhance inter-frame modeling by capturing temporal variations to better distinguish actions from background redundancy. Furthermore, we present a long-short-term category-aware relation network that jointly models local transitions and long-range dependencies, improving localization precision. The refined atomic features and frequency-guided dynamics are fed into a standard detection head to produce accurate action predictions. Extensive experiments on THUMOS14, HACS, and ActivityNet-1.3 show that our method, powered by InternVideo2-6B features, achieves state-of-the-art performance on temporal action detection benchmarks.
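To make the idea of frequency decoupling along the time axis concrete, here is a minimal sketch on a single one-dimensional feature channel. It swaps in a fixed moving-average low-pass filter and first-order frame differences; the paper describes a learned, adaptive scheme, so this is a hand-written stand-in rather than the authors' method. The function names and the window size of 3 are hypothetical.

```python
def moving_average(seq, window=3):
    """Fixed low-pass filter: average over a centered window, clipped at the edges."""
    half = window // 2
    out = []
    for i in range(len(seq)):
        lo, hi = max(0, i - half), min(len(seq), i + half + 1)
        out.append(sum(seq[lo:hi]) / (hi - lo))
    return out


def decouple(seq, window=3):
    """Split a temporal signal into a smooth (low-frequency) part and its residual.

    The residual keeps the sharp changes, which is where action boundaries
    would show up in this toy setting.
    """
    low = moving_average(seq, window)
    high = [x - l for x, l in zip(seq, low)]
    return low, high


def temporal_difference(seq):
    """First-order difference between consecutive frames."""
    return [b - a for a, b in zip(seq, seq[1:])]


# Toy channel: background (0.0) with one action segment (1.0) in the middle.
feature = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
low, high = decouple(feature)
diff = temporal_difference(feature)
```

In this toy signal the frame differences are nonzero only at the start and end of the action segment, which is the intuition behind using temporal variation to sharpen boundaries.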

Tech Report: Divide and Conquer 3D Real-Time Reconstruction for Improved IGS

Dec 31, 2024

Abstract: Tracking surgical modifications from endoscopic videos is technically feasible and offers great clinical advantages, but it remains challenging. This report presents a modular pipeline that divides and conquers the clinical challenges in the process. The pipeline integrates frame selection, depth estimation, and 3D reconstruction components, allowing for flexibility and adaptability in incorporating new methods. Recent advancements, including the integration of Depth-Anything V2 and EndoDAC for depth estimation, as well as improvements in the Iterative Closest Point (ICP) alignment process, are detailed. Experiments conducted on the Hamlyn dataset demonstrate the effectiveness of the integrated methods. Both the capabilities and the limitations of the system are discussed.
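For readers unfamiliar with Iterative Closest Point (ICP), the following is a minimal textbook sketch. It works on 2D points to stay short, whereas the report deals with 3D reconstruction, and it does not include any of the improvements the report describes. All function names and the toy point set are hypothetical.

```python
import math


def centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def best_rigid_transform(src, dst):
    """Least-squares rotation angle and translation for paired 2D points src -> dst."""
    cs, cd = centroid(src), centroid(dst)
    s_cos = s_sin = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - cs[0], sy - cs[1]
        bx, by = dx - cd[0], dy - cd[1]
        s_cos += ax * bx + ay * by   # dot products of centered pairs
        s_sin += ax * by - ay * bx   # 2D cross products of centered pairs
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]))
    return theta, t


def apply_transform(points, theta, t):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


def icp(src, dst, iterations=10):
    """Alternate nearest-neighbor matching and rigid re-alignment."""
    current = list(src)
    for _ in range(iterations):
        matches = [min(dst, key=lambda q: math.dist(p, q)) for p in current]
        theta, t = best_rigid_transform(current, matches)
        current = apply_transform(current, theta, t)
    return current


def mean_error(a, b):
    """Mean distance between points that share an index."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


# Toy data: the target shape, and a slightly rotated and shifted copy of it.
dst = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 1.0)]
src = apply_transform(dst, 0.1, (0.1, -0.05))
before = mean_error(src, dst)
after = mean_error(icp(src, dst), dst)
```

The brute-force nearest-neighbor search is quadratic in the number of points; practical pipelines usually replace it with a spatial index such as a k-d tree and add outlier rejection, since plain ICP only converges from a reasonably close initial pose.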

Topic Driven Adaptive Network for Cross-Domain Sentiment Classification

Nov 28, 2021

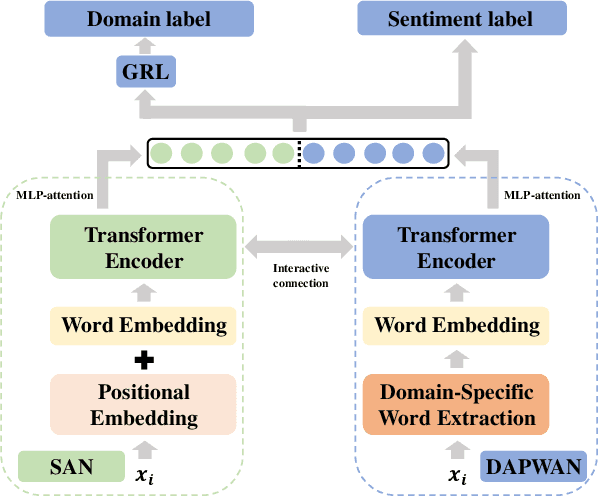

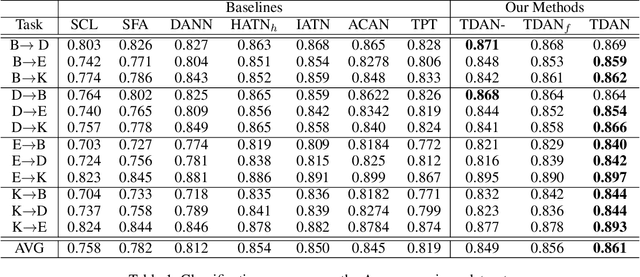

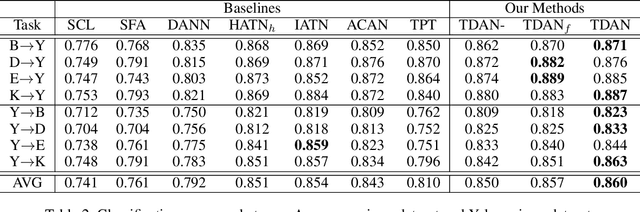

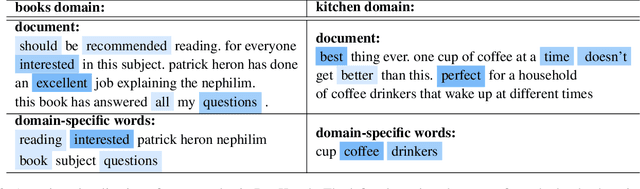

Abstract: Cross-domain sentiment classification, which aims to learn a reliable classifier from labeled source-domain data and evaluate it on the target domain, has been a hot topic in recent years. Most approaches use domain adaptation to map data from different domains into a common feature space. To further improve model performance, several methods that mine domain-specific information have been proposed. However, most of them use only a limited part of that information. In this study, we first develop a method for extracting domain-specific words based on topic information. Then, we propose a Topic Driven Adaptive Network (TDAN) for cross-domain sentiment classification. The network consists of two transformer-based sub-networks: a semantics attention network and a domain-specific word attention network. These sub-networks take different forms of input, and their outputs are fused into the feature vector. Experiments validate the effectiveness of TDAN on sentiment classification across domains.
